Primary sources

(Created page with "== Bibliography and state of the art == == Methods == == Performances ==") |

No edit summary |

||

| (11 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

A method for retrieving mentions of named entities in Primary Sources using Word Spotting in the context of ClioWire. | |||

== Motivations == | |||

Technology is not yet able to fully retranscribe text from old handwritten documents, yet these documents constitute the vast majority of primary sources of interest in a historian perspective. Therefore, the opportunity to retrieve even mentions of named entities becomes very valuable. For instance, a historian looking for records on a certain person could know quickly in which pages of which documents this person is mentioned, with a certain level of confidence. | |||

This would save him a lot of time scrutinizing the old writing and would make the information, even though not fully accessible since not digitized, much faster and convenient to retrieve. It could also foster a more focused, perhaps on-demand finer grained transcription (using other methods, costlier algorithms or taking advantage of human expertise) of particularly relevant documents. | |||

== Bibliography and state of the art == | == Bibliography and state of the art == | ||

== | When working with digitized documents, Optical Character Recognition (OCR) is traditionally used to recognize words in a character-by-character fashion. However, in the case of offline handwritten text recognition, it does perform rather poorly. A more suited approach to this problem is the Word Spotting technology. First proposed in S. Madhvanath et al., (1996) <ref name="Manmatha1997"> | ||

== | Manmatha, R., Han, C., & Riseman, E. M. (1996, June). Word spotting: A new | ||

approach to indexing handwriting. In Computer Vision and Pattern Recognition, | |||

1996. Proceedings CVPR'96, 1996 IEEE Computer Society Conference on (pp. | |||

631-637). IEEE. </ref>, this approach does not attempt to recognize characters or wors per say, | |||

but tries instead to retrieve all instances matching a user query (either a word or an | |||

image) in a set of document images. It is noted that Word Spotting is of primary interest in the case of historical documents. <ref>Rath, T. M., & | |||

Manmatha, R. (2007). Word spotting for historical documents. International | |||

Journal on Document Analysis and Recognition, 9(2), 139-152.</ref> <ref name="Giotis2017">Giotis, A. P., Sfikas, G., Gatos, B., & Nikou, C. (2017). A survey of | |||

document image word spotting techniques. Pattern Recognition, 68, | |||

310-332.</ref> | |||

There are three main characteristics that constrain Word Spotting methods<ref name="Giotis2017"/>: | |||

# The need for a segmentation, which can be done by word, line or arbitrarily. Bad segmentation might results in errors if poorly chosen, thus there have been attempts at coming up with segmentation-free methods cf. Leydier et Al. (2007)<ref> Leydier, Y., Lebourgeois, F., & Emptoz, H. (2007). Text search for medieval manuscript images. Pattern Recognition, 40(12), 3552-3567.</ref>. | |||

# The way of searching for a word, either by string or by example. Search by example is more restrictive, provided an example as first to be identified prior to querying, while other methods have attempted to allow for a search by string cf. Edwards et Al. (2005) <ref> Edwards, J., Teh, Y. W., Bock, R., Maire, M., Vesom, G., & Forsyth, D. A. (2005). Making latin manuscripts searchable using gHMM's. In Advances in Neural Information Processing Systems (pp. 385-392).</ref> | |||

# The use of a training set, i.e. human annotated data. Most often, methods based on a training set appear to perform far better <ref name="Giotis2017"/>. | |||

Currently, the best performing methods rely on segmentation by word, querying by example and using a training set. Moreover, they also make use of Neural Networks.<ref name="Giotis2017"/> | |||

However, a preferable method would not need segmentation, and allow to query by string. New methods with such features have emerged and show promising results, c.f. Wilkinson et al. (2017)<ref>Wilkinson, T., Lindström, J., & | |||

Brun, A. (2017). Neural Ctrl-F: Segmentation-free Query-by-String Word Spotting | |||

in Handwritten Manuscript Collections. arXiv preprint arXiv:1703.07645.</ref>. | |||

<references /> | |||

== Detailed description of the method == | |||

=== Argus & Word Spotting === | |||

For Word Spotting itself, our method relies on a commercial algorithm called ArgusSearch, edited by the German company Planet, which we use as a black box. The Word Spotting is done on the company servers side, where an undisclosed trained model is used to perform the indexing of pages. For a subscription priced on the basis of a number of pages to index, one can send high resolution pictures to their servers and receive in a short while a database with the results of the indexing, which we can then query for names. | |||

However, the usage of this black-box at the core of our bot is not very problematic. It could be swapped easily for any other better performing algorithm, without changing the core functionalities of the method - for instance, the algorithm being developed at the DHLab at the time. Eventually, using several algorithms and comparing their results would allow cross-checking the results. | |||

=== Our implementation === | |||

To use this database, we created a simple server that connects to a postgresSQL database that contains the index. Then we can simply write http queries to search the indexed pages, the results returned is a json file containing the confidence of the match, the reference of the page with the match as well as the coordinates of the bounding box (which delimits the area of the match) We also created a simple front-end interface to allow for manual assessment in a visual way of the quality of results. | |||

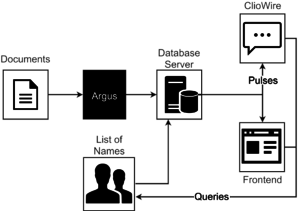

[[File:Pipeline_primarysources.png|300px|thumb|left|The pipeline of our method]] | |||

To retrieve the named entities (for the scope of this project, persons names), we were given a list of 3749 Venetian named entities, which we parsed down to a meaningful list of 405 unique last names. The use of Venetian names was relevant to our project, since the actual documents we had at our disposition came from the State Archives of Venice. Looking up full name (first + last names) gave too little results, while looking up first names only, although it got many matches from the database, did not appear relevant to us compared to last names only. As such, there would be no point in feeding a massive number of random mentions of first names - unless other bots are integrated, which could for instance infer and retrieve subsequent last names from the coordinates of the bounding boxes. We also tried queries based on publicly available list of Italian names, which wasn’t quite as successful. | |||

=== Pulses === | |||

It is not desirable to to query the server whenever and each time one is looking for information, hence ideally as much information as possible should be processed from the corpus at once, and published on ClioWire as formatted pulses. This way, knowledge is actually created by extracting key dormant informations from the documents. For this project, we created pulses based on the results of queries made with list of Venetian last names previously mentioned, filtered after a confidence threshold of 60%. | |||

As was agreed between all the actors building ClioWire (which novel information architecture rests on a mutual negotiation of the tags between the contributors), our pulses have the format “#Mention #Name_entity #In #document_uid #Source url #Prob_score score” | |||

Example : | |||

''Mention #Francesco_554eacdd_6734_4c91_9b9a_5e40869403e7_000490_942_5513_363_139 #In #554eacdd_6734_4c91_9b9a_5e40869403e7 #Source https://images.center/iiif/554eacdd-6734-4c91-9b9a-5e40869403e7-000490/942,5513,363,139/full/0/default.jpg #Prob_Score 0.95'' | |||

The pulse is organised in three key tags : first, it is clearly specified that the pulse is essentially the #mention of a #named entity, which is the name itself attached to the UUID of the volume. The purpose of this long unique identifier is to anticipate and resolve the expected ambiguities, whence the important need to have every single mention receive an unique id. It is always possible to define equivalence between mentioned entities in successive pulses. Then we specify again #in which #volume UUID is the mention present, to be able to search directly for volumes UUID in ClioWire (it is expected that future bots will be able to create links from UUIDs to volumes’ titles). Then we have the #source tag to provide a link to the bounded picture of the spotted named entity in the page, hosted on the DHlab server, and resized with IIIF. At last, there is an optional parameter #Prob_Score that shows the confidence score returned by the algorithm. [[File:Francescoraspibot.png|200px|thumb|left|Meet Francesco_raspi_bot]] | |||

The Python script *...* accessible in the GitHub repository performs the query on our corpus with the provided list of names. The pulses created are then posted on ClioWire by Francesco_raspi_bot (named after the glorious Venice Time Machine legendary character) bot on ClioWire. While creating the pulses, we set a json file to log the mention that have been posted as pulses already, so that they would not be added twice as two identical pulses. This file can be given as an argument to our script to control the pulse submission process. | |||

Finally, our whole method is essentially designed to become a bot of its own. With further work, it should be made automatic, so that new pulses with named entities published on ClioWire would eventually trigger further queries on the available corpora, and feed ClioWire back with more pulses. | |||

== Quantitive analysis of the performances == | |||

We were first given a sample of 791 pages. Then at the end of the project, the indexing of a collection of 30’000 pages was started, yet some problems were encountered on the Argus indexing server side, and by the time of performing the analysis, we had but another subset of 8’201 pages. We see two prior limitations of our analysis : the fact that many of the sample pages were indexes, meaning they could have been potentially written in a way that is easier for the model to classify (more clearly than less formally formatted handwritten documents), and the fact that many pages are blank. | |||

We firsted tested our preliminary sample of 791 pages to get an idea of the results we would get. | |||

Using a list of 980 italian firstnames, we got 904 matches @80% confidence threshold, a good result but not really relevant. | |||

Using the list of Venetian full names (677), we got 173 matches @60% confidence, a rather poor result. | |||

Using the list of Venetian last names (405), we got 3097 matches @60% confidence and 1192 matches @80% confidence. It is much better than with full names, but obviously the matches are more vague. So it appeared that a strategy focusing on last names only was the most interesting. Last names ideally discriminate a family, whereas first names are too loosely defining entities to be considered useful (though searching first names can still be relevant with helper bots). | |||

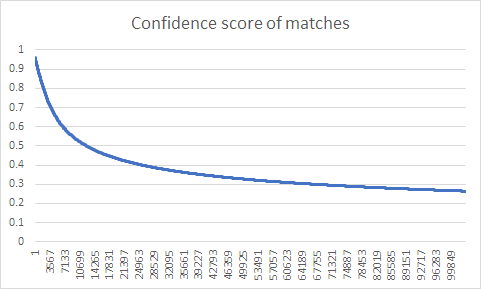

We then queried the 8'201 pages subset with our list of Venetian last names. The query had 103404 matches, out of which only 6592 had a confidence score above 60% (and would be posted as pulses, although the final submission of pulses will be made out of the 30’000 pages fully indexed database). The distribution is as follows, where we can see that it quickly drop under 50%, then slowly decay down to the search threshold 26% : | |||

[[File:Confidence_of_matches.png]] | |||

=== Precision/Recall === | |||

Because we mostly lack reference, estimating the quantitative performance by mean of the usual precision/recall with our method is not straightforward. As such, we cannot estimate the proper recall of the method, as we have no reasonable way to count the total number of occurrences of named entities documents, other than going through the 791 pages of the sample subset manually looking for them. | |||

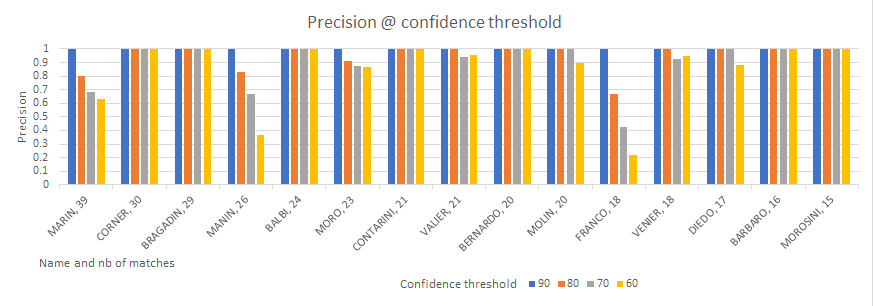

We can estimate the precision of the method (estimate false positives and false negatives). To do so, we run a query with the 405 parsed Venetian names that we have and we set a score for each name, the number of hits that it gets from the database above a threshold confidence of 60%. In the ArgusSearch SDK settings, the threshold is set at 26,4%, but we found that under 60% the precision was mostly too low to bother counting occurences. Then, we can rank the names by number of occurrence, pick say 15 highly matching names and manually query them through our visual interface. Finally, we can count the number of results that appear correct to our eyes by intervals of confidence. Results are as follows | |||

[[File:Precisions.png]] | |||

The system performs really well with some names with high precision @ all thresholds, whereas some other names encounter a lot of ambiguity problems : Franco is mostly matched as Francesco, Porta is retrieved as the Italian noun porto, etc. Therefore precision really depends on the name itself and its potential ambiguity. A name that is likely to obtain a high precision in the queries should be well distant from other names or words... | |||

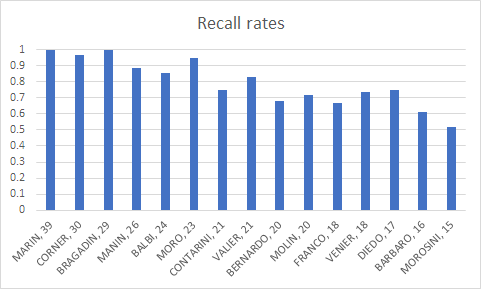

Regarding the recall, we could eventually filter our results by the page where the match is found, and compute recall on an even smaller sample. We chose to compute a less rigorous recall based on the results of the indexing. It happens that correct matches are given with a low index of confidence. We can consider them as false negatives and count their number to estimate this lesser recall. Results are as follows | |||

[[File:Recalls.png]] | |||

We see that recall looks quite good. There also seems to be a tendency for recall to decrease with a decrease number of matches. Yet, our sample is too small conclude. | |||

== Source Code == | |||

The source code of Francesco_Raspi_bot is available here [https://github.com/sriak/cliowire-primary-sources]. | |||

Latest revision as of 09:44, 20 December 2017

A method for retrieving mentions of named entities in Primary Sources using Word Spotting in the context of ClioWire.

Motivations

Technology is not yet able to fully transcribe text from old handwritten documents, yet these documents constitute the vast majority of primary sources of interest from a historian's perspective. Therefore, the ability to retrieve even just the mentions of named entities is very valuable. For instance, a historian looking for records on a certain person could quickly learn in which pages of which documents this person is mentioned, with a certain level of confidence. This would save them a lot of time scrutinizing the old writing and would make the information, even though not fully accessible since not digitized, much faster and more convenient to retrieve. It could also foster a more focused, perhaps on-demand, finer-grained transcription (using other methods, costlier algorithms or human expertise) of particularly relevant documents.

Bibliography and state of the art

When working with digitized documents, Optical Character Recognition (OCR) is traditionally used to recognize words character by character. However, it performs rather poorly on offline handwritten text. A better-suited approach to this problem is Word Spotting. First proposed by Manmatha et al. (1996) [1], this approach does not attempt to recognize characters or words per se, but instead tries to retrieve all instances matching a user query (either a word or an image) in a set of document images. Word Spotting is of primary interest in the case of historical documents. [2] [3]

There are three main characteristics that constrain Word Spotting methods[3]:

- The need for a segmentation, which can be done by word, by line or arbitrarily. A poorly chosen segmentation may result in errors, thus there have been attempts at coming up with segmentation-free methods, cf. Leydier et al. (2007) [4].

- The way of searching for a word, either by string or by example. Search by example is more restrictive, since an example first has to be identified prior to querying, while other methods have attempted to allow for a search by string, cf. Edwards et al. (2005) [5].

- The use of a training set, i.e. human-annotated data. Most often, methods based on a training set appear to perform far better [3].

Currently, the best-performing methods rely on segmentation by word, querying by example and the use of a training set. Moreover, they also make use of neural networks. [3] However, a preferable method would not need segmentation and would allow querying by string. New methods with such features have emerged and show promising results, cf. Wilkinson et al. (2017) [6].

- [1] Manmatha, R., Han, C., & Riseman, E. M. (1996, June). Word spotting: A new approach to indexing handwriting. In Computer Vision and Pattern Recognition, 1996. Proceedings CVPR'96, 1996 IEEE Computer Society Conference on (pp. 631-637). IEEE.

- [2] Rath, T. M., & Manmatha, R. (2007). Word spotting for historical documents. International Journal on Document Analysis and Recognition, 9(2), 139-152.

- [3] Giotis, A. P., Sfikas, G., Gatos, B., & Nikou, C. (2017). A survey of document image word spotting techniques. Pattern Recognition, 68, 310-332.

- [4] Leydier, Y., Lebourgeois, F., & Emptoz, H. (2007). Text search for medieval manuscript images. Pattern Recognition, 40(12), 3552-3567.

- [5] Edwards, J., Teh, Y. W., Bock, R., Maire, M., Vesom, G., & Forsyth, D. A. (2005). Making latin manuscripts searchable using gHMM's. In Advances in Neural Information Processing Systems (pp. 385-392).

- [6] Wilkinson, T., Lindström, J., & Brun, A. (2017). Neural Ctrl-F: Segmentation-free Query-by-String Word Spotting in Handwritten Manuscript Collections. arXiv preprint arXiv:1703.07645.

Detailed description of the method

Argus & Word Spotting

For Word Spotting itself, our method relies on a commercial algorithm called ArgusSearch, developed by the German company Planet, which we use as a black box. The Word Spotting is done on the company's servers, where an undisclosed trained model is used to index the pages. For a subscription priced according to the number of pages to index, one can send high-resolution pictures to their servers and shortly afterwards receive a database with the results of the indexing, which we can then query for names.

Relying on this black box at the core of our bot is not very problematic, however. It could easily be swapped for any better-performing algorithm without changing the core functionalities of the method - for instance, the algorithm being developed at the DHLab at the time. Eventually, using several algorithms and comparing their outputs would allow cross-checking the results.

Our implementation

To use this database, we created a simple server that connects to the PostgreSQL database containing the index. We can then simply write HTTP queries to search the indexed pages. The result returned is a JSON file containing the confidence of the match, the reference of the page with the match, and the coordinates of the bounding box (which delimits the area of the match). We also created a simple front-end interface to allow for a manual, visual assessment of the quality of the results.

[Figure: The pipeline of our method (Pipeline_primarysources.png)]
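As a rough illustration of how such a JSON result might be consumed, here is a minimal Python sketch. The field names (`confidence`, `page`, `bbox`), the `Match` class and the second sample entry are assumptions made for the example; the actual schema returned by our server may differ.

```python
import json
from dataclasses import dataclass


@dataclass
class Match:
    """One word-spotting hit (field names are assumed, not the real schema)."""
    confidence: float  # match confidence in [0, 1]
    page: str          # reference of the page containing the match
    bbox: tuple        # (x, y, width, height) of the bounding box


def parse_matches(payload: str) -> list:
    """Turn a JSON response body into a list of Match objects."""
    return [
        Match(item["confidence"], item["page"], tuple(item["bbox"]))
        for item in json.loads(payload)
    ]


# Hypothetical response body for a query on one last name.
sample = json.dumps([
    {"confidence": 0.95,
     "page": "554eacdd-6734-4c91-9b9a-5e40869403e7-000490",
     "bbox": [942, 5513, 363, 139]},
    {"confidence": 0.31,
     "page": "554eacdd-6734-4c91-9b9a-5e40869403e7-000491",
     "bbox": [100, 200, 300, 120]},
])

matches = parse_matches(sample)
# Keep only matches at or above the 60% threshold used for pulses.
confident = [m for m in matches if m.confidence >= 0.60]
print(len(matches), len(confident))
```

Keeping the parsing separate from the filtering makes it easy to try other thresholds during manual assessment.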

To retrieve the named entities (for the scope of this project, persons' names), we were given a list of 3749 Venetian named entities, which we parsed down to a meaningful list of 405 unique last names. The use of Venetian names was relevant to our project, since the documents we had at our disposal came from the State Archives of Venice. Looking up full names (first + last names) gave too few results, while looking up first names only, although it returned many matches from the database, did not appear as relevant to us as looking up last names only. There would be no point in feeding ClioWire a massive number of random mentions of first names - unless other bots are integrated, which could for instance infer and retrieve the subsequent last names from the coordinates of the bounding boxes. We also tried queries based on a publicly available list of Italian names, which were not quite as successful.

Pulses

It is not desirable to query the server each time one is looking for information. Ideally, as much information as possible should be processed from the corpus at once and published on ClioWire as formatted pulses. This way, knowledge is actually created by extracting key dormant information from the documents. For this project, we created pulses based on the results of queries made with the list of Venetian last names mentioned previously, filtered with a confidence threshold of 60%.

As was agreed between all the actors building ClioWire (whose novel information architecture rests on a mutual negotiation of the tags between the contributors), our pulses have the format “#Mention #Name_entity #In #document_uid #Source url #Prob_score score”

Example: Mention #Francesco_554eacdd_6734_4c91_9b9a_5e40869403e7_000490_942_5513_363_139 #In #554eacdd_6734_4c91_9b9a_5e40869403e7 #Source https://images.center/iiif/554eacdd-6734-4c91-9b9a-5e40869403e7-000490/942,5513,363,139/full/0/default.jpg #Prob_Score 0.95

The pulse is organised around three key tags. First, it is clearly specified that the pulse is essentially the #mention of a #named entity, which is the name itself attached to the UUID of the volume. The purpose of this long unique identifier is to anticipate and resolve the expected ambiguities, hence the need for every single mention to receive a unique ID. It is always possible to define an equivalence between the entities mentioned in successive pulses. Then we specify again #in which #volume UUID the mention is present, so that volume UUIDs can be searched directly in ClioWire (it is expected that future bots will be able to create links from UUIDs to the volumes’ titles). Then we have the #source tag, which provides a link to the picture of the spotted named entity cropped to its bounding box in the page, hosted on the DHLab server and resized with IIIF. Finally, there is an optional parameter #Prob_Score that shows the confidence score returned by the algorithm.

[Figure: Meet Francesco_raspi_bot (Francescoraspibot.png)]
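The pulse format can be illustrated with a short Python sketch that rebuilds the example above from its components. The function name `build_pulse` and its parameters are hypothetical and are not taken from the repository; the leading tag follows the agreed format (“#Mention”).

```python
def build_pulse(name, volume_uuid, page, bbox, score=None):
    """Assemble a pulse string following the agreed tag format.

    name        -- the spotted name, e.g. "Francesco"
    volume_uuid -- UUID of the volume, with dashes
    page        -- page number within the volume, as a zero-padded string
    bbox        -- (x, y, width, height) of the bounding box
    score       -- optional confidence score for the #Prob_Score tag
    """
    x, y, w, h = bbox
    # Tags use underscores where the UUID uses dashes.
    uid_tag = volume_uuid.replace("-", "_")
    entity = f"{name}_{uid_tag}_{page}_{x}_{y}_{w}_{h}"
    # IIIF-style URL cropping the page image to the bounding box.
    source = (
        f"https://images.center/iiif/{volume_uuid}-{page}/"
        f"{x},{y},{w},{h}/full/0/default.jpg"
    )
    pulse = f"#Mention #{entity} #In #{uid_tag} #Source {source}"
    if score is not None:
        pulse += f" #Prob_Score {score}"
    return pulse


pulse = build_pulse(
    "Francesco",
    "554eacdd-6734-4c91-9b9a-5e40869403e7",
    "000490",
    (942, 5513, 363, 139),
    score=0.95,
)
print(pulse)
```

Because the entity tag embeds the volume, the page and the bounding box, two mentions of the same name at different locations always receive distinct identifiers.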

The Python script *...* accessible in the GitHub repository performs the query on our corpus with the provided list of names. The pulses created are then posted on ClioWire by the Francesco_raspi_bot bot (named after the glorious Venice Time Machine legendary character). While creating the pulses, we keep a JSON file that logs the mentions already posted as pulses, so that no pulse is ever posted twice. This file can be given as an argument to our script to control the pulse submission process.
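The logging idea could look roughly like the following Python sketch. It is not the script from the repository: the function names, the log layout (a JSON list of pulse strings) and the `post` callback are assumptions made for illustration.

```python
import json
import os
import tempfile


def load_posted(log_path):
    """Return the set of pulses already posted (empty if no log exists yet)."""
    if not os.path.exists(log_path):
        return set()
    with open(log_path, encoding="utf-8") as fh:
        return set(json.load(fh))


def post_new_pulses(pulses, log_path, post):
    """Post only pulses absent from the log, then update the log.

    `post` is any callable that submits one pulse; here it stands in for
    the real ClioWire submission, which is not shown.
    """
    posted = load_posted(log_path)
    new = []
    for pulse in pulses:
        if pulse in posted:
            continue  # already submitted in this or an earlier run
        post(pulse)
        posted.add(pulse)
        new.append(pulse)
    with open(log_path, "w", encoding="utf-8") as fh:
        json.dump(sorted(posted), fh)
    return new


# Usage with an in-memory stand-in for the submission step.
log_file = os.path.join(tempfile.mkdtemp(), "posted.json")
sent = []
first = post_new_pulses(["#Mention #A", "#Mention #B", "#Mention #A"],
                        log_file, sent.append)
second = post_new_pulses(["#Mention #B"], log_file, sent.append)
print(first, second)
```

Writing the log only after the loop keeps the sketch short; a more careful version would write after each successful post so that an interrupted run does not lose track of what was sent.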

Finally, our whole method is essentially designed to become a bot of its own. With further work, it should be made automatic, so that new pulses with named entities published on ClioWire would eventually trigger further queries on the available corpora, and feed ClioWire back with more pulses.

Quantitative analysis of the performance

We were first given a sample of 791 pages. Then, at the end of the project, the indexing of a collection of 30’000 pages was started, but some problems were encountered on the Argus indexing server side, and by the time of the analysis we only had a further subset of 8’201 pages. We see two limitations of our analysis from the outset: many of the sample pages are indexes, meaning they may have been written in a way that is easier for the model to classify (more clearly than less formally formatted handwritten documents), and many pages are blank.

We first tested our preliminary sample of 791 pages to get an idea of the results we would get. Using a list of 980 Italian first names, we got 904 matches at an 80% confidence threshold, a good result but not really relevant. Using the list of Venetian full names (677), we got 173 matches at 60% confidence, a rather poor result. Using the list of Venetian last names (405), we got 3097 matches at 60% confidence and 1192 matches at 80% confidence. This is much better than with full names, but obviously the matches are more vague. So it appeared that a strategy focusing on last names only was the most interesting. Last names ideally discriminate a family, whereas first names define entities too loosely to be considered useful (though searching first names can still be relevant with helper bots).

We then queried the 8’201-page subset with our list of Venetian last names. The query returned 103404 matches, out of which only 6592 had a confidence score above 60% (and would be posted as pulses, although the final submission of pulses will be made from the fully indexed database of 30’000 pages). The distribution is as follows; we can see that it quickly drops under 50%, then slowly decays down to the search threshold of 26%:

[Figure: Confidence_of_matches.png]
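To put these two counts in proportion, the share of matches that pass the 60% threshold can be computed directly. The small Python sketch below uses only the figures quoted above; the helper `count_above` and its toy input are illustrative assumptions.

```python
def count_above(confidences, threshold):
    """Count the confidence scores strictly above a threshold."""
    return sum(1 for c in confidences if c > threshold)


total_matches = 103404  # all matches returned for the 8'201 pages
above_60 = 6592         # matches with a confidence score above 60%

share = above_60 / total_matches
print(f"{share:.1%}")  # prints 6.4%

# Toy list of scores (made up) showing the same filter on raw data.
toy_scores = [0.95, 0.61, 0.48, 0.30, 0.27]
print(count_above(toy_scores, 0.60))
```

In other words, roughly one match in sixteen is confident enough to be turned into a pulse.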

Precision/Recall

Because we mostly lack reference data, estimating the quantitative performance of our method by means of the usual precision/recall is not straightforward. We cannot estimate the proper recall of the method, as we have no reasonable way to count the total number of occurrences of named entities in the documents, other than going through the 791 pages of the sample subset manually looking for them. We can, however, estimate the precision of the method (i.e. the share of returned matches that are not false positives). To do so, we run a query with the 405 parsed Venetian names that we have and we assign a score to each name: the number of hits it gets from the database above a confidence threshold of 60%. In the ArgusSearch SDK settings, the threshold is set at 26.4%, but we found that under 60% the precision was mostly too low to bother counting occurrences. Then, we rank the names by number of occurrences, pick, say, 15 highly matching names and manually query them through our visual interface. Finally, we count the number of results that appear correct to our eyes, by intervals of confidence. Results are as follows:

[Figure: Precisions.png]
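The counting by intervals of confidence described above could be sketched as follows in Python. The labelled matches are invented for the example and are not our measurements; the function name and the interval bounds are assumptions as well.

```python
from collections import defaultdict


def precision_by_interval(labelled, edges):
    """Compute precision per confidence interval.

    labelled -- iterable of (confidence, is_correct) pairs from manual review
    edges    -- ascending interval bounds, e.g. [0.6, 0.7, 0.8, 0.9, 1.0]
    """
    counts = defaultdict(lambda: [0, 0])  # interval -> [correct, total]
    for conf, ok in labelled:
        for lo, hi in zip(edges, edges[1:]):
            # The last interval is closed on the right so 1.0 is counted.
            if lo <= conf < hi or (hi == edges[-1] and conf == hi):
                counts[(lo, hi)][0] += int(ok)
                counts[(lo, hi)][1] += 1
                break
    return {k: correct / total for k, (correct, total) in counts.items()}


# Made-up manual judgements for one name: (confidence, looks correct?).
reviewed = [(0.95, True), (0.91, True), (0.85, False),
            (0.72, True), (0.65, False), (0.62, False)]
result = precision_by_interval(reviewed, [0.6, 0.7, 0.8, 0.9, 1.0])
for interval, precision in sorted(result.items()):
    print(interval, precision)
```

Precision here is simply the number of matches judged correct divided by the number of matches returned in that interval.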

The system performs really well with some names, with high precision at all thresholds, whereas some other names encounter a lot of ambiguity problems: Franco is mostly matched as Francesco, Porta is retrieved as the Italian noun porto, etc. Therefore, precision really depends on the name itself and its potential ambiguity. A name that is likely to obtain a high precision in the queries should be quite distant from other names or words.
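One possible way to make this notion of distance concrete is the edit (Levenshtein) distance between strings. This is an illustrative assumption on our part: ArgusSearch works on images, and we do not know which notion of similarity it uses internally. A minimal Python sketch:

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum number of insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


# The two ambiguous pairs mentioned above, compared case-insensitively.
d_franco = levenshtein("franco", "francesco")
d_porta = levenshtein("porta", "porto")
print(d_franco, d_porta)
```

Names whose closest neighbour in the query list or in common vocabulary is only a few edits away, such as these two, are the ones for which we would expect ambiguity.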

Regarding the recall, we could eventually filter our results by the page where the match is found, and compute recall on an even smaller sample. We chose instead to compute a less rigorous recall based on the results of the indexing. It happens that correct matches are returned with a low confidence score. We can consider them as false negatives and count them to estimate this lesser recall. Results are as follows:

[Figure: Recalls.png]

We see that recall looks quite good. There also seems to be a tendency for recall to decrease as the number of matches decreases. Yet, our sample is too small to conclude.
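This lesser recall can be written as TP / (TP + FN), where TP counts the correct matches at or above the 60% threshold and FN counts the correct matches that fall below it. A minimal Python sketch, with invented confidence scores and a hypothetical function name:

```python
def lesser_recall(correct_confidences, threshold=0.60):
    """Recall restricted to matches the index returned at all.

    correct_confidences -- confidence scores of the matches judged correct
    True positives are correct matches at or above the threshold; correct
    matches below it are counted as false negatives. Occurrences the index
    never returned are not counted, so this overestimates the true recall.
    """
    if not correct_confidences:
        return 0.0
    tp = sum(1 for c in correct_confidences if c >= threshold)
    fn = len(correct_confidences) - tp
    return tp / (tp + fn)


# Made-up scores of matches judged correct for one name.
recall = lesser_recall([0.95, 0.91, 0.72, 0.55, 0.40])
print(recall)
```

The result should be read as an upper bound, since correct occurrences that the index missed entirely never enter the count.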

Source Code

The source code of Francesco_Raspi_bot is available at https://github.com/sriak/cliowire-primary-sources.