Humans of Paris 1900: Difference between revisions

| (37 intermediate revisions by 3 users not shown) | |||

| Line 2: | Line 2: | ||

== Motivation == | == Motivation == | ||

Our project takes inspiration from the famous Instagram page, [https://en.wikipedia.org/wiki/Humans_of_New_York Humans of New York], which features pictures and stories of people living in current day New York. | Our project takes inspiration from the famous Instagram page, [https://en.wikipedia.org/wiki/Humans_of_New_York Humans of New York], which features pictures and stories of people living in present-day New York City. In a similar fashion, our project, Humans of Paris, aims to be a platform that connects us to the people of 19th century Paris. | ||

In similar fashion, our project, Humans of Paris, has the aim to be a platform to connect us to the people of 19th century Paris. | |||

Photography was still in its early stages when [https://en.wikipedia.org/wiki/Nadar Nadar] took up the craft in his atelier in Paris. Through the thousands of pictures taken by him and his son we can get a glimpse of who lived at the time. | Photography was still in its early stages when [https://en.wikipedia.org/wiki/Nadar Nadar] took up the craft in his atelier in Paris. Through the thousands of pictures taken by him and his son we can get a glimpse of who lived at the time. We explore the use of deep learning models to cluster similar faces to get an alternative, innovative view of the collection which allows serendipitous discoveries of patterns and people. | ||

We explore the use of deep learning models to cluster similar faces to get an alternative, innovative view of the collection | |||

There is a story behind every person, and our interface highlights this by associating people’s stories with their pictures. | There is a story behind every person, and our interface highlights this by associating people’s stories with their pictures. | ||

| Line 11: | Line 10: | ||

[[File:Nadars.gif|500px|right|thumb|Atelier Nadar - rendering by [https://data.bnf.fr/fr/16763735/jerome_le_scanff/ Jérôme Le Scanff]]] | [[File:Nadars.gif|500px|right|thumb|Atelier Nadar - rendering by [https://data.bnf.fr/fr/16763735/jerome_le_scanff/ Jérôme Le Scanff]]] | ||

The collection at the heart of our project is comprised | The collection at the heart of our project comprises over 23000 photographs (positives) and caricatures by Atelier Nadar. We focus on individual portraits of people for our interface. | ||

The portrait studio Atelier Nadar was founded by Nadar, otherwise known by his birth name Felix Tournachon (1820-1910). Nadar | The portrait studio Atelier Nadar was founded by the first Nadar, otherwise known by his birth name Felix Tournachon (1820-1910), and later run by his brother Adrien and his son Paul. The first Nadar established himself as a known caricaturist after working as a journalist. Then, having been introduced to photography by his brother, Adrien, he soon realized the potential of this new art form and, with the support of a banker friend, went on to create the studio in Paris in 1854. | ||

<ref> | <ref>MetMuseum: https://www.metmuseum.org/toah/hd/nadr/hd_nadr.htm November 2019</ref> | ||

Photography had started to become a viable business as new collodion-on-glass negatives, invented in 1851, allowed for the creation of copies from one negative while being cheaper than previous methods due to the use of glass all while having short exposure times. <ref> | Photography had started to become a viable business as new collodion-on-glass negatives, invented in 1851, allowed for the creation of copies from one negative while being cheaper than previous methods due to the use of glass all while having short exposure times. <ref>MetMuseum: https://www.metmuseum.org/toah/hd/nadr/hd_nadr.htm November 2019</ref> At the same time, in the 19th century, subtle social differences defined the world of photography. The competition between photographic studios, whose number mushroomed in the 1860s, led to a dual phenomenon. On the one hand, it resulted in specialization: none of the studios were generalists and each of them established their mastery in specific domains. On the other hand, the large portrait studios had different target audiences, making them socially identifiable. | ||

"Tell me who took your portrait and how it was taken, and I’ll tell you who you are in society’s eyes." | |||

<ref>http://expositions.bnf.fr/les-nadar/en/the-art-of-the-portrait.html</ref> | |||

Nadar himself believed that | In this sense, Nadar’s studio is characterized by his anti-royalist leanings. The studio was frequented by anyone in the public eye, such as politicians, famous artists and writers of the time. The photos capture famous individuals who still hold great cultural importance today, including Liszt, Victor Hugo and Baudelaire. | ||

His talent in engaging | His minimalistic, no-frills style and lack of editing allow the photos to highlight the expressions and, as such, the personalities of his subjects. Nadar himself believed that his feel for light and how he connected to the subjects of his photographs set him apart from other photographers. His talent in engaging people was especially important in the mid 19th century as the camera was a bulky box under which the photographer would disappear. <ref>https://www.theguardian.com/books/2015/dec/23/books-felix-nadar-france-photography-flight</ref> | ||

Later, starting in 1860, as Nadar himself moved on to other endeavors, his son, Paul, took over the studio. Paul moved the studio into a more commercial direction, in part due to the increasing competition. A different style of photography started to emerge from | Later, starting in 1860, as Nadar himself moved on to other endeavors, his son, Paul, took over the studio. Paul moved the studio into a more commercial direction, in part due to the increasing competition. Different styles of photography started to emerge from this studio, such as theater photography and miniature portrait photography. | ||

The collection held by the | The collection held by the French state was acquired in 1949 and since 1965 the positives of the photos are stored with the BnF, while the negatives are held at Fort de Saint-Cyr | ||

<ref>https://gallica.bnf.fr/html/und/images/acces-par-collection?mode=desktop</ref> | |||

By looking into our project, we can see the personalities that Nadar captured from the liberal bourgeoisie and how the collection changed as the Third Nadar took charge of the studio, giving an interesting representation of France during its turbulent and beautiful years. | |||

== Implementation == | == Implementation == | ||

[[File:tags.gif|300px|right|thumb| Our tag search interface]] | |||

[[File:facemap.png|300px|right|thumb| Our facemap interface]] | |||

=== Website Description === | === Website Description === | ||

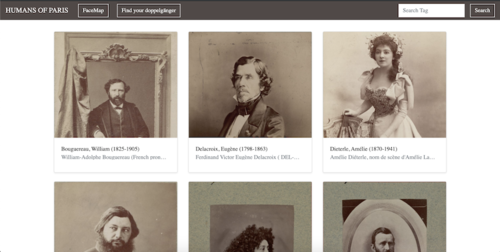

In more concrete terms, our project involves four core interfaces. | In more concrete terms, our project involves four core interfaces. | ||

* A home page highlighting the most known individuals according | * A home page highlighting the most known individuals according to the number of pictures or the amount of text written about them in Wikipedia | ||

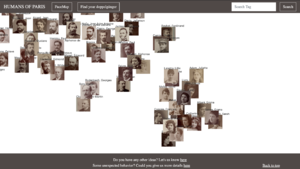

* A page (FaceMap) that | * A page (FaceMap) that clusters and plots faces of the people in the dataset, highlighting similarities and differences among them | ||

* A page to find your 19th century [https://en.wikipedia.org/wiki/Doppelg%C3%A4nger doppelgänger], for fun and to gather interest in people the user might otherwise never have known. | * A page to find your 19th century [https://en.wikipedia.org/wiki/Doppelg%C3%A4nger doppelgänger], for fun and to gather interest in people the user might otherwise never have known. | ||

* A way to search using tags, to allow users to find individuals of interest. | * A way to search using tags, to allow users to find individuals of interest. | ||

To each person in the pictures we associate background information crawled from wikipedia. | To each person in the pictures we associate background information crawled from wikipedia. | ||

All the code is available | All the code is available on [https://github.com/liabifano/humans-of-paris-1900 github] and the detailed instructions to run the application can be found in the README.md file. | ||

== Methods & Evaluation == | == Methods & Evaluation == | ||

To create our interface, we need the following data: | |||

* names, age and gender for each individual we want to include in our interface | |||

* the pictures corresponding to each individual | |||

* a mapping from each picture to a given individual | |||

* the wikipedia page of each individual (if present) | |||

* tags for tag search | |||

* a vector representing peoples faces to power the facemap and doppelgänger search | |||

Then we need to build a front and backend to serve our interface to users. | |||

The following section now describes how we acquired and processed the needed data and set up our website. | |||

A quality assessment is given after each method description under "Evaluation". | |||

=== Getting & Processing Metadata === | === Getting & Processing Metadata === | ||

[[File:Preprocessing.PNG|400px|right|thumb|Workflow of metadata to give additional information of images]] | [[File:Preprocessing.PNG|400px|right|thumb|Workflow of metadata to give additional information of images]] | ||

As a first step in the processing pipeline, we use the [http://fdh.epfl.ch/index.php/Gallica_wrapper Gallica Wrapper provided by Raphael] to get a list of all the photos in the collection of the foundation Nadar | As a first step in the processing pipeline, we use the [http://fdh.epfl.ch/index.php/Gallica_wrapper Gallica Wrapper provided by Raphael] to get a list of all the photos in the collection of the foundation Nadar in Gallica. Nadar's collection contains a variety of genres: portraits, comics, caricatures, landscapes, sculptures, etc. In order to stick to our emphasis on ‘people of 19th century Paris’, we filtered out photographs that are not directly relevant to people of that time. | ||

===== Getting individual portraits ===== | ===== Getting individual portraits ===== | ||

We used metadata of Gallica collection to filter irrelevant photographs. Among a number of attribute objects in the metadata, we concentrated on the ‘dc:subject’ attribute. This field contains a list | We used metadata of Gallica collection to filter irrelevant photographs. Among a number of attribute objects in the metadata, we concentrated on the ‘dc:subject’ attribute. This field contains a list with detailed information about the photograph and the entity in that photo: [Names of individuals],(year of birth - year of death) -- [genre of the photograph]. We ignored subjects who didn't have the substring “-- Portraits” and returned the new list of subjects so we could discard the landscape, comics, caricatures, and sculptures. | ||

After filtering only ‘Portraits’, we had column | After filtering only ‘Portraits’, we had a column containing a list that varied in length: that is, the number of people featured in photographs differed. Since our intention is to connect ourselves to people of 19th century Paris by presenting the story of each Parisian in the photograph, we filtered out the photographs that feature more than one person. | ||
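The two filtering steps described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function names, the record layout (a mapping from photo identifier to its list of ‘dc:subject’ strings) and the sample records are all assumptions made for the example.

```python
PORTRAIT_MARKER = "-- Portraits"


def portrait_subjects(subjects):
    """Keep only the dc:subject entries that describe a portrait."""
    return [s for s in subjects if PORTRAIT_MARKER in s]


def single_person_portraits(records):
    """Return {photo_id: subject} for photos portraying exactly one person.

    `records` maps a photo identifier to its list of dc:subject strings
    (an assumed layout, chosen for this sketch).
    """
    kept = {}
    for photo_id, subjects in records.items():
        portraits = portrait_subjects(subjects)
        if len(portraits) == 1:
            kept[photo_id] = portraits[0]
    return kept


# Hypothetical records, not taken from the real collection.
records = {
    "photo-a": ["Dupont, Marie (1830-1900) -- Portraits"],
    "photo-b": ["Paris (France) -- Vues"],
    "photo-c": [
        "Martin, Jean (1825-1890) -- Portraits",
        "Martin, Louise (1828-1895) -- Portraits",
    ],
}
selected = single_person_portraits(records)
```

Here only "photo-a" survives: "photo-b" has no portrait subject and "photo-c" features two people.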

In order to filter and get insights of people who work in the same field or had the same role we created the concept of tags that helps us to access and query groups of people and will be explained later. | |||

====== First attempt ====== | ====== First attempt ====== | ||

Gallica ‘dc:subject’ metadata | Gallica ‘dc:subject’ metadata has very brief information on each person - only name, year of birth, and year of death, which is not enough information to categorise each person. Thus, in our first attempt, we tried to use ‘dc:title’ metadata (that gives title of each photograph) to get additional information on people. However, titles were inconsistent in their formatting, making it hard to preprocess and extract useful information from them. Moreover, most of the time, titles of images contained either the name of people in the photograph, or the fictional characters the people (especially performers) played. Since this approach did not give us useful information to categorise people, we decided to move to our second approach which consisted in querying the names we have to get well-formulated information associated with the names and the personages. | ||

====== Second attempt ====== | ====== Second attempt ====== | ||

Data.bnf.fr is the project driven by the BnF in order to make the data produced by the BnF more visible on the Web | Data.bnf.fr is the project driven by the BnF in order to make the data produced by the BnF more visible on the Web and federate them within and outside the catalogues. | ||

Since Gallica is one of the projects of the BnF, it is reasonable to assume that a person who has their name in Gallica metadata will have some document or page in data.bnf.fr semantic web. In order to get corresponding pages, we queried metadata | Since Gallica is one of the projects of the BnF, it is reasonable to assume that a person who has their name in Gallica metadata will have some document or page in data.bnf.fr semantic web. In order to get corresponding pages, we queried metadata through a python [https://github.com/RDFLib/sparqlwrapper SPARQL API] wrapper. To do so, we had to understand the XML schema that is returned by the API. | ||

The Data.bnf XML schema has three name attributes: foaf:name, foaf:givenName, foaf:familyName. We | The Data.bnf XML schema has three name attributes: foaf:name, foaf:givenName, foaf:familyName. We checked how names in <dc:subject> are arranged, compared the arrangement with some names in Data.bnf, rearranged the names accordingly and queried them. | ||

Sometimes query | Sometimes query results contained different entities that had the same name. In this case, we exploited the fact that we are handling 19th century data taken by Nadar and among the namesakes, we chose the one whose living period overlaps the most with that of Nadar. | ||
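The namesake rule above can be sketched as picking the candidate whose lifespan overlaps most with Nadar's (1820-1910, as given earlier on this page). The candidate dictionaries, their keys and the identifiers below are hypothetical and only serve the illustration.

```python
NADAR_LIFESPAN = (1820, 1910)  # birth and death year of Felix Tournachon


def overlap_years(span, reference=NADAR_LIFESPAN):
    """Number of years two (birth, death) spans have in common."""
    start = max(span[0], reference[0])
    end = min(span[1], reference[1])
    return max(0, end - start)


def pick_namesake(candidates):
    """Choose the candidate whose lifespan overlaps most with Nadar's.

    Each candidate is a dict with 'uri', 'birth' and 'death' keys
    (an assumed structure for this sketch).
    """
    return max(
        candidates,
        key=lambda c: overlap_years((c["birth"], c["death"])),
    )


# Hypothetical query results sharing the same name.
candidates = [
    {"uri": "candidate-1", "birth": 1700, "death": 1760},
    {"uri": "candidate-2", "birth": 1830, "death": 1895},
    {"uri": "candidate-3", "birth": 1890, "death": 1960},
]
best = pick_namesake(candidates)
```

With these made-up lifespans the overlaps are 0, 65 and 20 years, so the second candidate is chosen.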

==== Creating Tags out of metadata ==== | ==== Creating Tags out of metadata ==== | ||

The table from the data.bnf html page contains a variety of useful information, such as: name, nationality, language, gender, short description. | The table from the data.bnf html page contains a variety of useful information, such as: name, nationality, language, gender, short description. We used the description and nationality to create some tags. | ||

We used the description and nationality to create tags. | To get tags from the descriptions we considered using the most frequent words that appear across different people (stop-words were excluded) as tags. | ||

The problem with this approach is that some important keywords and entities are broken up using the word count method; for example, “Legion d’honneur”, is split into “legion” and “d’honneur” and the original meaning is lost. | |||

The problem with this is that some important keywords and entities are broken up | We were able to handle this issue manually. First of all, we checked the word count without stop-words. Then we got frequent words that are not related to occupations. After this we checked notes that contain those words and examined whether they are part of important (and frequent) phrases. For the found phrases, we concatenated them as one word so we could use it as a tag. After this step, we fetched 300 major keywords, some being feminine or plural forms of others. Cleaning those semantic duplicates was also done manually; as a future improvement, this task could be accomplished by using a French NLP library. | ||

Once this list of possible tags is created, we assigned tags to each picture by taking the intersection between the list of all tags and the list of words in each description. | |||

We handle this | |||

Once this list of possible tags is created, we assigned tags | |||
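The tag assignment can be sketched as a set intersection between the curated tag list and the words of each description, after joining known multi-word phrases into a single token. The phrase mapping, the tag list and the sample description below are hypothetical examples, and the joining convention (an underscore) is an assumption.

```python
import re

# Hypothetical phrase mapping: multi-word expressions joined into one token.
PHRASES = {"legion d'honneur": "legion_d'honneur"}


def tokenize(description):
    """Lower-case a description, join known phrases, and return its word set."""
    text = description.lower()
    for phrase, joined in PHRASES.items():
        text = text.replace(phrase, joined)
    return set(re.findall(r"[\w']+", text))


def assign_tags(description, known_tags):
    """Tags of a person: intersection of the tag list and the description words."""
    return sorted(tokenize(description) & known_tags)


# Hypothetical tag list and description.
known_tags = {"peintre", "chanteur", "legion_d'honneur"}
tags = assign_tags("Peintre. Chevalier de la Legion d'honneur", known_tags)
```

Joining the phrase before tokenizing keeps "Legion d'honneur" as one tag instead of splitting it into two unrelated words.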

==== Using Wikipedia as criteria of importance ==== | ==== Using Wikipedia as criteria of importance ==== | ||

Data.bnf contains useful information on each person, but it is hard to infer their historic influence only by looking at the table. | Data.bnf contains useful information on each person, but it is hard to infer their historic influence just by looking at the table. To circumvent this limitation, we used Wikipedia as a criterion to define people’s ‘importance’. That is, the more images on their page, the more important they are. | ||

We used Wikipedia API library that is available in | We used the Wikipedia API library that is available on [https://pypi.org/project/wikipedia/ PyPI]. | ||

Each request is made using this API. The response to a query containing a person's name contains a Wikipedia page, the number of images and the reference links connected with this person. We turned on the auto-suggestion mode of the library to be able to find more than just the 'exact matches'. Since this might lead to numerous false positives, we verified that the content of each page contains the name and the birth/death year of the person. | |||

If the content of the Wikipedia page does not sufficiently overlap with what we would expect, we considered this a false positive and ignored the result. We use the number of images in each Wikipedia page to define the ‘weight’ of a person, which indicates how important they are. We queried every record in English and French Wikipedia in order to represent the importance outside francophone culture as well. The final weight (or importance) is the average of the two. | |||
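The verification and weighting steps can be sketched without calling the Wikipedia API at all. The function names and sample values below are hypothetical, and treating a rejected or missing page as contributing zero images is an assumption of this sketch rather than something stated above.

```python
def looks_like_same_person(page_text, name, birth_year, death_year):
    """Accept a page only if it mentions the name and both life years."""
    text = page_text.lower()
    return (
        name.lower() in text
        and str(birth_year) in text
        and str(death_year) in text
    )


def importance(images_en, images_fr):
    """Weight of a person: average image count over English and French pages.

    A rejected or missing page is assumed to contribute 0 images.
    """
    return (images_en + images_fr) / 2


# Hypothetical page snippets for a made-up person.
good_page = "Marie Dupont (1830-1900) was a French singer."
wrong_page = "Marie Dupont is a commune in the north of France."

accepted = looks_like_same_person(good_page, "Marie Dupont", 1830, 1900)
rejected = looks_like_same_person(wrong_page, "Marie Dupont", 1830, 1900)
weight = importance(images_en=10, images_fr=4)
```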

''' Evaluation ''' | ''' Evaluation ''' | ||

| Line 88: | Line 104: | ||

|- | |- | ||

! Metadata | ! Metadata | ||

! Accuracy (%) | ! Positive Accuracy (%) | ||

! Negative Accuracy (%) | |||

|- | |- | ||

| Data.bnf.fr page | | Data.bnf.fr page | ||

| | | 92 | ||

| -- | |||

|- | |- | ||

| English Wikipedia | | English Wikipedia | ||

| 100 | | 100 | ||

| 80 | |||

|- | |- | ||

| French Wikipedia | | French Wikipedia | ||

| 98 | | 98 | ||

| 80 | |||

|} | |} | ||

Mismatches in data.bnf.fr happened for the ones who have namesakes (the person who bears the identical name) in the database and the ones whose name or birth/death year written in <dc:subject> attribute are ambiguous. | Mismatches in data.bnf.fr happened for the ones who have namesakes (the person who bears the identical name) in the database and the ones whose name or birth/death year written in <dc:subject> attribute are ambiguous. Positive results in English Wikipedia were shown to have surprisingly good accuracy in this sample. Mismatches in French Wikipedia happened for queries that do not have a Wikipedia page, in which case the auto-suggestion algorithm redirected us to an unrelated page. In contrast, the error for negative Wikipedia queries is around 20%. This was due to either 1) the Wikipedia API auto-suggestion being incomplete and therefore not returning anything even if there was a matching page, or 2) the queried person being so well known that the auto-suggested query returned the list of the person's works rather than the page on the person. | ||

English Wikipedia | Overall, the error in metadata processing occurred for the people with limited information or record and Wikipedia API incompleteness. Even though it shows a fair result, one way to improve this is to use 'external reference' stored in data.bnf that contains wikidata and wikipedia links. | ||

Overall, the error in metadata processing occurred for the people with limited information or record. | |||

=== Image processing and cropping === | === Image processing and cropping === | ||

To give users a better aesthetic experience on our webpage, we decided to crop the background on which the photograph is placed. Cropping the background was done based on edge detection | To give users a better aesthetic experience on our webpage, we decided to crop the background on which the photograph is placed. Cropping the background was done based on edge detection and we based our code off of (https://github.com/polm/ndl-crop) but made modifications to adapt it to use on sepia photography on blue backgrounds. We added preprocessing step that sets the blue background to white, determined using RGB values. | ||

It uses image processing techniques provided by OpenCV to find the contours in the images, from which we infer boundaries | It uses image processing techniques provided by OpenCV to find the contours in the images, from which we can infer boundaries and setting the blue background to white gives us better contour detection. Additionally, using heuristics about the size of the photo against the background we choose the most appropriate boundary. | ||

Cropping the face is done using the facial rectangle returned by the py-agender api (see below) and | Cropping the face is done using the facial rectangle returned by the [https://pypi.org/project/py-agender/ py-agender] API (see below) and MTCNN (https://github.com/ipazc/mtcnn), which is another Python library implementing a Deep Neural Network as proposed by FaceNet (also used to find the facial vectors, see openface). Py-agender was much quicker so we use it for the initial cropping. | ||

'''Evaluation''' | '''Evaluation''' | ||

To check how well the background is cropped, we randomly sampled 100 images and check for the cropping | To check how well the background is cropped, we randomly sampled 100 images and checked the cropping: 91% of the images are correctly cropped. The incorrectly cropped images contain some border but still have the face in them, and none of them are overcropped. We might be able to do better by tuning the assumptions we make to choose the most appropriate boundary, as we are currently missing very large and very small pictures; however, for the current stage of the project we find this accuracy to be sufficient. | ||

Looking at the face cropping done with py-agender, we find a total of 52 incorrectly cropped images. | Looking at the face cropping done with py-agender, we find a total of 52 incorrectly cropped images. Besides that, we find 71 images where no face could be found despite one being in the image. We find these numbers by looking through all 1874 cropped images. | ||

We rectify the badly cropped images using the MTCNN library, which correctly identifies the cropped images that do not contain a face and correctly crops those images given the original image. In the end we get 5 incorrectly cropped portraits | We rectify the badly cropped images using the MTCNN library, which correctly identifies the cropped images that do not contain a face and correctly crops those images given the original image. In the end we get just 5 incorrectly cropped portraits, which were all very washed out and damaged photos, and we replaced those images with manually cropped faces. The accuracy of the method could be improved by re-training each model with our data, to better match our use-case. | ||

The accuracy of the method could be improved by re-training each model with our data, to better match our use-case. | |||

=== Age and Gender === | === Age and Gender === | ||

The data from the Gallica API wrapper contains neither information about gender nor about the age of the person pictured at the time the photograph was taken. | The data from the Gallica API wrapper contains neither information about gender nor about the age of the person pictured at the time the photograph was taken. Therefore we had to use some other methods to guess the age and gender of an individual in the image. We later found that data.bnf has ground truth gender labels for many individuals in the database, so we used these to evaluate the estimated gender labels and used the ground truth labels in our final product. | ||

Therefore had to use some other | |||

We later find that data.Bnf has ground truth labels for the gender for many individuals in the database | |||

Given the data at hand, we could use the name to guess the gender. However, a quick survey of available methods to detect gender from names shows that no database adapted to the task of classifying 19th century french names exists. Not having the means to create such a database from scratch, we focus on the photo and faces in them. | Given the data at hand, we could use the name to guess the gender. However, a quick survey of available methods to detect gender from names shows that no database adapted to the task of classifying 19th century french names exists. Not having the means to create such a database from scratch, we focus on the photo and faces in them. | ||

Detecting age and gender from photos has long been a topic of Deep Learning research | Detecting age and gender from photos has long been a topic of Deep Learning research, and many pre-trained state of the art models trained on modern data exist. People presumably have not changed much in 100 years, so we can expect these methods to work well. | ||

We use the python library py-agender which implements a state of the art model proposed by Rasmus Rothe et al., the implementation can be found [https://github.com/yu4u/age-gender-estimation here] and it was trained on the [https://github.com/imdeepmind/processed-imdb-wiki-dataset IMDB-Wiki dataset]. | We use the python library py-agender which implements a state of the art model proposed by Rasmus Rothe et al., the implementation can be found [https://github.com/yu4u/age-gender-estimation here] and it was trained on the [https://github.com/imdeepmind/processed-imdb-wiki-dataset IMDB-Wiki dataset]. | ||

| Line 136: | Line 151: | ||

'''Evaluation''' | '''Evaluation''' | ||

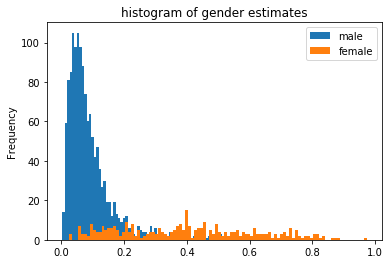

The underlying model itself has limitations | The underlying model itself has its own limitations, as can be seen in the figure of [https://raw.githubusercontent.com/wiki/yu4u/age-gender-estimation/images/loss.png validation loss]: the losses for both age and gender are still high, despite the model being state of the art. Concerning the application of the model to our data, we create a histogram of all the estimates, and the histogram for gender is strongly skewed towards male values. | ||

[[File: GenderHistogram.png|400px|right|thumb|Histogram of gender estimates]] | [[File: GenderHistogram.png|400px|right|thumb|Histogram of gender estimates]] | ||

We also see that there is a large overlap in values assigned to female and male subjects. Clearly, the model is not working well | We also see that there is a large overlap in values assigned to female and male subjects. Clearly, the model is not working well and to give exact values to each issue, consider the following confusion matrix: | ||

| Line 157: | Line 172: | ||

Here we can clearly see that the biggest issue is that the model classifies women as men. | Here we can clearly see that the biggest issue is that the model classifies women as men. | ||

Doing a qualitative analysis of the 100 images, we formulate the following hypothesis why the gender estimation model creates so many errors. | Doing a qualitative analysis of the 100 images, we formulate the following hypothesis why the gender estimation model creates so many errors. | ||

While people’s faces have not changed, these days women tend to have long flowing hair and wear more visible makeup. This is not the case in our dataset where women appear to mostly have updos and wear barely visible makeup. An additional issue might be the angle at which people posed. | While people’s faces have not changed, these days women tend to have long flowing hair and wear more visible makeup. This is not the case in our dataset where women appear to mostly have updos and wear barely visible makeup. An additional issue might be the angle at which people posed. In order to solve this problem, we would have to retrain the model on a labeled sample of our images or just retrain the last layers of the network. | ||

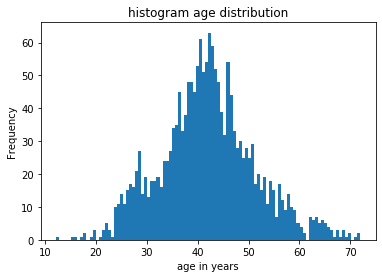

The age histogram follows a gaussian like distribution centered around 40. | The age histogram follows a gaussian like distribution centered around 40. It is much harder to judge whether the age estimates are accurate because the metadata does not tell us when each photo was taken. | ||

For this reason we just use random sampling to get a subsample of 95 images on which we do a qualitative evaluation. We annotate the samples by the age, but only giving the decade | For this reason we just use random sampling to get a subsample of 95 images on which we do a qualitative evaluation. We annotate the samples by age, but only give the decade because guessing the exact age is difficult even for humans. We established that decades are enough. We got the decade right for 72 out of 95 (76%), so we assumed that the age estimation algorithm works reasonably well. For the ones that we do miss, the difference between the estimated age and the annotated decade is less than a full decade. | ||

[[File: AgeHistogram.png|400px|right|thumb| distribution of age]] | [[File: AgeHistogram.png|400px|right|thumb| distribution of age]] | ||

=== Getting the face vector for facemap and doppelganger search === | === Getting the face vector for facemap and doppelganger search === | ||

To represent a complex object such as the image of a face in two dimensional space and examine similarities between faces we need to convert it into a vector space | To represent a complex object such as the image of a face in two dimensional space and examine similarities between faces we need to convert it into a vector space, and this can also be done using a Deep Learning model. We use OpenFace, an implementation based on the CVPR 2015 paper [https://arxiv.org/abs/1503.03832 FaceNet: A Unified Embedding for Face Recognition and Clustering by Florian Schroff, Dmitry Kalenichenko, and James Philbin at Google]. | ||

[[File:workflow.png|400px|right|thumb|Work flow proposed by openface [https://raw.githubusercontent.com/cmusatyalab/openface/master/images/summary.jpg]]] | [[File:workflow.png|400px|right|thumb|Work flow proposed by openface [https://raw.githubusercontent.com/cmusatyalab/openface/master/images/summary.jpg]]] | ||

This method first finds a bounding box and important facial landmarks, which are then used to normalize and crop the photo. This cropped photo is then fed to the neural network, which returns points lying on a 128D hypersphere. Issues can arise at each step of this process and a special property of the resulting vector is that euclidean distance in the feature space represents closeness in facial features. | |||

To find a person's doppelganger we can therefore use cosine similarity between the vectors of the people in our dataset and the vector of the person who uploads their | To find a person's doppelganger we can therefore use cosine similarity between the vectors of the people in our dataset and the vector of the person who uploads their own images. | ||
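A doppelgänger lookup of this kind can be sketched as a nearest-neighbour search by cosine similarity. For vectors on a unit hypersphere, ranking by cosine similarity gives the same order as ranking by Euclidean distance, since the squared distance equals 2 - 2·cos. The toy 3-D vectors and names below are hypothetical stand-ins for the 128-D face vectors.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_faces(query, gallery, k=3):
    """Names of the k gallery vectors most similar to the query vector."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in gallery.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Hypothetical 3-D stand-ins for the 128-D face vectors.
gallery = {
    "person-a": [1.0, 0.0, 0.0],
    "person-b": [0.9, 0.1, 0.0],
    "person-c": [0.0, 1.0, 0.0],
}
matches = closest_faces([1.0, 0.0, 0.0], gallery, k=2)
```

The cosine distance reported in the evaluation is simply one minus this similarity, so an identical vector has distance zero.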

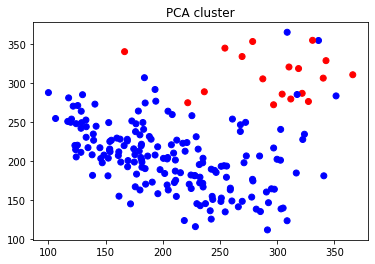

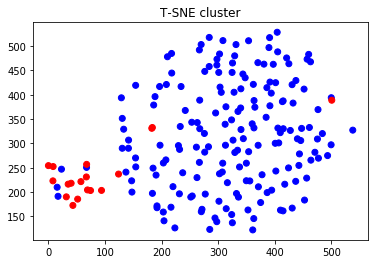

In order to visualize clusters of people who are similar, we have to go from a 128D space to a 2D space, which requires a dimensionality reduction algorithm to project the higher dimensional vectors into a lower dimensional space. There are many methods available to do this and we tried the following algorithms: the linear method PCA and the non-linear method T-SNE. | |||

'''Evaluation''' | '''Evaluation''' | ||

''Creation of face vector:'' | ''Creation of face vector:'' | ||

To estimate the quality of the resulting vector we again have the accuracy of the underlying method, and how it applies to our case. The deep learning method used to generate the vector is trained on a face classification task. On this task the model has accuracy of 0.9292 ± 0.0134 and AUC (area under curve) of 0.973 | To estimate the quality of the resulting vector we again have the accuracy of the underlying method, and how it applies to our case. The deep learning method used to generate the vector is trained on a face classification task. On this task the model has accuracy of 0.9292 ± 0.0134 and AUC (area under curve) of 0.973 (humans can achieve AUC of 0.995). The paper also discusses the application of the face vectors to clustering and highlights that the method is invariant to occlusion, lighting, pose and even age. | ||

In our task, we check whether the face is correctly detected and how well the resulting vector works for the tasks at hand - clustering and finding doppelgängers. Due to the style of photography, we can not produce a face vector as they show only their profile in the images. The models we use are not adapted to such a strong variation from frontal pictures and in total we have 71 people for which we could not find vectors. | |||

''Dimensionality reductions:'' | ''Dimensionality reductions:'' | ||

A qualitative evaluation of both reduction algorithm was done by checking the face-map clusters. | A qualitative evaluation of both reduction algorithm was done by checking the face-map clusters. | ||

We found that | We found that T-SNE better approximates gender boundaries and similarities in faces and for this reason we use T-SNE in our final implementation. | ||

[[File:cluster_1.png|400px|right|thumb| PCA cluster ]]

[[File:cluster_2.png|400px|right|thumb| T-SNE cluster ]]

''Finding your Doppelganger:''

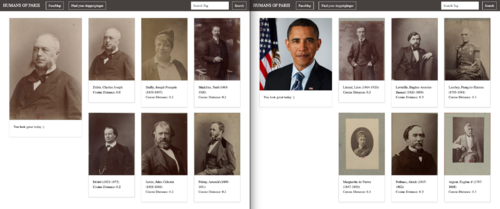

One way to evaluate the closest faces returned for a person is to inspect the cosine distances of the returned people and check whether more similar faces have lower cosine distances. Similarity is subjective in this case and was evaluated manually. Nadar's collection contains very few Black and Asian people, so we expected the faces returned for these groups to be worse matches than those returned for a white person. To test this, we collected pictures of some famous people and submitted them to the platform. The image below shows the closest faces for a person in the collection and for Barack Obama.

[[File:Evaluation doppelganger.png|500px|center|thumb|Doppelganger]]

As we can see, the cosine distance to the same person inside the collection is zero, and to the other matches it is around 0.2. For Barack Obama, on the other hand, the closest face has a distance of 0.3; the returned faces share few features with him, and one of them is even a woman. In summary, we find good, coherent matches for people who resemble those in the collection, but, as expected, the output is poor for people who do not share the same features.

=== Frontend ===

We used Adobe XD to build an initial prototype and explore the frontend design, then used Bootstrap templates to match our prototypes. We also use [https://d3js.org/ D3.js] to make the facemap interactive.

D3 (Data-Driven Documents) is a flexible JavaScript library for producing interactive data visualizations. Although it has many built-in functions, it also gives the programmer full control to build custom visualizations.


=== Backend ===

To render images and text in a web interface we use [https://code.djangoproject.com/wiki/PyPy Django 2.2.7], a powerful web framework written in Python. Combined with scripts written in JavaScript, it produces the frontend behavior that users see and interact with on the site. Django also includes its own ORM (object-relational mapping) layer, which makes it easy to store, update and query the data shown in the interface.

[[File:Humans-of-paris-data-model.png|600px|center|thumb|Datamodel used to deal with diverse granularities and data sources]]

Underneath, we use SQLite 3 to store our data; thanks to the ORM, however, the code and framework are agnostic to the storage backend.

Unfortunately, openface is only available for Python 2.7, which will be deprecated in January 2020, while our project is written in Python 3. To run both versions smoothly when a new image is uploaded, we run openface separately as another service that receives a POST request with the new image and returns the encoded vector to our main website. We forked the openface project on [https://github.com/liabifano/openface GitHub] and implemented on top of it a Flask application that accepts POST requests at the endpoint /get_vector and returns a JSON response, which our application consumes to find the doppelgänger.

== Project execution plan ==

The milestones and a draft of the project schedule are given below:

{| class="wikitable" style="margin:auto; margin:auto;"


==References==

Latest revision as of 19:10, 15 December 2019

Motivation

Our project takes inspiration from the famous Instagram page, Humans of New York, which features pictures and stories of people living in present-day New York City. In similar fashion, our project, Humans of Paris, aims to be a platform that connects us to the people of 19th-century Paris.

Photography was still in its early stages when Nadar took up the craft in his atelier in Paris. Through the thousands of pictures taken by him and his son we can get a glimpse of who lived at the time. We explore the use of deep learning models to cluster similar faces, giving an alternative, innovative view of the collection that allows serendipitous discoveries of patterns and people. There is a story behind every person, and our interface highlights this by associating people’s stories with their pictures.

Historical Background

The collection at the heart of our project comprises over 23,000 photographs (positives) and caricatures by Atelier Nadar. We focus on individual portraits of people for our interface.

The portrait studio Atelier Nadar was founded by the first Nadar, otherwise known by his birth name Felix Tournachon (1820-1910), and run by his brother Adrien and his son Paul. The first Nadar established himself as a known caricaturist after working as a journalist. Then, having been introduced to photography by his brother, Adrien, he soon realized the potential of this new art form and, with the support of a banker friend, went on to create the studio in Paris in 1854. [1]

Photography had started to become a viable business as the new collodion-on-glass negatives, invented in 1851, allowed multiple copies to be made from one negative, were cheaper than previous methods thanks to the use of glass, and required only short exposure times. [2] At the same time, in the 19th century, subtle social differences defined the world of photography. The competition between photographic studios, whose number mushroomed in the 1860s, led to a dual phenomenon. On the one hand, it resulted in specialization: none of the studios were generalists and each of them established their mastery in specific domains. On the other hand, the large portrait studios had different target audiences, making them socially identifiable.

"Tell me who took your portrait and how it was taken, and I’ll tell you who you are in society’s eyes." [3]

In this sense, Nadar’s studio was characterized by his anti-royalist leanings. The studio was frequented by people in the public eye, such as politicians, famous artists and writers of the time. Famous individuals who still have great cultural importance today, including Liszt, Victor Hugo and Baudelaire, were captured in the photos. His minimalistic, no-frills style and lack of editing allow the photos to highlight the expressions, and thus the personalities, of his subjects. Nadar himself believed that his feel for light and the way he connected with his subjects set him apart from other photographers. His talent for engaging people was especially important in the mid-19th century, as the camera was a bulky box under which the photographer would disappear. [4]

Later, starting in 1860, as Nadar himself moved on to other endeavors, his son, Paul, took over the studio. Paul moved the studio in a more commercial direction, in part due to the increasing competition. A different style of photography started to emerge from the studio, including theater photography and miniature portrait photography.

The collection held by the French state was acquired in 1949, and since 1965 the positives of the photos have been stored at the BnF, while the negatives are held at Fort de Saint-Cyr. [5]

By looking into our project, we can see the personalities that Nadar captured from the liberal bourgeoisie and how the collection changed as the Third Nadar took charge of the studio, giving an interesting representation of France during its turbulent and beautiful years.

Implementation

Website Description

In more concrete terms, our project involves four core interfaces.

- A home page highlighting the best-known individuals according to the number of pictures or the amount of text written about them in Wikipedia

- A page (FaceMap) that clusters and plots the faces of the people in the dataset, highlighting similarities and differences among them

- A page to find your 19th-century doppelgänger, for fun and to spark interest in people the user might otherwise never have known.

- A way to search using tags, to allow users to find individuals of interest.

To each person in the pictures we associate background information crawled from Wikipedia.

All the code is available on GitHub, and detailed instructions for running the application can be found in the README.md file.

Methods & Evaluation

To create our interface, we need the following data:

- names, age and gender for each individual we want to include in our interface

- the pictures corresponding to each individual

- a mapping from each picture to a given individual

- the wikipedia page of each individual (if present)

- tags for tag search

- a vector representing people's faces to power the facemap and doppelgänger search

Then we need to build a frontend and a backend to serve our interface to users. The following sections describe how we acquired and processed the needed data and set up our website. A quality assessment is given after each method description under "Evaluation".

Getting & Processing Metadata

As a first step in the processing pipeline, we use the Gallica Wrapper provided by Raphael to get a list of all the photos in the Nadar collection in Gallica. Nadar's collection contains a variety of genres: portraits, comics, caricatures, landscapes, sculptures, etc. To stick to our emphasis on ‘people of 19th century Paris’, we filtered out photographs that are not directly relevant to people of that time.

Getting individual portraits

We used the metadata of the Gallica collection to filter out irrelevant photographs. Among the attributes in the metadata, we concentrated on ‘dc:subject’. This field contains a list with detailed information about the photograph and the entity in it, in the form: [Names of individuals],(year of birth - year of death) -- [genre of the photograph]. We ignored subjects that did not contain the substring “-- Portraits”, which let us discard landscapes, comics, caricatures and sculptures. After this filter, each record had a list of subjects that varied in length, because the number of people featured in a photograph differed. Since our intention is to connect to the people of 19th-century Paris by presenting the story of each Parisian in a photograph, we filtered out the photographs that feature more than one person. To filter and gain insight into people who worked in the same field or had the same role, we created the concept of tags, which lets us access and query groups of people and is explained later.
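The filtering described above can be sketched as follows. This is a minimal illustration, not the project's actual code: it assumes each record is a dictionary whose 'dc:subject' entry has already been parsed into a list of strings, and the function name and sample records are hypothetical.

```python
def keep_single_portraits(records):
    """Keep records whose 'dc:subject' list names exactly one portrayed person.

    `records` is assumed to be a list of dicts with a 'dc:subject' key holding
    a list of strings (a hypothetical layout, not the Gallica wrapper's own).
    """
    kept = []
    for record in records:
        # Only subjects marked as portraits are relevant to the interface.
        portraits = [s for s in record.get("dc:subject", []) if "-- Portraits" in s]
        # Drop non-portraits (empty list) and group photos (more than one person).
        if len(portraits) == 1:
            kept.append({**record, "dc:subject": portraits})
    return kept


# Hypothetical sample records, for illustration only.
sample_records = [
    {"id": "a", "dc:subject": ["Hugo, Victor (1802-1885) -- Portraits"]},
    {"id": "b", "dc:subject": ["Paris (France) -- Paysages"]},
    {"id": "c", "dc:subject": ["X (1820-1890) -- Portraits", "Y (1830-1900) -- Portraits"]},
]
print([r["id"] for r in keep_single_portraits(sample_records)])
```

Only record "a" survives: "b" is not a portrait and "c" features two people.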

First attempt

The Gallica ‘dc:subject’ metadata has very brief information on each person (only name, year of birth, and year of death), which is not enough to categorise each person. Thus, in our first attempt, we tried to use the ‘dc:title’ metadata (which gives the title of each photograph) to get additional information on people. However, the titles were inconsistent in their formatting, making it hard to preprocess them and extract useful information. Moreover, most of the time the titles contained either the names of the people in the photograph or the fictional characters that the people (especially performers) played. Since this approach did not give us useful information to categorise people, we moved to a second approach, which consisted of querying the names we had to get well-structured information associated with the names and the people.

Second attempt

Data.bnf.fr is a project run by the BnF to make the data it produces more visible on the Web and to federate them within and outside its catalogues. Since Gallica is one of the BnF's projects, it is reasonable to assume that a person whose name appears in the Gallica metadata will have a document or page in the data.bnf.fr semantic web. To get the corresponding pages, we queried the metadata through a Python SPARQL API wrapper. To do so, we had to understand the XML schema returned by the API. The data.bnf XML schema has three name attributes: foaf:name, foaf:givenName, foaf:familyName. We checked how names in <dc:subject> are arranged, compared the arrangement with some names in data.bnf, rearranged the names accordingly and queried them.

Sometimes query results contained different entities with the same name. In this case, we exploited the fact that we are handling 19th-century photographs taken by Nadar: among the namesakes, we chose the one whose lifetime overlaps the most with that of Nadar.
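The namesake rule can be illustrated with a short sketch. It assumes each candidate comes with a birth and death year and uses Nadar's own lifespan (1820-1910, as given in the historical background) as the reference; the function names and the tuple layout are hypothetical.

```python
NADAR_BIRTH, NADAR_DEATH = 1820, 1910  # lifespan of the first Nadar, from the text


def lifespan_overlap(birth, death, ref_birth=NADAR_BIRTH, ref_death=NADAR_DEATH):
    """Number of years two lifespans overlap (0 if they do not overlap)."""
    return max(0, min(death, ref_death) - max(birth, ref_birth))


def pick_namesake(candidates):
    """Return the candidate whose lifespan overlaps most with Nadar's.

    `candidates` is assumed to be a list of (identifier, birth_year, death_year)
    tuples; this layout is an assumption made for the example.
    """
    return max(candidates, key=lambda c: lifespan_overlap(c[1], c[2]))
```

For example, among three namesakes living 1700-1760, 1830-1895 and 1900-1970, the second one would be chosen because it shares 65 years with Nadar's lifetime.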

Creating Tags out of metadata

The table on the data.bnf HTML page contains a variety of useful information, such as name, nationality, language, gender and a short description. We used the description and nationality to create tags. To get tags from the descriptions, we considered using the most frequent words that appear across different people (excluding stop words) as tags. The problem with this approach is that a plain word count breaks up some important keywords and entities; for example, “Legion d’honneur” is split into “legion” and “d’honneur” and the original meaning is lost. We handled this issue manually. First, we computed the word counts without stop words. Then we collected frequent words that are not related to occupations. Next, we checked the notes that contain those words and examined whether the words are part of important (and frequent) phrases. We concatenated each phrase found into a single word so that we could use it as a tag. After this step, we obtained 300 major keywords, some of which were feminine or plural forms of others. Cleaning up these semantic duplicates was also done manually; as a future improvement, this task could be accomplished with a French NLP library. Once the list of possible tags was created, we assigned tags to each picture by taking the intersection between the list of all tags and the list of words in each description.
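A rough sketch of the tag pipeline described above, using only the standard library. The stop-word list, the phrase table and the function names are illustrative assumptions; the project's actual lists were curated manually and are much larger.

```python
from collections import Counter

# Tiny illustrative lists; the real ones were built by hand (assumption).
STOP_WORDS = {"de", "la", "le", "et", "du", "des"}
PHRASES = {"légion d'honneur": "légion_d'honneur"}


def tokenize(description):
    """Lowercase, join known phrases into one token, split on whitespace."""
    text = description.lower()
    for phrase, joined in PHRASES.items():
        text = text.replace(phrase, joined)
    tokens = (word.strip(".,;:()") for word in text.split())
    return [token for token in tokens if token]


def frequent_words(descriptions, top_n):
    """Most frequent non-stop words, counting each word once per person."""
    counts = Counter(
        word
        for description in descriptions
        for word in set(tokenize(description))
        if word not in STOP_WORDS
    )
    return [word for word, _ in counts.most_common(top_n)]


def assign_tags(description, tags):
    """Tags for one description: intersection of its words with the tag list."""
    return sorted(set(tokenize(description)) & set(tags))
```

Joining phrases before splitting is what keeps an entity such as the Légion d'honneur from being broken into two unrelated words.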

Using Wikipedia as criteria of importance

Data.bnf contains useful information on each person, but it is hard to infer their historical influence just by looking at the table. To circumvent this limitation, we used Wikipedia as a criterion to define people’s ‘importance’: the more images on their page, the more important they are. We used the Wikipedia API library that is available on PyPI, and each request is made using this API. The response to a query containing a person's name includes a Wikipedia page, the number of images and the reference links connected with this person. We turned on the auto-suggestion mode of the library to be able to find more than just exact matches. Since this can lead to numerous false positives, we verified whether the content of each page contains the name and the birth/death year of the person. If the content of the Wikipedia page did not sufficiently overlap with what we would expect, we considered it a false positive and ignored the result. We use the number of images on each Wikipedia page to define the ‘weight’ of a person, i.e. how important they are. We queried every record in both English and French Wikipedia in order to represent importance outside francophone culture as well. The final weight (or importance) is the average of the two.
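The false-positive check and the weight can be sketched as below. This is an illustration under assumptions: the check here requires the name and both years to appear in the page text, which is one possible reading of "sufficiently overlapping", and a missing or rejected page is counted as zero images. Neither choice is confirmed by the text, and the function names are hypothetical.

```python
def looks_like_same_person(page_text, name, birth_year, death_year):
    """Accept a page only if it mentions the name and both years (assumed rule)."""
    text = page_text.lower()
    return (
        name.lower() in text
        and str(birth_year) in text
        and str(death_year) in text
    )


def importance(images_en, images_fr):
    """Average of the image counts on the English and French pages.

    A missing or rejected page is passed as None and counted as 0 images
    (an assumption made for this sketch).
    """
    return ((images_en or 0) + (images_fr or 0)) / 2
```

Averaging over both language editions keeps a person who is well documented only in French Wikipedia from being ranked purely by francophone coverage.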

Evaluation

To check how well the metadata is processed, we randomly sampled 50 records each for the data.bnf.fr pages, English Wikipedia, and French Wikipedia. A record is considered correct if 1) the name, 2) the face and 3) the birth/death year match.

| Metadata | Positive Accuracy (%) | Negative Accuracy (%) |

|---|---|---|

| Data.bnf.fr page | 92 | -- |

| English Wikipedia | 100 | 80 |

| French Wikipedia | 98 | 80 |

Mismatches in data.bnf.fr occurred for people who have namesakes (people bearing the identical name) in the database and for people whose name or birth/death year in the <dc:subject> attribute is ambiguous. Positive results in English Wikipedia showed surprisingly good accuracy in this sample. Mismatches in French Wikipedia occurred for queries that have no Wikipedia page, in which case the auto-suggestion algorithm redirected us to an unrelated page. In contrast, the error for negative Wikipedia queries is around 20%. This was due to either 1) the Wikipedia API auto-suggestion being incomplete and therefore returning nothing even though a result existed, or 2) the queried person being so well known that the auto-suggested query returned the list of the person's works rather than the page on the person. Overall, errors in metadata processing occurred for people with limited information or records and because of the incompleteness of the Wikipedia API. Although the result is fair, one way to improve it would be to use the 'external reference' stored in data.bnf, which contains Wikidata and Wikipedia links.

Image processing and cropping

To give users a better aesthetic experience on our webpage, we decided to crop away the background on which each photograph is mounted. The cropping is based on edge detection; we based our code on https://github.com/polm/ndl-crop but modified it to work with sepia photographs on blue backgrounds. We added a preprocessing step that sets the blue background, identified by its RGB values, to white. The code then uses image processing techniques provided by OpenCV to find the contours in the image, from which we infer boundaries; setting the blue background to white gives better contour detection. Finally, using heuristics about the size of the photo relative to the background, we choose the most appropriate boundary.
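The blue-to-white preprocessing step can be illustrated without any image library. The sketch below works on a nested list of RGB tuples and treats a pixel as background when its blue channel clearly dominates; the rule and the `margin` threshold are assumptions chosen for the example, and the project's actual code operates on OpenCV images with its own values.

```python
WHITE = (255, 255, 255)


def is_bluish(pixel, margin=30):
    """True if blue exceeds both red and green by `margin` (assumed rule)."""
    red, green, blue = pixel
    return blue > red + margin and blue > green + margin


def whiten_blue_background(pixels, margin=30):
    """Return a copy of the image with bluish pixels replaced by white.

    `pixels` is a list of rows, each row a list of (R, G, B) tuples.
    """
    return [
        [WHITE if is_bluish(pixel, margin) else pixel for pixel in row]
        for row in pixels
    ]
```

Sepia tones have more red than blue, so the photograph itself is left untouched while the mount turns white, which makes the photograph's outline easier for contour detection to find.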

Cropping the face is done using the facial rectangle returned by the py-agender API (see below) and MTCNN (https://github.com/ipazc/mtcnn), which is another Python library implementing a deep neural network as proposed by FaceNet (also used to find the facial vectors, see openface). Py-agender was much quicker, so we use it for the initial cropping.

Evaluation

To check how well the background is cropped, we randomly sampled 100 images and checked the cropping: 91% of the images are correctly cropped. The incorrectly cropped images contain some border but still include the face, and none of them is overcropped. We might do better by tuning the assumptions we make when choosing the most appropriate boundary, as we currently miss very large and very small pictures; for the current stage of the project, however, we find this accuracy sufficient.

Looking at the face cropping done with py-agender, we find a total of 52 incorrectly cropped images. In addition, we find 71 images where no face was found even though one is present. We obtained these numbers by looking through all 1874 cropped images. We rectify the badly cropped images using the MTCNN library, which correctly identifies the cropped images that do not contain a face and correctly crops them given the original image. In the end we are left with just 5 incorrectly cropped portraits, all of them very washed-out and damaged photos, which we replaced with manually cropped faces. The accuracy of the method could be improved by re-training each model on our data to better match our use case.

Age and Gender

The data from the Gallica API wrapper contains neither the gender nor the age of the person pictured at the time the photograph was taken. Therefore we had to use other methods to guess the age and gender of the individual in the image. We later found that data.bnf has ground-truth gender labels for many individuals in the database, so we used these labels to evaluate the estimated gender and used the ground-truth labels in our final product. Given the data at hand, we could use the name to guess the gender. However, a quick survey of available methods for detecting gender from names shows that no database adapted to classifying 19th-century French names exists. Not having the means to create such a database from scratch, we focus on the photos and the faces in them. Detecting age and gender from photos has long been a topic of deep learning research, and many pre-trained state-of-the-art models trained on modern data exist. People presumably have not changed much in 100 years, so we can expect these methods to work well.

We use the Python library py-agender, which implements a state-of-the-art model proposed by Rasmus Rothe et al. and was trained on the IMDB-Wiki dataset; the implementation can be found here.

The library endpoint returns a list of dictionary objects containing:

- the facial rectangle

- a gender estimate as a value in [0, 1]; 1 indicating the individual is male, 0 indicating the individual is female

- an age estimate, as a floating point number.

We get estimates for all individuals in the dataset, based on one image. We first try for one random image per individual, and if the model can not detect a face, we try another image, until we get a result or no more images are left.
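The retry strategy can be sketched as follows. The `detect` argument is a placeholder for the call into the face model: it is assumed to take an image path and return a list of detections, empty when no face is found. Its name and signature are assumptions, not the py-agender API.

```python
import random


def estimate_for_individual(image_paths, detect, rng=random):
    """Try an individual's images in random order until a face is detected.

    Returns (path, first_detection) for the first image with a face, or None
    if no image of this individual yields a detection.
    """
    candidates = list(image_paths)
    rng.shuffle(candidates)  # start from a random image, then fall back
    for path in candidates:
        detections = detect(path)
        if detections:
            return path, detections[0]
    return None
```

Passing the detector in as an argument keeps the loop independent of the model, so the same fallback logic can be reused with a different face detector.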

Evaluation

The underlying model has its own limitations: as can be seen in the figure of the validation loss, the losses for both age and gender are still high, despite the model being state of the art. To see how the model applies to our data, we created histograms of all the estimates; the histogram for gender is strongly skewed towards male values.

We also see a large overlap in the values assigned to female and male subjects. Clearly, the model is not working well; to quantify this, consider the following confusion matrix:

| | True Male | True Female |

|---|---|---|

| Labeled Male | 1393 | 114 |

| Labeled Female | 237 | 130 |

Here we can clearly see that the biggest issue is that the model classifies women as men. After a qualitative analysis of 100 images, we formulated the following hypothesis for why the gender estimation model makes so many errors. While people’s faces have not changed, women today tend to have long flowing hair and wear more visible makeup. This is not the case in our dataset, where women mostly wear updos and barely visible makeup. An additional issue might be the angle at which people posed. To solve this problem, we would have to retrain the model, or just the last layers of the network, on a labeled sample of our images.
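The confusion matrix above can be turned into per-class rates with a few lines of arithmetic. The counts are taken from the table; the variable names are chosen for this example.

```python
# Counts from the confusion matrix: rows are model labels, columns are ground truth.
male_as_male, female_as_male = 1393, 114      # labeled male
male_as_female, female_as_female = 237, 130   # labeled female

total = male_as_male + female_as_male + male_as_female + female_as_female
accuracy = (male_as_male + female_as_female) / total

# Recall: share of each true class that the model labels correctly.
male_recall = male_as_male / (male_as_male + male_as_female)
female_recall = female_as_female / (female_as_female + female_as_male)

print(f"images:        {total}")
print(f"accuracy:      {accuracy:.3f}")
print(f"male recall:   {male_recall:.3f}")
print(f"female recall: {female_recall:.3f}")
```

The total matches the 1874 images in the dataset. Overall accuracy is about 0.81, but recall is about 0.85 for men and only about 0.53 for women, so nearly half of the women are labeled male.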

The age histogram follows a Gaussian-like distribution centered around 40. It is much harder to tell whether this is accurate, because the metadata gives no information on when each photo was taken. For this reason we randomly sampled 95 images for a qualitative evaluation. We annotated the samples with the age, giving only the decade, because guessing an exact age is difficult even for humans. The estimated decade was correct for 72 out of 95 images (76%), so we conclude that the age estimation algorithm works reasonably well. For the images where the decade is wrong, the difference between the estimated age and the annotated decade is less than a full decade.

Getting the face vector for facemap and doppelganger search

To represent a complex object such as the image of a face in two-dimensional space and examine similarities between faces, we first need to convert it into a vector, which can also be done using a deep learning model. We use openface, an implementation based on the CVPR 2015 paper FaceNet: A Unified Embedding for Face Recognition and Clustering by Florian Schroff, Dmitry Kalenichenko, and James Philbin at Google.

This method first finds a bounding box and important facial landmarks, which are then used to normalize and crop the photo. The cropped photo is then fed to the neural network, which maps it to a point on a 128-dimensional hypersphere. Issues can arise at each step of this process. A special property of the resulting vector is that Euclidean distance in the feature space reflects closeness in facial features.

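To find a person's doppelgänger we can therefore compare the vectors of the people in our dataset with the vector of the image a user uploads, using cosine similarity. Because all vectors lie on the same hypersphere, ranking by cosine similarity gives the same order as ranking by Euclidean distance.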
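A minimal sketch of the doppelgänger lookup using only the standard library. It assumes the collection is available as a dictionary from a person's name to their face vector; the function names and the toy 3-dimensional vectors in the usage example are illustrative, whereas the real vectors have 128 dimensions.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def closest_faces(query, collection, k=3):
    """Return the k (name, distance) pairs closest to `query`.

    `collection` is assumed to map a person's name to their face vector.
    """
    scored = [(name, cosine_distance(query, vec)) for name, vec in collection.items()]
    return sorted(scored, key=lambda item: item[1])[:k]


# Toy example with 3-dimensional vectors.
toy_collection = {"a": [1.0, 0.0, 0.0], "b": [0.6, 0.8, 0.0], "c": [0.0, 0.0, 1.0]}
print(closest_faces([1.0, 0.0, 0.0], toy_collection, k=2))
```

A distance of zero means the query vector points in exactly the same direction as a stored one, which is what happens when a picture from the collection itself is submitted.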

To visualize clusters of similar people we have to go from the 128D space to 2D, which requires a dimensionality reduction algorithm that projects the high-dimensional vectors into a lower-dimensional space. Many methods are available, and we tried two of them: the linear method PCA and the non-linear method T-SNE.

Evaluation

Creation of face vector: To estimate the quality of the resulting vectors we again consider both the accuracy of the underlying method and how well it applies to our case. The deep learning model used to generate the vectors is trained on a face classification task, on which it reaches an accuracy of 0.9292 ± 0.0134 and an AUC (area under the curve) of 0.973 (humans can achieve an AUC of 0.995). The paper also discusses the application of the face vectors to clustering and highlights that the method is invariant to occlusion, lighting, pose and even age.

In our task, we check whether the face is correctly detected and how well the resulting vector works for the tasks at hand: clustering and finding doppelgängers. Due to the style of photography, some people are shown only in profile, and for them we cannot produce a face vector. The models we use are not adapted to such a strong deviation from frontal pictures; in total there are 71 people for whom we could not compute vectors.

Dimensionality reductions:

A qualitative evaluation of both reduction algorithms was done by inspecting the face-map clusters.

We found that T-SNE better approximates gender boundaries and similarities in faces, and for this reason we use T-SNE in our final implementation.

Finding your Doppelganger: One way to evaluate the closest faces returned for a person is to inspect the cosine distances of the returned people and check whether more similar faces have lower cosine distances. Similarity is subjective in this case and was evaluated manually. Nadar's collection contains very few Black and Asian people, so we expected the faces returned for these groups to be worse matches than those returned for a white person. To test this, we collected pictures of some famous people and submitted them to the platform. The image below shows the closest faces for a person in the collection and for Barack Obama.

As we can see, the cosine distance to the same person inside the collection is zero, and to the other matches it is around 0.2. For Barack Obama, on the other hand, the closest face has a distance of 0.3; the returned faces share few features with him, and one of them is even a woman. In summary, we find good, coherent matches for people who resemble those in the collection, but, as expected, the output is poor for people who do not share the same features.

Frontend

We used Adobe XD to build an initial prototype and explore the frontend design, then used Bootstrap templates to match our prototypes. We also use D3.js to make the facemap interactive.

D3 (Data-Driven Documents) is a flexible JavaScript library for producing interactive data visualizations. Although it has many built-in functions, it also gives the programmer full control to build custom visualizations. To get better zooming behavior for the images in D3, we used the T-SNE visualization, which uses perplexity to calculate the distances between images based on the predefined clusters (see the discussion of clustering).

Backend

To render images and text in a web interface we use Django 2.2.7, a powerful web framework written in Python. Combined with scripts written in JavaScript, it produces the frontend behavior that users see and interact with on the site. Django also includes its own ORM (object-relational mapping) layer, which makes it easy to store, update and query the data shown in the interface.

Underneath, we use SQLite 3 to store our data; thanks to the ORM, however, the code and framework are agnostic to the storage backend.

Unfortunately, openface is only available for Python 2.7, which will be deprecated in January 2020, while our project is written in Python 3. To run both versions smoothly when a new image is uploaded, we run openface separately as another service that receives a POST request with the new image and returns the encoded vector to our main website. We forked the openface project on GitHub and implemented on top of it a Flask application that accepts POST requests at the endpoint /get_vector and returns a JSON response, which our application consumes to find the doppelgänger.
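From the website's side, the call to the separate openface service could look roughly like the sketch below, written with the Python 3 standard library. The service URL, the raw-bytes request body and the "vector" key in the JSON response are assumptions made for illustration; the text only states that the service accepts a POST request with an image and returns JSON.

```python
import json
import urllib.request


def build_vector_request(service_url, image_bytes):
    """Build the POST request that sends an uploaded image to the face service."""
    return urllib.request.Request(
        service_url,
        data=image_bytes,
        headers={"Content-Type": "application/octet-stream"},  # assumed format
        method="POST",
    )


def parse_vector_response(body):
    """Extract the face vector from the JSON body (the 'vector' key is assumed)."""
    return [float(value) for value in json.loads(body)["vector"]]


def fetch_face_vector(service_url, image_bytes, timeout=10):
    """Send the image to the service and return the face vector it computes."""
    request = build_vector_request(service_url, image_bytes)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_vector_response(response.read())
```

A call such as `fetch_face_vector("http://localhost:5000/get_vector", image_bytes)` (hypothetical host and port) would return the vector that is then compared against the stored vectors. Keeping openface behind HTTP means the Python 3 website never imports Python 2.7 code.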

Project execution plan

The milestones and a draft of the project schedule are given below:

| Timeframe | Task | Completion |
|---|---|---|
| Week 4 (07.10) | Understanding Gallica; Query Gallica API | ✓ |
| Week 5 (14.10) | Start preprocessing images; Choose suitable Wikipedia API | ✓ |
| Week 6 (21.10) | Choose face recognition library; Get facial vectors; Try database design with Docker & Django | ✓ |
| Week 7 (28.10) | Remove irrelevant backgrounds of images; Extract age and gender from images; Design data model; Extract tags, names, birth and death years out of metadata | ✓ |
| Week 8 (04.11) | Set up database environment; Set up mockup user-interface; Prepare midterm presentation | ✓ |
| Week 9 (11.11) | Get tags, names, birth and death years in ready-to-use format; Handle Wikipedia false positives; Integrate face recognition functionalities into database | ✓ |
| Week 10 (18.11) | Create draft of the website (frontend); Create FaceMap using D3 | ✓ |
| Week 11 (25.11) | Integrate all functionalities; Finalize project website | ✓ |
| Week 12 (02.12) | Write Project report | ✓ |

References

- [1] MetMuseum: https://www.metmuseum.org/toah/hd/nadr/hd_nadr.htm November 2019

- [2] MetMuseum: https://www.metmuseum.org/toah/hd/nadr/hd_nadr.htm November 2019

- [3] http://expositions.bnf.fr/les-nadar/en/the-art-of-the-portrait.html

- [4] https://www.theguardian.com/books/2015/dec/23/books-felix-nadar-france-photography-flight

- [5] https://gallica.bnf.fr/html/und/images/acces-par-collection?mode=desktop