Love2Late: Difference between revisions

Todor.manev (talk | contribs) (Creation of the page) |

Todor.manev (talk | contribs) mNo edit summary |

||

| (14 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

=Project Description= | =Project Description= | ||

The goal of | The goal of Love2Late is to collect data from matrimonial ads from the beginning of the 20th century and to find matches between people. Features of candidates are automatically extracted from the text and compatibility scores are computed based on their similarities. The website presenting the results is structured in the following way: | ||

* | * the user can select certain criteria in a search form and find their own match from the dataset | ||

* | * once the result is generated, the information about the publication is presented (date, title) as well as the match that was assigned to the corresponding person. | ||

Considering that all the publications are anonymous, the results of a search will be presented as extracts from the actual pages of the journals the corresponding ads came from | Considering that all the publications are anonymous, the results of a search will be presented as extracts from the actual pages of the journals the corresponding ads came from. | ||

=Planning= | =Planning= | ||

{|class="wikitable" | |||

! style="text-align:center;"|Step | |||

! Things to do | |||

! Week | |||

|- | |||

|1 | |||

|Classification of information by category | |||

|10-11 | |||

|- | |||

|2 | |||

|Clustering in category | |||

|11 | |||

|- | |||

|3 | |||

|Implement metric and compute matches | |||

|11-12 | |||

|- | |||

|4 | |||

|Data analysis | |||

|13 | |||

|- | |||

|5 | |||

|Data visualization | |||

|13 | |||

|- | |||

|} | |||

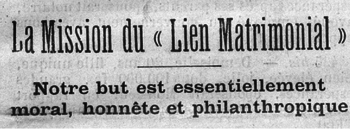

[[File:Fdh.png|350px|right|thumb| ''Le Lien du Mariage'', defending its legitimacy ]] | |||

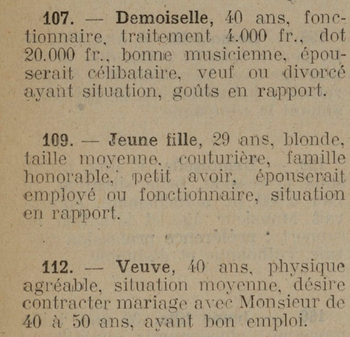

[[File:Fdh1.png|350px|right|thumb| Typical ads from ''L'intermédiaire'']] | |||

=Historical Source= | |||

The project was based on the issues available on Gallica of four periodicals containing matrimonial ads from the late 19th and early 20th centrury: ''Le trait d’union des intérêts réciproques, Le Lien du Mariage, Revue Mensuelle and L’intermédiaire''. The journals contain ads written by people looking for a partner in which they generally describe themselves and what they are looking for. The content of the publications quite often suggests that the main motivation for their authors was more economical than romantic. Other than the paid announcements, every issue contains some articles, defending the legitimacy of its purpose. This may lead us to believe that such method of union was unconventional at the time. | |||

The format of the ads is quite uniform. People generally use short, sometimes nominal sentences, keeping their descriptions concise. There is a lot of redundancy in the choice of words between ads with very few outliers. Some characteristics are almost always presented in the same way, as if they were following a precise convention. | |||

=Methodology= | |||

==Cleaning | ==Cleaning data== | ||

After downloading the set of images for each journal using GalicaWrapper, we used Transkribus for OCR, giving as output an XML file for every page, containing the text and the metadata (positions of elements and their reading order). | |||

From these files we extracted a dataset for each journal, creating a pandas data frame. Every row represents a paragraph and its attributes are the text, the image file it comes from, its coordinates on the page, as well as the metadata for the corresponding periodical issue (its title and date of publication). | |||

After the extraction of this data, a chain of filtering was applied, mainly on the text, in order to obtain a set of ads that fit a standard that was defined in order to easily extract and structure useful information. | |||

The first part consisted of distinguishing the ads from all other text. The method was based on a couple of simple observations such as the facts that the text regions always have the same width, they are never in the margins and depending on the journal, they either start with a number (ads are obviously anonymized), bold text, or indentation. The standardized shape and positioning of the ads also allowed for the implementation of an algorithm identifying paragraphs sliced in two (either at the end of a page or a column or because of an error in the OCR). | |||

The second stage of filtering gets rid of all ads that do not include in their text a word that marks the separation between the self-description and what the person is looking for. The list of those separator words was defined by looking at a sample of the data, and noting the ones commonly used. The conventions people used for this type of publications renders the list quite short. It is entirely comprised of variations of the words cherche, épouserait, desire. | |||

Once the description of the person is clearly defined, their sex can be identified. To do so we made two lists of frequently appearing words and groups of words, each gender specific. For every ad we counted the number of terms contained in each list from the two subsections. If opposing categories were predominant, the gender of the person was deduced. If the results were ambiguous, the paragraph was discarded to avoid any error. | |||

# | After that, we filter the paragraphs in which the age of the author cannot be extracted. As this is arguably the most important parameter for those searching for a union, it is always present. Moreover, as expected, the value is always two-digit and is followed by the word ans. Whenever this is not the case due to inaccurate OCR, the paragraph is discarded. | ||

The last step of filtering was to get rid of duplicates. We observed that the same announcement often appeared more than once, sometimes up to ten times. This is probably the case because of subscriptions (people paying to have their ad published in every issue). The imperfections of the OCR did not allow for the direct removal of the duplicates because they have small differences. In order to define which ads were the same, we calculated the Levenshtein distance between them (the minimum number of changes to transform one string of characters into another). Defining a threshold of 5%, we only kept one copy of each ad. | |||

In addition to those steps, the text was further cleaned by removing unnecessary characters and correcting the most frequently appearing OCR errors. | |||

==Features extraction and matching algorithm== | |||

Once the database of well-defined unique ads was completed, we extracted a sample of it that can be covered manually in order to extract key words. Because of the repetition of some standard words, not related to personal characteristics, as well as various formulations having the same meaning, this operation could not be done automatically. However, by reading a small portion of the dataset, we were able to cluster a number of words and phrases into more general qualities that we modeled as lists of tags, either describing the person or defining their interests. The process was repeated for the profession of the person and their social status. | |||

As a result, a large portion of all words appearing in the ads could be associated with tags characterizing the person. This gives us a database containing the following key elements: | |||

*gender | |||

*age | |||

*age slot of the desired partner (if specified) | |||

*tags grouped as conditions – if present in one ad, they have to appear in the other one in order for a match to be possible. | |||

*tags grouped as qualities and interests – their presence in both ads (or the pair description – searched for) enhances the compatibility and thus makes a match more probable. Professions fall under this category as well. | |||

One distinctive attribute that we devised was rapport which is a key word that appeared in many of the ads. This tag can take the values age and situation and indicates that a close similarity between the age group or the social status of the two people is a necessary condition. | |||

==Match algorithm== | |||

In order to find the best match for each person, we developed a score system attributing different weights to the tags. We store these values in a matrix with all men as rows and women as columns. The slice of the data frame that requires score calculation is first filtered for every person based on their age and the mandatory conditions. Subsequently the mutual tags are awarded points. | |||

At the end of the process our matrix contains the scores of compatibility between any two people. In order to form the matches, we tried two different algorithms: | |||

#The first one maximizes the number of matches. Given that the women in the database are less than the men, we start by finding the best match for the woman having the least number of possibilities. Then we discard the corresponding row and column and we do the same until all matches have been formed. | |||

#The second maximizes the quality of the matches. At each iteration the maximum value in the matrix is found and the corresponding match is made. The algorithm stops when all the remaining values are zeros. | |||

=Quality assessment= | |||

==Overall pipeline== | |||

We were able to create an efficient pipeline for extracting the useful information from the digitized periodicals. Although at every step not having any false positives was a priority and we thus discarded ambiguous results, the loss rate was fairly low. Although the percentage of correctly extracted ads cannot be calculated precisely because we do not know how many duplicates and non-ads the discarded paragraphs contained, we can roughly estimate that our success rate is around 60%. | |||

==Basic features extraction== | |||

As mentioned above, some of the attributes we were interested in, such as age, gender or social situation, were present in all of the ads. However, extracting them was not an obvious task because of erroneous OCR. Nevertheless, we were able to fix a reasonable amount of the inaccuracies and extract the basic features for the majority of the dataset. Although the loss is not negligible, it would be fair to say that it did not have a significant impact on the overall quality of the project. | |||

==Efficiency of algorithms== | |||

Given that some of the calculations required comparison of each pair of elements (namely the duplicates removal and the score calculation), it was important to optimize the algorithms in order to be able to run them several times and make adjustments based on the results. We were able to implement some time-saving methods and calculation cost was never a problem during this project. For more details, refer to the project repository. | |||

==Results== | |||

Because of the nature of the results we find (matches between people), there is no easy way to objectively assess the quality of the outcome. The weights we have attributed to different criteria are somewhat arbitrary and thus the quality of any match could be argued. The success or failure of the algorithm can only be perceived when some necessary conditions are not met, which is sometimes the case. Occasionally some crucial features are not detected and translated to tags which could lead to some obvious contradictions. Improvement wouldn’t be easy because of the multitude of ways any given condition can be phrased and could only be achieved with more manual work. Furthermore, conditions are sometimes ambiguous, even when read by a human. On a couple of occasions, we had to transform conditions into quality tags in order to not make the algorithm too restrictive and to obtain a good number of pairs. Once again, it is difficult to evaluate the impact of such decision and their enhancement could be the subject of future discussions. | |||

=Website and visualization= | |||

In order to visualize the results, we created a simple website containing a single search form. The user can choose between certain features, equivalent to the tags we have created. Once the form is submitted, a simplified version of the match making algorithm calculates the score between the user and the ads and returns the most suitable match. The picture of the ad is displayed along with the information and the title page of the issue it appeared in. The ad of the match for the person is displayed as well at the end of the page. | |||

The idea behind the website is to give the user an idea of what people published in the matrimonial ads journals as well as a glimpse of the results of our match-making algorithm. We were careful not to allow the user to be too selective and not to make the algorithm too restrictive in order to keep it possible to access any ad with some search parameters. The conditions we used to make the computation are not stated, leaving to the user the possibility to interpret the results. | |||

= Links = | |||

* [http://love2late.pythonanywhere.com/ Project website] | |||

* [https://github.com/todormanev956/love2late Github repository] | |||

=== Gallica Journals:=== | |||

* [https://gallica.bnf.fr/services/engine/search/sru?operation=searchRetrieve&version=1.2&collapsing=disabled&query=%28dc.title%20all%20%22mariage%22%20or%20text%20all%20%22mariage%22%20%29%20%20and%20%28dc.type%20all%20%22fascicule%22%29%20and%20arkPress%20all%20%22cb32879388q_date%22&rk=150215;2 Le Trait d'Union des Intérêts Réciproques] | |||

* [https://gallica.bnf.fr/services/engine/search/sru?operation=searchRetrieve&version=1.2&collapsing=disabled&query=%28dc.title%20all%20%22mariage%22%20or%20text%20all%20%22mariage%22%20%29%20%20and%20%28dc.type%20all%20%22fascicule%22%29%20and%20arkPress%20all%20%22cb32807513f_date%22&rk=85837;2 Le Lien du Mariage] | |||

* [https://gallica.bnf.fr/ark:/12148/cb328605835/date.r= Revue Mensuelle] | |||

* [https://gallica.bnf.fr/ark:/12148/cb327932647/date.r= L'Intermédiaire] | |||

Latest revision as of 17:29, 17 December 2019

Project Description

The goal of Love2Late is to collect data from matrimonial ads from the beginning of the 20th century and to find matches between people. Features of candidates are automatically extracted from the text and compatibility scores are computed based on their similarities. The website presenting the results is structured in the following way:

- the user can select certain criteria in a search form and find their own match from the dataset

- once the result is generated, the information about the publication is presented (date, title) as well as the match that was assigned to the corresponding person.

Considering that all the publications are anonymous, the results of a search will be presented as extracts from the actual pages of the journals the corresponding ads came from.

Planning

| Step | Things to do | Week |

|---|---|---|

| 1 | Classification of information by category | 10-11 |

| 2 | Clustering in category | 11 |

| 3 | Implement metric and compute matches | 11-12 |

| 4 | Data analysis | 13 |

| 5 | Data visualization | 13 |

Historical Source

The project was based on the issues available on Gallica of four periodicals containing matrimonial ads from the late 19th and early 20th centrury: Le trait d’union des intérêts réciproques, Le Lien du Mariage, Revue Mensuelle and L’intermédiaire. The journals contain ads written by people looking for a partner in which they generally describe themselves and what they are looking for. The content of the publications quite often suggests that the main motivation for their authors was more economical than romantic. Other than the paid announcements, every issue contains some articles, defending the legitimacy of its purpose. This may lead us to believe that such method of union was unconventional at the time.

The format of the ads is quite uniform. People generally use short, sometimes nominal sentences, keeping their descriptions concise. There is a lot of redundancy in the choice of words between ads with very few outliers. Some characteristics are almost always presented in the same way, as if they were following a precise convention.

Methodology

Cleaning data

After downloading the set of images for each journal using GalicaWrapper, we used Transkribus for OCR, giving as output an XML file for every page, containing the text and the metadata (positions of elements and their reading order).

From these files we extracted a dataset for each journal, creating a pandas data frame. Every row represents a paragraph and its attributes are the text, the image file it comes from, its coordinates on the page, as well as the metadata for the corresponding periodical issue (its title and date of publication).

After the extraction of this data, a chain of filtering was applied, mainly on the text, in order to obtain a set of ads that fit a standard that was defined in order to easily extract and structure useful information.

The first part consisted of distinguishing the ads from all other text. The method was based on a couple of simple observations such as the facts that the text regions always have the same width, they are never in the margins and depending on the journal, they either start with a number (ads are obviously anonymized), bold text, or indentation. The standardized shape and positioning of the ads also allowed for the implementation of an algorithm identifying paragraphs sliced in two (either at the end of a page or a column or because of an error in the OCR).

The second stage of filtering gets rid of all ads that do not include in their text a word that marks the separation between the self-description and what the person is looking for. The list of those separator words was defined by looking at a sample of the data, and noting the ones commonly used. The conventions people used for this type of publications renders the list quite short. It is entirely comprised of variations of the words cherche, épouserait, desire. Once the description of the person is clearly defined, their sex can be identified. To do so we made two lists of frequently appearing words and groups of words, each gender specific. For every ad we counted the number of terms contained in each list from the two subsections. If opposing categories were predominant, the gender of the person was deduced. If the results were ambiguous, the paragraph was discarded to avoid any error.

After that, we filter the paragraphs in which the age of the author cannot be extracted. As this is arguably the most important parameter for those searching for a union, it is always present. Moreover, as expected, the value is always two-digit and is followed by the word ans. Whenever this is not the case due to inaccurate OCR, the paragraph is discarded.

The last step of filtering was to get rid of duplicates. We observed that the same announcement often appeared more than once, sometimes up to ten times. This is probably the case because of subscriptions (people paying to have their ad published in every issue). The imperfections of the OCR did not allow for the direct removal of the duplicates because they have small differences. In order to define which ads were the same, we calculated the Levenshtein distance between them (the minimum number of changes to transform one string of characters into another). Defining a threshold of 5%, we only kept one copy of each ad.

In addition to those steps, the text was further cleaned by removing unnecessary characters and correcting the most frequently appearing OCR errors.

Features extraction and matching algorithm

Once the database of well-defined unique ads was completed, we extracted a sample of it that can be covered manually in order to extract key words. Because of the repetition of some standard words, not related to personal characteristics, as well as various formulations having the same meaning, this operation could not be done automatically. However, by reading a small portion of the dataset, we were able to cluster a number of words and phrases into more general qualities that we modeled as lists of tags, either describing the person or defining their interests. The process was repeated for the profession of the person and their social status.

As a result, a large portion of all words appearing in the ads could be associated with tags characterizing the person. This gives us a database containing the following key elements:

- gender

- age

- age slot of the desired partner (if specified)

- tags grouped as conditions – if present in one ad, they have to appear in the other one in order for a match to be possible.

- tags grouped as qualities and interests – their presence in both ads (or the pair description – searched for) enhances the compatibility and thus makes a match more probable. Professions fall under this category as well.

One distinctive attribute that we devised was rapport which is a key word that appeared in many of the ads. This tag can take the values age and situation and indicates that a close similarity between the age group or the social status of the two people is a necessary condition.

Match algorithm

In order to find the best match for each person, we developed a score system attributing different weights to the tags. We store these values in a matrix with all men as rows and women as columns. The slice of the data frame that requires score calculation is first filtered for every person based on their age and the mandatory conditions. Subsequently the mutual tags are awarded points. At the end of the process our matrix contains the scores of compatibility between any two people. In order to form the matches, we tried two different algorithms:

- The first one maximizes the number of matches. Given that the women in the database are less than the men, we start by finding the best match for the woman having the least number of possibilities. Then we discard the corresponding row and column and we do the same until all matches have been formed.

- The second maximizes the quality of the matches. At each iteration the maximum value in the matrix is found and the corresponding match is made. The algorithm stops when all the remaining values are zeros.

Quality assessment

Overall pipeline

We were able to create an efficient pipeline for extracting the useful information from the digitized periodicals. Although at every step not having any false positives was a priority and we thus discarded ambiguous results, the loss rate was fairly low. Although the percentage of correctly extracted ads cannot be calculated precisely because we do not know how many duplicates and non-ads the discarded paragraphs contained, we can roughly estimate that our success rate is around 60%.

Basic features extraction

As mentioned above, some of the attributes we were interested in, such as age, gender or social situation, were present in all of the ads. However, extracting them was not an obvious task because of erroneous OCR. Nevertheless, we were able to fix a reasonable amount of the inaccuracies and extract the basic features for the majority of the dataset. Although the loss is not negligible, it would be fair to say that it did not have a significant impact on the overall quality of the project.

Efficiency of algorithms

Given that some of the calculations required comparison of each pair of elements (namely the duplicates removal and the score calculation), it was important to optimize the algorithms in order to be able to run them several times and make adjustments based on the results. We were able to implement some time-saving methods and calculation cost was never a problem during this project. For more details, refer to the project repository.

Results

Because of the nature of the results we find (matches between people), there is no easy way to objectively assess the quality of the outcome. The weights we have attributed to different criteria are somewhat arbitrary and thus the quality of any match could be argued. The success or failure of the algorithm can only be perceived when some necessary conditions are not met, which is sometimes the case. Occasionally some crucial features are not detected and translated to tags which could lead to some obvious contradictions. Improvement wouldn’t be easy because of the multitude of ways any given condition can be phrased and could only be achieved with more manual work. Furthermore, conditions are sometimes ambiguous, even when read by a human. On a couple of occasions, we had to transform conditions into quality tags in order to not make the algorithm too restrictive and to obtain a good number of pairs. Once again, it is difficult to evaluate the impact of such decision and their enhancement could be the subject of future discussions.

Website and visualization

In order to visualize the results, we created a simple website containing a single search form. The user can choose between certain features, equivalent to the tags we have created. Once the form is submitted, a simplified version of the match making algorithm calculates the score between the user and the ads and returns the most suitable match. The picture of the ad is displayed along with the information and the title page of the issue it appeared in. The ad of the match for the person is displayed as well at the end of the page. The idea behind the website is to give the user an idea of what people published in the matrimonial ads journals as well as a glimpse of the results of our match-making algorithm. We were careful not to allow the user to be too selective and not to make the algorithm too restrictive in order to keep it possible to access any ad with some search parameters. The conditions we used to make the computation are not stated, leaving to the user the possibility to interpret the results.