Terzani online museum: Difference between revisions

Maxime.jan (talk | contribs) No edit summary |

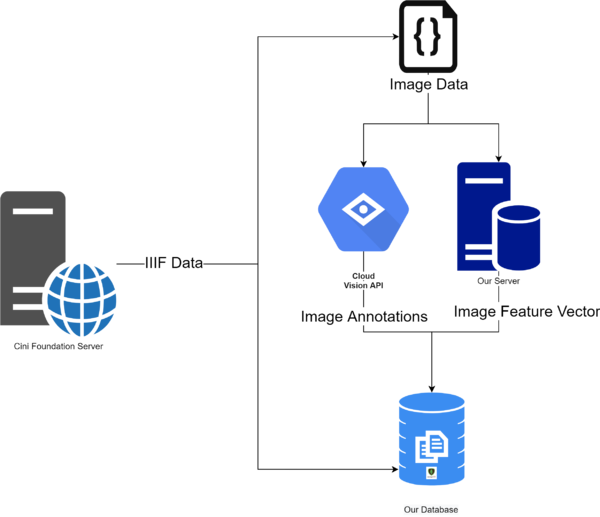


Revision as of 21:27, 11 December 2020

Introduction

Many inventions in human history have set the course for the future. One of them is the camera, which has transformed the way we share bonds and stories.

Storytelling is an essential part of the human journey. From what one does after waking up in the morning to the exploration of deep space, connections are formed through stories. Until recently, tales were mostly passed on orally[1]. The invention of the camera[2] began to change this narrative landscape: people could capture live action in images. It later led to the invention of the video camera[3] and of color photos and videos[4] [5], and today we can see nearly the entire world in augmented reality[6] as if we were living the moment. Photographs, however, have been recording important historical events for a long time. To better understand the circumstances of those times, one gains immensely from looking at the photographs when no videos exist. Even outside research, it is fascinating to revisit history through pictures of past times. Nevertheless, going through a very large number of images by hand is not productive. We therefore want to create an online platform that helps users access these photographs and navigate through them.

Tiziano Terzani[7] was an Italian journalist and writer. He traveled extensively in East Asia[8] and witnessed many important events. During his travels, he and his team took many pictures. The Cini Foundation has digitized some of these photo collections (Terzani photo collections). Through this project, we try to bring the photographs taken during his trips to South Asian countries to an online platform and present them to a 21st-century digital audience.

Through this platform, viewers can search the photos by location, by text, or by uploading an image to retrieve similar ones. As an experimental feature, users can also try to colorize the pictures.

Methods

Acquiring IIIF annotations

As the whole project is based on IIIF annotations of photographs, we must first collect them. The Terzani archive can be found on the Cini Foundation server[9]. However, the server doesn't provide any specific API to download the IIIF manifests of all collections at once. We therefore used the Python module Beautiful Soup to read the root page of the archive[10] and to extract all collection IDs from it. Once we had collected these IDs, we requested each corresponding IIIF manifest using urllib. We then read each manifest and kept only the annotations whose label explicitly says that it represents the recto of a photograph.
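The harvesting steps above can be sketched roughly as follows. This is a minimal illustration, not the project's code: it swaps Beautiful Soup for the standard library's `html.parser` so that it runs without extra dependencies, it assumes that collection links on the root page follow the same `/collections/show/<id>` pattern as the root URL, and it assumes an IIIF Presentation 2.x layout (`sequences` containing `canvases`) in which the canvas label contains the word "recto". The manifest URL pattern is not given in the text, so `fetch_json` simply takes a URL from the caller.

```python
import json
import re
from html.parser import HTMLParser
from urllib.request import urlopen

# Assumption: collection links look like the root page URL, /collections/show/<id>.
COLLECTION_LINK = re.compile(r"/collections/show/(\d+)")


class CollectionLinkParser(HTMLParser):
    """Collects the numeric IDs found in <a href=".../collections/show/ID"> links."""

    def __init__(self):
        super().__init__()
        self.collection_ids = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        match = COLLECTION_LINK.search(href)
        # Keep each ID once, in order of first appearance.
        if match and match.group(1) not in self.collection_ids:
            self.collection_ids.append(match.group(1))


def extract_collection_ids(html):
    """Return the collection IDs linked from an HTML page given as a string."""
    parser = CollectionLinkParser()
    parser.feed(html)
    return parser.collection_ids


def fetch_json(url):
    """Download and decode a JSON document (for example an IIIF manifest)."""
    with urlopen(url) as response:
        return json.load(response)


def keep_recto_canvases(manifest):
    """Keep only the canvases whose label mentions 'recto' (assumed label wording)."""
    canvases = []
    for sequence in manifest.get("sequences", []):
        for canvas in sequence.get("canvases", []):
            if "recto" in str(canvas.get("label", "")).lower():
                canvases.append(canvas)
    return canvases
```

In use, the root page would be downloaded once, passed to `extract_collection_ids`, and each resulting manifest filtered with `keep_recto_canvases`.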

In our project, we display the photographs in a gallery sorted by country. The country information is not present in the IIIF annotations, but it is available on the root page of the Terzani archive[11]: the collections are named after their places of origin. As these names are written in Italian and are not all formatted the same way, we decided that it would be easier to map each collection to its country by hand rather than algorithmically. One problem occurred for the collections whose name lists multiple countries: it is impossible for us to know which photo belongs to which of these countries. We therefore decided not to assign a country to any of these collections.
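A hand-written mapping of this kind can be as simple as a dictionary. The sketch below is only illustrative: the collection names are hypothetical placeholders, not the actual names in the archive, and `None` marks a multi-country collection that is left without a country.

```python
# Hypothetical collection names; the real ones come from the archive's root page.
COLLECTION_COUNTRIES = {
    "Vietnam 1972": "Vietnam",
    "Giappone, 1985": "Japan",
    "Cina e Giappone": None,  # several countries in the name: no country assigned
}


def group_by_country(photos):
    """Group (photo_id, collection_name) pairs by country, skipping unmapped ones."""
    gallery = {}
    for photo_id, collection_name in photos:
        country = COLLECTION_COUNTRIES.get(collection_name)
        if country is None:
            continue  # multi-country or unknown collection
        gallery.setdefault(country, []).append(photo_id)
    return gallery
```

Photos from collections mapped to `None` simply do not appear in the country gallery.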

Annotating the photographs

Once in possession of the IIIF annotations of the photographs, we annotated them using Google Cloud Vision[12]. This tool provides a Python API with a myriad of annotation features. Within the scope of this project, we decided to use the following:

- Object localization: detects which objects are in the image, together with their bounding boxes

- Text detection: OCR tool returning all the text that could be read in the image, along with its bounding boxes

- Label detection: gives general labels to the whole image

- Landmark detection: when the image represents a famous landmark, gives the name of the place as well as its coordinates

- Web detection: if any similar photo is on the web, gives its reference along with a short description. We only use the description, as an additional label for the whole image.

For each IIIF annotation, we first download the corresponding photo and then use the Google Vision API to obtain all this new information. However, some of the values returned by Google Vision cannot be used as they are. We processed the bounding boxes and the texts in the following way:

- Bounding boxes: a bounding box is given by its 4 corner coordinates, normalized between 0 and 1. To be able to display the bounding box in the IIIF format, we need the non-normalized coordinates of its top-left corner as well as its width and height. Luckily, the width and height of the whole photo are present in the IIIF annotation, which allows us to "de-normalize" the coordinates. Computing the width and height of the bounding box is then only a matter of very simple algebra.

- Texts : Ravi ?
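The bounding-box conversion described above can be sketched as follows. The function names are illustrative and not taken from the project's code; the sketch assumes the four corners arrive as `(x, y)` pairs in the range 0 to 1 and that pixel values are rounded to integers. The `x,y,w,h` string is the region syntax of the IIIF Image API.

```python
def denormalize_box(normalized_vertices, image_width, image_height):
    """Convert 4 normalized (x, y) corners into pixel (left, top, width, height)."""
    xs = [x for x, _ in normalized_vertices]
    ys = [y for _, y in normalized_vertices]
    # Scale the extreme corners back to pixel coordinates.
    left = round(min(xs) * image_width)
    top = round(min(ys) * image_height)
    right = round(max(xs) * image_width)
    bottom = round(max(ys) * image_height)
    # Width and height follow from the difference between opposite corners.
    return left, top, right - left, bottom - top


def iiif_region(box):
    """Format a (left, top, width, height) box as an IIIF 'x,y,w,h' region."""
    return ",".join(str(value) for value in box)
```

For a 400 by 200 pixel photo, a box covering the right-hand three quarters of the lower half would be obtained with `denormalize_box([(0.25, 0.5), (1.0, 0.5), (1.0, 1.0), (0.25, 1.0)], 400, 200)`.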

Architecture

Database Design

Creation of Image tags, annotation, and Landmarks

Creation of Image feature vectors

Creation of Text based Search

Creation of Image based Search

Creation of Country based gallery

Quality assessment

We have successfully created a structured pipeline that performs the crucial steps of extracting the data and making it available to the search engines. In the following subsections, we analyze and evaluate the effectiveness of each step.

Data Harvesting

The first step in the pipeline is to obtain the photographs available on the Cini Foundation server. As the website did not provide an API to access the data, we resorted to standard web scraping techniques on the HTML page and created a binary file to store the IIIF annotations of the images. Although we successfully extracted all the images present on the server, a certain amount of manual work prevents this step from being fully automated. The other hurdle is the country information for each photograph, which we annotated manually by going through the website. As a result, not all images have a country associated with them, because some collections are associated with multiple countries.

Text based Image search

Creating the tags on which the image search is based is one of the trickiest steps in the pipeline, as it is the one over which we have the least control. The Google Vision API produced sufficiently reliable results for most of these photographs from the recent past in which objects are clearly observable. For text, however, the API itself is constrained in the languages that it can automatically detect. Thus, most of the detected text is written in the English alphabet. Nevertheless, for the text it does detect, the API often reads text that is not evident to the human eye. As we do not store any of the images on Google Cloud Storage, the process cannot run asynchronously, which results in a long processing time.

It is strenuous for users to search for exact words and find a match. We therefore match search queries using regular expressions. This, however, can return many results that are not relevant. For instance, a search for a car or a cat can show images of a carving or a cathedral.
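The following minimal sketch reproduces this behaviour with Python's `re` module. The tag data and the function are hypothetical, and the `whole_word` option is only one possible mitigation shown for comparison; it is not something the project is stated to use.

```python
import re

# Hypothetical tags, for illustration only.
TAGS = {
    "photo_1": ["car", "street"],
    "photo_2": ["carving", "temple"],
    "photo_3": ["cathedral"],
    "photo_4": ["cat"],
}


def search(query, tags_by_photo, whole_word=False):
    """Return the photos having at least one tag matched by the query."""
    pattern = re.escape(query)
    if whole_word:
        # Word boundaries stop "car" from matching inside "carving".
        pattern = r"\b" + pattern + r"\b"
    regex = re.compile(pattern, re.IGNORECASE)
    return sorted(
        photo
        for photo, tags in tags_by_photo.items()
        if any(regex.search(tag) for tag in tags)
    )
```

With these tags, `search("car", TAGS)` also returns the carving photo, while `search("car", TAGS, whole_word=True)` returns only the car photo, at the cost of no longer matching partial words.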

<write user feedback on search results>

The results in this section of the website were widely acceptable.

Similar Image search

As with the text-based search engine, the quality of the results in this section cannot be measured with a metric. We observe that the results are returned based on the structures present in the source image. They are appropriate most of the time, as the engine returns faces for faces, buildings for buildings, and cars for cars. Because most of the photographs are monochromatic, the colors of the source image do not significantly aid the search.

Although the next issue does not directly concern the quality of the results, it affects the user's interaction with the service. Currently, the query image is compared with the stored images sequentially. Parallelizing this comparison would speed up the search.
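A sequential search of this kind can be pictured as a single loop over the stored feature vectors. The sketch below is an assumption-laden illustration: the text does not name the similarity measure, so cosine similarity is used as a common choice, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if one is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query_vector, stored_vectors, top_k=3):
    """Compare the query with every stored vector, one after the other."""
    scored = [
        (cosine_similarity(query_vector, vector), photo_id)
        for photo_id, vector in stored_vectors.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [photo_id for _, photo_id in scored[:top_k]]
```

Each comparison is independent of the others, which is why the loop lends itself to being split across several workers or replaced by a single matrix operation.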

<write user feedback on search results>

Code Realisation and GitHub Repository

The GitHub repository of the project is at Terzani Online Museum. The project has two principal components. The first one creates a database of the images with their corresponding tags, the bounding boxes of the detected objects, the identified landmarks and text, and their feature vectors. The functions related to these operations are in the folder (package) terzani, and the corresponding scripts are in the scripts folder. The second component is the website, which is in the website directory. Installation and usage details are available in the GitHub repository.

Limitations and Scope for Improvement

Schedule

| Timeframe | Tasks |
|---|---|
| Week 5-6 | |
| Week 6-7 | |
| Week 7-8 | |
| Week 8-9 | |
| Week 9-10 | |
| Week 10-11 | |
| Week 11-12 | |
| Week 12-13 | |
| Week 13-14 | |