VenBioGen

Revision as of 23:14, 12 December 2020

Introduction

In this project, we use generative models to create fictional biographies of Venetians who lived before the 20th century. Our original motivation was to observe how such a model would pick up on underlying relationships between Venetian actors of past centuries, as well as their relationships with people in the rest of the world. These relationships may not surface in every generated biography, but the model can potentially offer fresh perspectives on historical tendencies.

Motivation

This project is an interesting exploration into the intersection between Humanities and Natural Language Processing (NLP) applied to text generation.

By generating fictional biographies of Venetians up to and including the 19th century, this model allows us, on the one hand, to evaluate the current state, progress, and limitations of text generation. On the other hand, and more worthwhile, it lets us explore whether underlying historical tendencies, most commonly in the form of international or inter-familial relationships, are learnt by the model.

Project Plan and Milestones

Milestone 1 (up to 1 October)

- Brainstorm project ideas

- Prepare presentation to present two ideas for the project

Milestone 2 (up to 14 November)

- Finalize project planning

- Build the generative model using Python with TensorFlow 1.x (to guarantee compatibility with pre-built GPT-2 and BERT models)

- Fine-tune the GPT-2 model

- Fine-tune BERT

- Connect both models to create the generative pipeline

- Examine generated biographies and the acceptance threshold, and consider further improvements

Milestone 3 (up to 20 November)

- Create frontend for interface (leveraging Bootstrap and jQuery)

- Create backend for interface (using Flask), and connect it to the generative module

- Finalize the RESTful API for communication between both ends

Milestone 4 (up to 5 December)

- Add post-processing date adjustment module

- Add historical realism enhancement module

- Assess the feasibility and costs of outsourcing evaluation to a platform such as Amazon Mechanical Turk

Milestone 5 (up to 11 December)

- Reach a decision regarding the evaluation procedure

- Conduct model evaluation

- Review code

- Finalize documentation

- Finalize report

  - Results and result analysis section

  - Screenshot of interface

  - Limitations

  - Challenging parts?

  - Interface section

  - Include link to repo

  - Remove this TODO list!

Interface

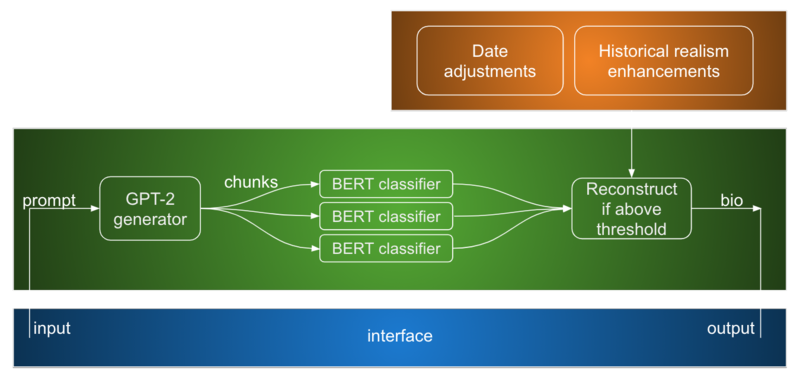

Generative module

Data processing pipeline

As a first step in the processing pipeline, we used the wikipediaapi and wikipedia Python packages to query articles from the Wikipedia category "People from Venice".

The main task of this part of the pipeline was to convert Wikipedia HTML into human-readable text, which involves removing section titles, references, lists, and tables. Detecting these proved challenging, and a few articles in the dataset still contain such artifacts.
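The cleaning step can be sketched with the standard library's `html.parser`. This is a minimal illustration, not the project's code: the set of dropped tags (`SKIP_TAGS`) and the names `WikiTextExtractor` and `html_to_text` are assumptions. It discards everything nested inside headings, tables, lists, and `<sup>` elements (where Wikipedia places its "[1]"-style reference markers) and keeps paragraph breaks.

```python
from html.parser import HTMLParser

# Assumption: the content of these tags is dropped entirely. <sup> holds the
# "[1]"-style reference markers in Wikipedia HTML (but also any real superscript).
SKIP_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6",
             "table", "ul", "ol", "sup", "style", "script"}


class WikiTextExtractor(HTMLParser):
    """Collects visible text, skipping everything nested inside SKIP_TAGS."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # > 0 while inside at least one skipped element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1
        elif tag == "p" and self.skip_depth == 0:
            self.parts.append("\n")  # keep paragraph boundaries

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = WikiTextExtractor()
    parser.feed(html)
    parser.close()
    lines = "".join(parser.parts).split("\n")
    # Collapse runs of whitespace and drop lines left empty by removed elements.
    cleaned = (" ".join(line.split()) for line in lines)
    return "\n".join(line for line in cleaned if line)
```

Counting nesting depth rather than tracking a single flag means a list inside a table (or a reference inside a list) is still skipped as a whole. Elements that are not wrapped in one of these tags, such as infobox-like `<div>`s, would slip through, which is consistent with some articles still containing undesirable elements.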

We chose to train the generative model only on biographies of people who lived before the 20th century, as we want the generated text to mainly reflect the historical aspects of Venetian figures.
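One way to apply this filter is sketched below. The page does not say how the cutoff was enforced, so the heuristic is an assumption: it reads the lifespan from the first parenthetical of the article's lead (as in "Marco Polo (1254 – 8 January 1324) was ...") and keeps the article only if every year found there precedes a hypothetical `cutoff_year` of 1900.

```python
import re

# Assumption: the first parenthetical of a Wikipedia lead holds the lifespan,
# e.g. "Marco Polo (1254 – 8 January 1324) was ...".
PAREN_RE = re.compile(r"\(([^()]*)\)")
YEAR_RE = re.compile(r"\b\d{3,4}\b")


def lived_before(article_text: str, cutoff_year: int = 1900) -> bool:
    """True if every year in the lead's first parenthetical precedes cutoff_year."""
    match = PAREN_RE.search(article_text)
    if match is None:
        return False  # no lifespan found: exclude rather than guess
    years = [int(y) for y in YEAR_RE.findall(match.group(1))]
    return bool(years) and max(years) < cutoff_year
```

Excluding articles with no parseable dates trades recall for safety: a few valid pre-1900 biographies may be lost, but no modern biography leaks into the training set through a missing date.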

The final training dataset comprises 1164 biographies. Future work could include more biography sources to obtain a richer corpus, which would result in more diverse generated biographies.

Text generation

GPT2

The GPT-2 submodule is used for text generation. It consists of a GPT-2 model [1] fine-tuned on Venetian biographies so that, given a prompt, it generates a made-up biography. This submodule thus plays the role of the "generator" in our GAN-inspired module.

BERT

The BERT submodule is used for text assessment. It consists of a BERT model [2] trained to assess the realism of texts. Each biography is split into chunks before being fed to this module, which gives each chunk a realism score. The lowest realism score across all chunks determines whether the module should generate a new biography. This submodule serves as the "discriminator" in our GAN-inspired module.
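The interplay between generator and discriminator can be sketched as a generate-and-reject loop. In this sketch the fine-tuned GPT-2 and BERT models are replaced by plain callables (`generate`, `score_chunk`) so the control flow can be read on its own; the chunk size, `threshold`, and `max_attempts` values are hypothetical, not the project's settings.

```python
from typing import Callable, List, Optional


def split_into_chunks(text: str, max_words: int = 64) -> List[str]:
    """Split a biography into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def generate_until_realistic(
    prompt: str,
    generate: Callable[[str], str],       # stand-in for the fine-tuned GPT-2
    score_chunk: Callable[[str], float],  # stand-in for the BERT realism scorer
    threshold: float = 0.5,               # hypothetical acceptance threshold
    max_attempts: int = 10,               # hypothetical cap on regenerations
) -> Optional[str]:
    for _ in range(max_attempts):
        biography = generate(prompt)
        chunks = split_into_chunks(biography)
        if not chunks:
            continue  # empty output: try again
        # The least realistic chunk decides: one implausible passage rejects all.
        if min(score_chunk(chunk) for chunk in chunks) >= threshold:
            return biography
    return None  # no candidate passed within max_attempts
```

Taking the minimum rather than the mean makes the check strict: a biography that is convincing overall but contains one obviously machine-like passage is still regenerated. Unlike a real GAN, the discriminator only filters outputs here; it does not send gradients back to the generator.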

Enhancements module

Date Adjustments

The date adjustment submodule increases the realism of the generated biographies in several ways:

- Years are extracted using regular expressions and then rescaled so that the biographee's lifespan is realistic.

- Mentioned centuries are also extracted and adjusted so that they match the years mentioned in the biography.

- Events (identified by their verbs) are extracted, and we search for "birth" and "death" events. Their corresponding dates are extracted and adjusted so that they are realistic.
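The first two adjustments can be sketched with regular expressions as follows. The exact rules are not documented here, so these are assumptions: years are taken to be four-digit numbers from 1000 to 1999, a lifespan longer than a hypothetical `MAX_LIFESPAN` of 90 years is compressed linearly toward the earliest year, and a century mention that no year in the text falls into is rewritten to the century of the earliest year. The verb-based birth/death detection is not sketched.

```python
import re

YEAR_RE = re.compile(r"\b1\d{3}\b")  # assumption: years written as 1000-1999
CENTURY_RE = re.compile(r"\b(\d{1,2})(?:st|nd|rd|th) century\b")
MAX_LIFESPAN = 90  # hypothetical upper bound on a realistic lifespan, in years


def rescale_years(text: str, max_lifespan: int = MAX_LIFESPAN) -> str:
    """Compress all years linearly toward the earliest one if the span is too long."""
    years = [int(y) for y in YEAR_RE.findall(text)]
    if len(years) < 2:
        return text
    first = min(years)
    span = max(years) - first
    if span <= max_lifespan:
        return text

    def scale(match):
        offset = int(match.group(0)) - first
        return str(first + round(offset * max_lifespan / span))

    return YEAR_RE.sub(scale, text)


def century_of(year: int) -> int:
    return (year - 1) // 100 + 1  # 1500 -> 15th, 1501 -> 16th


def ordinal(n: int) -> str:
    if 10 <= n % 100 <= 20:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def fix_centuries(text: str) -> str:
    """Rewrite century mentions that no year in the text falls into."""
    years = [int(y) for y in YEAR_RE.findall(text)]
    if not years:
        return text
    valid = {century_of(y) for y in years}
    target = ordinal(century_of(min(years)))

    def repl(match):
        if int(match.group(1)) in valid:
            return match.group(0)
        return f"{target} century"

    return CENTURY_RE.sub(repl, text)


def adjust_dates(text: str) -> str:
    # Rescale first so that centuries are checked against the corrected years.
    return fix_centuries(rescale_years(text))
```

For example, `rescale_years("Born in 1400, he moved to Rome in 1420 and died in 1600.")` compresses the 200-year span to 90 years and returns `"Born in 1400, he moved to Rome in 1409 and died in 1490."`, preserving the relative order and spacing of events.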

Entity Replacement

The entity replacement submodule increases historical realism. It searches the generated biographies for historical figures and checks whether they belong to the era indicated by the biography's dates. If they don't, a replacement is fetched from a historical figures dataset [3], chosen to be as close as possible to the original figure (i.e., in terms of geography and occupation).
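A minimal sketch of the replacement logic is shown below. The `Figure` record layout is hypothetical (the dataset's actual columns may differ), "belonging to the era" is assumed to mean that the figure's lifespan overlaps the biography's date range, and closeness is scored as one point each for a shared country and a shared occupation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Figure:
    # Hypothetical record layout; the real dataset's columns may differ.
    name: str
    birth_year: int
    death_year: int
    country: str
    occupation: str


def in_era(figure: Figure, era_start: int, era_end: int) -> bool:
    """A figure belongs to the era if their lifespan overlaps [era_start, era_end]."""
    return figure.birth_year <= era_end and figure.death_year >= era_start


def similarity(a: Figure, b: Figure) -> int:
    """One point each for a shared country and a shared occupation."""
    return int(a.country == b.country) + int(a.occupation == b.occupation)


def find_replacement(original: Figure, candidates: List[Figure],
                     era_start: int, era_end: int) -> Optional[Figure]:
    pool = [c for c in candidates
            if c.name != original.name and in_era(c, era_start, era_end)]
    if not pool:
        return None
    return max(pool, key=lambda c: similarity(original, c))


def replace_if_anachronistic(text: str, figure: Figure, candidates: List[Figure],
                             era_start: int, era_end: int) -> str:
    if in_era(figure, era_start, era_end):
        return text  # already plausible for the biography's dates
    replacement = find_replacement(figure, candidates, era_start, era_end)
    return text.replace(figure.name, replacement.name) if replacement else text
```

Filtering by era before scoring guarantees that the replacement is never itself an anachronism; similarity only breaks ties among era-compatible candidates, and `max` keeps the first of equally similar candidates.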

Quality assessment

To evaluate the realism of the generated biographies, three types of biographies were prepared: real biographies of Venetians that had been used to train the model, unadjusted fake biographies of Venetians, and adjusted fake biographies of Venetians. To create the fake biographies, we had to temporarily abandon the semi-rigid prompt format we usually use and make available through the interface; otherwise, the first sentence of each biography would have revealed whether it was real or fake. Instead, the prompts were the first sentences of Wikipedia articles about other Italian people (for example, from Naples or Bologna), slightly modified where applicable so that they referred to Venice instead of the person's true birthplace.
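The prompt preparation can be illustrated with a small helper. The page does not say whether the first sentences were edited by hand or automatically, so this is only a naive automatic sketch: `first_sentence` cuts at the first `.`, `!` or `?` followed by whitespace (it will break on abbreviations such as "c. 1450"), and `venetianize` swaps whole-word mentions of a given birthplace for "Venice". Demonyms like "Neapolitan" would still need manual edits.

```python
import re


def first_sentence(text: str) -> str:
    """Naive split: text up to the first ., ! or ? followed by whitespace or end."""
    match = re.match(r"(.+?[.!?])(?:\s|$)", text.strip(), flags=re.S)
    return match.group(1) if match else text.strip()


def venetianize(sentence: str, birthplace: str) -> str:
    """Replace whole-word mentions of the true birthplace with "Venice"."""
    return re.sub(rf"\b{re.escape(birthplace)}\b", "Venice", sentence)
```

For instance, applying both functions to the made-up lead "Giovanni Rossi was a painter from Naples. He trained in Rome." with birthplace `"Naples"` yields the prompt "Giovanni Rossi was a painter from Venice."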

In total, 160 biographies were read and evaluated: 80 real biographies, 30 unadjusted fake biographies, and 50 adjusted fake biographies. More biographies were dedicated to the adjusted version since it is the true output of all modules combined, but 30 unadjusted biographies were still included to provide a baseline for the output of the GAN-inspired generative module alone.
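How the 160 biographies were presented to readers is not described, so the following is a hypothetical sketch of one way to build a blind evaluation set: the three pools are labelled, shuffled with a fixed seed for reproducibility, and split into an unlabelled list for readers and a separate answer key used only when scoring.

```python
import random
from typing import Dict, List, Sequence, Tuple


def build_blind_set(
    real: Sequence[str],
    unadjusted: Sequence[str],
    adjusted: Sequence[str],
    seed: int = 0,
) -> Tuple[List[Tuple[int, str]], Dict[int, str]]:
    labelled = ([(text, "real") for text in real]
                + [(text, "unadjusted") for text in unadjusted]
                + [(text, "adjusted") for text in adjusted])
    random.Random(seed).shuffle(labelled)  # fixed seed: reproducible order
    blind = [(idx, text) for idx, (text, _) in enumerate(labelled)]
    answer_key = {idx: label for idx, (_, label) in enumerate(labelled)}
    return blind, answer_key
```

With pools of 80, 30, and 50 texts, `blind` holds 160 numbered biographies whose position gives no hint of their origin, and `answer_key` maps each number back to "real", "unadjusted", or "adjusted".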

Results

General Overview

Adjusted versus unadjusted biographies

Final qualitative assessment

Links

References

- [1] Alec Radford, Jeff Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever. Language Models are Unsupervised Multitask Learners. 2019.

- [2] Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. 2019.

- [3] Historical Figures (dataset, author not stated). Made available through a "Kaggle InClass" competition.