VenBioGen: Difference between revisions

| (46 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

== Introduction == | == Introduction == | ||

In this project, we use generative models to come up with creative biographies of Venetian people | In this project, we use generative models to come up with creative biographies of Venetian people up to the 20th century. Our motivation was originally to observe how such a model would pick up on underlying relationships between Venetian actors in old centuries as well as their relationships with people in the rest of the world. These underlying relationships might or might not come to light in every generated biography, but the model certainly has the potential to pick up on some of them and offer fresh perspectives on historical tendencies. | ||

== Motivation == | == Motivation == | ||

| Line 7: | Line 7: | ||

By generating fictional biographies of Venetians up to and including the 19th century, this model allows, on one hand, us to evaluate the current state, progress and limitation of text generation. Even more worthwhile, the question of whether underlying historical tendencies - most commonly under the form of international or inter-familial relationships - are learnt by the model can be explored. | By generating fictional biographies of Venetians up to and including the 19th century, this model allows, on one hand, us to evaluate the current state, progress and limitation of text generation. Even more worthwhile, the question of whether underlying historical tendencies - most commonly under the form of international or inter-familial relationships - are learnt by the model can be explored. | ||

We were able to create a pipeline which creates fictional biographies with a level of realism that exceeded our initial expectations. In fact, on average, 1 out of 4 fake biographies was able to fool us when we conducted an evaluation. To generate the fake biographies, almost all of our pipeline was engaged, with the sole exception of the web interface and server. The generative module handled the generation of unadjusted biographies which satisfied decent realism criteria by employing its two models. In addition, the enhancements module was used to increase realism in the biographies which were deemed "realistic" enough. These adjustments concern mainly dates’ fine-tuning - to make sure that the dates in a given biography are as coherent as possible - as well as entity replacement – a submodule which makes sure that the historical figures mentioned in a biography correspond to the era of the biography | |||

Though the interface module was not used to generate biographies for evaluation, it has the nevertheless important task of facilitating interaction with the back end. It should also be mentioned that the back end provides a more extensive API than what is made available through this web interface. | |||

Overall, we are satisfied with the obtained results, though it is impossible for us to authoritatively comment on their true historical accuracy and references to less well-known historical events and tendencies. | |||

== Project Plan and Milestones == | == Project Plan and Milestones == | ||

The project can be roughly split into three modules, all of which will presented in greater detail in later sections of this page: | |||

* an interface and webserver, to allow interaction with the model; | |||

* a generative model, employing a GPT-2 model finetuned to generate venetian biographies, and a BERT model finetuned to act as a realness discriminator preventing lower quality biographies of being outputted; | |||

* an enhancements module, which applies date and historical figures adjustments to the generated biographies in order to make them more realistic and congruent. | |||

Below, we present an overview of the project's evolution and milestones: | |||

<blockquote> | |||

'''Milestone 1 (up to 1 October)''' | '''Milestone 1 (up to 1 October)''' | ||

* Brainstorm project ideas | * Brainstorm project ideas | ||

* Prepare presentation to present two ideas for the project | * Prepare presentation to present our two ideas for the project | ||

# '''Venetian biographies generator''', one of the projects proposed by the professor, using a Language Model to generate fictional biographies of Venetians | |||

# '''Chat-a-ghost''', our original idea, inspired by the one above, where we would create a chat bot that would try emulate a fictional Venetian with whom the user could chat in order to obtain insights and better understand its (fictional) life | |||

'''Milestone 2 (up to 14 November)''' | '''Milestone 2 (up to 14 November)''' | ||

| Line 38: | Line 55: | ||

* Finalize documentation | * Finalize documentation | ||

* Finalize report | * Finalize report | ||

</blockquote> | |||

== Interface == | |||

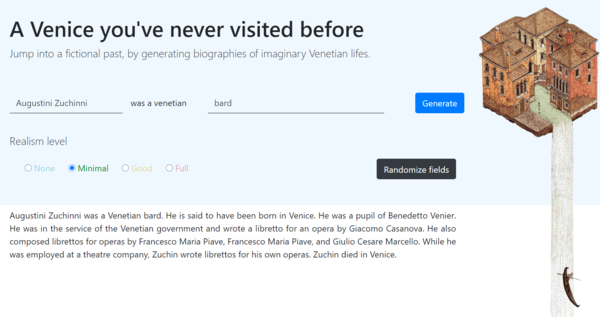

[[File:VenBioGenInterface.png|thumb|The user interface with an example of a generated biography|600px]] | |||

In order to create an engaging, user-friendly experience, we created an interface which displays the generated biographies. A RESTful API was used to link the backend to the frontend, and the Flask framework was used to develop the server. | |||

Users can come up with a name and occupation combination and start the generation process by clicking the 'Generate' button. It is possible to specify the desired realism level, where a higher level results in more refined biographies, though, on average, a higher level also takes more time to produce a result (the discriminator requires a higher standard, and several generated biographies can fail to pass this requirement before one is accepted). | |||

A useful feature for when the user needs inspiration is the "Randomize fields" button, which creates a random name and occupation combination from a list of 303 surnames, 21 forenames, and 94 occupations commonly used throughout Venetian history. | |||

The interface is easily accessible and the code to run it can be found in our [https://github.com/liliaellouz/biography-generation/ GitHub repository]. | |||

== Generative module == | == Generative module == | ||

=== Data processing pipeline === | === Data processing pipeline === | ||

| Line 59: | Line 77: | ||

We chose to train the generative model with biographies of people having lived prior to the 20<sup>th</sup> century, as we would like the generated text to mainly reflect the historical aspects of Venetian figures. | We chose to train the generative model with biographies of people having lived prior to the 20<sup>th</sup> century, as we would like the generated text to mainly reflect the historical aspects of Venetian figures. | ||

The final dataset used for training comprises 1164 biographies. In further | The final dataset used for training comprises 1164 biographies. In further work, it could prove interesting to include more biographical sources in order to have a richer corpora, and assess whether the introduction of those sources resulted in more diverse generated biographies. | ||

=== Text generation === | === Text generation === | ||

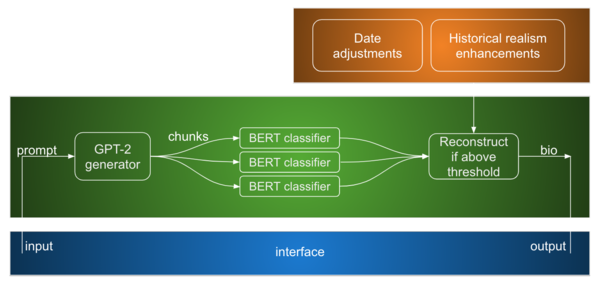

==== | [[File:Modules_overview.png|600px|thumb|An overview of the three main modules and their connections.]] | ||

This module was inspired by a GAN's architecture. The first submodule, [http://fdh.epfl.ch/index.php/VenBioGen#GPT2; GPT-2] acts as the generator by generating the biographies. These are then fed to a the second submodule, [http://fdh.epfl.ch/index.php/VenBioGen#BERT; BERT]. BERT then gives ratings to the biography which are used to determine whether the biography should be output. If it does not pass this test of realism, GPT-2 generates a new biography which is again fed to BERT. | |||

==== GPT-2 ==== | |||

The GPT-2 submodule is used for text generation. It is constituted of a GPT-2 <ref>[https://d4mucfpksywv.cloudfront.net/better-language-models/language-models.pdf Radford, Alec and Wu, Jeff and Child, Rewon and Luan, David and Amodei, Dario and Sutskever, Ilya, Language Models are Unsupervised Multitask Learners, 2019]</ref> model which has been fine-tuned using Venetian biographies in order to be able to, given a prompt, generate a made up biography. This submodule hence plays the role of the "generator" in our GAN inspired module. | The GPT-2 submodule is used for text generation. It is constituted of a GPT-2 <ref>[https://d4mucfpksywv.cloudfront.net/better-language-models/language-models.pdf Radford, Alec and Wu, Jeff and Child, Rewon and Luan, David and Amodei, Dario and Sutskever, Ilya, Language Models are Unsupervised Multitask Learners, 2019]</ref> model which has been fine-tuned using Venetian biographies in order to be able to, given a prompt, generate a made up biography. This submodule hence plays the role of the "generator" in our GAN inspired module. | ||

| Line 74: | Line 96: | ||

The Date adjustments submodule is used in order to increase the realism of the generated biographies. This is done in several ways - | The Date adjustments submodule is used in order to increase the realism of the generated biographies. This is done in several ways - | ||

* | * One of the problems encountered in the generated biographies involves the lifespans of biographees. In fact, they are sometimes unrealistic and span over more than a centennial. We fix this by doing the following - the years are fetched using regex and then scaled in order to make sure that a biographee's lifespan is realistic. A realistic lifespan is determined by a gaussian distribution. | ||

* | * Another problem which is found in generated biographies is the inconsistency between the centuries and the years mentioned. We fix this by doing the following - the centuries mentioned are fetched as well and are adjusted such that they match the years that are in the biography. | ||

* | * Another problem which is present in the generated biographies in that the dates of different events are sometimes incoherent. For instance, some biographees die before they are born. This is fixed by doing the following - events (determined by verbs) are fetched and filtered down to "birth" and "death" events; their corresponding dates are fetched and adjusted so that they are realistic. | ||

=== Entity Replacement === | === Entity Replacement === | ||

The entity replacement submodule is used in order to increase historical realism. This is done by searching for historical figures in the generated biographies and checking whether they belong to the era indicated by the dates in the biography. If they don't, a replacement if fetched from a historical figures dataset <ref>[https://www.kaggle.com/c/historical-figures/overview/description N/A, made available under a "Kaggle InClass" competition, Historical Figures]</ref> such that the replacement is as accurately close to the historical figure as possible | The generated biographies sometimes include references to actual historical figures. However, these figures sometimes belong to another era than the one corresponding to the generated biography. The entity replacement submodule aims to solve this issue. It hence is used in order to increase historical realism. This is done by searching for historical figures in the generated biographies and checking whether they belong to the era indicated by the dates in the biography. If they don't, a replacement if fetched from a historical figures dataset <ref>[https://www.kaggle.com/c/historical-figures/overview/description N/A, made available under a "Kaggle InClass" competition, Historical Figures]</ref> such that the replacement belongs to the era of the biography and is as accurately close to the historical figure as possible. This closeness is determined by two factors - | ||

* The replacement should be as geographically close to the figure being replaced as possible. This is done by looking for figures sharing the same city. If it is found, it is fetched. If there is no figure sharing the same city, we move on to the higher level which is the state level. If it is again not found, we move on to the country level and potentially the continent level if it again not found on the country level. | |||

* The replacement should be as professionally close to the figure being replaced as possible. This is done by looking for figures sharing the same occupation. If it is found, it is fetched. If there is no figure sharing the same city, we move on to the higher level which is the industry level. If it is again not found, we move on to the domain level. | |||

In case no replacements are found for the historical figures who do not belong to the era of the biography, we keep do not alter them. One solution which could be explored is to change the era of the biography to match the era of the historical figures who are mentioned. Given our time constraint, this solution was not explored and remains an interesting direction to explore in the future. | |||

== Quality assessment == | == Quality assessment == | ||

| Line 89: | Line 115: | ||

=== Results === | === Results === | ||

==== | ==== Evaluation metrics ==== | ||

Among the 160 evaluated biographies, out of which 80 were real and 80 were fake (either adjusted or not): | Among the 160 evaluated biographies, out of which 80 were real and 80 were fake (either adjusted or not): | ||

| Line 100: | Line 126: | ||

* 73.75% of biographies classified as fake were fake bios. The evaluators thus had a '''specificity''', or '''true negative rate''', of 0.74. (And 26.25% of biographies classified as fake were actually real biographies.) | * 73.75% of biographies classified as fake were fake bios. The evaluators thus had a '''specificity''', or '''true negative rate''', of 0.74. (And 26.25% of biographies classified as fake were actually real biographies.) | ||

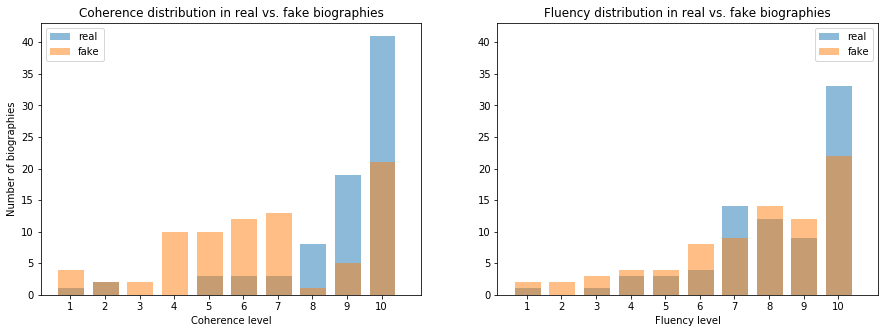

In terms of fluency and coherence, the average results are presented bellow. Fluency measured how naturally the text read and flowed, including grammar, while coherence measured the intra-biography coherence, in terms of dates, professions and achievements, references to other people, consistency in naming, etc. | In terms of fluency and coherence, the average results are presented bellow. Fluency measured how naturally the text read and flowed, including grammar, while coherence measured the intra-biography coherence, in terms of dates, professions and achievements, references to other people, consistency in naming, etc. | ||

| Line 106: | Line 134: | ||

* The average '''coherence''' for real biographies was '''8.8''', while for the fake biographies it was '''6.625'''. | * The average '''coherence''' for real biographies was '''8.8''', while for the fake biographies it was '''6.625'''. | ||

The following histograms present a visualization of fluency and coherence over their 10 point scale for each type of biography: | |||

[[File:Eval_results.png]] | |||

==== Adjusted versus unadjusted biographies ==== | ==== Adjusted versus unadjusted biographies ==== | ||

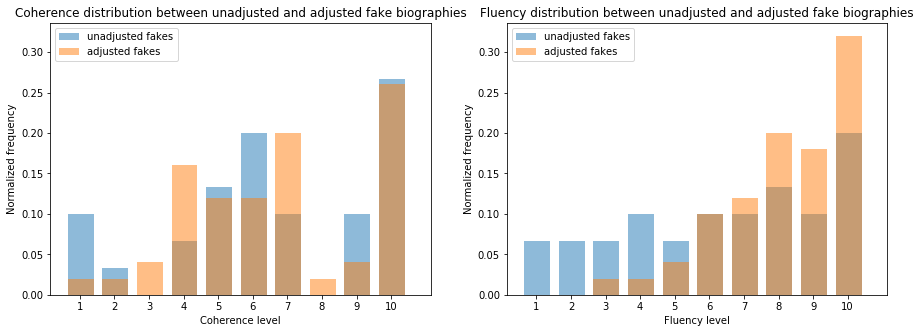

We can observe the adjustments module made a significant contribution to the level of realism of the generated biographies. Indeed, on average, when compared to an unadjusted biography, an adjusted one was misclassified as real almost twice as often. | |||

In particular, the misclassification rates were the following: | |||

* 16.67% of unadjusted biographies were incorrectly classified as real (and thus 83.33% of them were correctly classified as fake). | |||

* 32.00% of adjusted biographies were incorrectly classified as real (and thus 68.00% of them were correctly classified as fake). | |||

The two histograms below allow a more detailed comparison between unadjusted and adjusted biographies. Since there was a different number of evaluations conducted between the two categories, the histograms are normalized so that each category has a total area of 1 in each of the histograms. We can observe the adjustments improve fluency most significantly, possibly due to the removal of repetitive, very similar sentences, as well as some lists of published works or lists of similar nature. A small improvement in lower coherence levels is also observable. | |||

[[File:Fakes_eval_results.png]] | |||

==== Final qualitative assessment ==== | ==== Final qualitative assessment ==== | ||

In conclusion, our model exceeded our expectations. On average, 1 out of 4 biographies was realistic enough to fool a human evaluator, at least on a first read. We expected a much lower success rate, but it appears the generative model works unexpectedly well with these "wikipedia-style" prompts - for this reason, though through our interface we offer the semi-structured prompt (composed of a name and an occupation), in the back end our generative and enhanced generative components both feature an API which supports biography generations from fully unstructured prompts. | |||

Another area of interest is whether the model was able to pick up any underlying historical reference. Our evaluation did not cover this, as we do not have the required historical knowledge (that would enable us to evaluate the generated biographies in that regard) ourselves. It thus remains an interesting direction for future work, provided qualified evaluators are available. | |||

=== Two examples of generated biographies === | === Two examples of generated biographies === | ||

Latest revision as of 17:47, 13 December 2020

Introduction

In this project, we use generative models to come up with creative biographies of Venetian people who lived before the 20th century. Our original motivation was to observe how such a model would pick up on underlying relationships between Venetian actors in past centuries, as well as on their relationships with people in the rest of the world. These underlying relationships may or may not come to light in every generated biography, but the model has the potential to pick up on some of them and offer fresh perspectives on historical tendencies.

Motivation

This project is an exploration of the intersection between the humanities and Natural Language Processing (NLP) applied to text generation.

By generating fictional biographies of Venetians up to and including the 19th century, this model allows us, on the one hand, to evaluate the current state, progress, and limitations of text generation. On the other hand, and even more worthwhile, it lets us explore whether underlying historical tendencies, most commonly in the form of international or inter-familial relationships, are learnt by the model.

We were able to create a pipeline that produces fictional biographies with a level of realism exceeding our initial expectations. In fact, when we conducted an evaluation, on average 1 out of 4 fake biographies was able to fool us. To generate the fake biographies, we used the whole pipeline except for the web interface and server. The generative module, using its two models, produced unadjusted biographies that met the discriminator's realism criterion. In addition, the enhancements module was used to increase the realism of the biographies that had been deemed "realistic" enough. These adjustments mainly concern fine-tuning dates, to make sure that the dates in a given biography are as coherent as possible, and entity replacement, a submodule which makes sure that the historical figures mentioned in a biography belong to the era of the biography.

Though the interface module was not used to generate biographies for evaluation, it nevertheless has the important task of facilitating interaction with the back end. The back end also provides a more extensive API than the one made available through the web interface.

Overall, we are satisfied with the obtained results, though we are not in a position to comment authoritatively on their true historical accuracy or on their references to less well-known historical events and tendencies.

Project Plan and Milestones

The project can be roughly split into three modules, each of which is presented in greater detail in later sections of this page:

- an interface and web server, to allow interaction with the model;

- a generative model, employing a GPT-2 model fine-tuned to generate Venetian biographies and a BERT model fine-tuned to act as a realness discriminator that prevents lower-quality biographies from being output;

- an enhancements module, which applies date and historical-figure adjustments to the generated biographies in order to make them more realistic and congruent.

Below, we present an overview of the project's evolution and milestones:

Milestone 1 (up to 1 October)

- Brainstorm project ideas

- Prepare a presentation of our two ideas for the project:

- Venetian biographies generator, one of the projects proposed by the professor, using a language model to generate fictional biographies of Venetians

- Chat-a-ghost, our original idea, inspired by the one above, where we would create a chatbot emulating a fictional Venetian with whom the user could chat in order to obtain insights into, and better understand, their (fictional) life

Milestone 2 (up to 14 November)

- Finalize project planning

- Build generative model, using Python with TensorFlow 1.x (to guarantee compatibility with pre-built GPT-2 and BERT models)

- Finetune GPT-2 model

- Finetune BERT

- Connect both models to create generative pipeline

- Examine generated biographies and the acceptance threshold, and think about further improvements

Milestone 3 (up to 20 November)

- Create frontend for interface (leveraging Bootstrap and jQuery)

- Create backend for interface (using Flask), and connect it to the generative module

- Finalize the RESTful API for communication between both ends

Milestone 4 (up to 5 December)

- Add post-processing date adjustment module

- Add historical realism enhancement module

- Assess feasibility and costs involved with outsourcing evaluation to a platform such as Amazon Mechanical Turk

Milestone 5 (up to 11 December)

- Reach a decision regarding the evaluation procedure

- Conduct model evaluation

- Review code

- Finalize documentation

- Finalize report

Interface

In order to create an engaging, user-friendly experience, we created an interface which displays the generated biographies. A RESTful API was used to link the backend to the frontend, and the Flask framework was used to develop the server. Users can come up with a name and occupation combination and start the generation process by clicking the 'Generate' button. It is possible to specify the desired realism level, where a higher level results in more refined biographies, though, on average, a higher level also takes more time to produce a result (the discriminator requires a higher standard, and several generated biographies can fail to pass this requirement before one is accepted).

A useful feature for when the user needs inspiration is the "Randomize fields" button, which creates a random name and occupation combination from a list of 303 surnames, 21 forenames, and 94 occupations commonly used throughout Venetian history.
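The "Randomize fields" behaviour can be sketched as three independent uniform draws. This is a minimal illustration, not the project's code: the short lists below are hypothetical stand-ins for the real lists, and the assumption that each field is drawn uniformly and independently is ours.

```python
import random

# Hypothetical, heavily truncated stand-ins for the project's real lists
# (303 surnames, 21 forenames and 94 occupations in the original).
FORENAMES = ["Marco", "Giovanni", "Pietro", "Caterina"]
SURNAMES = ["Contarini", "Morosini", "Dandolo", "Gradenigo"]
OCCUPATIONS = ["painter", "merchant", "composer", "architect"]


def randomize_fields(rng: random.Random) -> tuple:
    """Return a (name, occupation) pair drawn uniformly and independently."""
    name = f"{rng.choice(FORENAMES)} {rng.choice(SURNAMES)}"
    occupation = rng.choice(OCCUPATIONS)
    return name, occupation


rng = random.Random(42)  # seeded only to make the example reproducible
print(randomize_fields(rng))
```

Under this independence assumption, the full lists would yield 303 × 21 × 94 = 598,122 distinct name and occupation combinations.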

The interface is easily accessible, and the code to run it can be found in our GitHub repository (https://github.com/liliaellouz/biography-generation/).

Generative module

Data processing pipeline

As a first step in the processing pipeline, we used the wikipediaapi [1] and wikipedia [2] packages to query articles from the Wikipedia category "People from Venice" [3].

The main task of this part of the data processing pipeline was to map Wikipedia HTML text to human-readable text, which includes eliminating section titles as well as removing references, lists, and tables. Detecting these proved to be a challenging task, and a few articles in the dataset still contain undesirable elements.
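The cleaning step can be sketched with Python's standard-library `html.parser`. This is a minimal illustration, not the project's code. It assumes the article HTML is already available as a string (the wikipediaapi/wikipedia calls are not shown). The set of dropped tags is also our assumption about how these elements appear in the markup: headings for section titles, `sup` for reference markers, and lists and tables.

```python
from html.parser import HTMLParser

# Assumed set of tags whose whole content is dropped.
SKIP_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6", "sup",
             "ul", "ol", "table", "style", "script"}


class ArticleTextExtractor(HTMLParser):
    """Collects text outside skipped tags; one line per paragraph."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # > 0 while inside a skipped element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1
        elif tag == "p" and self.skip_depth == 0:
            self.parts.append("\n")  # paragraph boundary

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = ArticleTextExtractor()
    parser.feed(html)
    parser.close()
    raw = "".join(parser.parts)
    # Collapse whitespace inside each paragraph and drop empty lines.
    lines = (" ".join(line.split()) for line in raw.split("\n"))
    return "\n".join(line for line in lines if line)
```

Tracking a depth counter rather than a boolean keeps nested skipped elements (for example, a list inside a table) from re-enabling output too early.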

We chose to train the generative model on biographies of people who lived before the 20th century, as we wanted the generated text to mainly reflect the historical aspects of Venetian figures.

The final training dataset comprises 1164 biographies. In future work, it could prove interesting to include more biographical sources in order to obtain a richer corpus, and to assess whether introducing those sources results in more diverse generated biographies.

Text generation

This module was inspired by a GAN's architecture. The first submodule, GPT-2 acts as the generator by generating the biographies. These are then fed to a the second submodule, BERT. BERT then gives ratings to the biography which are used to determine whether the biography should be output. If it does not pass this test of realism, GPT-2 generates a new biography which is again fed to BERT.
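The generate-and-filter loop can be sketched as follows. The two callables are hypothetical stand-ins for the fine-tuned GPT-2 and BERT models. The `threshold` parameter is our assumption of how the interface's realism level could be expressed. The `max_attempts` cap is our own addition, so that the sketch cannot loop forever.

```python
from typing import Callable, Optional


def generate_accepted_biography(
    prompt: str,
    generate: Callable[[str], str],         # stand-in for the fine-tuned GPT-2
    realism_score: Callable[[str], float],  # stand-in for the BERT discriminator
    threshold: float,
    max_attempts: int = 20,
) -> Optional[str]:
    """Sample biographies until one clears the realism threshold."""
    for _ in range(max_attempts):
        candidate = generate(prompt)
        if realism_score(candidate) >= threshold:
            return candidate
    return None  # assumption: give up instead of retrying indefinitely
```

A higher `threshold` rejects more candidates. This matches the observation that higher realism levels take longer on average.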

GPT-2

The GPT-2 submodule is used for text generation. It consists of a GPT-2 [1] model that has been fine-tuned on Venetian biographies so that, given a prompt, it can generate a made-up biography. This submodule thus plays the role of the "generator" in our GAN-inspired module.

BERT

The BERT submodule is used for text assessment. It consists of a BERT model [2] trained to assess the realism of texts. Each biography is split into chunks before being fed to this submodule, which gives each chunk a realism score. The smallest of these scores is used to determine whether a new biography should be generated. This submodule serves as the "discriminator" in our GAN-inspired module.
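The chunk-and-minimum aggregation can be sketched as below. `score_chunk` is a hypothetical stand-in for the BERT classifier. Splitting by a fixed number of words is our assumption; chunking of some kind is needed because BERT accepts at most 512 tokens per input.

```python
from typing import Callable, List


def split_into_chunks(text: str, max_words: int = 100) -> List[str]:
    """Split a biography into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def biography_realism(text: str, score_chunk: Callable[[str], float],
                      max_words: int = 100) -> float:
    """Realism of a whole biography = score of its least realistic chunk."""
    chunks = split_into_chunks(text, max_words)
    if not chunks:
        return 0.0  # assumption: an empty text is never realistic
    return min(score_chunk(chunk) for chunk in chunks)
```

Taking the minimum rather than the mean means that a single implausible passage is enough to reject the whole biography.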

Enhancements module

Date Adjustments

The Date Adjustments submodule increases the realism of the generated biographies in several ways:

- One of the problems encountered in the generated biographies involves the lifespans of biographees, which are sometimes unrealistic and span more than a century. We fix this as follows: the years are extracted using regular expressions and then scaled so that the biographee's lifespan is realistic, where a realistic lifespan is determined by a Gaussian distribution.

- Another problem found in generated biographies is the inconsistency between the centuries and the years mentioned. We fix this by also extracting the centuries mentioned and adjusting them so that they match the years in the biography.

- A further problem is that the dates of different events are sometimes incoherent; for instance, some biographees die before they are born. We fix this as follows: events (determined by verbs) are extracted and filtered down to "birth" and "death" events, and their corresponding dates are extracted and adjusted so that they are realistic.
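The three fixes can be combined in one sketch. This is an illustration under stated assumptions, not the project's implementation:

- The birth and death years are assumed to have been identified already; the verb-based event detection is not shown.
- The Gaussian parameters (`mean_span`, `std_span`) and `max_span` are placeholders.
- Rewriting every century mention to the century of the lifespan's midpoint is our own simplification.

```python
import random
import re

# Assumption: only four-digit years between 1000 and 1999 are rewritten.
YEAR_RE = re.compile(r"\b(1\d{3})\b")
CENTURY_RE = re.compile(r"\b\d{1,2}(?:st|nd|rd|th) century\b")


def century_of(year: int) -> int:
    """Ordinal century of a year AD: 1625 -> 17, 1700 -> 17, 1701 -> 18."""
    return (year - 1) // 100 + 1


def ordinal(n: int) -> str:
    """English ordinal suffix: 17 -> '17th', 21 -> '21st'."""
    if 10 <= n % 100 <= 20:
        return f"{n}th"
    suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def adjust_dates(text: str, birth: int, death: int, rng: random.Random,
                 mean_span: float = 60.0, std_span: float = 12.0,
                 max_span: int = 100) -> str:
    """Make the birth/death order, the lifespan and the centuries coherent."""
    span = death - birth
    if span == 0:
        return text  # identical years cannot be told apart in the text
    if 0 < span <= max_span:
        new_birth, new_death = birth, death  # plausible: keep the years
    else:
        # Draw a plausible lifespan from a Gaussian, clipped to [1, max_span].
        new_span = min(max_span, max(1, round(rng.gauss(mean_span, std_span))))
        new_birth = min(birth, death)
        new_death = new_birth + new_span
        # Linear map sending birth -> new_birth and death -> new_death; the
        # scale is negative when the biographee "died before being born".
        scale = new_span / span

        def rescale(match):
            year = int(match.group(1))
            return str(round(new_birth + (year - birth) * scale))

        text = YEAR_RE.sub(rescale, text)

    # Assumption: every century mention becomes the midpoint's century.
    century = ordinal(century_of((new_birth + new_death) // 2))
    return CENTURY_RE.sub(f"{century} century", text)
```

Using one linear map for all years keeps the relative order of the intermediate events while fixing both the lifespan and a reversed birth/death order.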

Entity Replacement

The generated biographies sometimes include references to actual historical figures who belong to a different era than the one of the generated biography. The entity replacement submodule addresses this issue in order to increase historical realism. It searches the generated biographies for historical figures and checks whether they belong to the era indicated by the dates in the biography. If they don't, a replacement is fetched from a historical figures dataset [3], such that the replacement belongs to the era of the biography and is as close as possible to the original figure. This closeness is determined by two factors:

- The replacement should be as geographically close as possible to the figure being replaced. We first look for figures from the same city. If none is found, we move up to the state level, then to the country level and, if necessary, to the continent level.

- The replacement should be as professionally close as possible to the figure being replaced. We first look for figures with the same occupation. If none is found, we move up to the industry level and then to the domain level.

If no replacement is found for a historical figure who does not belong to the era of the biography, we leave that figure unchanged. One solution worth exploring would be to change the era of the biography to match the era of the historical figures it mentions. Given our time constraints, this solution was not explored and remains an interesting direction for future work.
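The hierarchical search can be sketched as ranking in-era candidates by how early they match in each hierarchy. Several parts of this sketch are assumptions:

- The record fields mirror the levels named above, but their exact names in the dataset are assumed.
- "Belonging to the era" is approximated by a birth year inside `[era_start, era_end]`.
- Geography is assumed to take priority over occupation when the two factors are combined.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence

# Levels named in the text, from closest to broadest.
GEO_LEVELS = ("city", "state", "country", "continent")
OCC_LEVELS = ("occupation", "industry", "domain")


@dataclass
class Figure:
    name: str
    birth_year: int
    city: str
    state: str
    country: str
    continent: str
    occupation: str
    industry: str
    domain: str


def closeness_rank(a: Figure, b: Figure, levels: Sequence[str]) -> Optional[int]:
    """Index of the first (closest) level at which a and b match, else None."""
    for rank, field in enumerate(levels):
        value = getattr(a, field)
        if value and value == getattr(b, field):
            return rank
    return None


def find_replacement(figure: Figure, candidates: Iterable[Figure],
                     era_start: int, era_end: int) -> Optional[Figure]:
    """Closest in-era candidate, or None to keep the original figure."""
    best, best_key = None, None
    for cand in candidates:
        if cand.name == figure.name or not era_start <= cand.birth_year <= era_end:
            continue
        geo = closeness_rank(figure, cand, GEO_LEVELS)
        if geo is None:
            continue  # assumption: require at least a shared continent
        occ = closeness_rank(figure, cand, OCC_LEVELS)
        # Assumption: geography first, then occupation (no match ranks last).
        key = (geo, len(OCC_LEVELS) if occ is None else occ)
        if best_key is None or key < best_key:
            best, best_key = cand, key
    return best
```

Returning `None` corresponds to the behaviour described above: when no suitable replacement exists, the original figure is left unchanged.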

Quality assessment

To evaluate the realism of the generated biographies, three types of biographies were prepared: real biographies of Venetians (which had been used for training the model), unadjusted fake biographies of Venetians, and adjusted fake biographies of Venetians. To create the fake biographies, it was necessary to temporarily abandon the semi-rigid prompt format that we normally use and make available through the interface; otherwise, the first sentence of each biography would have given away whether it was real or fake. Instead, the prompts used were the first sentences of Wikipedia articles about other Italian people (for example, from Naples or Bologna). Where applicable, these were slightly changed so that they referred to Venice instead of the person's true birthplace.

In total, 160 biographies were read and evaluated: 80 real biographies, 30 unadjusted fake biographies, and 50 adjusted fake biographies. More biographies were dedicated to the adjusted version since it is the true output of all modules combined. Still, 30 were dedicated to unadjusted biographies in order to provide a baseline for the output of the GAN-inspired generative module alone.

Results

Evaluation metrics

Among the 160 evaluated biographies, out of which 80 were real and 80 were fake (either adjusted or not):

- Only 75.0% of the biographies classified as real were actually real: the evaluators' precision was 0.75. (And 25.0% of the biographies evaluated as real were actually fake!)

- 77.63% of the biographies classified as fake were actually fake: the evaluators' negative predictive value was 0.78. (And 22.37% of the biographies classified as fake were actually real.)

- 78.75% of the real biographies were correctly classified as real; that is, the evaluators' recall, or sensitivity, was 0.79. (And 21.25% of them were misclassified as fake.)

- Only 73.75% of the fake biographies were correctly classified as fake: the evaluators' specificity, or true negative rate, was 0.74. (And 26.25% of the fake biographies were misclassified as real!)
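The four metrics differ only in their denominators, which a confusion matrix makes explicit. The counts below are not reported directly. We derived them from the rates above, treating "real" as the positive class:

- Recall of 78.75% on 80 real biographies gives 63 correct "real" verdicts.
- A precision of 0.75 then implies 84 "real" verdicts in total, 21 of which were fakes.

These counts reproduce all four reported percentages.

```python
# Confusion-matrix counts derived from the reported rates, treating "real"
# as the positive class (80 real and 80 fake biographies in total).
TP = 63  # real biographies classified as real (78.75% of 80)
FN = 17  # real biographies classified as fake
FP = 21  # fake biographies classified as real (84 "real" verdicts - 63)
TN = 59  # fake biographies classified as fake


def rates(tp: int, fn: int, fp: int, tn: int) -> dict:
    return {
        "precision": tp / (tp + fp),    # share of "real" verdicts that were right
        "npv": tn / (tn + fn),          # share of "fake" verdicts that were right
        "recall": tp / (tp + fn),       # share of real biographies recognised
        "specificity": tn / (tn + fp),  # share of fake biographies recognised
    }


for name, value in rates(TP, FN, FP, TN).items():
    print(f"{name}: {value:.4f}")
# precision: 0.7500
# npv: 0.7763
# recall: 0.7875
# specificity: 0.7375
```

The 21 misclassified fakes out of 80 (26.25%) are the "1 out of 4" fake biographies that fooled the evaluators.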

In terms of fluency and coherence, the average results are presented below. Fluency measured how naturally the text read and flowed, including grammar, while coherence measured intra-biography coherence in terms of dates, professions and achievements, references to other people, consistency in naming, etc.

- The average fluency for real biographies was 8.25, while for the fake biographies it was 7.525.

- The average coherence for real biographies was 8.8, while for the fake biographies it was 6.625.

The following histograms present a visualization of fluency and coherence over their 10 point scale for each type of biography:

Adjusted versus unadjusted biographies

We observe that the adjustments module made a substantial contribution to the realism of the generated biographies: on average, an adjusted biography was misclassified as real almost twice as often as an unadjusted one.

In particular, the misclassification rates were the following:

- 16.67% of unadjusted biographies were incorrectly classified as real (and thus 83.33% of them were correctly classified as fake).

- 32.00% of adjusted biographies were incorrectly classified as real (and thus 68.00% of them were correctly classified as fake).
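The "almost twice as often" claim can be checked directly. The counts are derived from the percentages and group sizes above (30 unadjusted and 50 adjusted biographies); they are not separately reported.

```python
# Fakes classified as real, per group (derived from the reported percentages).
unadjusted_fooled, unadjusted_total = 5, 30   # 16.67% of 30
adjusted_fooled, adjusted_total = 16, 50      # 32.00% of 50

unadjusted_rate = unadjusted_fooled / unadjusted_total
adjusted_rate = adjusted_fooled / adjusted_total
print(f"unadjusted fooled: {unadjusted_rate:.2%}")  # unadjusted fooled: 16.67%
print(f"adjusted fooled: {adjusted_rate:.2%}")      # adjusted fooled: 32.00%
print(f"ratio: {adjusted_rate / unadjusted_rate:.2f}")  # ratio: 1.92
```

Together, the two groups account for 5 + 16 = 21 fakes classified as real, which is consistent with the 26.25% of the 80 fake biographies reported above.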

The two histograms below allow a more detailed comparison between unadjusted and adjusted biographies. Because the two categories received different numbers of evaluations, the histograms are normalized so that each category has a total area of 1. The adjustments appear to improve fluency the most, possibly because they remove repetitive, near-identical sentences as well as lists of published works and similar lists. A small improvement at the lower end of the coherence scale is also visible.
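Normalizing per category can be sketched as follows: with one unit-width bin per integer score, dividing each bin count by the number of evaluations makes the bars sum, and hence integrate, to 1, so categories of different sizes become comparable. The sketch assumes integer scores from 1 to 10 (the exact endpoints of the scale are not stated); `normalized_histogram` and the example scores are hypothetical.

```python
def normalized_histogram(scores: list[int], low: int = 1, high: int = 10) -> list[float]:
    """Relative frequency of each integer score in [low, high].

    With unit-width bins the relative frequencies sum to 1, so the histogram
    has area 1 regardless of how many evaluations the category received.
    """
    if not scores:
        raise ValueError("cannot normalize an empty list of scores")
    counts = [0] * (high - low + 1)
    for s in scores:
        if not low <= s <= high:
            raise ValueError(f"score {s} outside [{low}, {high}]")
        counts[s - low] += 1
    n = len(scores)
    return [c / n for c in counts]


# Hypothetical fluency scores, for illustration only.
unadjusted = [6, 7, 7, 8]
adjusted = [7, 8, 8, 9, 9, 10]
print(normalized_histogram(unadjusted))
print(normalized_histogram(adjusted))
```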

Final qualitative assessment

In conclusion, our model exceeded our expectations. On average, 1 out of 4 fake biographies was realistic enough to fool a human evaluator, at least on a first read. We expected a much lower success rate, but the generative model appears to work unexpectedly well with these "Wikipedia-style" prompts. For this reason, although our interface offers a semi-structured prompt (composed of a name and an occupation), both the generative and the enhanced generative components in the back end expose an API that also supports generating biographies from fully unstructured prompts.

Another area of interest is whether the model picked up any underlying historical tendencies. Our evaluation did not cover this, since we lack the historical knowledge needed to assess the generated biographies in that regard. It therefore remains an interesting direction for future work, provided qualified evaluators are available.

Two examples of generated biographies

Below are two generated biographies that the evaluators incorrectly classified as real. The first was adjusted; the second was not.

Francesco Faà di Bruno (29 March 1625 – 27 March 1688) was an Italian priest and advocate of the poor, a leading mathematician of his era and a noted religious musician. [SEP] Born in Venice, Faà di Bruno studied mathematics at the University of Padova and then entered the Society of Jesus in 1639. He returned to Padova in 1645, where he was elected priest of the Dorsoduro, the Jesuits' highest office, and was ordained in 1648. In 1649 he was appointed as the Patriarch of Venice, a position he held until 1651.In 1658 he was appointed cardinal protonotary archpriest of Santa Maria in Trivio and then as Patriarch of Venice in 1664, again in 1667. He was born in Venice and died in Rome.

Leo Nucci (16 April 1642 - 24 September 1691) was an Italian operatic baritone, particularly associated with Verdi and Verismo roles. [SEP] He was born in Venice, and was one of the most notable pupils of the celebrated operatic singer Francesco Sacrati. He was born to a rich family, and operated a great deal of personal and professional capital in opera, music publishing, and the theatrical production of plays. In particular, he established a correspondence with the composer Carlo Goldoni, and with the musical director Luca Carlevaris, that would influence the musical practice of his generation. His output included libretti for musicals by Giulio Cesare Dell'Acqua, Carlo Gozzi, Carlo Bevilacqua, the composer Carlo Bernardi, the composer Giovanni Antonio Fetti, the pianist Leon Battista Gritti, the singer Giulia Cesare da Rimini, and the music director Luca Carlevari. Nucci also arranged for the music of Francesco Grassi and the music of Andrea Ricci, two of the most important music directors of the Venetian era. He was the first to do this in over a decade, and the first to do so in a major role. Nucci brought to the table the musical virtuoso talents of Goldoni, Ciera, Carlo Coccia, Luca Carlevaris, the composer Leon Battista Gritti, and the music director Luca Carlevari. He played a role in Francesco Grassi's debut production of Zatta er mazzarellike, the music for the La Fenice frescoes in the Accademia.
