Switzerland Road extraction from historical maps


Revision as of 02:23, 25 November 2021

Introduction

Historical maps provide valuable information about how a landscape has changed over time. This project uses historical maps of Switzerland to vectorize the road network and land cover and to visualize their transformation, using a machine vision library developed at the DHLAB.

The main data source of this project is GeoVITe (Geodata Versatile Information Transfer environment), a browser-based service for accessing geodata for research and teaching, operated by the Institute of Cartography and Geoinformation of ETH Zurich (IKG) since 2008.

Motivation

Historical maps contain rich information that is helpful in urban planning, historical studies, and other humanities research. Digitizing large volumes of printed documents is a significant step before further research. However, most historical maps are available only as scanned raster images. To use the geographic data from these maps conveniently in GIS software, vectorization is needed.

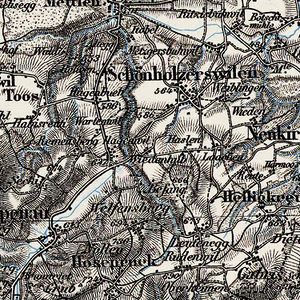

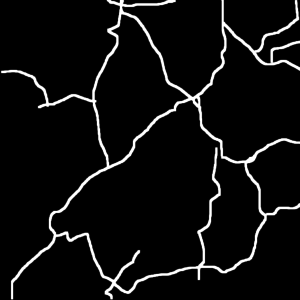

The vectorization process, however, has always been a challenge because of manual painting. In this project, we try to use the dhSegment tool for automatic vectorization. The model is trained on a dataset of 60 high-resolution patches (1 km × 1 km), tested on randomly selected patches, and intended to approximate the idealized main roads of the Dufour Map of Switzerland.

Plan and Milestones

Milestone 1:

- Choose the topic for the project, present the first ideas. Get familiar with the data provided.

- Define the final subject of the project. Find reliable sources of information. Get familiar with the dhSegment tool.

Milestone 2: (Now)

- Prepare a small dataset (60 samples) for training and testing the convolutional neural network at the core of the dhSegment tool. The dataset should consist of small patches extracted from the Dufour Map of Switzerland, their JPEG versions, and binary labels created in GIMP.

- Determine how to download a large number of samples from GeoVITe.

- Prepare for the mid-term presentation and write the project plan and milestones.

- Try the dhSegment tool with the created dataset. Evaluate the results and modify the algorithm. Draw conclusions about using this tool for road extraction, including the advantages and disadvantages of this approach.

Milestone 3:

- Download the final dataset automatically using a Python script: patches with their corresponding coordinates, covering the whole map of Switzerland.

- Test the dhSegment tool on the final dataset.

Milestone 4:

- Obtain the vectorised road map by skeletonization in Python using OpenCV.

- Visualise the results and prepare a final presentation.
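To illustrate the skeletonization step named in Milestone 4, here is a minimal sketch that thins a binary road mask to one-pixel-wide lines. The plan mentions OpenCV; this example instead uses a plain-Python Zhang-Suen thinning so that it runs without extra dependencies. The function names and the toy input are assumptions made for illustration, not the project's code.

```python
from typing import List

Grid = List[List[int]]  # rows of 0/1 pixels, 1 = road


def _neighbours(img: Grid, r: int, c: int) -> List[int]:
    """Return the 8 neighbours P2..P9, clockwise from north; outside the image counts as 0."""
    h, w = len(img), len(img[0])
    offsets = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]
    return [img[r + dr][c + dc] if 0 <= r + dr < h and 0 <= c + dc < w else 0
            for dr, dc in offsets]


def zhang_suen_thin(mask: Grid) -> Grid:
    """Thin a binary mask with the two-pass Zhang-Suen scheme; the input is not modified."""
    img = [row[:] for row in mask]
    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_clear = []
            for r, row in enumerate(img):
                for c, value in enumerate(row):
                    if not value:
                        continue
                    p = _neighbours(img, r, c)
                    b = sum(p)  # number of foreground neighbours
                    # number of 0 -> 1 transitions around the pixel
                    a = sum(p[i] == 0 and p[(i + 1) % 8] == 1 for i in range(8))
                    p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                    if step == 0:
                        cond = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        cond = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and cond:
                        to_clear.append((r, c))
            # Pixels are removed only after the whole pass has been evaluated.
            for r, c in to_clear:
                img[r][c] = 0
            changed = changed or bool(to_clear)
    return img


# Toy input: a horizontal "road" three pixels thick.
bar = [[1] * 7 for _ in range(3)]
for row in zhang_suen_thin(bar):
    print("".join("#" if v else "." for v in row))
```

On full 1000 by 1000 patches a pure-Python loop like this would be slow, so a library routine would likely be preferable in practice; the sketch only shows what the thinning does.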

| Deadline | Task | Completion |
|---|---|---|
| By Week 4 | | ✓ |
| By Week 6 | | ✓ |
| By Week 8 | | ✓ |
| By Week 10 | | ... |
| By Week 12 | | ... |
| By Week 14 | | ... |

Methodology

Dataset:

- Dufour Map from GeoVITe

- The 1:100 000 Topographic Map of Switzerland was the first official series of maps that encompassed the whole of Switzerland. It was published in the period from 1845 to 1865 and thus coincides with the creation of the modern Swiss Confederation.

- Classification: Main roads

- Layer: Topographic Raster Maps - Historical Maps - Dufour Maps

- Coordinate system: CH1903/LV03

- Predefined Grids: 1:25000

- Patch Size: 1000 by 1000 pixels
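As a sketch of how patch extents could be enumerated for download, the snippet below generates a regular grid of square bounding boxes in a metric coordinate system such as CH1903/LV03. The 1000 m side length assumes that one 1000 by 1000 pixel patch corresponds to 1 km by 1 km, as stated in the Motivation. The `Patch` type, the `patch_grid` function, and the example extent are hypothetical and do not reflect GeoVITe's interface or the real bounds of the Dufour Map.

```python
from typing import Iterator, NamedTuple


class Patch(NamedTuple):
    """Bounding box of one square patch in map coordinates (metres)."""
    min_e: int
    min_n: int
    max_e: int
    max_n: int


def patch_grid(min_e: int, min_n: int, max_e: int, max_n: int,
               size_m: int = 1000) -> Iterator[Patch]:
    """Yield square patches covering the extent, row by row from the south-west corner.

    Patches on the north and east edges may extend past the extent so that
    the whole area is covered.
    """
    if size_m <= 0:
        raise ValueError("size_m must be positive")
    for n in range(min_n, max_n, size_m):
        for e in range(min_e, max_e, size_m):
            yield Patch(e, n, e + size_m, n + size_m)


# Hypothetical 3 km x 2 km extent, NOT the real bounds of the Dufour Map.
patches = list(patch_grid(600_000, 200_000, 603_000, 202_000))
print(len(patches))  # 6
print(patches[0])
```

Keeping the bounding box with each patch means the downloaded image can later be tied back to its coordinates, which the plan requires for the final dataset.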

DhSegment:

A generic deep-learning framework for historical document processing, created by Benoit Seguin and Sofia Ares Oliveira at DHLAB, EPFL.

Data preparation:

- GeoVITe: automatic data crawling / manual data access

- Swisstopo: black-and-white, low-resolution images that are difficult to annotate

Labeling:

60 patches (1000x1000 pixels) labeled in GIMP for model testing:

- Original patches with spatial information: tiff

- Patches for training: jpeg

- Labels in black and white: png
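Since every training patch needs a matching label, a small check like the following could catch missing files before training. It is a minimal sketch that assumes images and labels share a file stem and live in two separate folders; the function name and folder layout are hypothetical and not taken from the project or from dhSegment.

```python
from pathlib import Path
from typing import List, Tuple


def pair_samples(image_dir: Path, label_dir: Path) -> List[Tuple[Path, Path]]:
    """Match each .jpg/.jpeg training image with the .png label of the same stem."""
    labels = {p.stem: p for p in label_dir.glob("*.png")}
    images = sorted(p for p in image_dir.iterdir()
                    if p.suffix.lower() in {".jpg", ".jpeg"})
    # Fail early and name every image that has no label.
    missing = [p.name for p in images if p.stem not in labels]
    if missing:
        raise FileNotFoundError(f"no label for: {', '.join(missing)}")
    return [(p, labels[p.stem]) for p in images]
```

Called as `pair_samples(Path("images"), Path("labels"))` (both folder names are placeholders), it returns the image and label paths in sorted order, or raises if any label is missing.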

Testing:
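This page does not yet say how predictions are scored. One common option for binary road masks, shown here purely as an assumption, is to compare a predicted mask with its hand-made label using pixel-wise precision, recall, and intersection over union (IoU). The function name and the toy masks are made up for illustration.

```python
from typing import Dict, Sequence

Mask = Sequence[Sequence[int]]  # rows of 0/1 pixels, 1 = road


def road_scores(pred: Mask, truth: Mask) -> Dict[str, float]:
    """Pixel-wise precision, recall, and IoU of the road class."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of rows")
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, truth):
        if len(p_row) != len(t_row):
            raise ValueError("mask rows must have the same length")
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1      # road predicted and labeled
            elif p:
                fp += 1      # road predicted but not labeled
            elif t:
                fn += 1      # road labeled but not predicted

    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "iou": ratio(tp, tp + fp + fn),
    }


# Toy 2 x 3 masks: two pixels agree, one is a false alarm, one is missed.
pred = [[0, 1, 1], [0, 1, 0]]
truth = [[0, 1, 0], [0, 1, 1]]
print(road_scores(pred, truth)["iou"])  # 0.5
```

Because roads cover only a small share of each patch, scores of the road class such as these tend to be more informative than overall pixel accuracy.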

Limitation

The main limitation of our project comes from the data source platform. GeoVITe only allows downloading small patches, while automatic downloading from other sources yields images of unsatisfactory quality.