Jerusalem 1840-1949 Road Extraction and Alignment

Junzhe.tang

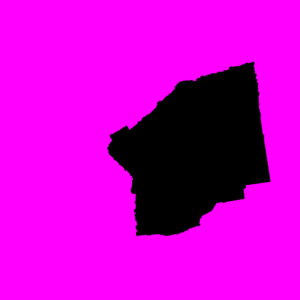


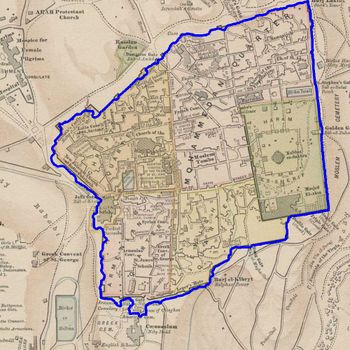


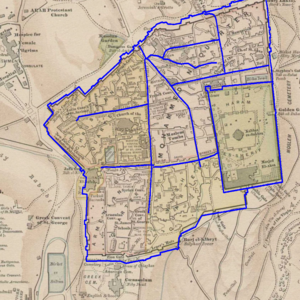

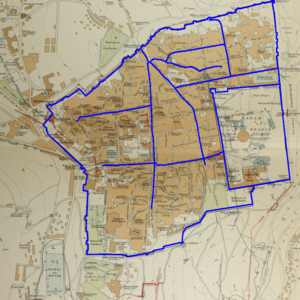


[[File:1887res contour.jpg|350px|thumb|center|Figure 7: After removing noise and drawing the contour]]

Revision as of 18:56, 20 December 2021

Motivation

The creation of large digital databases on urban development is a strategic challenge that could lead to new discoveries in urban planning, environmental sciences, sociology, economics, and many other scientific and social fields. Cultural institutions can also use and benefit from digital geohistorical data. Such historical data could help us better understand and optimize the construction of new infrastructure in today's cities. It could also give humanities scholars accurate variables that are essential for simulating and analyzing urban ecosystems. Many geographic information system (GIS) platforms, such as QGIS and ArcGIS, can be applied directly, and how to digitize and standardize geohistorical data has become a focus of research. We aim to propose a model that associates geohistorical data with today's digital maps, so that both can be analyzed on the same GIS platform, in the same coordinate projection, and at the same scale. Ultimately, the model should correct errors that historical maps may contain due to scaling, rotation, and deformation of the map medium. The entire process should be automated and efficient.

The scope of our project is restricted to Jerusalem. Jerusalem is one of the oldest cities in the world and is considered holy by the three major Abrahamic religions: Judaism, Christianity, and Islam. We georeferenced Jerusalem's historical maps from 1840 to 1949 against the modern map from OpenStreetMap, so that the overlaid maps reveal changes over time and enable map analysis and discovery. We chose the wall of the Old City as the morphological feature for georeferencing. The area outside the Old City has seen many new constructions, whereas the Old City has changed little, and the closed polygon of the wall is more consistent across maps than other features such as road networks. More specifically, we used dhSegment, a historical document segmentation tool, to extract the wall of the Old City. We then proposed an alignment algorithm that exploits the geometric features of the wall to align the maps.

Deliverables

- The latitudes and longitudes of the four corners of the raw maps.

- Preprocessed (cropped and resized) patches of the raw maps, with image information (including the position of each sub-image in the raw map) stored in a csv file.

- 46 annotations of the Old City area, each 1000 × 1000 pixels.

- Trained segmentation model.

- Code and instructions to reproduce the results on GitHub.

Methodology

Dataset

- 126 historical maps of Jerusalem from 1837 to 1938.

- Modern geographical data of Jerusalem from OpenStreetMap.

Wall Extraction

We first used dhSegment to perform image segmentation and extract the wall polygon from the maps. dhSegment [1] is a generic approach to historical document processing that relies on a convolutional neural network to predict pixel-wise characteristics. The whole process includes preprocessing, training, prediction, and postprocessing.

- Preprocessing

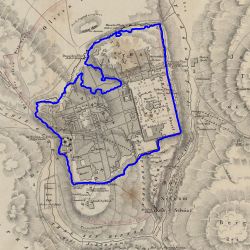

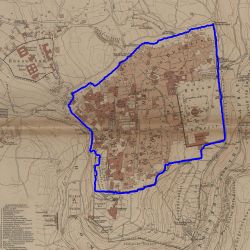

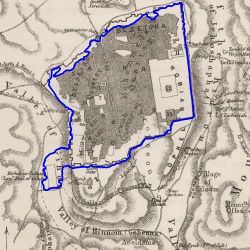

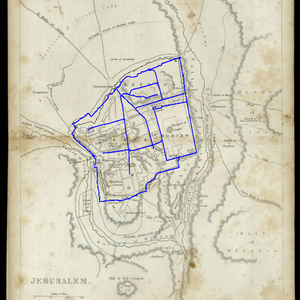

To make the image data fit the neural network and to make the Old City region more dominant in each image, we manually cropped and rescaled the original images for subsequent use.
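Because the patches were cropped and rescaled by hand, a prediction made on a patch has to be mapped back to the raw map before georeferencing. The sketch below shows that bookkeeping under two assumptions: each crop is axis-aligned, and the rescaling is uniform. The `PatchInfo` record and its fields are hypothetical and need not match the columns of the project's csv file.

```python
from dataclasses import dataclass


@dataclass
class PatchInfo:
    """Hypothetical record of how a patch was cut from a raw map."""
    x0: int       # left edge of the crop, in raw-map pixels
    y0: int       # top edge of the crop, in raw-map pixels
    scale: float  # patch size / crop size (e.g. 0.5 if the crop was halved)


def patch_to_raw(info, x, y):
    """Map a pixel (x, y) of the patch back to raw-map pixel coordinates."""
    # Undo the resize first, then undo the crop offset.
    return info.x0 + x / info.scale, info.y0 + y / info.scale


def raw_to_patch(info, x, y):
    """Inverse mapping: raw-map pixel to patch pixel."""
    return (x - info.x0) * info.scale, (y - info.y0) * info.scale
```

For example, with `PatchInfo(1200, 800, 0.5)`, patch pixel (100, 40) corresponds to raw-map pixel (1400.0, 880.0).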

- Map annotating

We used Procreate to label 46 maps pixel by pixel. Each pixel is colored according to whether it belongs to the Old City: RGB(0, 0, 0) for pixels inside the wall and RGB(255, 0, 255) for pixels outside the wall.
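The two label colors can be turned into the binary mask that a segmentation network trains on. This minimal pure-Python sketch represents the image as a list of rows of (r, g, b) tuples. Assigning off-palette pixels (for example, soft brush edges) to the nearer label color is an assumption; the annotation procedure above does not specify it.

```python
INSIDE = (0, 0, 0)       # label color for pixels inside the wall
OUTSIDE = (255, 0, 255)  # label color for pixels outside the wall


def annotation_to_mask(pixels):
    """Convert an RGB annotation (rows of (r, g, b) tuples) into a 0/1 mask.

    1 marks the Old City (inside the wall), 0 everything else. Pixels whose
    color matches neither label exactly go to the nearer label color
    (squared Euclidean distance in RGB space).
    """
    def label(rgb):
        d_in = sum((a - b) ** 2 for a, b in zip(rgb, INSIDE))
        d_out = sum((a - b) ** 2 for a, b in zip(rgb, OUTSIDE))
        return 1 if d_in <= d_out else 0

    return [[label(px) for px in row] for row in pixels]
```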

- Training

We used 36 of the 46 annotated patches for training and 6 for validation. The model was trained with a learning rate of 5e-5, a batch size of 2, and 40 epochs, with data augmentation by rotation, scaling, and color.
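The split and the training settings can be recorded as follows. This is a hedged sketch. `split_patches` and the `HYPERPARAMS` keys are hypothetical names, not dhSegment's configuration format, and the seeded shuffle is an assumption about how the patches were assigned.

```python
import random

# Training settings reported above. The key names are illustrative only;
# they are not dhSegment's configuration keys.
HYPERPARAMS = {
    "learning_rate": 5e-5,
    "batch_size": 2,
    "n_epochs": 40,
    "augmentation": ["rotation", "scaling", "color"],
}


def split_patches(patch_ids, n_train=36, n_val=6, seed=0):
    """Reproducibly shuffle patch ids and cut them into train/validation sets.

    Any ids beyond n_train + n_val are returned separately in `rest`
    instead of being silently added to either set.
    """
    ids = list(patch_ids)
    if n_train + n_val > len(ids):
        raise ValueError("not enough patches for the requested split")
    random.Random(seed).shuffle(ids)
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]
```

Called on 46 patch ids, it returns 36 training ids and 6 validation ids and leaves 4 ids in `rest`, since 36 + 6 = 42.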

- Prediction

Running the trained model on the test set gives the predicted Old City region, and the contour of this region is the wall.
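Extracting the wall from the predicted region amounts to taking the boundary of a binary mask. In practice a library routine such as OpenCV's `cv2.findContours` would typically be used. The pure-Python sketch below only illustrates the idea: a region pixel counts as a contour pixel when one of its 4-neighbors is background or lies off the image.

```python
def mask_contour(mask):
    """Return the (row, col) positions of boundary pixels of a 0/1 mask.

    A pixel is on the contour if it belongs to the region and at least one
    of its 4-neighbors lies outside the region or outside the image.
    """
    h, w = len(mask), len(mask[0])
    contour = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < h and 0 <= cc < w) or not mask[rr][cc]:
                    contour.append((r, c))
                    break
    return contour
```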

- Postprocessing

We removed minor noise by keeping only the largest connected region and then drew its contour. Overlaying the contour on the original image shows that it generally fits well, although there are some minor deviations.
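Keeping the largest connected region can be sketched with a breadth-first search over the predicted mask. The choice of 4-connectivity is an assumption. For real images, OpenCV's `cv2.connectedComponentsWithStats` provides the same operation.

```python
from collections import deque


def largest_component(mask):
    """Keep only the largest 4-connected region of 1-pixels in a 0/1 mask."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search collects one connected component.
            component, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) > len(best):
                best = component
    # Rebuild a clean mask containing only the largest component.
    cleaned = [[0] * w for _ in range(h)]
    for y, x in best:
        cleaned[y][x] = 1
    return cleaned
```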

Wall Alignment

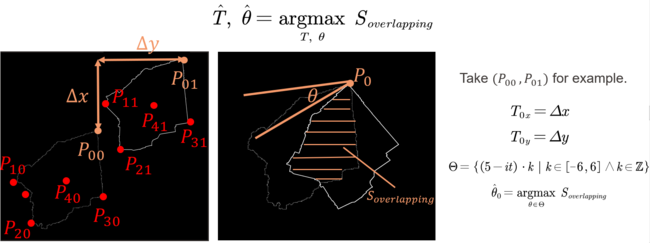

After obtaining the wall contour, we fit the reference wall from OpenStreetMap onto it and obtain the transformation matrix. The algorithm is as follows.

- Scaling

First, we apply an approximate scaling so that the two walls have nearly the same size. Because the walls are not yet oriented the same way at this stage, this scaling is not accurate enough, so we scale again after the rotation step. The translation, rotation, and scaling steps could be repeated iteratively, but a single pass already gives a good result here.
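The text does not say how the approximate scale factor is computed. This sketch assumes the ratio of axis-aligned bounding-box areas. A bounding box changes as a polygon rotates, so such a factor is only approximate until the two walls share an orientation, which is consistent with scaling again after the rotation step. Polygons are lists of (x, y) vertices, and `approx_scale` is a hypothetical helper.

```python
import math


def bbox(poly):
    """Axis-aligned bounding box (xmin, ymin, xmax, ymax) of a polygon."""
    xs = [x for x, _ in poly]
    ys = [y for _, y in poly]
    return min(xs), min(ys), max(xs), max(ys)


def approx_scale(poly, ref):
    """Scale poly about its bounding-box center so that its bounding-box
    area matches that of ref. Returns (scaled polygon, scale factor k)."""
    x0, y0, x1, y1 = bbox(poly)
    rx0, ry0, rx1, ry1 = bbox(ref)
    k = math.sqrt(((rx1 - rx0) * (ry1 - ry0)) / ((x1 - x0) * (y1 - y0)))
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    return [(cx + k * (x - cx), cy + k * (y - cy)) for x, y in poly], k
```

Scaling a 2 × 2 square towards a 4 × 4 reference gives k = 2.0, and the square grows about its center (1, 1).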

- Translation & Rotation

We iterate over the set of key point pairs, selecting each in turn as the reference pair. For each reference pair, we apply translation and rotation to maximize the overlapping area of the two polygons.

The translation and rotation step searches for the translation and rotation angle that maximize the overlapping area of the two walls. Searching translations pixel by pixel and angles degree by degree is computationally expensive, so we narrow down the search space. For translation, we only consider the translations that make the two points of a key point pair coincide, for each of the pairs (P_{00}, P_{01}), (P_{10}, P_{11}), (P_{20}, P_{21}), and (P_{30}, P_{31}). More key points, such as the centroids, could also be used. For each candidate translation, we keep the key point fixed and rotate the wall around it from -30 to 30 degrees in 5-degree steps, computing the overlapping area at each angle.

To compute the overlapping area, we use the flood fill algorithm to fill the region bounded by each wall. A logical AND of the two filled regions gives the overlapping region, and its number of non-zero pixels is taken as the overlapping area. After all iterations, the configuration with the maximal overlapping area gives the transformation matrix.
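The search described above can be sketched end to end in pure Python. Masks are represented as sets of (x, y) pixels, so set intersection plays the role of the logical AND and its size is the overlapping area. The interior of each wall is obtained by flood-filling the outside from the image border and taking the complement. The sketch makes three assumptions: each key point pair is given as (point on the fixed wall, point on the moving wall), both polygons fit inside a w × h canvas, and all helper names are hypothetical. For real map sizes, `cv2.fillPoly` or `cv2.floodFill` on NumPy arrays would be far faster.

```python
import math
from collections import deque


def outline(poly, w, h):
    """Rasterize the closed polygon boundary into an 8-connected set of (x, y) pixels."""
    pixels = set()
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        n = max(1, math.ceil(max(abs(x2 - x1), abs(y2 - y1))))
        for i in range(n + 1):
            # Steps of at most one pixel plus floor(v + 0.5) rounding never skip a pixel.
            x = math.floor(x1 + (x2 - x1) * i / n + 0.5)
            y = math.floor(y1 + (y2 - y1) * i / n + 0.5)
            if 0 <= x < w and 0 <= y < h:
                pixels.add((x, y))
    return pixels


def filled(poly, w, h):
    """Interior plus boundary of the polygon: flood-fill the outside, keep the rest."""
    wall = outline(poly, w, h)
    seeds = [(x, y) for x in range(w) for y in range(h)
             if (x in (0, w - 1) or y in (0, h - 1)) and (x, y) not in wall]
    outside, queue = set(seeds), deque(seeds)
    while queue:
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            # A 4-connected fill cannot leak through an 8-connected outline.
            if 0 <= nx < w and 0 <= ny < h and (nx, ny) not in wall and (nx, ny) not in outside:
                outside.add((nx, ny))
                queue.append((nx, ny))
    return {(x, y) for x in range(w) for y in range(h)} - outside


def rotate(poly, center, deg):
    """Rotate polygon vertices by deg degrees about center."""
    a = math.radians(deg)
    ca, sa = math.cos(a), math.sin(a)
    cx, cy = center
    return [(cx + ca * (x - cx) - sa * (y - cy), cy + sa * (x - cx) + ca * (y - cy))
            for x, y in poly]


def align(moving, fixed, pairs, w, h, angles=range(-30, 31, 5)):
    """Grid search over key point pairs and angles that maximizes the overlap.

    Returns (best overlapping area, 3x3 homogeneous matrix mapping moving onto fixed).
    """
    target = filled(fixed, w, h)
    best_area, best_matrix = -1, None
    for p_fix, p_mov in pairs:
        # Candidate translation: make the two key points coincide.
        dx, dy = p_fix[0] - p_mov[0], p_fix[1] - p_mov[1]
        shifted = [(x + dx, y + dy) for x, y in moving]
        for deg in angles:
            candidate = rotate(shifted, p_fix, deg)
            # Set intersection = logical AND of the filled masks; its size is the area.
            area = len(filled(candidate, w, h) & target)
            if area > best_area:
                a = math.radians(deg)
                ca, sa = math.cos(a), math.sin(a)
                # Combined transform: x' = R (x - p_mov) + p_fix
                tx = p_fix[0] - (ca * p_mov[0] - sa * p_mov[1])
                ty = p_fix[1] - (sa * p_mov[0] + ca * p_mov[1])
                best_area = area
                best_matrix = [[ca, -sa, tx], [sa, ca, ty], [0.0, 0.0, 1.0]]
    return best_area, best_matrix
```

As an example, take a square that is shifted by (-3, 2) relative to the fixed square. The search recovers a zero rotation, with the translation (3, -2) in the last column of the matrix.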

Results

Wall Extraction

In the wall extraction step, the neural network generally gives good predictions, but it still does not work well for some maps. For some maps, the predicted region shows spurious concavities and convexities, which may be due to underfitting or overfitting. For others, the model fails to make any prediction at all, possibly because our training set lacks samples with features similar to these maps.

Wall Alignment

We plotted the overlapping area ratio against the year of each map and found that more recent maps are aligned better.

We also added the main road from OpenStreetMap to the wall and repeated the alignment. This result likewise shows that more recent maps are aligned better.

Project Plan and Milestones

Milestones

- Survey semantic segmentation and registration algorithms, get familiar with our dataset, and decide which feature to use for alignment.

- Preprocess the dataset.

- Annotate the maps and use dhSegment for segmentation.

- Design and implement the alignment algorithms.

- Connect the processing steps and make the whole process a pipeline.

Plans

| Date | Task | Completion |
|---|---|---|
| By Week 4 | | ✓ |
| By Week 6 | | ✓ |
| By Week 8 | | ✓ |
| By Week 10 | | ✓ |
| By Week 11 | | -- |
| By Week 12 | | -- |
| By Week 13 | | -- |
| By Week 14 | | -- |

Future Work

- Fine-tune the model for better wall segmentation performance;

- Improve the alignment algorithm to be more robust to poor segmentation results;

- Support road extraction and alignment (tentative).