Sanudo's Diary: Difference between revisions

No edit summary |

No edit summary |

||

| (9 intermediate revisions by 2 users not shown) | |||

| Line 33: | Line 33: | ||

<div style="display: flex; justify-content: center; align-items: flex-start; gap: 10px; margin-bottom: 20px;"> | <div style="display: flex; justify-content: center; align-items: flex-start; gap: 10px; margin-bottom: 20px;"> | ||

<div style="display: flex; justify-content: center; width: 50%;"> | <div style="display: flex; justify-content: center; width: 50%;"> | ||

[[File:SanudoImage.jpg|thumb|Sanudo]] | [[File:SanudoImage.jpg|thumb|Marin Sanudo]] | ||

</div> | </div> | ||

<div style="display: flex; justify-content: center; width: 50%;"> | <div style="display: flex; justify-content: center; width: 50%;"> | ||

| Line 39: | Line 39: | ||

|+ <span style="white-space: nowrap;">Sanudo's Background Information</span> | |+ <span style="white-space: nowrap;">Sanudo's Background Information</span> | ||

|- | |- | ||

| Status || Venetian historian, author and diarist. Aristocrat. | | Status || Venetian historian, author and diarist. Aristocrat. | ||

|- | |- | ||

| Occupation || Historian | | Occupation || Historian | ||

|- | |- | ||

| Life || 22 May 1466 - † 4 April 1536 | | Life || 22 May 1466 - † 4 April 1536 | ||

|- | |- | ||

|} | |} | ||

| Line 82: | Line 82: | ||

</div> | </div> | ||

<br> | <br> | ||

Sanudo's Diary is organized as a 58-volumne diary | |||

== Methodology == | |||

=== Generating Place Entities === | |||

The first task we had was extracting the relevant place entities from the OCR generated text file of Sanudo's index. This was essential because our eventual goal was to visualize name and place entities (i.e. important places and names documented by Sanudo) from the index onto a map of Venice. For this, we needed to filter out the place entities that existed only in Venice (not outside of it), and link them to co-ordinates to display on a map. Furthermore, each place corresponded to one or more "indices", i.e. columns in which the place name appeared in Sanudo's diaries. | |||

<b>1. Pre-processing OCR generated text file</b><br> | |||

The text file was sourced from the Internet Archive. We processed it to split the content into columns by identifying a specific text pattern. In summary, the columns are delineated by 2x3 line breaks and two page numbers, which served as key markers for the division. | |||

The place entities exist in the diary in the form of an indexed list at the end of certain columns. We extracted a list of place entities by | |||

<b>2. Place name verification pipeline</b><br> | |||

We identified three APIs which we could use to locate whether an entity exists in Venice or not. These were: Nominatim (OpenStreetMap), WikiData, and Geonames. We created a pipeline to extract place entities from the Sanudo's index and use the three APIs to search if the entity exists in Venice. If any of the three APIs found a match for the same place in Venice, we stored the id, alternative names, coordinates and place indices. Below is an example of the resulting JSON for "Marano": | |||

<pre> | |||

{ | |||

"id": 21, | |||

"place_name": "Marano", | |||

"place_alternative_name": [ | |||

"FrìuliX" | |||

], | |||

"place_index": [ | |||

"496", | |||

"497", | |||

"546", | |||

"550", | |||

"554", | |||

"556", | |||

"557", | |||

"584" | |||

], | |||

"nominatim_coords": [ | |||

"45.46311235", | |||

"12.120517939849389" | |||

], | |||

"geodata_coords": null, | |||

"wikidata_coords": null, | |||

"nominatim_match": true, | |||

"geodata_match": false, | |||

"wikidata_match": false, | |||

"latitude": "45.46311235", | |||

"longitude": "12.120517939849389", | |||

"agreement_count": 1 | |||

} | |||

</pre> | |||

We analyzed which of the three APIs could successfully determine whether the place entities extracted from Sanudo's diary were in Venice. One challenge we faced was the disambiguation of place names. Many place names could have changed over time or referred to multiple locations. To resolve this, we manually checked for ambiguities and then refined the place extraction pipeline to eliminate conflicts in the results. For instance, several places had an alternative name to be "veneziano", which had to be removed from the search query to the API. Furthermore, the Geodata API mistakenly included some locations in Greece in the final result of places in "Venice". We added special cases in the place_extraction pipeline to cover these errors. | |||

The next step is to match each instance of a place appearing in the text by extracting the specific paragraph that contains the place name. If a "paragraph," as determined in the earlier steps, contains the place name, we refine the extraction to ensure it represents the full, actual paragraph. This involves checking whether the "paragraph" might be part of the same larger paragraph as adjacent "paragraphs" in either the next or previous column. We also achieved this through a simple pipeline that involves determining whether the matching "paragraph" is the first or last file in the column's directory. In the case of the former, whether the immediately preceding "paragraph" ends with a period; in the case of the latter, whether the "paragraph" itself ends with a period. | |||

=== Paragraph Matching Methods === | |||

To identify all paragraphs containing a specific place_alias for each combination of ([place_name + alternative_name] x place_index) corresponding to a given place, we retrieve all paragraphs within each column in the place_index and apply five matching methods to compare the place_alias with each paragraph. Each method scans slices of the text within the paragraph, adjusting the slice length to be one character shorter than, equal to, or one character longer than the length of the place_alias. The methods are as follows: | |||

<ul> <li><b>Exact Match</b>: Compares slices of the text directly with the *place_alias*. A match is successful if the slice matches the *place_alias* exactly, ignoring differences in capitalization.</li> <li><b>Regular Expression Match</b>: Treats the *place_alias* as a pattern and checks the entire paragraph to see if it matches the pattern exactly, without considering case sensitivity.</li> <li><b>FuzzyWuzzy Match</b>: Uses a similarity score to compare the *place_alias* with slices of the text. If the similarity score exceeds a predefined threshold, it counts as a match. This method allows for approximate matches while disregarding case differences.</li> <li><b>Difflib Match</b>: Calculates a similarity ratio between the *place_alias* and slices of the text. If the ratio meets or exceeds a predefined threshold, it considers the match successful, tolerating small variations between the two.</li> <li><b>FuzzySearch Match</b>: Identifies approximate matches by allowing a specific number of character differences (referred to as "distance") between the *place_alias* and slices of the text. A match is confirmed if any such approximate matches are found.</li> </ul> | |||

<b>Note:</b> Cosine similarity was considered but abandoned due to its tendency to overlook fuzzy matches and its disproportionately high processing time compared to other methods.<br> | |||

=== Associating Person Names with Place Names === | |||

As a further step, we identify person names associated with each place name. For the current feasibility of the project, we adopt a straightforward criterion: <i>person names that appear in the same paragraph as a given place name are considered "associated" with that place.</i> <br><br> | |||

For now, we deliberately avoid using the human name index from the document or constructing database entities specifically for place names. Instead, we attach extracted names directly to a field in the database row corresponding to each paragraph. This decision is driven by concerns that: | |||

<ul> <li>Names appearing in a paragraph might not appear in the name index, or vice versa.</li> <li>Different individuals might share the same name, complicating the association.</li> </ul> | |||

Database Output Format | |||

The resulting database relation table will document all names associated with a place name and their specific context. The table format is as follows:<br> | |||

<b>Place_name_id, Paragraph, People_names</b><br><br> | |||

This format ensures flexibility while maintaining sufficient detail for further analysis.<br> | |||

=== Database Entity === | |||

We create a database with the following schema to define a place entity. The database consists of three tables, as below: | |||

1. <code>places</code> | |||

{| class="wikitable" | |||

! Column Name !! Data Type !! Not Null !! Default Value !! Primary Key !! Description | |||

|- | |||

| id || INTEGER || YES || NULL || YES || A unique identifier for each place in the database. | |||

|- | |||

| place_name || TEXT || YES || NULL || NO || The name of the place (e.g., city, town, landmark). | |||

|- | |||

| latitude || REAL || YES || NULL || NO || The latitude of the place in decimal degrees (geographical coordinate). | |||

|- | |||

| longitude || REAL || YES || NULL || NO || The longitude of the place in decimal degrees (geographical coordinate). | |||

|} | |||

2. <code>alternative_names</code> | |||

{| class="wikitable" | |||

! Column Name !! Data Type !! Not Null !! Default Value !! Primary Key !! Description | |||

|- | |||

| id || INTEGER || YES || NULL || YES || A unique identifier for each alternative name entry. | |||

|- | |||

| place_id || INTEGER || YES || NULL || NO || The ID of the place from the `places` table, linking alternative names to places. | |||

|- | |||

| alternative_name || TEXT || YES || NULL || NO || An alternative or historical name for the place. | |||

|} | |||

3. <code>place_indexes</code> | |||

{| class="wikitable" | |||

! Column Name !! Data Type !! Not Null !! Default Value !! Primary Key !! Description | |||

|- | |||

| id || INTEGER || YES || NULL || YES || A unique identifier for each place index entry. | |||

|- | |||

| place_id || INTEGER || YES || NULL || NO || The ID of the place from the `places` table, linking indexes to places. | |||

|- | |||

| place_index || INTEGER || YES || NULL || NO || An index or reference number associated with the place (e.g., historical, geographical, or catalog number). | |||

|} | |||

==Project Milestones== | ==Project Milestones== | ||

| Line 158: | Line 273: | ||

</ul> | </ul> | ||

</div> | </div> | ||

==Results== | ==Results== | ||

| Line 214: | Line 278: | ||

==Conclusion== | ==Conclusion== | ||

== References == | == References== | ||

Frontend references:<br> | Frontend references:<br> | ||

https://pov-dev.up.railway.app/ (development version)<br> | https://pov-dev.up.railway.app/ (development version)<br> | ||

Latest revision as of 12:27, 9 December 2024

Click to go back to Project lists

Our Github Page

Link to Google Doc

Diaries

Frontend

Introduction

The main work done in this project is to convert the geoname entities in the index of <Sanodo's diary> into digital form.

For the ultimate goal, it is to present the named entities in diary in an interactive way, e.g. map-based website. georeference the useful name entities with Venice places. Aiming to provide an immersive experience that allows users to explore the diary in a spatially interactive format, deepening their engagement with the historical narrative.

About Sanudo

| Status | Venetian historian, author and diarist. Aristocrat. |

| Occupation | Historian |

| Life | 22 May 1466 - † 4 April 1536 |

Sanudo's Diary

The Diaries of Marin Sanudo represent one of the most comprehensive daily records of events ever compiled by a single individual in early modern Europe. They offer insights into various aspects of Venetian life, from "diplomacy to public spectacles, politics to institutional practices, state councils to public opinion, mainland territories to overseas possessions, law enforcement to warfare, the city's landscape to the lives of its inhabitants, and from religious life to fashion, prices, weather, and entertainment".[2]

English selection & comment of Diary

Transforming Sanudo’s index (1496 - 1533)

| Diary Duration | 1496-1533 | 37 |

| Quantity | 58 Volumes | around 40000 pages |

| Content Style | deal with any matter | regardless of its ‘importance’ |

"...the continuity of events and institutions collapses into the quotidian. ...Unreflecting, pedantic, and insatiable, he aimed "to seek out every occurrence, no matter how slight," for he believed that the truth of events could only be grasped through an abundance of facts. 18 He gathered those facts in the chancellery of the Ducal Palace and in the streets of the city, transcribing official legislation and ambassadorial dispatches, reporting popular opinion and Rialto gossip"[1]

Sanudo's Diary is organized as a 58-volumne diary

Methodology

Generating Place Entities

The first task we had was extracting the relevant place entities from the OCR generated text file of Sanudo's index. This was essential because our eventual goal was to visualize name and place entities (i.e. important places and names documented by Sanudo) from the index onto a map of Venice. For this, we needed to filter out the place entities that existed only in Venice (not outside of it), and link them to co-ordinates to display on a map. Furthermore, each place corresponded to one or more "indices", i.e. columns in which the place name appeared in Sanudo's diaries.

1. Pre-processing OCR generated text file

The text file was sourced from the Internet Archive. We processed it to split the content into columns by identifying a specific text pattern. In summary, the columns are delineated by 2x3 line breaks and two page numbers, which served as key markers for the division.

The place entities exist in the diary in the form of an indexed list at the end of certain columns. We extracted a list of place entities by

2. Place name verification pipeline

We identified three APIs which we could use to locate whether an entity exists in Venice or not. These were: Nominatim (OpenStreetMap), WikiData, and Geonames. We created a pipeline to extract place entities from the Sanudo's index and use the three APIs to search if the entity exists in Venice. If any of the three APIs found a match for the same place in Venice, we stored the id, alternative names, coordinates and place indices. Below is an example of the resulting JSON for "Marano":

{

"id": 21,

"place_name": "Marano",

"place_alternative_name": [

"FrìuliX"

],

"place_index": [

"496",

"497",

"546",

"550",

"554",

"556",

"557",

"584"

],

"nominatim_coords": [

"45.46311235",

"12.120517939849389"

],

"geodata_coords": null,

"wikidata_coords": null,

"nominatim_match": true,

"geodata_match": false,

"wikidata_match": false,

"latitude": "45.46311235",

"longitude": "12.120517939849389",

"agreement_count": 1

}

We analyzed which of the three APIs could successfully determine whether the place entities extracted from Sanudo's diary were in Venice. One challenge we faced was the disambiguation of place names. Many place names could have changed over time or referred to multiple locations. To resolve this, we manually checked for ambiguities and then refined the place extraction pipeline to eliminate conflicts in the results. For instance, several places had an alternative name to be "veneziano", which had to be removed from the search query to the API. Furthermore, the Geodata API mistakenly included some locations in Greece in the final result of places in "Venice". We added special cases in the place_extraction pipeline to cover these errors.

The next step is to match each instance of a place appearing in the text by extracting the specific paragraph that contains the place name. If a "paragraph," as determined in the earlier steps, contains the place name, we refine the extraction to ensure it represents the full, actual paragraph. This involves checking whether the "paragraph" might be part of the same larger paragraph as adjacent "paragraphs" in either the next or previous column. We also achieved this through a simple pipeline that involves determining whether the matching "paragraph" is the first or last file in the column's directory. In the case of the former, whether the immediately preceding "paragraph" ends with a period; in the case of the latter, whether the "paragraph" itself ends with a period.

Paragraph Matching Methods

To identify all paragraphs containing a specific place_alias for each combination of ([place_name + alternative_name] x place_index) corresponding to a given place, we retrieve all paragraphs within each column in the place_index and apply five matching methods to compare the place_alias with each paragraph. Each method scans slices of the text within the paragraph, adjusting the slice length to be one character shorter than, equal to, or one character longer than the length of the place_alias. The methods are as follows:

- Exact Match: Compares slices of the text directly with the *place_alias*. A match is successful if the slice matches the *place_alias* exactly, ignoring differences in capitalization.

- Regular Expression Match: Treats the *place_alias* as a pattern and checks the entire paragraph to see if it matches the pattern exactly, without considering case sensitivity.

- FuzzyWuzzy Match: Uses a similarity score to compare the *place_alias* with slices of the text. If the similarity score exceeds a predefined threshold, it counts as a match. This method allows for approximate matches while disregarding case differences.

- Difflib Match: Calculates a similarity ratio between the *place_alias* and slices of the text. If the ratio meets or exceeds a predefined threshold, it considers the match successful, tolerating small variations between the two.

- FuzzySearch Match: Identifies approximate matches by allowing a specific number of character differences (referred to as "distance") between the *place_alias* and slices of the text. A match is confirmed if any such approximate matches are found.

Note: Cosine similarity was considered but abandoned due to its tendency to overlook fuzzy matches and its disproportionately high processing time compared to other methods.

Associating Person Names with Place Names

As a further step, we identify person names associated with each place name. For the current feasibility of the project, we adopt a straightforward criterion: person names that appear in the same paragraph as a given place name are considered "associated" with that place.

For now, we deliberately avoid using the human name index from the document or constructing database entities specifically for place names. Instead, we attach extracted names directly to a field in the database row corresponding to each paragraph. This decision is driven by concerns that:

- Names appearing in a paragraph might not appear in the name index, or vice versa.

- Different individuals might share the same name, complicating the association.

Database Output Format

The resulting database relation table will document all names associated with a place name and their specific context. The table format is as follows:

Place_name_id, Paragraph, People_names

This format ensures flexibility while maintaining sufficient detail for further analysis.

Database Entity

We create a database with the following schema to define a place entity. The database consists of three tables, as below:

1. places

| Column Name | Data Type | Not Null | Default Value | Primary Key | Description |

|---|---|---|---|---|---|

| id | INTEGER | YES | NULL | YES | A unique identifier for each place in the database. |

| place_name | TEXT | YES | NULL | NO | The name of the place (e.g., city, town, landmark). |

| latitude | REAL | YES | NULL | NO | The latitude of the place in decimal degrees (geographical coordinate). |

| longitude | REAL | YES | NULL | NO | The longitude of the place in decimal degrees (geographical coordinate). |

2. alternative_names

| Column Name | Data Type | Not Null | Default Value | Primary Key | Description |

|---|---|---|---|---|---|

| id | INTEGER | YES | NULL | YES | A unique identifier for each alternative name entry. |

| place_id | INTEGER | YES | NULL | NO | The ID of the place from the `places` table, linking alternative names to places. |

| alternative_name | TEXT | YES | NULL | NO | An alternative or historical name for the place. |

3. place_indexes

| Column Name | Data Type | Not Null | Default Value | Primary Key | Description |

|---|---|---|---|---|---|

| id | INTEGER | YES | NULL | YES | A unique identifier for each place index entry. |

| place_id | INTEGER | YES | NULL | NO | The ID of the place from the `places` table, linking indexes to places. |

| place_index | INTEGER | YES | NULL | NO | An index or reference number associated with the place (e.g., historical, geographical, or catalog number). |

Project Milestones

2024/10/10 Week 3

Start work on project. Conducted background information research and clarified project goals.Reviewed related articles.

- Goals:

- Obtain diary text and extract place names.

- Match person names with place names.

- Link named entities to specific diary content.

- Planned integration with the Venice interactive map frontend.

2024/11/14 Week 9

Midterm presentation completed.

- Summary:

- Current Progress:

- Data processing: Data obtaining, index extraction, column extraction

- Filtering: Venice-name verification pipeline

- Future Plan:

- Handle data discrepancies

- Extract people names associated with place entities

- Embed information into a map

- Current Progress:

- Next Steps:

- Week 10: Extract people names associated with place entities

- Week 11: Create GIS-compatible format for pipeline output

- Week 12 (optional): Test integration with Venice front-end

- Week 13 (optional): Add context for name-place pairing

2024/12/5 Week 12

Till now, the main task is almost completed.

- Current Progress:

- Place Index Navigator: can now match and go back to the related paragraph with a given gname entity.

- Data structure (geoname, column number, related paragraph)built in csv format

- Italian name extraction: can now extract all Italian name entities from a text paragraph.

- Refined the Geoname verification pipeline, fixed several bugs

- Next steps:

- Work visualization

- Embed information into a map

Results

Conclusion

References

Frontend references:

https://pov-dev.up.railway.app/ (development version)

https://pov.up.railway.app/

Interactive(?) book:

https://valley.newamericanhistory.org/

Document references:

[1] Finlay, Robert. “Politics and History in the Diary of Marino Sanuto.” Renaissance Quarterly, vol. 33, no. 4, 1980, pp. 585–98. JSTOR, https://doi.org/10.2307/2860688. Accessed 10 Oct. 2024.

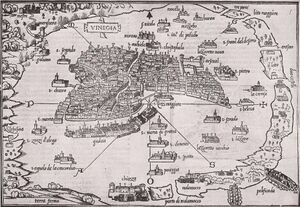

[2]Image source: https://evolution.veniceprojectcenter.org/evolution.html

[3]Ferguson, Ronnie. “The Tax Return (1515) of Marin Sanudo: Fiscality, Family, and Language in Renaissance Venice.” Italian Studies 79, no. 2 (2024): 137–54. doi:10.1080/00751634.2024.2348379.