Generation of Textual Description for Parcels


| 09.12 - 15.12 || Write the wikipage <br> Organize GitHub code <br> Prepare for the final presentation || Done
|-
| 16.12 - 22.12 || Deliver GitHub repository and wikipage (18.12) <br> Final presentation (19.12) || Done
|}
</div>

====Evaluation Criteria for Summary Paragraph====

Since the summary is a comprehensive description based on the Catastici and Sommarioni descriptions, it is difficult to evaluate each detailed property individually. We therefore grouped all properties into three major categories. The first category includes information related to the parcel itself, such as geographic location, administrative region, function, and type. The second category pertains to the owner, encompassing details such as the owner's name, identity, and relationships. The third category covers rental-related information, including tenants and rental payments.

In evaluating the summaries, we focus on whether the new descriptions logically and reasonably explain the changes, differences, and consistencies between the two time periods, while aligning with common sense and contextual logic. Therefore, we chose plausibility as the primary metric for this evaluation.

For each generated description, evaluate whether it:

*1: If the description is logical, reasonable, and aligns well with the context and common sense.

The evaluation metrics are shown below.

<div style="display: flex; justify-content: center;">
|+ Evaluation Metrics for Summary
|-
! !! ParcelInfo !! OwnerInfo !! RentalInfo
|-
| plausibility || 0 or 1 || 0 or 1 || 0 or 1
|}
</div>

*4 (Good): The translation is mostly accurate, clear, and reasonably fluent, with only minor issues in adherence to Chinese language norms.

*5 (Excellent): The translation is both accurate and highly fluent, seamlessly adhering to Chinese linguistic norms and providing an easy-to-understand and natural reading experience.

===Test Inter-Annotator Agreement===

===Evaluation of Catastici Description===

----

During the process of generating descriptions for Catastici and Sommarioni, we conducted two rounds of evaluation in total. The first evaluation revealed several shortcomings in the prompt design and data formatting. Based on these findings, we made targeted improvements and corrections, which led to significant gains in the second evaluation.

'''Misleading Features in Missing Data''': After the first round of evaluation, we observed that large language models (LLMs) often fabricate information for missing features, despite explicit instructions in the prompt prohibiting such behavior. To address this, we removed missing features entirely from the data provided to the LLM. We also stated more explicitly in the prompt that fabricated information is strictly prohibited, reducing the likelihood of hallucinated content.
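Below is a minimal Python sketch of this filtering step. It assumes that each parcel record is a dictionary serialized to JSON for the prompt, and that missing values appear as `None`, NaN, empty strings, or the `"-"` placeholder. The function names and the sample record are hypothetical and are not taken from the project's repository.

```python
import json
import math

# Placeholders treated as "missing" in this sketch (assumption; "-" is the
# unknown-name marker described for the Catastici owner_first_name field).
MISSING_MARKERS = {"", "-", "nan", "none", "null"}


def is_missing(value):
    """Return True if a field value should be hidden from the LLM."""
    if value is None:
        return True
    if isinstance(value, float) and math.isnan(value):
        return True
    if isinstance(value, str) and value.strip().lower() in MISSING_MARKERS:
        return True
    if isinstance(value, (list, dict)) and not value:
        return True
    return False


def drop_missing(record):
    """Recursively remove missing fields (and containers left empty)."""
    if isinstance(record, dict):
        cleaned = {}
        for key, value in record.items():
            value = drop_missing(value)
            if not is_missing(value):
                cleaned[key] = value
        return cleaned
    if isinstance(record, list):
        kept = (drop_missing(item) for item in record)
        return [item for item in kept if not is_missing(item)]
    return record


# Hypothetical parcel record; only non-missing fields reach the prompt.
parcel = {
    "place": "San Marco",
    "function": "house",
    "owner_first_name": "-",
    "owner_family_name": "Rossi",
    "tenant_name": None,
    "rent": float("nan"),
}
prompt_data = json.dumps(drop_missing(parcel), ensure_ascii=False)
print(prompt_data)
# {"place": "San Marco", "function": "house", "owner_family_name": "Rossi"}
```

Because the keys are dropped before serialization, the model never sees an empty field that it might be tempted to "fill in". The prompt wording still has to forbid fabrication separately.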

</div>

The evaluation scores and the inter-annotator agreement results are shown below. The Inter-Annotation Agreement across both rounds of evaluation largely exceeded 0.95, indicating a high level of reliability in the evaluation results.

Prompt 1 was used in the initial evaluation round. During this round, the accuracy and conciseness scores for the owner's title and job, along with the accuracy of location and the conciseness of payment, were notably low. After refining both the dataset and the prompt (Prompt 2), we observed significant improvements in the performance of these properties.

<div style="display: flex; justify-content: center;">
|+ Inter-Annotation Agreement for Catastici Description
|-
! style="width: 14%;" | !! Location !! Function !! Owners' Name !! Owners' Title & Job !! Tenant !! Payment
|-
| Prompt1-Accuracy || 0.95 || 1.0 || 0.95 || 0.9 || 1.0 || 0.95
|-
| Prompt2-Accuracy || 1.0 || 1.0 || 0.875 || 1.0 || 1.0 || 0.975
|-
| Prompt1-Conciseness || 1.0 || 1.0 || 1.0 || 0.2 || 1.0 || 0.275
|-
| Prompt2-Conciseness || 1.0 || 1.0 || 0.6 || 1.0 || 1.0 || 0.975
|}
</div>

<div style="text-align: center;">
[[File:Catastici_radar_chart_r1r2.png|900px|Catastici Evaluation]]
</div>

Here is a Catastici description compared between Round 1 and Round 2. The bolded brown sections highlight the parts that affected the quality of the description. After we restructured the JSON and refined the template, the description became more concise: unnecessary content was significantly reduced and the misunderstandings were resolved.

<div style="text-align: center;">
[[File:catastici_compare.png|900px|Catastici example]]
</div>

===Evaluation of Sammarioni Description=== | ===Evaluation of Sammarioni Description=== | ||

---- | |||

After our first round of description generation and evaluation, we discover several problems about the sommarioni paragraph. | After our first round of description generation and evaluation, we discover several problems about the sommarioni paragraph. | ||

| Line 490: | Line 498: | ||

</div> | </div> | ||

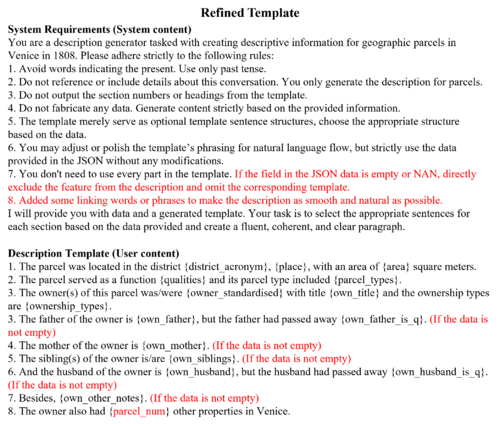

After evaluating the performance of Prompt 1 used in Round 1, we identified its primary weakness in terms of conciseness, particularly for the properties of owner family and ownership. Therefore, we focused on enhancing conciseness in Prompt 2. | The Inter-Annotation Agreement across both rounds of evaluation largely exceeded 0.9, indicating a high level of reliability in the evaluation results. After evaluating the performance of Prompt 1 used in Round 1, we identified its primary weakness in terms of conciseness, particularly for the properties of owner family and ownership. Therefore, we focused on enhancing conciseness in Prompt 2. | ||

<div style="display: flex; justify-content: center;">
| Prompt2-Accuracy || 0.925 || 1.0 || 0.825 || 0.95 || 0.975
|-
| Prompt1-Conciseness || 0.75 || 0.96875 || 0.65625 || 0.09375 || 0.75
|-
| Prompt2-Conciseness || 0.85 || 1.0 || 1.0 || 0.975 || 1.0
|}
</div>

<div style="text-align: center;">
[[File:Sommarioni_radar_chart_r1r2.png|900px|Sommarioni evaluation]]
</div>

Here is a Sommarioni description compared between Round 1 and Round 2. After the template was refined, the description became more concise: a significant amount of unnecessary data was removed and the misunderstanding issue was resolved.

<div style="text-align: center;">
[[File:s_example.png|900px|Sommarioni example]]
</div>

===Evaluation of Summary===

----

The Inter-Annotation Agreement for all properties exceeded 0.75, indicating a high level of consistency in the annotation results. The evaluation score for ownerInfo was the lowest at 0.75, likely because the diverse types of information it contains are complex and difficult to interpret. In contrast, the evaluation scores for parcelInfo and rentalInfo were both above 0.9, indicating that parcel and rental information were represented almost perfectly.

<div style="display: flex; justify-content: center;">

===Evaluation of Translation=== | ===Evaluation of Translation=== | ||

---- | ---- | ||

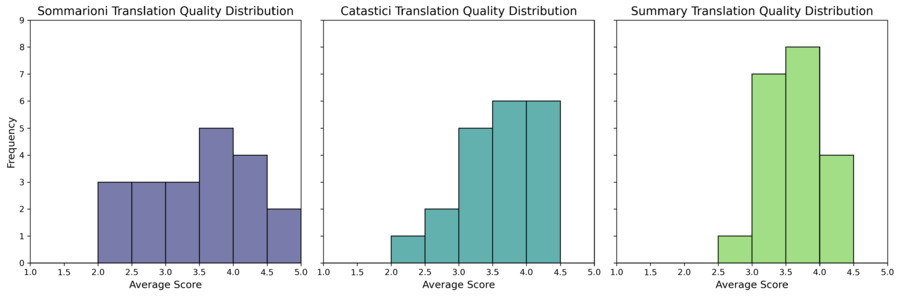

We used the average score from two annotators to represent the rating for each translation. The scores ranged from 2 to 5, indicating suboptimal performance. The main issues identified in the translation process are as follows: | |||

'''Inaccurate translation of proper nouns''': For example, S. Salvatore degli Incurabili was mistranslated due to a lack of contextual understanding. S. Salvatore refers to the "Holy Savior" while degli Incurabili literally means "of the Incurables". Historically, this phrase was associated with hospitals or charitable institutions in the Middle Ages or Renaissance that cared for patients with incurable diseases, such as leprosy or terminal illnesses. However, the large language model misinterpreted S. Salvatore as a personal name and rendered it phonetically in Chinese, while degli Incurabili was translated literally as "of the Incurables." These errors can lead to significant misunderstandings of the context. | |||

'''Cross-linguistic expression discrepancies''': Differences in language structures and grammar can create deviations in meaning. For instance, in the sentence The owner also had one other property in Venice, the word property was translated into Chinese as “物业.” However, “物业” primarily refers to property management organizations or personnel, which is inconsistent with the intended meaning referring to real estate. | |||

<div style="text-align: center;"> | <div style="text-align: center;"> | ||

[[File:Translation_result.png|900px| | [[File:Translation_result.png|900px|TranslationEvalution]] | ||

</div> | </div> | ||

===Results=== | ===Results=== | ||

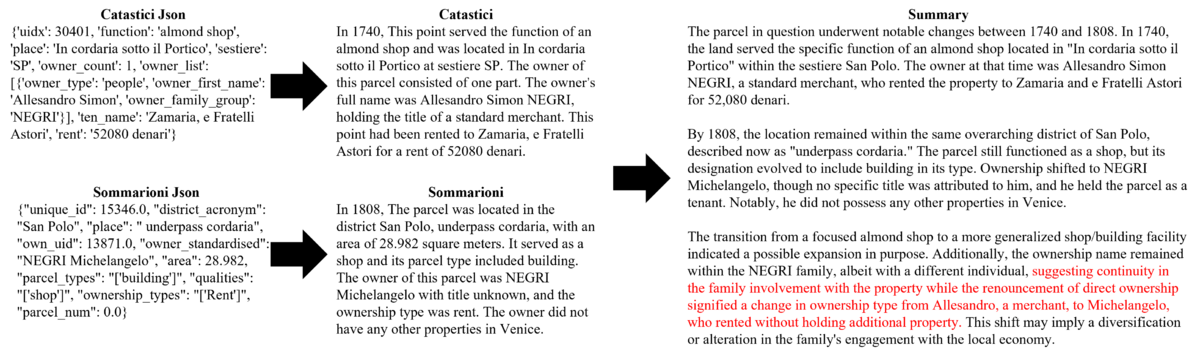

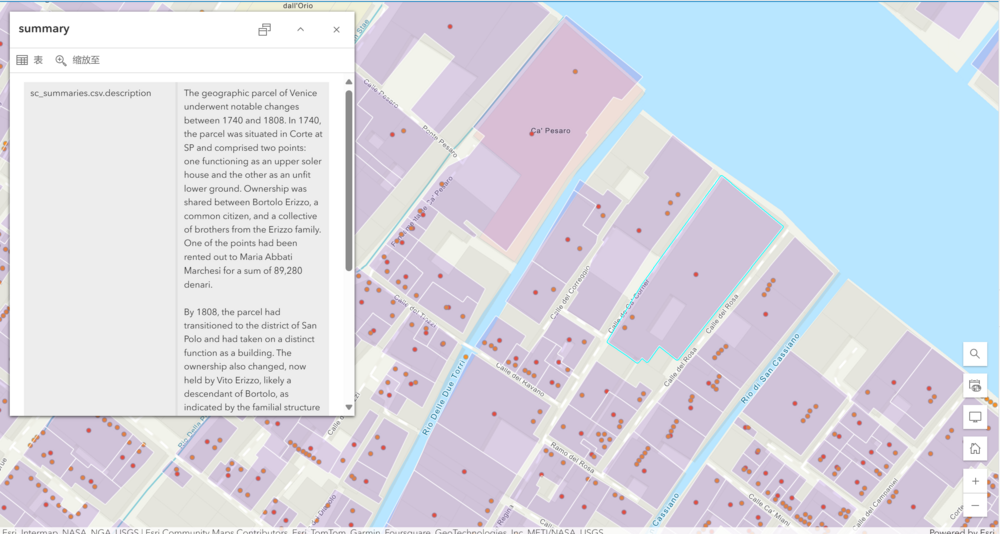

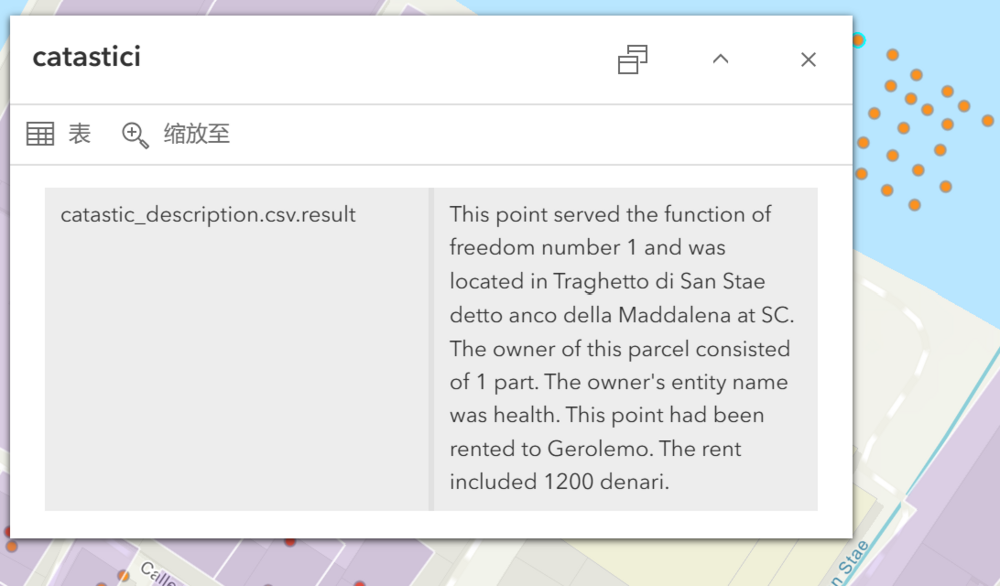

---- | Here is an example of our description. As shown in the picture below, the descriptions of the Catastici and Sommarioni datasets accurately reflect the original data. Furthermore, the summary paragraph effectively connects the two datasets. In this case, the owner of the parcel in both 1740 and 1808 shared the same family name, and the summary paragraph successfully highlights this connection, offering a reasonable hypothesis.. | ||

<div style="text-align: center;"> | |||

[[File:Description Example.png|1200px|An Example of Description]] | |||

</div> | |||

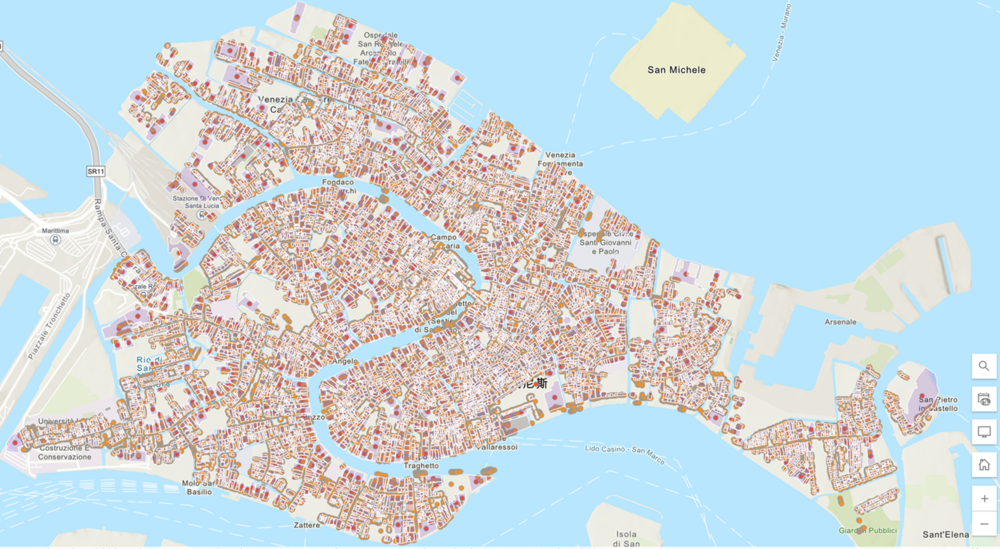

Additionally, to present our description more effectively, we used ArcGIS Online to create an interactive map, allowing users to view the description by clicking on the map. Below is an image of the online map. | |||

<div style="text-align: center;"> | |||

[[File:OnlineMap.png|1000px|Online Map]] | |||

</div> | |||

<div style="text-align: center;"> | |||

[[File:summary.png|1000px|An Example of Description]] | |||

</div> | |||

<div style="text-align: center;"> | |||

[[File:catastici_des.png|1000px|An Example of Description]] | |||

</div> | |||

[https://www.arcgis.com/home/webmap/viewer.html?webmap=acc03b68337f48a9902bf11a7901f3e3 Click here to view the interactive map] | |||

=Limitations and Future Work=

'''Prompt Template Refinement''':

As discussed in the previous section, we conducted only two rounds of refinement of our prompt template. The accuracy and conciseness of the Catastici and Sommarioni paragraphs, as well as the plausibility of the summary paragraph, can still be improved. Additionally, the descriptions are currently quite similar to one another, so designing different tones for them would make them more diverse and engaging.

'''Model Improvement''':

Due to budget limitations, we only used GPT-4o-mini to generate our descriptions and translations. This model has some limitations, particularly in translation. Our next step is therefore to involve other large language models, such as GPT-4o and T5, to address these issues.

'''Frontend Display''':

The current frontend cannot yet showcase all types of description information. Further testing is required to ensure usability and smooth interactions, and features such as search still need to be implemented to enhance the user experience.

=References=

Wiseman, S., Shieber, S. M., & Rush, A. M. (2017). Challenges in data-to-document generation. arXiv preprint arXiv:1707.08052. https://arxiv.org/abs/1707.08052v1

Mueller, R. C., & Lane, F. C. (2020). Money and Banking in Medieval and Renaissance Venice: Volume I: Coins and Moneys of Account. Baltimore: Johns Hopkins University Press. https://dx.doi.org/10.1353/book.72157

Günther, P. (n.d.). The Casanova Tour. Retrieved from https://giacomo-casanova.de/catour16.htm

Venetian lira. (n.d.). In Wikipedia. Retrieved December 18, 2024, from https://en.wikipedia.org/wiki/Venetian_lira

=GitHub Repositories=

'''Course: ''' Foundation of Digital Humanities (DH-405), EPFL </br>

'''Professor:''' [http://people.epfl.ch/frederic.kaplan Frédéric Kaplan]</br>

'''Supervisors: '''Alexander Rusnak, Céderic Viaccoz, Tommy Bruzzese, Tristan Karch</br>

'''Authors: ''' Ruyin Feng, Zhichen Fang

Latest revision as of 09:48, 19 December 2024

Introduction

The historical records of land management, cadastre, and taxation provide invaluable insights into the socio-economic and administrative evolution of regions over time. Among the most significant resources for understanding such systems in historical Venice are the Catastici (1740) and the Sommarioni (1808), two records that offer different perspectives on Venetian land parcels, their ownership, and their taxation structures, and that reflect shifts in governance and societal organization over time.

The Catastici, compiled in 1740, serves as a snapshot of Venetian land management under the Venetian Republic. It meticulously documents the ownership, division, and use of land parcels during a period of relative stability. This record provides a foundation for understanding how land was distributed and administered before the onset of major political upheavals.

The Sommarioni, written in 1808, emerged during a period of significant political and social disruption following the fall of the Venetian Republic and under Napoleonic rule. This document captures a transformed landscape, reflecting the influence of changing administrative structures, evolving property ownership patterns, and new taxation policies. It reveals a dynamic reorganization of Venetian society and the economic pressures that shaped the era.

Historical transactional data is often difficult to interpret and lacks a clear representation of relationships between records. This project aims to transform structured data from historical archives related to cadastral records and leases into vivid and intuitive natural language descriptions. In addition to creating accurate and comprehensive descriptions for Venetian parcels from each period, we will compare and connect data from the two eras. Through this approach, the project seeks to provide new insights into the evolution of Venice’s administrative and socioeconomic framework.

Project Milestones and Pipeline

| Week | Task | Status |
|---|---|---|
| 07.10 - 13.10 | Define research questions<br>Review relevant literature | Done |
| 14.10 - 20.10 | Perform initial data checking and cleaning<br>Address dataset-related questions | Done |
| 21.10 - 27.10 | Autumn vacation | Done |
| 28.10 - 03.11 | Align Catastici and Sommarioni datasets<br>Continue data cleaning | Done |
| 04.11 - 10.11 | Develop description templates and prompts<br>Prepare for the midterm presentation | Done |
| 11.11 - 17.11 | Midterm presentation (14.11)<br>Refine the description template and prompts | Done |
| 18.11 - 24.11 | Translate Italian data into English | Done |
| 25.11 - 01.12 | Design an evaluation plan<br>Evaluate the prompts | Done |
| 02.12 - 08.12 | Generate final results<br>Evaluate the prompts and translation<br>Begin writing the wikipage | Done |
| 09.12 - 15.12 | Write the wikipage<br>Organize GitHub code<br>Prepare for the final presentation | Done |
| 16.12 - 22.12 | Deliver GitHub repository and wikipage (18.12)<br>Final presentation (19.12) | Done |

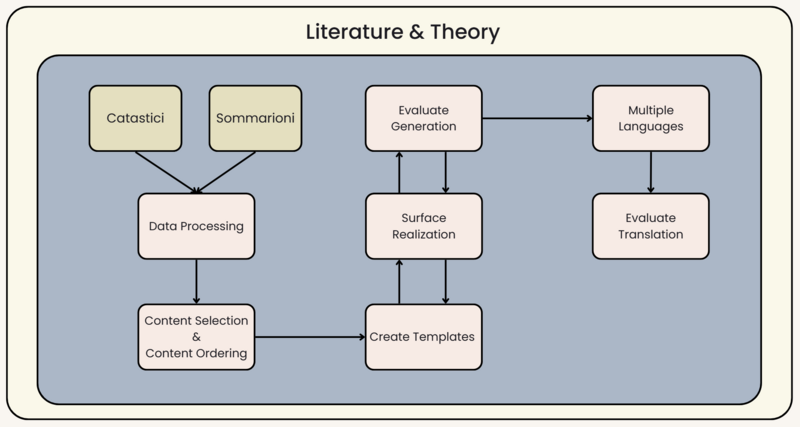

Methodology

The Catastici and Sommarioni datasets were derived from historical records through OCR conversion, which introduced significant noise. To address this, we first cleaned the datasets to remove noisy data, extracted relevant information, and standardized the data into English (excluding names of people and places). Next, we selected and ordered the key content required for the descriptions and designed prompt templates to guide GPT in generating them.

The generated descriptions were evaluated manually to ensure their accuracy, conciseness, and plausibility. Additionally, we translated the descriptions into other languages and evaluated their adaptability in multilingual contexts.

Data Processing

Catastici

1. owner first name

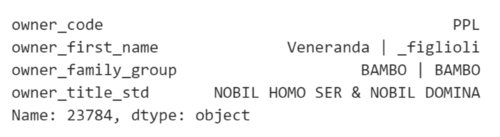

The owner_first_name field in the Catastici dataset contains various forms of representation. Most entries record the owner's first name directly, while unknown names are marked with a placeholder, "-". However, in addition to these, we identified 3,599 entries where the "first name" includes the placeholder "_". These placeholders do not represent the owner's real name but symbolize a certain familial identity associated with the owner. We collected all values representing family relationships and identities, identifying a total of 42 unique entries. Below are some examples.

| Original Value | English Translation |
|---|---|
| _cugini | cousins |
| _nepoti | grandchildren |
| _herede | heir |
| _nepote | grandchild |
| _fratelli | brothers |
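The following sketch shows how the three-way distinction between ordinary names, unknown names (`"-"`) and familial identities (values starting with `"_"`) could be drawn. The mapping holds only the five examples from the table. The function name is hypothetical, and treating an unmapped `_` value as an untranslated identity is an assumption.

```python
# Five of the 42 placeholder values identified in the dataset (from the table above).
FAMILY_IDENTITIES = {
    "_cugini": "cousins",
    "_nepoti": "grandchildren",
    "_herede": "heir",
    "_nepote": "grandchild",
    "_fratelli": "brothers",
}


def parse_first_name(raw):
    """Classify an owner_first_name value.

    Returns ("unknown", None), ("identity", english_label) or ("name", value).
    """
    value = (raw or "").strip()
    if value in ("", "-"):
        return ("unknown", None)
    if value.startswith("_"):
        # Unmapped placeholders keep their Italian form (minus "_") so they
        # can be translated later; this fallback is an assumption.
        return ("identity", FAMILY_IDENTITIES.get(value, value[1:]))
    return ("name", value)


for raw in ["Angela", "-", "_fratelli", "_moglie"]:
    print(raw, "->", parse_first_name(raw))
```

Under hypothesis C, an "identity" result is treated as a separate owner entry. It is not attached to any named owner.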

We focused on investigating this subset and proposed three potential hypotheses:

A. The familial identity serves as a supplementary label for an owner with a recorded name, meaning the familial identity and the actual name refer to the same person.

B. The familial identity is directly related to another owner, indicating a specific interpersonal relationship.

C. The familial identity is unrelated to any other owner and represents a separate individual.

Upon further investigation, the first two hypotheses were disproven. The supporting examples are as follows. In example A, the term figlioli translates to "children." It is implausible that Veneranda and figlioli refer to the same individual: the owner_first_name field lists figlioli (children), while the owner_title_std field includes titles such as nobil homo ser (noble gentleman) and nobil domina (noble lady). These titles clearly indicate that the owner entry represents multiple individuals of different genders, so hypothesis A can be dismissed. If hypothesis B were valid, the dataset would contain numerous contradictory relationships. For instance, Angela, Elena, and Cattarina in example B are traditional Italian female names, while _moglie means "wife." Since same-sex marriage was not legally recognized in 1740, the second owner could not have been the wife of Angela, Elena, or Cattarina, which disproves hypothesis B as well. We are therefore left with hypothesis C as the most plausible explanation: there is no direct relationship between the names and the familial roles appearing in the owner_first_name field.

2. rent

The rent field contained inconsistent currency units as well as non-rental information. To resolve this, we standardized all entries to ducats (gold standard) using the conversion rules below. After this unification, 15 non-monetary noise entries were identified, and these were manually reviewed and corrected.

| Currency Conversion Rules |
|---|
| 1 ducato = 1488 denari |
| 1 lira = 240 denari |
| 1 grosso = 62 denari |
| 1 soldo = 12 denari |

| Original Values | English Translation |
|---|---|
| porzione di casa | Portion of a house |
| casa in soler | House in Soler |
| libertà di traghetto | Ferry liberty |
| 7 lire, 15 soldi, ogni tre mesi | 1860 denari for every three months |
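As a worked example of the conversion rules, the sketch below reads `<number> <unit>` pairs from a rent string, sums them in denari, and then expresses the total in ducats. The regular expression and the singular/plural unit aliases are assumptions made for illustration. Real entries with fractions, abbreviations, or other spellings would need extra handling, and non-monetary strings simply yield 0 here.

```python
import re
from fractions import Fraction

# Conversion rules from the table above, expressed in denari.
DENARI_PER_UNIT = {"ducato": 1488, "lira": 240, "grosso": 62, "soldo": 12, "denaro": 1}

# Hypothetical mapping from surface forms (singular/plural) to the base unit.
UNIT_ALIASES = {
    "ducato": "ducato", "ducati": "ducato",
    "lira": "lira", "lire": "lira",
    "grosso": "grosso", "grossi": "grosso",
    "soldo": "soldo", "soldi": "soldo",
    "denaro": "denaro", "denari": "denaro",
}

# A number followed by a word, e.g. "7 lire" or "15 soldi".
AMOUNT = re.compile(r"(\d+)\s+([a-z]+)")


def to_denari(text):
    """Sum every '<number> <unit>' pair found in a rent string, in denari."""
    total = 0
    for number, unit in AMOUNT.findall(text.lower()):
        base = UNIT_ALIASES.get(unit)
        if base is not None:  # words that are not currency units are ignored
            total += int(number) * DENARI_PER_UNIT[base]
    return total


def to_ducats(denari):
    """Express a denari amount as an exact number of ducats."""
    return Fraction(denari, DENARI_PER_UNIT["ducato"])


rent = "7 lire, 15 soldi, ogni tre mesi"
print(to_denari(rent))             # 1860
print(to_ducats(to_denari(rent)))  # 5/4
```

For the last row of the table, 7 × 240 + 15 × 12 = 1860 denari, which equals 1860 / 1488 = 1.25 ducats. Using `Fraction` keeps the ducat value exact and avoids floating-point rounding.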

3. quantity income & quality income

The fields quantity income and quality income capture non-monetary forms of rent payment or supplementary information about the lease. When non-monetary items are recorded as payment, quantity income specifies the amount, while quality income describes the items provided. Additionally, other rental-related information may be logged in either of these fields. Therefore, an essential step is to combine these two fields into a single representation to consolidate all non-monetary rental details.

| quantity_income | quality_income | combination method | example |
|---|---|---|---|
| NaN | NaN | / | quantity_income: NaN<br>quality_income: NaN |
| NaN | one or more items | quality_income | quantity_income: NaN<br>quality_income: per carità |
| one or more items | NaN | quantity_income | quantity_income: 12 lire in contanti<br>quality_income: NaN |
| 1 item | 1 item | quantity_income + quality_income | quantity_income: 2<br>quality_income: legne di manzo |
| more than 1 item | more than 1 item (same number as in quantity_income) | split both fields and pair the items one by one | quantity_income: 2 pera, 8 lire<br>quality_income: caponi, regalia |
| 1 item | 2 items | split quality_income and prefix each item with the single quantity_income | quantity_income: 8 lire de piccoli<br>quality_income: sapone, zuccaro |
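The combination rules in the table can be expressed as a single function. This sketch assumes that multiple items within a field are separated by commas. The function names are hypothetical, and combinations the table does not cover raise an error instead of being guessed.

```python
import math


def _items(value):
    """Split a comma-separated field into trimmed items; None/NaN -> []."""
    if value is None or (isinstance(value, float) and math.isnan(value)):
        return []
    return [part.strip() for part in str(value).split(",") if part.strip()]


def combine_income(quantity, quality):
    """Merge quantity_income and quality_income following the table's rules."""
    qty, qual = _items(quantity), _items(quality)
    if not qty and not qual:
        return []
    if not qty:
        return qual
    if not qual:
        return qty
    if len(qty) == len(qual):
        # One-to-one pairing (covers both the 1 + 1 and the n + n rows).
        return [f"{q} {item}" for q, item in zip(qty, qual)]
    if len(qty) == 1:
        # A single quantity applies to every quality item.
        return [f"{qty[0]} {item}" for item in qual]
    raise ValueError(f"unhandled combination: {quantity!r} / {quality!r}")


print(combine_income("2 pera, 8 lire", "caponi, regalia"))  # ['2 pera caponi', '8 lire regalia']
print(combine_income("8 lire de piccoli", "sapone, zuccaro"))  # ['8 lire de piccoli sapone', '8 lire de piccoli zuccaro']
```

The function returns a list, so a single merged entry per lease can be joined or translated as one unit afterwards.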

Sommarioni

1. Organize data by parcel

The Sommarioni dataset records attribute information for each parcel, including its location, area, and ownership, as well as details about the parcel's owner and the owner's family. Some parcels, however, contain several subparcels of different parcel types, so we group the subparcel information under its parent parcel to generate a single description.
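Here is a minimal sketch of the grouping step. It assumes flat rows that share a parcel identifier (here a hypothetical `parcel_id` field) and uses only the standard library. The rows and field names are illustrative and do not reproduce the dataset's schema.

```python
# Hypothetical flat rows, one per subparcel record in the Sommarioni.
rows = [
    {"parcel_id": "P1", "parcel_type": "house", "area": 120.0},
    {"parcel_id": "P1", "parcel_type": "shop", "area": 35.5},
    {"parcel_id": "P2", "parcel_type": "courtyard", "area": 60.0},
]


def group_subparcels(records, key="parcel_id"):
    """Group subparcel records under their parent parcel, preserving order."""
    grouped = {}
    for record in records:
        subparcel = {k: v for k, v in record.items() if k != key}
        grouped.setdefault(record[key], []).append(subparcel)
    return grouped


def parcel_types(subparcels):
    """Distinct parcel types of one parcel, in first-seen order."""
    return list(dict.fromkeys(sp["parcel_type"] for sp in subparcels))


for parcel_id, subparcels in group_subparcels(rows).items():
    print(parcel_id, parcel_types(subparcels), len(subparcels))
```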

2. Clean owner name

The column 'owner_standardised' records the owners' names, but it also contains additional information about the owner, such as their identity. To avoid confusion, we separated this information. For example, "CROTTA Lucrezia (Vedova CALVO)" indicates that CROTTA Lucrezia is the widow of CALVO ("Vedova" means widow in Italian), so we split the entry into the owner's name and the extra information. To keep the names consistent, only the extra information is translated in the next step.

| owner_standardised |
|---|
| CROTTA Lucrezia (Vedova CALVO) |

| owner name | extra info |
|---|---|
| CROTTA Lucrezia | Vedova CALVO |
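The split shown in the table can be done with a regular expression that captures a trailing note in parentheses. This sketch assumes that the extra information always appears as a single, non-nested pair of parentheses at the end of the value. Any other shape is returned unchanged as the name, so it can be reviewed by hand. `split_owner` is a hypothetical name.

```python
import re

# "NAME (EXTRA)": a name without parentheses, optionally followed by one
# parenthesised note at the end of the string.
OWNER_PATTERN = re.compile(r"^\s*(?P<name>[^()]*?)\s*(?:\((?P<extra>[^()]*)\))?\s*$")


def split_owner(owner_standardised):
    """Return (owner name, extra info or None)."""
    match = OWNER_PATTERN.match(owner_standardised)
    if match is None:  # nested, repeated, or unbalanced parentheses
        return owner_standardised.strip(), None
    return match.group("name"), match.group("extra")


print(split_owner("CROTTA Lucrezia (Vedova CALVO)"))
```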

Translation

Since all the values are in Italian, generating English descriptions directly from them is difficult. We therefore used the GPT-4o API to translate the following properties into English.

| Translated Properties in the Sommarioni |
|---|
| extra information (separated above) |
| place |
| ownership_types |
| qualities |
| own_title |
| own_other_notes |

| Translated Properties in the Catastici |
|---|
| function |
| owner_entity_group_std |
| owner_mestiere_std |
| owner_title_std |
| owner identities in owner_first_name (with placeholder "_") |
| quality_income, quantity_income (together) |

Geographical Connection

To analyze the changes in ancient Venice between 1740 and 1808, we link the two datasets based on their geographical coordinates. The Catastici dataset comprises point data, while the Sommarioni dataset consists of polygon data. The connection between the two is established by identifying which Catastici points fall within specific Sommarioni parcels. Since not all Sommarioni parcels have corresponding Catastici data, and not all Catastici data matches a Sommarioni parcel, the summary description generation focuses solely on the overlapping portions—specifically, cases where Catastici data is contained within Sommarioni parcels.
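The containment test behind this link can be illustrated with the classic even-odd (ray-casting) point-in-polygon algorithm. This pure-Python version assumes that both datasets use the same projected coordinate system and that parcels are simple polygons without holes. In practice, a GIS tool or a library such as Shapely/GeoPandas (for example, a spatial join) would perform the same operation with spatial indexing. The identifiers and coordinates below are hypothetical.

```python
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(pt: Point, polygon: Sequence[Point]) -> bool:
    """Even-odd rule: count crossings of a ray cast from pt towards +x.

    Points lying exactly on a boundary may be classified either way.
    """
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the ring
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def match_points_to_parcels(
    points: Dict[str, Point], parcels: Dict[str, Sequence[Point]]
) -> Dict[str, str]:
    """Map each point id to the first parcel containing it; unmatched points are omitted."""
    matches = {}
    for point_id, pt in points.items():
        for parcel_id, polygon in parcels.items():
            if point_in_polygon(pt, polygon):
                matches[point_id] = parcel_id
                break
    return matches


# Hypothetical coordinates: two adjacent square parcels and three points.
parcels = {
    "S1": [(0, 0), (10, 0), (10, 10), (0, 10)],
    "S2": [(10, 0), (20, 0), (20, 10), (10, 10)],
}
points = {"c1": (5, 5), "c2": (15, 2), "c3": (25, 5)}
print(match_points_to_parcels(points, parcels))  # {'c1': 'S1', 'c2': 'S2'}
```

Point `c3` falls outside both parcels and is left out of the result. This mirrors the choice to generate summaries only for the overlapping portion of the two datasets.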

Content Selection and Ordering

Catastici

Taking data quality into account, we selected specific properties from the Catastici dataset and categorized them into 6 groups.

| Location | Function | Owner & Ownership | Owner Title & Job | Tenant | Payment |
|---|---|---|---|---|---|
| place | function | owner_first_name | owner_title_std | tenant_name | rent |
| sestiere | | owner_family_name | owner_mestiere_std | | quantity_income |
| | | owner_entity_group | | | quality_income |
| | | owner_identity | | | |

Sommarioni

Taking data quality into account, we selected specific properties from the Sommarioni dataset and categorized them into 5 groups.

| Location | Features | Owner & Ownership | Owners' Family | Othernotes |
|---|---|---|---|---|
| district_acronym | parcel type | owner_standardised | own_father | own_other_notes |
| place | qualities | ownership_types | own_father_is_q | parcel_ids |
| | area | own_title | own_mother | |
| | | | own_siblings | |
| | | | own_husband | |
| | | | own_husband_is_q | |

Template Design

Our project uses GPT-4o-mini to generate descriptions. To guide the language model effectively and produce well-structured content, we generate three types of descriptions. The first type introduces information from the Catastici (1740), while the second details data from the Sommarioni (1808). These two descriptions focus on individual parcels of land and aim to be both accurate and concise: they are designed to include all relevant information about each parcel in the dataset while avoiding any fictional or irrelevant data.

Building on these two types of descriptions, we generate a third type—a summary description—which seeks to enrich the information by uncovering internal connections and deeper implications within the data. For example, the same parcel of land might belong to the same family during the Catastici and Sommarioni periods. By linking the descriptions from these two eras, we can uncover hidden narratives of familial inheritance. Thus, for the summary description, our primary goal is to ensure plausibility by logically connecting the changes and similarities between the two descriptions, avoiding unreasonable or speculative elements, and addressing implications or transitions inferred from the original data. This approach not only enables us to compare societal characteristics across different eras, such as changes in rent and social hierarchies, but also allows us to explore administrative boundaries and property inheritance over time.

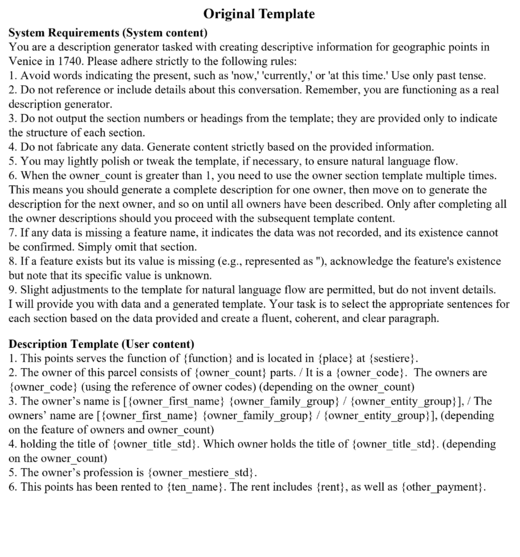

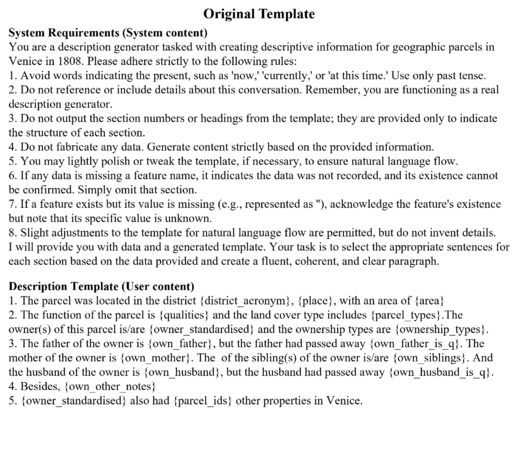

To ensure the language model interprets our instructions effectively, we designed specific prompt templates for each paragraph. The finalized templates are shown below, and the next section will discuss the iterative process of refining these prompt templates.

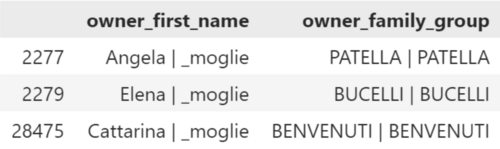

Prompt Evaluation

The evaluation process consists of five main steps. First, the reviewers carefully read the input content to thoroughly understand the context, key details, and specific requirements. Second, the reviewers score each description based on predefined criteria. Third, we assess the inter-annotator agreement among the reviewers to ensure consistency. Fourth, we analyze the evaluation results to identify patterns and insights. Finally, we refine and improve our prompt template based on these findings. Given that the requirements and objectives of each paragraph vary, we have developed distinct evaluation metrics tailored to each paragraph. The evaluation criteria of each paragraph are explained below.

Evaluation Criteria for Catastici and Sommarioni Paragraphs

Since the first two paragraphs primarily focus on the information in the dataset, we prioritize accuracy and conciseness as the main evaluation indicators. The detailed criteria are explained below.

Evaluate Description for Accuracy

For each description, assess whether:

- All facts are correctly represented.

- The description aligns precisely with the metadata, without misrepresentation or omission of key details.

- There are no fabricated or incorrect elements.

Assign a score of:

- 0: If the description contains inaccuracies, misrepresentation, omissions, or fabricated elements.

- 1: If the description is fully accurate, complete, and faithfully represents the metadata.

Evaluate Description for Conciseness

For each description, assess whether:

- The description is free from redundant or repetitive content.

- The information is presented succinctly, without unnecessary elaboration or verbosity.

- The description focuses on delivering the key details without including irrelevant information.

Assign a score of:

- 0: If the description contains redundancy, repetition, or excessive elaboration.

- 1: If the description is concise, focused, and avoids unnecessary content.

To evaluate the generated descriptions more thoroughly, we divide each description into aspects that correspond to the fields of the original data and score the accuracy and conciseness of each aspect separately. The evaluation metrics are shown below.

Catastici paragraph:

| | Location | Features | Owners' Name | Owners' Title & Job | Tenant | Payment |
|---|---|---|---|---|---|---|
| Accurate | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 |
| Concise | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 |

Sommarioni paragraph:

| | Location | Features | Owner & Ownership | Owners' Family | Other Notes |
|---|---|---|---|---|---|
| Accurate | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 |
| Concise | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 | 0 or 1 |
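The per-aspect values reported in the result tables later on this page are fractions between 0 and 1. A minimal sketch of one way such values can be obtained, assuming each annotator gives a 0 or 1 per aspect per description and the reported value is the mean over all descriptions and annotators. The data layout and the function name `aspect_means` are hypothetical.

```python
# Hypothetical layout: scores[annotator] is a list with one dict per description,
# mapping each aspect name to a binary score (0 or 1).
Scores = dict[str, list[dict[str, int]]]

def aspect_means(scores: Scores, aspects: list[str]) -> dict[str, float]:
    """Average each aspect's binary scores over all annotators and descriptions."""
    result: dict[str, float] = {}
    for aspect in aspects:
        values = [desc[aspect] for per_annotator in scores.values() for desc in per_annotator]
        if not values:
            raise ValueError("no scores to aggregate")
        if any(v not in (0, 1) for v in values):
            raise ValueError(f"non-binary score found for {aspect!r}")
        result[aspect] = sum(values) / len(values)
    return result

# Two annotators, two descriptions each (illustrative values).
scores = {
    "annotator_a": [{"Location": 1, "Tenant": 1}, {"Location": 0, "Tenant": 1}],
    "annotator_b": [{"Location": 1, "Tenant": 1}, {"Location": 1, "Tenant": 1}],
}
means = aspect_means(scores, ["Location", "Tenant"])
print(means)
```

Several reported values, such as 0.675 (= 27/40) or 0.96875 (= 31/32), are consistent with this kind of averaging over two annotators, although the page does not state the exact procedure.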

Evaluation Criteria for Summary Paragraph

Since the summary is a comprehensive description that draws on both the Catastici and Sommarioni descriptions, it is difficult to evaluate each detailed property individually. We therefore grouped all properties into three major categories. The first covers the parcel itself, such as its geographic location, administrative region, function, and type. The second concerns the owner, including the owner's name, identity, and relationships. The third covers rental information, including tenants and rental payments.

In evaluating the summaries, we focus more on whether the new descriptions logically and reasonably explain the changes, differences, and consistencies between the two time periods, while aligning with common sense and contextual logic. Therefore, we chose plausibility as the primary metric for this evaluation.

For each generated description, evaluate whether it:

- Logically connects the changes and similarities between the two descriptions.

- Avoids introducing unreasonable, exaggerated, or speculative elements.

- Maintains consistency with real-world knowledge and contextual logic.

- Adequately addresses any implications or transitions inferred from the original descriptions.

Assign a score of:

- 0: If the description contains illogical conclusions, exaggerated assumptions, or inconsistencies with the provided information or context.

- 1: If the description is logical, reasonable, and aligns well with the context and common sense.

The evaluation metrics are shown below.

| | ParcelInfo | OwnerInfo | RentalInfo |
|---|---|---|---|
| Plausibility | 0 or 1 | 0 or 1 | 0 or 1 |

Evaluation Criteria for Translation

Our project aims to provide descriptions in multiple languages, so after generating the English descriptions we translated them into several languages. Because Chinese is the only one of these languages in which we are proficient, we used the Chinese translations as a representative case for evaluation. The evaluation criteria and metrics are outlined below.

For each translated description, evaluate the following:

- Accuracy: Ensure the translation faithfully conveys the meaning of the original description without omissions, distortions, or errors.

- Readability: Assess how natural and fluent the translation is, ensuring it adheres to Chinese linguistic norms and avoids awkward or unnatural phrasing.

- Clarity: Confirm that the translated description is easy to understand and does not cause confusion or ambiguity.

Assign a score of:

- 1 (Very Poor): The translation contains significant errors or is extremely difficult to understand. It fails to convey the original meaning or has substantial inaccuracies.

- 2 (Poor): The translation has noticeable inaccuracies or awkward phrasing that make it challenging to follow, though some meaning is conveyed.

- 3 (Average): The translation is accurate but includes some phrases that are unnatural or difficult to comprehend in Chinese. It generally conveys the intended meaning but lacks fluency.

- 4 (Good): The translation is mostly accurate, clear, and reasonably fluent, with only minor issues in adherence to Chinese language norms.

- 5 (Excellent): The translation is both accurate and highly fluent, seamlessly adhering to Chinese linguistic norms and providing an easy-to-understand and natural reading experience.
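Later on this page, the rating of each translation is the average of the two annotators' scores. A minimal sketch of that averaging, with a check that every score lies on the 1 to 5 scale above. The function name `average_translation_scores` and the sample scores are hypothetical.

```python
# Labels of the 1-5 scale defined above.
RUBRIC = {1: "Very Poor", 2: "Poor", 3: "Average", 4: "Good", 5: "Excellent"}

def average_translation_scores(a: list[int], b: list[int]) -> list[float]:
    """Average two annotators' 1-5 scores item by item."""
    if len(a) != len(b):
        raise ValueError("both annotators must score the same translations")
    for score in a + b:
        if score not in RUBRIC:
            raise ValueError(f"score {score} is outside the 1-5 rubric")
    return [(x + y) / 2 for x, y in zip(a, b)]

# Illustrative scores for three translations.
averages = average_translation_scores([2, 4, 5], [3, 4, 5])
print(averages, min(averages), max(averages))
```

Averaging can produce half points (e.g., 2.5) that fall between two rubric labels, so the averaged ratings are best read as positions on the scale rather than as labels.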

Testing Inter-Annotator Agreement

We calculated the inter-annotator agreement between the two annotators to ensure the quality and consistency of the annotations. One of the most commonly used measures of agreement is Cohen's kappa coefficient. However, under extreme class imbalance (e.g., when almost all items receive the same label, such as '1', which happens when the generated descriptions are nearly perfect), Cohen's kappa becomes unreliable or even misleading: the agreement expected by chance approaches 1, so kappa can drop to zero or below, or become undefined, even when the annotators agree on almost every item.

In contrast, percentage agreement is a simpler measure that directly calculates the proportion of matching labels between two raters without accounting for chance agreement. This makes it more suitable for extreme cases, as it is not affected by class imbalance or skewed label distributions. Therefore, we ultimately chose percentage agreement to evaluate the validity of the annotation results.
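The following sketch, in plain Python with hypothetical binary labels, contrasts the two measures. It uses the standard definition kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each annotator's label frequencies. The function names are our own; they are not taken from the project code.

```python
from collections import Counter
from typing import Optional

def percentage_agreement(a: list[int], b: list[int]) -> float:
    """Fraction of items on which the two annotators gave the same label."""
    if not a or len(a) != len(b):
        raise ValueError("label lists must be non-empty and equally long")
    return sum(x == y for x, y in zip(a, b)) / len(a)

def cohens_kappa(a: list[int], b: list[int]) -> Optional[float]:
    """Cohen's kappa; returns None when chance agreement p_e is 1 (kappa undefined)."""
    n = len(a)
    p_o = percentage_agreement(a, b)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability that both annotators pick the same label independently.
    p_e = sum((count_a[lab] / n) * (count_b[lab] / n) for lab in set(a) | set(b))
    if p_e == 1:
        return None
    return (p_o - p_e) / (1 - p_e)

all_ones = [1] * 20
one_miss = [1] * 19 + [0]
print(percentage_agreement(all_ones, all_ones), cohens_kappa(all_ones, all_ones))
print(percentage_agreement(all_ones, one_miss), cohens_kappa(all_ones, one_miss))
```

When both annotators assign '1' everywhere, kappa is undefined (returned as None here), and when they disagree on a single item out of 20, percentage agreement is 0.95 while kappa is 0. This is the failure mode described above.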

Evaluation and Final Result

Evaluation of Catastici Description

While generating the Catastici and Sommarioni descriptions, we conducted two rounds of evaluation. The first round revealed several shortcomings in the prompt design and data formatting, which allowed us to make targeted corrections that led to significant improvements in the second round.

Misleading Features in Missing Data: After the first round of evaluation, we observed that large language models (LLMs) often fabricate information for missing features, despite explicit instructions in the prompt prohibiting such behavior. To address this, we chose to remove missing features entirely from the data provided to the LLM. Additionally, we further clarified in the prompt that generating fabricated information is strictly prohibited, reducing the likelihood of hallucinated content.
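A minimal sketch of removing missing features before a record is passed to the model, assuming each record is a flat JSON-like object in which missing values appear as null, empty strings, or NaN. The helper name `drop_missing` and the sample fields are hypothetical.

```python
import json
import math

def drop_missing(record: dict) -> dict:
    """Return a copy of the record without null, empty-string, or NaN values."""
    def is_missing(value) -> bool:
        if value is None:
            return True
        if isinstance(value, str) and not value.strip():
            return True
        if isinstance(value, float) and math.isnan(value):
            return True
        return False
    return {key: value for key, value in record.items() if not is_missing(value)}

# Illustrative record with three kinds of missing values.
raw = {"owner_name": "Rossi", "owner_title": None, "tenant": "", "rent": float("nan"), "function": "casa"}
print(json.dumps(drop_missing(raw)))
```

Removing the keys entirely, rather than passing them with placeholder values, leaves the model nothing to fill in, which complements the explicit prohibition in the prompt.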

Information Misunderstanding: In the Catastici dataset, the owner's identity can take various forms, such as names, institutions, or familial relationships, which can overwhelm and confuse GPT when generating descriptions. To mitigate this, we enhanced the template by providing a clearer explanation of the data structure and offering more detailed guidance in the second round of evaluation.

Tense and Grammar Requirements: While GPT generally produces high-quality text, occasional errors in tense or noun plurality were observed. To improve accuracy, we updated the prompt to include explicit instructions for checking and ensuring correct tense and grammatical usage.

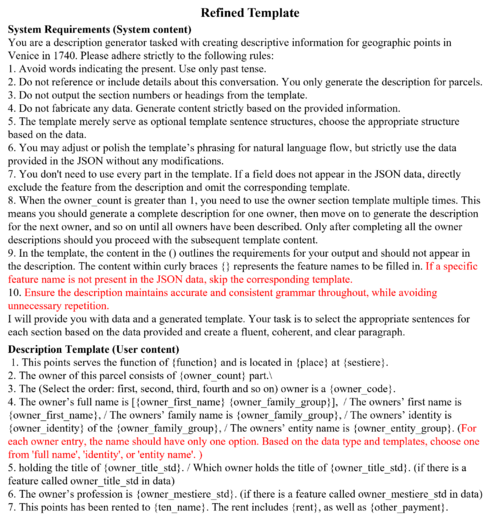

The inter-annotator agreement and the evaluation scores are shown below. Inter-annotator agreement in both rounds was at least 0.95 for most properties, indicating a high level of reliability in the evaluation results.

Prompt 1 was used in the first evaluation round. In that round, the accuracy and conciseness scores for the owner's title and job, the accuracy of location, and the conciseness of payment were notably low. After we refined the data and the template (Prompt 2), the scores for these properties improved significantly, although the conciseness of the owner's name dropped from 1.0 to 0.6.

Inter-annotator agreement (percentage agreement):

| | Location | Function | Owners' Name | Owners' Title & Job | Tenant | Payment |
|---|---|---|---|---|---|---|
| Prompt1-Accuracy | 0.95 | 1.0 | 0.95 | 0.9 | 1.0 | 0.95 |
| Prompt2-Accuracy | 1.0 | 1.0 | 0.95 | 1.0 | 1.0 | 0.95 |
| Prompt1-Conciseness | 1.0 | 1.0 | 1.0 | 0.9 | 1.0 | 0.95 |
| Prompt2-Conciseness | 1.0 | 1.0 | 0.7 | 1.0 | 1.0 | 0.95 |

Evaluation scores:

| | Location | Function | Owners' Name | Owners' Title & Job | Tenant | Payment |
|---|---|---|---|---|---|---|
| Prompt1-Accuracy | 0.675 | 1.0 | 0.875 | 0.55 | 1.0 | 0.975 |
| Prompt2-Accuracy | 1.0 | 1.0 | 0.875 | 1.0 | 1.0 | 0.975 |
| Prompt1-Conciseness | 1.0 | 1.0 | 1.0 | 0.2 | 1.0 | 0.275 |
| Prompt2-Conciseness | 1.0 | 1.0 | 0.6 | 1.0 | 1.0 | 0.975 |

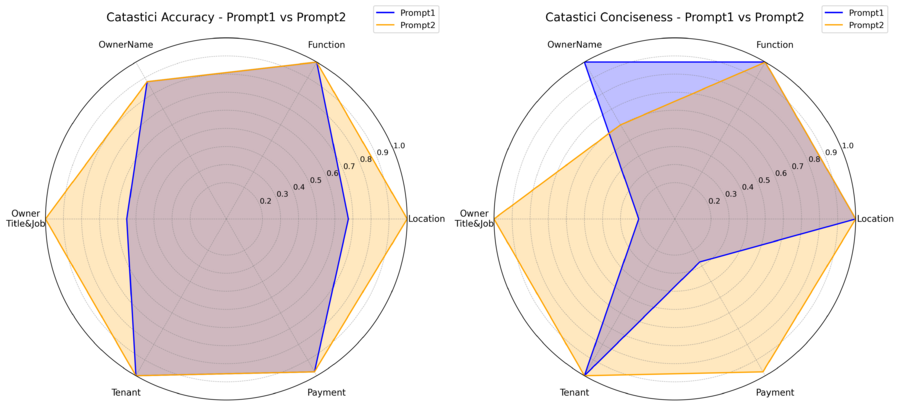

The figure above compares a Catastici description from Round 1 and Round 2. The bolded brown sections highlight the parts that reduced the quality of the description. After we restructured the JSON and refined the template, the description became more concise, much of the unnecessary content disappeared, and the misunderstandings were resolved.

Evaluation of Sommarioni Description

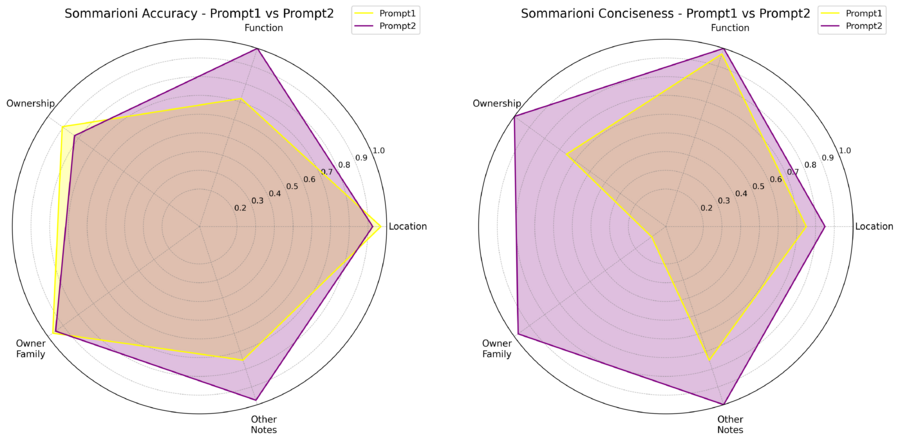

After our first round of description generation and evaluation, we discovered several problems with the Sommarioni paragraph.

Meaningless Information: When certain properties in a record were missing, the description often explicitly mentioned their absence. Because many records contain missing information, this produced excessive and unnecessary details, making the description less concise and harder to follow. We therefore stated explicitly in the second prompt that the generated description should not mention missing data.

Information Misunderstanding: We used parcel_ids to indicate the number of other properties owned by the owner, but the language model occasionally misinterpreted it as the identifier of a specific parcel. To address this, we renamed abstract property names to more meaningful and descriptive ones; for example, parcel_ids was renamed parcel_num.
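Such renaming can be applied with a simple key mapping before the record is serialized into the prompt. In the sketch below, only the `parcel_ids` to `parcel_num` rename comes from the text; the mapping structure, the function name `rename_fields`, and the sample record are illustrative assumptions.

```python
# Hypothetical mapping from abstract dataset keys to self-explanatory prompt keys.
FIELD_RENAMES = {"parcel_ids": "parcel_num"}

def rename_fields(record: dict, renames: dict[str, str] = FIELD_RENAMES) -> dict:
    """Return a copy of the record with keys renamed; keys not in the mapping are kept."""
    renamed = {}
    for key, value in record.items():
        new_key = renames.get(key, key)
        if new_key in renamed:
            # Guard against a rename silently overwriting an existing field.
            raise ValueError(f"renaming would overwrite field {new_key!r}")
        renamed[new_key] = value
    return renamed

print(rename_fields({"owner_name": "Rossi", "parcel_ids": 1}))
```

Values are passed through unchanged; only the key the model reads becomes more explicit about what it represents.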

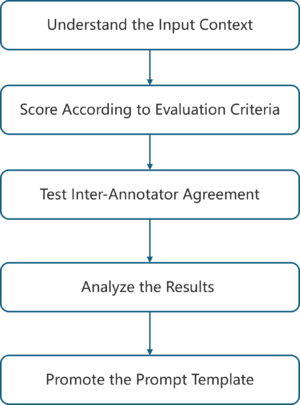

Inter-annotator agreement in both rounds was at least 0.9 for most properties (the lowest value was 0.8125), indicating a high level of reliability in the evaluation results. Evaluating Prompt 1 (Round 1) showed that its main weakness was conciseness, particularly for the owner family and ownership properties, so we focused on improving conciseness in Prompt 2. Prompt 2 raised conciseness for every property, although accuracy for location, ownership, and owner family decreased somewhat.

Inter-annotator agreement (percentage agreement):

| | Location | Function | Ownership | Owner Family | Other Notes |
|---|---|---|---|---|---|
| Prompt1-Accuracy | 0.9375 | 0.8125 | 0.8125 | 0.9375 | 0.875 |
| Prompt2-Accuracy | 0.95 | 1.0 | 0.85 | 0.9 | 0.95 |
| Prompt1-Conciseness | 0.875 | 0.9375 | 0.8125 | 0.9375 | 0.875 |
| Prompt2-Conciseness | 1.0 | 1.0 | 1.0 | 0.95 | 1.0 |

Evaluation scores:

| | Location | Function | Ownership | Owner Family | Other Notes |
|---|---|---|---|---|---|
| Prompt1-Accuracy | 0.96875 | 0.71875 | 0.90625 | 0.96875 | 0.75 |
| Prompt2-Accuracy | 0.925 | 1.0 | 0.825 | 0.95 | 0.975 |
| Prompt1-Conciseness | 0.75 | 0.96875 | 0.65625 | 0.09375 | 0.75 |
| Prompt2-Conciseness | 0.85 | 1.0 | 1.0 | 0.975 | 1.0 |

The figure below compares a Sommarioni description from Round 1 and Round 2. After the template was refined, the description became more concise, omitting a large amount of unnecessary data and resolving the misunderstanding issue.

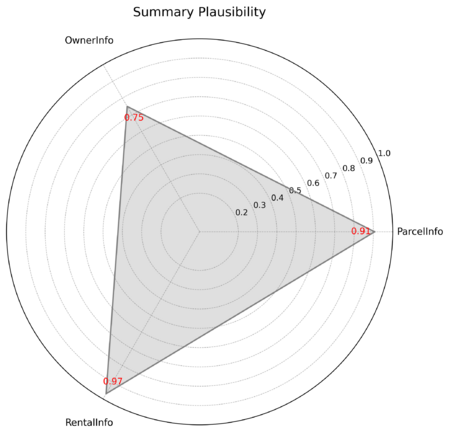

Evaluation of Summary

Inter-annotator agreement was at least 0.75 for all properties, indicating a reasonably high level of consistency in the annotation results. OwnerInfo received the lowest plausibility score (0.75), likely because the diverse types of owner information it combines are complex and difficult to interpret. In contrast, ParcelInfo and RentalInfo both scored above 0.9, indicating that parcel and rental information was summarized plausibly in almost all cases.

Inter-annotator agreement (percentage agreement):

| | ParcelInfo | OwnerInfo | RentalInfo |
|---|---|---|---|
| Plausibility | 0.8125 | 0.75 | 0.9375 |

Evaluation scores:

| | ParcelInfo | OwnerInfo | RentalInfo |
|---|---|---|---|
| Plausibility | 0.91 | 0.75 | 0.97 |

Evaluation of Translation

We used the average of the two annotators' scores as the rating for each translation. The scores ranged from 2 to 5, indicating that translation quality was uneven and in some cases poor. The main issues we identified are as follows:

Inaccurate translation of proper nouns: For example, S. Salvatore degli Incurabili was mistranslated due to a lack of contextual understanding. S. Salvatore refers to the "Holy Savior" while degli Incurabili literally means "of the Incurables". Historically, this phrase was associated with hospitals or charitable institutions in the Middle Ages or Renaissance that cared for patients with incurable diseases, such as leprosy or terminal illnesses. However, the large language model misinterpreted S. Salvatore as a personal name and rendered it phonetically in Chinese, while degli Incurabili was translated literally as "of the Incurables." These errors can lead to significant misunderstandings of the context.

Cross-linguistic expression discrepancies: Differences in language structures and grammar can create deviations in meaning. For instance, in the sentence The owner also had one other property in Venice, the word property was translated into Chinese as “物业.” However, “物业” primarily refers to property management organizations or personnel, which is inconsistent with the intended meaning referring to real estate.

Results

Here is an example of our descriptions. As shown in the picture below, the Catastici and Sommarioni descriptions accurately reflect the original data, and the summary paragraph effectively connects the two datasets. In this case, the owners of the parcel in 1740 and 1808 shared the same family name, and the summary paragraph highlights this connection and offers a reasonable hypothesis about it.

Additionally, to present our description more effectively, we used ArcGIS Online to create an interactive map, allowing users to view the description by clicking on the map. Below is an image of the online map.

Click here to view the interactive map
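One way to prepare descriptions for a web map is to attach them as attributes of the parcel geometries and export a GeoJSON file, a format that ArcGIS Online can import as a layer whose pop-ups display feature attributes. The sketch below builds such a FeatureCollection using only the standard library. The parcel ID, coordinates, property names, and the function `to_feature_collection` are hypothetical, and the project's actual export workflow may differ.

```python
import json

def to_feature_collection(parcels: list[dict]) -> dict:
    """Build a GeoJSON FeatureCollection with one polygon feature per parcel."""
    features = []
    for parcel in parcels:
        ring = parcel["ring"]
        if ring[0] != ring[-1]:
            ring = ring + [ring[0]]  # GeoJSON linear rings must be closed
        features.append({
            "type": "Feature",
            "geometry": {"type": "Polygon", "coordinates": [ring]},
            "properties": {"parcel_id": parcel["parcel_id"], "description": parcel["description"]},
        })
    return {"type": "FeatureCollection", "features": features}

parcels = [{
    "parcel_id": "P001",
    "description": "Example description text.",
    # Longitude/latitude pairs (WGS 84), as GeoJSON requires; values are illustrative.
    "ring": [[12.3350, 45.4340], [12.3353, 45.4340], [12.3353, 45.4342], [12.3350, 45.4342]],
}]
geojson = json.dumps(to_feature_collection(parcels), ensure_ascii=False)
print(geojson[:80])
```

Storing each paragraph (Catastici, Sommarioni, summary, translations) as a separate property would let a pop-up show them individually.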

Limitations and Future Works

Prompt Template Refinement: As discussed in the previous section, we conducted only two rounds of refinement of our prompt templates. The accuracy and conciseness of the Catastici and Sommarioni paragraphs, as well as the plausibility of the summary paragraph, can still be improved. In addition, the descriptions are currently quite similar to one another; designing different tones for them would make them more diverse and engaging.

Model Improvement: Due to budget limitations, we used only GPT-4o-mini to generate our descriptions and translations. This model has shortcomings, particularly in translation, so our next step is to try other large language models, such as GPT-4o and T5, to address these issues.

Frontend Display: The current frontend is not yet capable of fully showcasing all types of description information. Further testing is required to ensure usability and smooth interactions. Additionally, features like search functionality need to be implemented to enhance the user experience.

Reference

Wiseman, S., Shieber, S. M., & Rush, A. M. (2017). Challenges in data-to-document generation. arXiv preprint arXiv:1707.08052. https://arxiv.org/abs/1707.08052v1

Mueller, R. C., & Lane, F. C. (2020). Money and Banking in Medieval and Renaissance Venice: Volume I: Coins and Moneys of Account. Baltimore: Johns Hopkins University Press. https://dx.doi.org/10.1353/book.72157

Günther, P. (n.d.). The Casanova Tour. Retrieved from https://giacomo-casanova.de/catour16.htm

Venetian lira. (n.d.). In Wikipedia. Retrieved December 18, 2024, from https://en.wikipedia.org/wiki/Venetian_lira

GitHub Repositories

Credits

Course: Foundation of Digital Humanities (DH-405), EPFL

Professor: Frédéric Kaplan

Supervisors: Alexander Rusnak, Céderic Viaccoz, Tommy Bruzzese, Tristan Karch

Authors: Ruyin Feng, Zhichen Fang