Virtual Louvre



Each JSON file is named after its room, so loading a new room only requires fetching the file with the corresponding name. This is done in the class called room and is repeated each time the player passes through a door. Once the data is loaded, the walls are created first and stored in a dictionary keyed by their cardinality. The paintings of the room are then transferred to their wall using the cardinality stored in their info structure.

Wall class and energy algorithm

The wall class manages the placement of the paintings, which turned out to be more complex than expected: two algorithms were needed. The method first checks whether the paintings fit on the wall side by side. If they do, they are displayed in a line. If not, a second, physics-based algorithm places them:

Each painting is assigned an energy and a repulsion force, so that paintings move away from each other and from the sides of the wall. At each iteration every painting makes a random move, and the new position is kept only if the move lowers the global energy. The paintings thus converge to a local energy minimum, where they are as far as possible from each other and from the wall edges. The doors were added later and we did not have time to include them in the algorithm; this is left for a following version. The energy algorithm still needs to be optimised and can have trouble when the walls are too long.
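The two placement steps described above can be sketched in Python as follows. This is only an illustrative sketch, not the project's Unity code: the function names, the gap value, the inverse-distance energy and the treatment of paintings as points on a 2D wall are all assumptions made for the example.

```python
import math
import random


def fits_inline(widths, wall_length, gap=0.2):
    """Return True if the paintings fit side by side with a gap around each (assumed rule)."""
    return sum(widths) + gap * (len(widths) + 1) <= wall_length


def energy(points, wall_w, wall_h):
    """Global repulsion energy: high when paintings are near each other or near a wall side."""
    eps = 1e-6
    total = 0.0
    for i, (x, y) in enumerate(points):
        # Repulsion from the four sides of the wall.
        for d in (x, wall_w - x, y, wall_h - y):
            total += 1.0 / max(d, eps)
        # Repulsion from every other painting (each pair counted once).
        for x2, y2 in points[i + 1:]:
            total += 1.0 / max(math.hypot(x - x2, y - y2), eps)
    return total


def place(n, wall_w, wall_h, iterations=2000, step=0.2, seed=0):
    """Random local search: keep a random move only if it lowers the global energy."""
    rng = random.Random(seed)
    points = [(rng.uniform(0, wall_w), rng.uniform(0, wall_h)) for _ in range(n)]
    start = current = energy(points, wall_w, wall_h)
    for _ in range(iterations):
        i = rng.randrange(n)
        old = points[i]
        # Random move, clamped so the painting stays on the wall.
        nx = min(max(old[0] + rng.uniform(-step, step), 0.0), wall_w)
        ny = min(max(old[1] + rng.uniform(-step, step), 0.0), wall_h)
        points[i] = (nx, ny)
        candidate = energy(points, wall_w, wall_h)
        if candidate < current:
            current = candidate
        else:
            points[i] = old  # reject the move
    return points, start, current
```

Because a move is accepted only when it lowers the energy, the search stops in a local minimum, which matches the behaviour described above. Doors could be added to such a sketch as extra fixed repulsive points.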


Once the position of each painting has been defined, the paintings are instantiated and their texture is set from the data path stored in their info structure.

Rendering

Textures

A texture is what Unity uses to render the surface of a 3D element. The element we used is a 3D rectangle sized to the dimensions of the painting when they are available. This works well except for paintings with unusual shapes (ovoids, clovers, etc.). Unity uses two textures to render the material, a main one and a detail one. The detail texture is more computationally expensive, but it greatly improves the result. The main texture is the one that appears from far away, and the detail texture is triggered when the viewer is close to the element, which is perfect for our application.

Normal map

A normal map stores the orientation of the surface at each pixel, which the renderer uses to compute how incident light is reflected. Normal maps are therefore necessary to reproduce the interaction of light with a surface. They allowed us to fake the brush strokes and asperities normally present on a canvas. We used two normal maps to create the desired effect.

This really improved the experience, which feels more realistic than with plain pictures. This is where the most work on 3D museums could be done: a dedicated normal map for each painting would render the artworks more accurately.

Lighting

The further we advanced in the project, the more we realized how important lighting is. Finding out about normal maps was a major improvement to the quality of the project, but much more could be done by lighting the paintings correctly. Unity provides many tools to create proper lighting for a scene, and an entire project could be dedicated to finding the optimal lighting.


A light is attached to the player so that some reflections are visible on the paintings, which gives a livelier impression. The light itself is satisfactory, but a dedicated normal map for each painting could be created to render better effects. This could be done by gathering reflection data from the original paintings, or through a machine learning algorithm that takes a painting as input and outputs a corresponding normal map. We proceeded by trial and error to find the right illumination of the rooms; a more research-based approach would have yielded better results.

Interaction

Doors

Doors are represented by glowing blue rectangles and are placed according to the original map. They are not considered by the placement algorithm, so a large painting can end up on top of a door. Doors could be integrated in an extension of the project, but we did not give them a cardinality in the JSON file, which makes it difficult to assign them to a specific wall. The same difficulty made it harder to make them stick out of the wall, so we simply gave them a big enough square shape. Their collision box also conflicts with the raycast algorithm, which explains why the name of a painting is not displayed when a door is in the way.


Doors load a new room upon collision: they update a collision boolean and set the new room name to their stored string. This triggers the LoadNew function with the corresponding room name.

Raycasting

Raycasting is used to detect when the player is looking at a painting. It casts a line from the center of the current camera in the direction the camera is facing. If the game object it collides with is a painting, the text is displayed. Unity is very helpful here: it provides an event, OnColision, in which the data of the two colliding objects is given. This makes it easy to copy the text stored in the info structure of the painting that was hit to the text element present on the canvas. If the player presses q while the raycast hits a painting, the original picture used to make the texture of the painting is displayed.

Image Display

Displaying the original picture was trickier than expected. Variations in screen dimensions and differences in picture sizes made it impossible to hard-code values in the display settings, so a resize function had to be written that takes these parameters into account to fit the picture correctly to the user's screen. The map had the same problem and we were never able to make it readable. This could be improved in the future.
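A resize function of this kind usually reduces to one scale factor that preserves the aspect ratio. The following Python sketch illustrates the idea; the function name and the margin parameter are hypothetical, and the project's actual Unity implementation may differ.

```python
def fit_to_screen(img_w, img_h, screen_w, screen_h, margin=0.9):
    """Scale a picture so it fits inside the screen while keeping its aspect ratio.

    margin is an assumed fraction of the screen that the picture may occupy.
    """
    # The limiting dimension is the one with the smaller screen/picture ratio.
    scale = min(screen_w / img_w, screen_h / img_h) * margin
    return img_w * scale, img_h * scale
```

For a wide picture the width is the limiting dimension, for a tall one the height, so the same function handles both cases without hard-coded values.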

Text display and canvas

The canvas is a 2D element that holds the picture of a painting when activated, the text of the painting hit by the raycast, and the initial title animation. The resize problem is discussed in the previous paragraph. A special material had to be applied to the pictures to render them: it had to be from the unlit category, otherwise the pictures were completely dark.

Quality and possible improvement

The game could be improved in many ways. We standardized the Louvre to the point where every room has the same brick walls and the same wooden floor. We have neither the dimensions nor the shape of the doors, so their visualization is very limited. More information could be gathered on the paintings to give them a more realistic look. Some bugs are not handled, for instance arriving in a room for which a file is missing. The raycast algorithm could be improved so that small paintings are detected more easily and doors are ignored. More resize options would also help, as big rooms feel too big and small rooms too small. A smarter display algorithm could also be implemented. Overall, all the functionalities are present but could be polished and reworked. Video games offer unlimited room for feature additions and bug corrections.

User guide

The player moves within the walls and can look at the paintings to see the name of the author and the title of the painting. The player can switch between first-person and third-person view, see the paintings full screen, and toggle the collision box to avoid changing rooms.

- Player movement: w, a, s, d

- Move camera: mouse or arrow keys

- Zoom/change camera: z

- See source picture: q

- Show map: m

- Bar doors: space bar

- Display incomplete paintings: r


Latest revision as of 13:06, 24 January 2022

Abstract

The goal of this project is to allow anyone to experience a bit of the Louvre of 1923. Generated, browsable rooms at scaled proportions will display some of the artworks that were there in 1923. The user will be able to walk through the different rooms and discover information about the exhibited paintings.

Data processing is done with Python and makes extensive use of pandas, regex and web scraping. The virtualisation uses Unity3D.

Motivation

Wandering between the Greek statues and the centuries-old paintings of the Louvre, we might be tempted to forget that museums themselves have a history. Displays are not immutable. With digitisation, museums have a new opportunity to make their collections available. But a virtual collection is not a virtual museum. A museum, through its rooms and the links created between artworks by their arrangement, is a medium, an experience. Therefore, allowing users to walk virtually through the rooms of the Louvre and see which paintings were in each room is the best way to make sense of the information that the catalogue offers us.

Historical introduction

The source used in the project is the Catalogue général des peintures (tableaux et peintures décoratives). Released in 1923 and written by Louis Demonts, assistant curator for paintings and drawings, and Lucien Huteau, this catalogue inventories some of the Louvre paintings from the French, Italian, Spanish, English, Flemish, Dutch and German schools.

Although the Louvre has held artworks since the monarchy, in the form of personal royal collections, it only became a real museum after the French Revolution, with the opening of the Grande Galerie. Since then, it has never stopped collecting works through donations, purchases or conquests, and has evolved into the largest and most visited art museum in the world.

Catalogues of the Louvre collection were common, but were not produced systematically at regular intervals. This particular catalogue is not intended to be exhaustive, but to be “the safest guide for the visitor and the best starting point for the worker”. Its creation is presented as an answer to the “upheaval that the last war [World War I] has caused to the museum”, pending the publication of a more complete inventory planned in three volumes. The Louvre was indeed turned upside down during the Great War: hundreds of artworks were evacuated or heavily protected, for fear that the advancing German army would destroy cultural property.[1]

Collection display is never frozen. The catalogue itself presents the allocation of the collections in its time as imperfect and suggests a more sensible logic of classification. Neither the given nor the proposed display configuration corresponds to the current one. Some parts dedicated to paintings in 1923 now contain art objects or even antique statues.[2] This demonstrates the importance of considering a museum not only as a space, but also in its time dimension.[3]

Description and evaluation of the methods

Formal description of the source

The catalogue consists of:

- A map of a part of the first floor of the Louvre, on a double page

- An introduction (not used in the project)

- A list of abbreviations.

- A list of paintings, grouped by national schools.

- Labeled illustrations of some paintings, scattered through the list.

An item in the list has the form number author (lifespan) title position

- Number can be an index taken from the main catalogue range of unique IDs or from collection-specific ranges. In the latter case, the number is given in parentheses. It can also be a star * or “S. N°” (without number).

- Author and lifespan work together as a block. Paintings are grouped by author. When a painting has the same author as the previous one, the block is replaced by a dash.

- Titles are neither disambiguated nor universal. There are cases where multiple paintings have the same title and the same author. Some titles do not exactly match the “official title” of the painting.

- Position is the name of the room in which the painting hangs. In some cases, it is followed by the cardinal direction of the wall on which the painting is displayed. If the position is partially or totally the same as the previous position, the common part is replaced by a dash.

A labeled illustration consists of a black and white image of a painting and a caption giving the number of the painting and the last name of the author, in capitals.

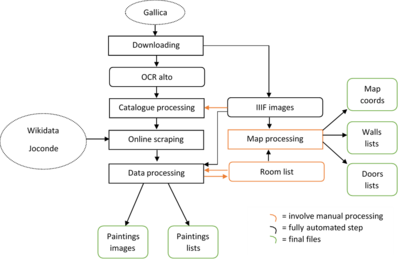

Data processing pipeline

Downloading

Alto OCR and IIIF images are directly downloaded from Gallica using the tool provided by the lab.

Evaluation

Alto OCR does not distinguish bold words, which would have been extremely useful in the following processing steps. It also contains multiple errors that hinder the whole parsing process.

Catalogue processing

The objective is to get a list of artworks characterized by their author, their title and their position in the Louvre.

The first main step of the processing is to itemize the Alto into entries. Words are treated as one long stream. An entry begins at word N if the horizontal coordinate of this word is to the left of a certain threshold, the horizontal coordinate of word N-1 is to the right of a certain threshold, and word N respects the format of a painting number, a page header or a title. After the entries have been determined, non-artwork entries (empty entries, page headers and illustration captions) are eliminated with simple rules. They are usually easy to recognize, as they contain only capital letters and numbers, but OCR errors create exceptions that are not handled well.
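The entry-start rule can be illustrated with a short Python sketch. The thresholds, the number pattern and the representation of a word as a (text, x) pair are assumptions made for the example; they are not the values or structures used in the project.

```python
import re

# Assumed formats for a painting number: "12", "(12)", "*" or the start of "S. N°".
NUMBER_RE = re.compile(r"^(\d+\.?|\(\d+\)\.?|\*|S\.)$")


def split_entries(words, left_max=200, right_min=600):
    """Group a stream of (text, x) words into entries.

    A word starts a new entry when it lies left of left_max, the previous word
    lies right of right_min, and its text looks like a number or a header.
    Both thresholds are hypothetical pixel values.
    """
    entries = []
    for n, (text, x) in enumerate(words):
        looks_like_start = bool(NUMBER_RE.match(text)) or text.isupper()
        previous_on_right = n == 0 or words[n - 1][1] > right_min
        if (x < left_max and previous_on_right and looks_like_start) or not entries:
            entries.append([text])
        else:
            entries[-1].append(text)
    return [" ".join(entry) for entry in entries]
```

Requiring the previous word to be on the right side of the page is what prevents a number appearing in the middle of a title from opening a new entry.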

The second main step is the processing of the entries themselves. The different elements of an entry are identified with regex rules. Compared with a CRF machine learning method, the regex method has the advantage of giving extremely predictable errors, as the failures are all due to bad OCR or itemization errors. On the other hand, it handles exceptions very badly and the regex rules must be designed very carefully.

The process first identifies the number, then tries to find a lifespan or a dash, deduces the position of the author from that of the lifespan if there is one, and finally tries to differentiate the title from the position. The position is predicted from the presence of a Roman numeral, a known position keyword or a dash. After that, the implicit positions and authors are merged with what is previously known. Authors are simply copied from the previous entry where there is a dash, but partial positions are merged by replacing the last words of the known position with the words present in the new position.
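The merging of implicit authors and positions can be sketched as follows. The function names, the dash character and the example room names are hypothetical; the sketch only illustrates the "replace the last words" rule described above.

```python
# Assumed placeholder character; the real one depends on the OCR output.
DASH = "-"


def merge_author(previous, current):
    """A lone dash means 'same author as the previous entry'."""
    return previous if current.strip() == DASH else current


def merge_position(previous, current):
    """Replace the last words of the known position with the new words.

    "Salle XII Nord" followed by "- Sud" gives "Salle XII Sud".
    A position that does not start with a dash is taken as complete.
    """
    words = current.split()
    if not words or words[0] != DASH:
        return current
    new_words = words[1:]
    if not new_words:
        return previous  # fully identical position
    prev_words = previous.split()
    kept = prev_words[:max(len(prev_words) - len(new_words), 0)]
    return " ".join(kept + new_words)
```

Because each result depends on the previous entry, one badly parsed entry propagates to every entry that inherits from it.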

Evaluation

The itemization works well for standard cases, but does not handle exceptions. There are a few cases in which an artwork is not separated from a parasitic header or illustration caption, or in which small artworks are not properly laid out in rows in the catalogue and therefore remain stuck together. The differentiation between true artwork entries and parasitic entries has been calibrated to minimize the number of true artworks classified as parasites. Therefore, almost no true artwork is misclassified, but some junk entries are kept in the list. 2709 entries are found.

There are two main points of failure in the identification of the parts of an entry: finding the lifespan and finding the position.

- The lifespan is often in the classic format (year_of_birth-year_of_death), but some lifespans are a text summing up what is known about the period of life of the artist. The most common cause of failure is OCR. Many parentheses are missing and many years are wrongly recognized as nonsensical series of letters. For example, (1810-1880) turned into i i S i o- i SSo)

- The position identification relies on keywords that have been manually gathered. Although they are as exhaustive as possible and include common OCR errors, there are still misclassifications. Most of the failures are due to bad entry itemization, where two or more real entries are concatenated in a single item.
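The lifespan failure mode is easy to reproduce with a minimal regex. The pattern below is an assumed simplification of the classic format, not the project's actual rule; it shows why the OCR-damaged example above cannot be recovered.

```python
import re

# Assumed pattern for the classic format "(year_of_birth-year_of_death)".
LIFESPAN_RE = re.compile(r"\((\d{4})\s*-\s*(\d{4})\)")


def find_lifespan(entry):
    """Return (birth, death) as integers, or None when no classic lifespan is found."""
    match = LIFESPAN_RE.search(entry)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))
```

A clean "(1810-1880)" is matched, while the damaged "i i S i o- i SSo)" contains neither digits nor an opening parenthesis, so the search returns None and the entry stays incomplete.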

The merging of the position is also error-prone, because of its naïve implementation. Some final so-called positions are impossible, meaning that they have been badly merged.

A last big issue with the part identification is that if the parsing fails for one entry, all the immediately following entries that share its author or position fail too. As the vast majority of authors are associated with multiple paintings, most of the incomplete rows failed because of the failure of another row.

At the end of the process, 2254 out of 2709 entries are complete, a ratio of 83%. It is not possible to quantify directly how many are correctly parsed, but a manual random check on 100 artworks suggests that more than 80% probably are (83 correct out of 100 reviewed), so roughly 1800 artworks should be usable.

Online scraping

The goal of this part is to retrieve the pictures and the width and height of the paintings.

There is no database that contains most of the artworks of the catalogue, only various small websites from which some of them can be retrieved. Wikidata was chosen because of its completeness, ease of querying and digital-humanities-friendly principles.

For each painting, a query is constructed with the last name and one of the first names of the author. Using only one first name in the query is a tradeoff between finding wrong authors and not finding authors because their full name is not commonly known. The image URL and dimensions are then scraped from the HTML with BeautifulSoup. If there is no image URL, an attempt is made to get the Joconde ID of the painting and find the right pop.culture.gouv.fr page.

Unsuccessful attempts were made to query the Joconde government database and AKG image directly. They failed because no way was found to send HTTP queries to them directly.

Evaluation

690 images and 696 painting dimensions are found. 658 paintings have both.

The reasons for this weak result are that Wikidata is incomplete, that a single parsing error makes the query fail, and that the names of authors or paintings do not always match between the catalogue and the database. For example, Rembrandt is named Rembrandt Harmensz van Rijn in the catalogue but Wikidata only knows his last name.

On the other hand, as Wikidata never gives results that only partially match the query, it is highly reliable.

Even so, the results are not fully correct because of the lack of specificity of the titles in the catalogue. As some titles are common to several paintings by the same author, some images appear multiple times, meaning that at least one of them is obviously wrong. In general, there is never any guarantee that the image retrieved is the right one and not a homograph.

As even scraping Wikidata does not provide fully reliable information, it was decided to give priority to quality over quantity and not to perform Google searches to get images, which would have been even more inaccurate.

Data cleaning and processing

This part groups together multiple small steps performed to generate clean data usable in Unity.

Positions are first split into room and specific wall using a small regex. They are then matched to a manually established list of valid rooms. The list itself has been created from the uncleaned room names. It associates every valid name with all its known variations.
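The split and the lookup in the list of valid rooms can be sketched in Python as below. The regex, the wall keywords and the choice of which variant is the canonical name are assumptions made for the example; only the two pairs of room names come from the catalogue inconsistencies mentioned in this report.

```python
import re

# Hypothetical extract of the manual list: canonical name -> known variations.
VALID_ROOMS = {
    "Salle des Sept Cheminées": ["Salle des Sept Cheminées", "III"],
    "Salle du Mobilier XVIIIe": ["Salle du Mobilier XVIIIe", "Salle des Meubles XVIIIe"],
}
# Reverse index: lower-cased variation -> canonical name.
VARIANT_TO_ROOM = {
    variant.lower(): room
    for room, variants in VALID_ROOMS.items()
    for variant in variants
}

# Assumed wall keywords (French cardinal directions) at the end of a position.
WALL_RE = re.compile(r"^(?P<room>.*?)[\s,]*(?P<wall>Nord|Sud|Est|Ouest)?\.?$")


def split_position(position):
    """Return (canonical_room, wall); either part is None when unknown."""
    match = WALL_RE.match(position.strip())
    room = match.group("room").strip(" ,.")
    return VARIANT_TO_ROOM.get(room.lower()), match.group("wall")
```

Positions whose room part is not in the reverse index come back with None as the room, which makes the unmatched names easy to list and review by hand.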

The width and height of the paintings, the authors and the titles are standardized (punctuation removal, common format).

Finally, the paintings are turned into JSON files according to their positions.

Evaluation

Because the list of valid rooms is manually created only with the unclean information given by the catalogue, wrong decisions could have been made when grouping the names, so paintings may have been attributed to the wrong room.

Text cleaning and standardizing present no particular pitfalls.

Picture retrieval

Catalogue pictures are retrieved. Using the Alto again, illustrations and their captions are selected. Because of OCR errors, two attempts are made to find the right entry for an illustration: the first based only on the number, the second based on the number and the author. If exactly one match is found, the picture is cropped from the IIIF image using the Alto coordinates.
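The two-attempt matching can be sketched as follows. The dictionary keys and the sample data are made up for illustration, and comparing the caption with the end of the author name is an assumed way of matching the last name.

```python
def match_illustration(caption_number, caption_author, entries):
    """Find the single catalogue entry that an illustration caption refers to.

    First attempt: the number alone. Second attempt: the number and the
    author's last name (captions give it in capitals). Returns None unless
    exactly one entry matches.
    """
    by_number = [e for e in entries if e["number"] == caption_number]
    if len(by_number) == 1:
        return by_number[0]
    by_both = [
        e for e in by_number
        if e["author"].upper().endswith(caption_author.upper())
    ]
    return by_both[0] if len(by_both) == 1 else None
```

Returning None in ambiguous cases favours precision: an illustration is attached to an entry only when the match is unique.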

Pictures are also retrieved from the URLs using urllib.

Evaluation

Not all illustrations could be matched. Some failures were due to OCR errors on the author name or the numbers (especially on the parentheses that distinguish the two series of IDs). Some were due to a flaw in the design of the catalogue: as the caption consists only of a number and a last name, all the unnumbered paintings by the same author are indistinguishable.

Combining the URLs and the catalogue itself, 787 images are available, which is about 29% of the 2709 entries detected.

Map processing

The processing is performed fully manually with the aid of tkinter and is based on the valid room list previously established. It consists of two parts.

The first is dedicated to the creation of the rooms themselves in Unity. Using a naïve homemade interface, the relative coordinates of the corners of each room and their doors are extracted by clicking on them on the map. For each room, walls and doors are stored in dedicated JSON files.

The second serves the displaying of a map indicating the current room in Unity. With a similar interface, the absolute position of the center of each room is extracted by clicking on it.

Evaluation

Not all valid rooms appear on the map. Some are known to belong to the Louvre. For example, two of the four sides of the Cour Carrée are missing from the map. On the other hand, some room names are probably OCR or merging artefacts. Of the 108 “valid” rooms proposed, only 75 have been identified. Furthermore, the catalogue contains inconsistencies. Some rooms that are seemingly the same are given two different names, like III and Salle des Sept Cheminées, or Salle des Meubles XVIIIe and Salle du Mobilier XVIIIe. All things considered, there is a non-negligible risk that wrong choices were made and that the rooms do not exactly correspond to the historical truth.

A conceptual limitation is that each room is defined individually with its own set of doors. Therefore, there is only a connection between a door and its corresponding room, and not between the two faces of the same door in two different rooms.

Construction of the virtual Louvre

Virtualisation introduction

The Unity framework allowed us to create the simulation with pre-built tools for 3D rendering and video-game-like mechanics. We did not want a Google Street View style experience, which is often the go-to choice of museums when they want to virtualise their collection. We wanted to be closer to a museum experience: being able to walk up to a painting, zoom in on it and see how the light and the viewing angle affect it.

To achieve this, we decided to model each room as a rectangle with four walls. All the intricacies of the Louvre architecture are lost, but this project is more a work on the paintings than on the architecture. For the same reason, the walls have a plain texture with no *normal maps* added to them (further information on normal maps in the next sections).

General description

The player is a capsule with two cameras attached to it: one for movement (third person) and one for zooming in on the paintings (first person). The original picture that was used for a painting can be displayed by pressing “q”. The map can be displayed by pressing “m” (unfortunately the quality of the picture does not allow for good localization). The name of the author and the title of a painting are displayed when the player looks at it; this is done with raycasting. The core of this project is to visualise both the data we were able to extract from the catalogue and the web and the data we were not able to extract. This is why there are many white paintings and paintings on random walls: a default “canvas”-like material, a default size and a random wall are assigned whenever the corresponding data is not available. As a result, the position of the paintings may vary from one visit to the next in the same room. By default the empty paintings are hidden; to display them, simply press “r”.

Working in unity

Unity provides a graphical interface where elements can be created directly in a scene or prefabricated in order to be instantiated later. Scripts can be attached to each element so that it can perform complex actions. Every object had to be modelled in 3D and its behavior coded; the walls, floor and paintings are all box shapes, which eases the modelling work and reduces the scene complexity.

The scene starts with an empty room containing the player, the floor and the light. The rest is then fully generated from the files.

Code architecture and data processing

Data processing

Four external inputs are required for the world generation: the walls, the doors, the paintings, and the pictures corresponding to the paintings. The first three are JSON data structures, while the last one is a set of plain picture files. Unity and C# allow for efficient JSON reading: an info structure is declared with all the corresponding JSON fields, and a Unity function is called to fill this structure and place it into a list. A transformation method assigns the missing values when required (size and cardinality).

Each JSON file is named after its room, so that loading a new room is easy: the function just has to fetch the file with the corresponding name. This process is carried out in the class called room and is repeated each time the player passes through a door. The first things to be created once the data is loaded are the walls; they are stored in a dictionary keyed by their cardinality. The paintings of the room are then transferred to their corresponding wall using the cardinality variable stored in their info structure.
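The loading step described above can be sketched as follows. This is a minimal, language-neutral illustration written in Python rather than the project's C# code. The directory layout, the field names (`width`, `height`, `cardinality`), the default size and the helper names are all assumptions made for the example; only the overall flow (load by room name, fill in missing size and cardinality, group paintings by wall) comes from the text.

```python
import json
import random
from collections import defaultdict
from pathlib import Path

# Assumed defaults: the real values used in the project are not documented.
DEFAULT_SIZE = (1.0, 1.0)
CARDINALITIES = ("north", "east", "south", "west")


def load_records(data_dir, room_name, kind):
    """Read <data_dir>/<kind>/<room_name>.json (hypothetical layout).

    A missing file yields an empty list instead of an error.
    """
    path = Path(data_dir) / kind / f"{room_name}.json"
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as handle:
        return json.load(handle)


def fill_missing(painting, rng):
    """Return a copy with a default size and a random wall where data is absent."""
    filled = dict(painting)
    if filled.get("width") is None or filled.get("height") is None:
        filled["width"], filled["height"] = DEFAULT_SIZE
    if filled.get("cardinality") not in CARDINALITIES:
        filled["cardinality"] = rng.choice(CARDINALITIES)
    return filled


def paintings_by_wall(paintings, rng=None):
    """Group paintings by the wall (cardinality) they belong to."""
    rng = rng or random.Random()
    walls = defaultdict(list)
    for painting in paintings:
        filled = fill_missing(painting, rng)
        walls[filled["cardinality"]].append(filled)
    return dict(walls)
```

Returning an empty list for a missing file is a design choice of this sketch: it would let a room without data load as an empty room rather than fail.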

Wall class and energy algorithm

The wall class manages the placing of the paintings, which turned out to be more complex than expected: two algorithms had to be used to achieve it. The method first checks whether the paintings can fit on the wall side by side. If so, they are displayed in a single row. If not, a second, physics-based algorithm is used to place them. Each painting is assigned an energy and a repulsion force, such that the paintings move away from each other and from the sides of the wall. At each iteration a painting makes a random movement, and if the movement leads to a lowering of the global energy the new position is kept. The paintings converge to a local minimum of the energy, which corresponds to a layout where they are as far away as possible from each other and from the wall edges. The doors were added later and we did not have the time to include them in the algorithm; this will have to be done in a following version. The energy algorithm still needs to be optimised and can run into trouble when the walls are too long.

Once the position of each painting has been defined, the paintings are instantiated and their texture is set from the data path stored in their info structure.
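The two placement strategies can be illustrated with a small Python sketch. It is not the project's implementation: the energy function (inverse distance between painting centres, plus inverse distance to each wall edge), the gap, the step size and the iteration count are assumptions chosen for the example, and paintings are treated as points in the energy term, so their sizes are ignored there.

```python
import math
import random


def fits_inline(widths, wall_width, gap=0.2):
    """True if all paintings fit side by side with `gap` around each of them."""
    return sum(widths) + gap * (len(widths) + 1) <= wall_width


def inline_positions(widths, wall_width, wall_height):
    """Centres for a single row with equal gaps, at mid height."""
    gap = (wall_width - sum(widths)) / (len(widths) + 1)
    positions, cursor = [], gap
    for width in widths:
        positions.append((cursor + width / 2, wall_height / 2))
        cursor += width + gap
    return positions


def energy(positions, wall_width, wall_height, eps=1e-6):
    """Assumed energy: repulsion from the four wall edges and from other paintings."""
    total = 0.0
    for i, (x, y) in enumerate(positions):
        for edge_distance in (x, wall_width - x, y, wall_height - y):
            total += 1.0 / (edge_distance + eps)
        for ox, oy in positions[i + 1:]:
            total += 1.0 / (math.hypot(x - ox, y - oy) + eps)
    return total


def relax(positions, wall_width, wall_height, iterations=2000, step=0.1, seed=0):
    """Random moves, kept only when they lower the global energy."""
    rng = random.Random(seed)
    positions = list(positions)
    current = energy(positions, wall_width, wall_height)
    if not positions:
        return positions, current
    for _ in range(iterations):
        index = rng.randrange(len(positions))
        x, y = positions[index]
        candidate = (
            min(max(x + rng.uniform(-step, step), 0.0), wall_width),
            min(max(y + rng.uniform(-step, step), 0.0), wall_height),
        )
        trial = positions[:index] + [candidate] + positions[index + 1:]
        trial_energy = energy(trial, wall_width, wall_height)
        if trial_energy < current:
            positions, current = trial, trial_energy
    return positions, current
```

A caller would use `inline_positions` when `fits_inline` is true and `relax` otherwise. Because only energy-lowering moves are accepted, the result is a local minimum that depends on the starting layout and on the random seed.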

Rendering

Textures

A texture is what Unity uses to render the surface of a 3D element. The 3D element we used is a rectangular box sized to the dimensions of the painting when they are available, which works well except for paintings with unusual shapes (ovals, clovers, etc.). Unity uses two textures to render the material, a main one and a detailed one. Having a detailed texture is more computationally expensive, but the result is greatly improved. The main texture is the one that appears from far away, and the detailed one is triggered when the player is close to the element, which is perfect for our application.

Normal map

Normal maps are textures that store, for each pixel, the direction in which the surface faces; they are needed to reproduce the interaction of light with the fine relief of a surface. This allowed us to fake the brush strokes and asperities normally present on a canvas. We used two normal maps to create the desired effect. This really improved the experience, which feels more realistic than with plain pictures. This is where most of the remaining work on 3D museums could be done: a dedicated normal map for each painting would be the way to render artworks more accurately.

Lighting

The further we advanced in the project, the more we realized how important lighting is. Discovering normal maps was a major improvement to the quality of the project, but much more could be done by lighting the paintings correctly. Unity provides many tools to create proper lighting for a scene, and an entire project could be devoted to finding the optimal lighting. Light is so important that it could justify a complete reorganization of the Louvre generation. For now, each room is destroyed when the player passes through a door and the next one is generated, which works well and does not suffer from speed problems. However, Unity also provides a tool to bake lightmaps. Baking is an expensive operation: every light source is treated as a beam reflecting multiple times on the different surfaces to create realistic lighting, and the more reflections are computed, the more accurate and expensive the result. The lightmaps are then stored and are an efficient way of creating realistic scenes. For now, the normal maps are visible only when the quality settings are set to ultra, which is not available on every computer and can induce some lag. Choosing a more data-intensive design, with every room premade and its lightmaps precomputed, would probably have given better results at the expense of more loading time between scenes.

Player light

A light has been attached to the player so that some reflections are visible on the paintings, which gives a more lively sensation. Although the result is satisfactory, a dedicated normal map for each painting could be created to render better effects. This could be done by gathering reflection data from the original paintings, or through a machine learning algorithm that takes a painting as input and outputs a corresponding normal map. We proceeded by trial and error to find the right illumination of the rooms; a more research-based approach would have yielded better results.

Interaction

Doors

Doors are represented by glowing blue rectangles and are placed according to the original map. They are not considered in the placing algorithm, so a large painting may end up overlapping a door. They could be integrated in an extension of the project, but we did not give them a cardinality in the JSON file, which makes it difficult to assign them to a specific wall. The same difficulty made it harder to make them stick out of the wall, so we simply gave them a big enough square shape. Their collision box also conflicts with the raycast algorithm, which explains why the name of a painting is not displayed when a door is in the way.

Doors load a new room upon collision: they update a collision boolean and set the new room name to their stored string. This triggers the LoadNew function with the corresponding room name.
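The door mechanism can be pictured with the following Python sketch. It only mirrors the logic described above (a collision flag, a stored room name, and a call to a loading function); the class and method names are hypothetical, and the `doors_barred` switch is an assumption standing in for the option to disable the collision box mentioned in the user guide.

```python
class RoomLoader:
    """Hypothetical stand-in for the script that reacts to door collisions."""

    def __init__(self, load_new):
        self.load_new = load_new      # callback playing the role of LoadNew
        self.collided = False         # collision boolean set by a door
        self.next_room = None         # room name stored on the door
        self.doors_barred = False     # assumed switch for the "bar doors" key

    def on_door_collision(self, door_target):
        """Called when the player touches a door leading to `door_target`."""
        if self.doors_barred:
            return
        self.collided = True
        self.next_room = door_target

    def update(self):
        """Called once per frame: load the next room if a door was hit."""
        if self.collided:
            self.collided = False
            self.load_new(self.next_room)


loaded_rooms = []
loader = RoomLoader(loaded_rooms.append)
loader.on_door_collision("Salle des Sept Cheminées")
loader.update()
```

Resetting the flag before loading ensures that a single collision triggers a single room change.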

Raycasting

Raycasting is used to detect when the player is looking at a painting: it casts a line from the center of the current camera in the direction the camera is facing. If the game object hit by the ray is a painting, its text is displayed. Unity is very helpful here: it provides an event, OnColision, in which the data of the two colliding objects is given. It is then easy to copy the text stored in the info structure of the painting that was hit to the text element present on the canvas. If the player presses “q” while the raycast hits a painting, the original picture used to make the texture of the painting is displayed.
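To make the idea concrete, here is a minimal Python sketch of the geometry behind a raycast against flat, rectangular paintings. Unity performs this test internally; the code below is not its API. The `Painting` fields and function names are assumptions for the example, and `normal`, `right` and `up` are assumed to be unit vectors.

```python
from dataclasses import dataclass


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


@dataclass
class Painting:
    title: str
    author: str
    center: tuple   # centre of the painting in world coordinates
    normal: tuple   # unit vector perpendicular to the painting
    right: tuple    # unit vector along the width
    up: tuple       # unit vector along the height
    width: float
    height: float


def hit_distance(origin, direction, painting):
    """Distance factor along the ray to the painting, or None if it is missed."""
    denom = dot(direction, painting.normal)
    if abs(denom) < 1e-9:          # ray parallel to the painting plane
        return None
    t = dot(sub(painting.center, origin), painting.normal) / denom
    if t <= 0:                     # plane is behind the camera
        return None
    point = tuple(o + t * d for o, d in zip(origin, direction))
    offset = sub(point, painting.center)
    inside = (abs(dot(offset, painting.right)) <= painting.width / 2
              and abs(dot(offset, painting.up)) <= painting.height / 2)
    return t if inside else None


def looked_at(origin, direction, paintings):
    """Return the closest painting hit by the ray, or None."""
    best = None
    for painting in paintings:
        t = hit_distance(origin, direction, painting)
        if t is not None and (best is None or t < best[0]):
            best = (t, painting)
    return None if best is None else best[1]
```

The title and author of the painting returned by `looked_at` would then be copied to the on-screen text.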

Image Display

Displaying the original picture was trickier than expected. Variations in screen dimensions and differences in picture sizes made it impossible to hard-code values in the display settings, so a resize function had to be created that takes these parameters into account to fit the picture correctly to the size of the user's screen. The map had the same problem and we were never able to make it readable. This is something that could be improved in the future.
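A resize of this kind usually comes down to picking the largest scale that fits both screen dimensions while preserving the aspect ratio. The Python sketch below shows that computation; the function name and the `margin` parameter are assumptions, not the project's actual resize function.

```python
def fit_to_screen(image_width, image_height, screen_width, screen_height, margin=0.9):
    """Return the displayed (width, height) of a picture scaled to fit the screen.

    The aspect ratio is preserved; `margin` (assumed) leaves a border around it.
    """
    if min(image_width, image_height, screen_width, screen_height) <= 0:
        raise ValueError("all dimensions must be positive")
    scale = min(screen_width / image_width, screen_height / image_height) * margin
    return image_width * scale, image_height * scale
```

For a landscape picture the width is usually the limiting dimension, and for a portrait picture the height, which is why the smaller of the two ratios is used.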

Text display and canvas

The canvas is a 2D element that holds the picture of a painting when it is activated, the text of the painting hit by the raycast, and the initial title animation. The resize problem was discussed in the previous section. A special material had to be applied to the pictures to render them: it had to be from the unlit category, otherwise the pictures appeared completely dark.

Quality and possible improvement

The game could be improved in many ways. We standardized the Louvre to the point where each room is made with the same brick wall and the same wooden floor. We have neither the dimensions nor the shape of the doors, so their visualization is very limited. More information could be gathered on the paintings to give them a more realistic look. Some errors are not handled, for instance arriving in a room for which a file is missing. The raycast algorithm could be improved so that small paintings are more easily detected and doors are ignored. More resize options could also be added, since big rooms feel too big and small rooms too small. A smarter display algorithm could also be implemented. Overall, all the functionalities are present but could be polished and reworked. As with any video game, there is always room for more features and bug fixes.

User guide

The player moves within the walls and can look at the paintings to see the name of the author and the title of the painting. The player can switch between the first-person and third-person cameras, see the paintings in full screen, and disable the collision box to avoid changing rooms.

- Player movement: (wasd)

- Move camera: (mouse/arrow keys)

- Zoom/change camera: (z)

- See source picture: (q)

- Show map: (m)

- Bar doors: (spaceBar)

- Display incomplete paintings: (r)

Milestone

Schedule

| Progress | Artwork retrieval | Map processing | Virtualisation |
|---|---|---|---|
| Done | Parsing of the catalogue | Creation of a GUI | Generation of a room |
| Done | Connection with wikidata | | |
| To be done | Connection with other databases | Use of the GUI | Painting and doors generation |
| To be done | Retrieval of the catalogue pictures | Consistency of room names | User interface and camera |
| To be done | Link everything together (all three tracks) | | |

Tasks

Catalogue parsing

Around 2200 out of 2700 artworks have been parsed. Improvement is limited due to OCR.

Online database scraping

Scraping wikidata allowed us to get around 300 pictures of paintings (out of 2200). As no online database seems to be exhaustive enough, we need to combine several sources to get as many pictures of paintings as possible, and to deal with inconsistencies across the different databases. We plan to introduce a confidence index for each picture, and to use the pictures of paintings from the catalogue itself.

Map processing

A small GUI has been created using tkinter to allow us to perform room identification in a systematic way. We still need to perform the manual extraction and labelling of the rooms. The consistency of the room names with those of the catalogue needs to be ensured.

Room generation in unity

Instead of generating the whole Louvre in one go, we take advantage of the video-game-oriented features of Unity3D to create each room on its own and travel between rooms through "gates". We take a list of walls as input and generate the room in Unity. We chose to define each wall as an object with two points and an orientation (north, west, etc.). We still need to add the generation of the doors.
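A wall defined by two points and an orientation could be represented as in the Python sketch below. The class, the field names and the axis convention (y increasing towards the north) are assumptions made for illustration; the actual JSON schema is not described here.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Wall:
    start: tuple       # (x, y) of the first end point
    end: tuple         # (x, y) of the second end point
    orientation: str   # "north", "east", "south" or "west"

    @property
    def length(self):
        return math.dist(self.start, self.end)


def rectangular_room(min_x, min_y, max_x, max_y):
    """Four walls of an axis-aligned room, keyed by orientation.

    Assumes y grows towards the north and x towards the east.
    """
    return {
        "north": Wall((min_x, max_y), (max_x, max_y), "north"),
        "east": Wall((max_x, min_y), (max_x, max_y), "east"),
        "south": Wall((min_x, min_y), (max_x, min_y), "south"),
        "west": Wall((min_x, min_y), (min_x, max_y), "west"),
    }


room = rectangular_room(0.0, 0.0, 4.0, 3.0)
```

Keying the walls by orientation makes it straightforward to attach each painting to the wall named in its data.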

Painting generator

We have to create the paintings at the right size, display their author and description, and then attach each of them to the right wall.

User environment

The player still has to be created, along with the possibility to interact with the environment.

Linking everything together

The whole pipeline is almost complete. We need to map the Python representation of rooms and artworks to a Unity3D one and agree on a data storage structure.

Links

References

- ↑ Informations and citations of the last two paragraphs come from the introduction of the Catalogue. https://gallica.bnf.fr/ark:/12148/bpt6k64771241/f12.image.r

- ↑ Current map of the Louvre (see 1st floor) : https://www.louvre.fr/sites/default/files/medias/medias_fichiers/fichiers/pdf/louvre-plan-information-francais.pdf