Quartiers Livres / Booking Paris: Difference between revisions

Bertil.wicht (talk | contribs) (→Today) |

(54 intermediate revisions by 2 users not shown)

Line 3:

This project aims at presenting an interactive map of the book industry in the middle of the XIXth century, based on [https://catalogue.bnf.fr/ark:/12148/cb326966746.public Pretod's directory of Paris typographers].

<blockquote> "Welcome to the middle of the XIXth century.

You are an aspiring author, living in Paris as a contemporary of Balzac, Sue and Hugo. You have just finished your project and urgently need to find workshops to print, edit and polish your work.

In the big city of Paris, you will need to choose carefully every detail of your print preferences: the choice is large, but you are searching for very specific tools, skills and craftsmanship.

Should your work contain images, unusual typography or handwriting, this app will give you the best information on where to look.

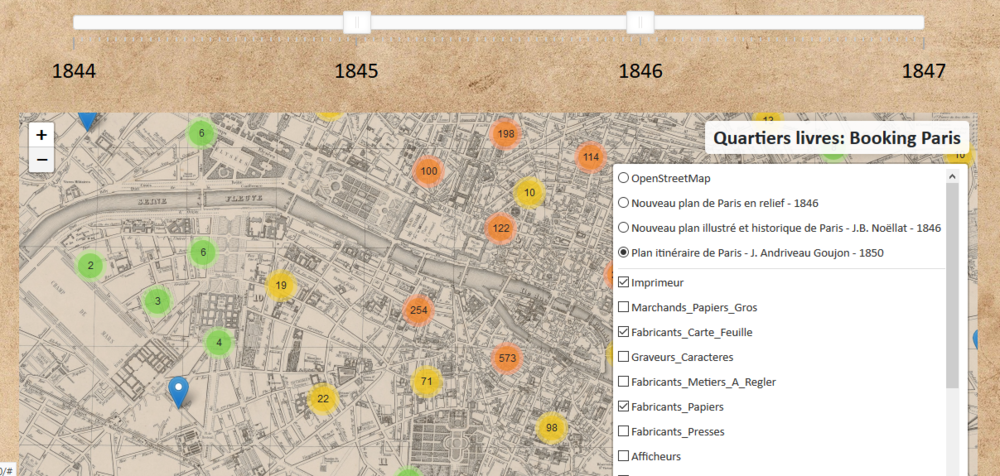

Discover our map: navigate through time and space using our time slider and Leaflet visualisation. Search for the perfect workshop by clicking on the control panel and interacting with the markers that become visible when zooming in on their clusters.

Finally, reach your destination by checking the locations on our old maps of Paris."</blockquote>

By navigating our interactive map, the user can see the different places (workshops, bookshops, etc.) at their approximate location, categorized by year and type of activity. The user can also see detailed information about each workshop, extracted from the original document.

Line 14:

=Data Presentation=

== Prétod directory ==

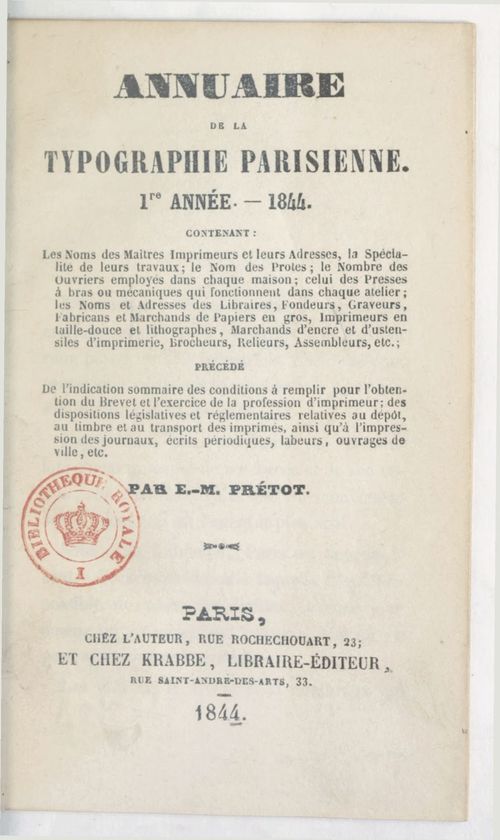

Our primary source for this work is [https://catalogue.bnf.fr/ark:/12148/cb326966746.public Pretod's yearbook]. Four of his directories, published between 1844 and 1847, are available on Gallica as images, ALTO OCR files and text files.

Line 24:

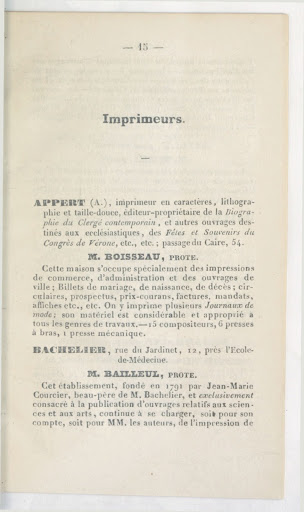

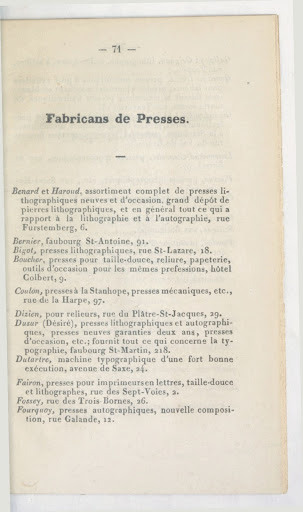

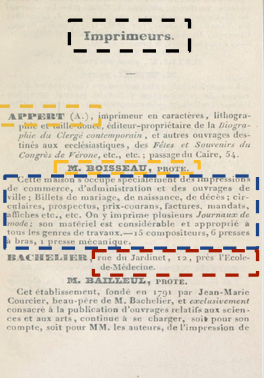

[[File:Pretod_1.jpg|500px]] [[File:Pretod_2.jpg|500px]] [[File:Pretod_3.jpg|500px]]

== Old Paris maps ==

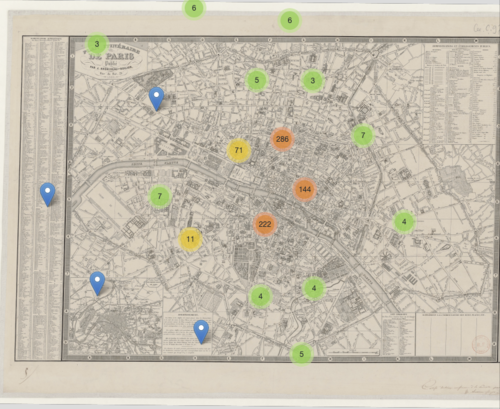

Our second main source is a set of three Paris maps from the same period (around 1845), found on Gallica, the BnF [https://gallica.bnf.fr/ark:/12148/btv1b550044782?rk=5364833;2/ website]: "Nouveau plan de Paris en relief" (1846), "Nouveau plan illustré et historique de Paris" by J.B. Noëllat (1846) and "Plan itinéraire de Paris" by J. Andriveau Goujon (1850). The maps are not shown in their original form in the app: they have been geometrically distorted to match the GPS and Leaflet representation system as closely as possible.

The maps differ in what they offer the reader. While the first one is a colourful, aesthetic illustration of Paris 'intra-muros', the other two show the geography of Paris and the layout of its streets in more detail, but lack the colours that the reader may appreciate in the first one. The "Plan itinéraire de Paris" also provides additional information, such as a directory of public places and their location on the map.

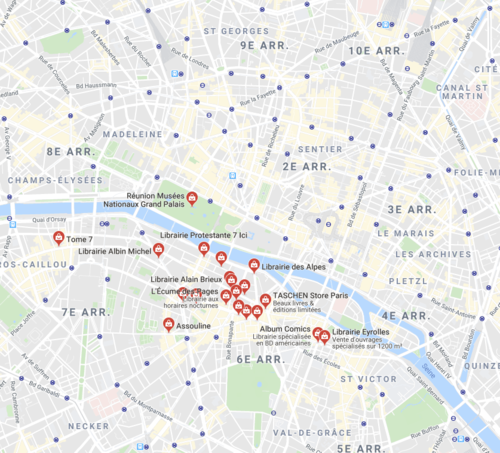

= Project process description =

The overall pipeline is schematized in the following diagram.

[[File:pipeline.PNG]]

== Data extraction and database construction ==

=== OCR ===

The documents by Prétod had already been processed by OCR and were available as text files for download on Gallica. Although the quality of the OCR is far from perfect, it allowed us to start the whole pipeline without major problems.

=== Text to database parsing and segmenting ===

The documents contain only two distinct layouts: one for the printers ("imprimeurs", whose names appear in UPPERCASE letters and who have a specific "Prote." field) and one for all the other workshops.

For each document, the different workshops were first manually segmented into separate source files, originally because of the different structures of the "imprimeurs" and the other activities. Even after lengthy checks, we had a different number of files per year. With regular expressions, it was then possible to extract the features of interest: names, addresses, prote and comments.

[[File:seg.PNG]]

== Mapping data ==

Paris has changed over time, and some of the streets in our documents no longer exist. Some addresses have therefore changed between then and now. Two options are available for converting the addresses to a set of coordinates:

* Using a conversion file for old Paris addresses from the EPFL DH lab

* Using the Google Geocoding API through the usual [https://pypi.org/project/geopy/ GeoPy queries]

As the performance of the first option was low, we opted for the Google API and counted on its ability to convert old names, or even places that no longer exist, to conventional GPS coordinates.

=== Maps ===

The old maps of Paris do not natively match the OSM maps and the Leaflet plugin that allow for the interactive display of the data. Not only the metrics but also the precision of the maps differ. To fit the geometry of the old maps to the modern one, the maps were georeferenced with the online tool [https://www.georeferencer.com/ Georeferencer], and tiles were extracted from the modified version.

=== Leaflet plugin ===

In the app, we use a Leaflet plugin to display the maps and the content of the different layers.

A first slider allows the user to filter the data by a range of years. At each modification, the maps are dynamically regenerated to show only the locations (markers) of the relevant workshops.

The locations are indicated by single markers but, when zooming out, nearby ones are clustered and appear as a circle indicating the mean location of the cluster and its size. This allows for a smoother visualisation.

On the right side, a control panel allows the user to choose which activities (types of workshop) are displayed on the map. Once again, the data is dynamically added or removed when using these controls.

Finally, when clicking on a single marker, the user can see all the data for the corresponding workshop: name, address, comment, year and prote (if applicable).

This way, the user can filter the data of the directory ("annuaire") interactively and select the workshops of interest.

[[File:leaflet_slider.PNG|1000px]]

== Interactivity shortcomings ==

Compared to our goal, we can already identify a few shortcomings and missing features, especially regarding the user experience:

1. Given the narrative context in which we situate our app, we are unable to suggest relevant workshops or to select them based on the type of book the user is interested in, or on other features.

2. The comments contain rich information, and some tag-based interactivity (cf. point 1), based on NLP applied to the comment fields, is still to be implemented in the app.

3. Leaflet controls only allow for one-dimensional filtering (here, the type of workshop and the year range, each on its own), so some features are missing, such as 2D filtering (printers of 1844, editors of 1845 and so on).

4. Interactivity between the elements themselves is also missing. For instance, a workshop could link to other workshops that share common properties (tag, district, etc.) or to its occurrences in other years.

= Performance assessment=

Throughout the performance assessment, we first estimate the quality of the pipeline as if every step were independent, so as to identify the steps that can be improved later. We discuss this model and its hypothesis in the discussion below.

For simplicity, our main criterion for declaring two values equal is an exact match between the compared values. We will see later that this simple method has drawbacks and would need to be refined for a more advanced study.

Each time a ratio is provided, the calculation was done on a sample of the dataset whose size is shown in the denominator.

==OCR==

Line 107:

<code>OCR_addresses = 11/20</code>

We now continue with the performance of the OCR for the recognition of names. We assume that this performance is independent of that of the addresses, since the position in the text is different and the names do not span several lines as the addresses do, which seems to be a problem for the OCR.

The protes have the same typography as the names in the book, so it can be assumed that their recognition rate is the same as for the names of printers.

Line 123:

In almost half of the cases, the result is therefore either entirely correct or entirely wrong; in the remaining cases, only one of the two values is correct.

If we now take into account the "protes":

<code>

perfect_protect_chances = 11/20 * 11/20 * 10/21

</code>

Line 139:

== From address to GPS coordinates==

To convert the addresses into coordinates, we have two options at our disposal:

* matching the addresses with the old Paris database from the DHLab

* using the Google Geocoding API with GeoPy

=== Identification by address directory of old Paris===

Let's first consider the old Paris address database.

To match the addresses between this database and our list of addresses, we required an exact match of the address content (address indicator, street name and number). We will see later how we could have chosen a better option here.

We see in test_GEO.csv that only 29 lines were written after the matching against the old Paris address directory, i.e. a ratio of 29/6203, less than 1/200.

Line 155:

</code>

Given the unsatisfactory results of this matching method, we decided to use the Google Geocoding API for our app. Again, this result is discussed later.

=== Identification by Google Geocoding API ===

Let's assume that the Google Geocoding API is an independent process. If this were indeed the case, one could naively compute the number of correct coordinates returned for a sample of known addresses and coordinates, such as the ones from the old Paris address database of the DHLab.

However, the API clearly implements a matching strategy that tends to cluster the query inputs and identify any address with a close known equivalent. For instance, a query with mistakes such as "Sorbonne, Pzarés, 34..." will be recognised as very similar to "Sorbonne, Paris, 34" and to correctly written addresses.

Therefore, the performance of the API is not at all independent of the other elements of the pipeline, and one cannot measure the quality of the API alone.

At this point, we need to abandon the idea of estimating the overall performance by combining the performance measures of individual pipeline blocks, and consider a more general estimator for the whole pipeline.

== Performance assessment discussion ==

As raised above and during the project presentation, a performance assessment that assumes statistically independent transformations throughout the pipeline rests on a wrong hypothesis, and more direct assessment methods should be defined.

A very simple measure of the global quality of the application is the ratio of the number of correctly placed points to the size of the dataset.

By sampling 50 (almost) randomly selected markers on the map and manually checking the correctness of each address, we find that this estimator yields an overall performance of 36/50, which is much higher than what we estimated from the individual pipeline blocks.

The main explanation for this result is that the estimator of the Google API performance proposed above is wrong and has no statistical value.

It should be understood that the previous attempt at estimating the performance of each step of the pipeline aimed at better describing the process and our confidence in it, and at constructing an estimate under the hypothesis of independent steps. The comparison with the latter result clearly shows the inconsistency of this approach.

----

Another important matter during the development of the pipeline, and more importantly for its performance evaluation, is the means of conversion from addresses to GPS coordinates. We saw that we obtain very low performance when matching the addresses between the two databases and that the old Paris address database therefore only accounts for a very small share of the identified coordinates. For simplicity, we have always assumed that the evaluation of each module relied on an exact match between the compared contents, such as the address strings. It is however not guaranteed that this method is optimal at all; in fact, some simple experiments with the Google Geocoding API lead us to think that other matching strategies can be much more efficient. The results of the last section should always be read bearing in mind that the classification of "good" or "bad" performance is based on exact word matching and not on smarter strategies, whereas the global estimator that we introduced accounts for the smart strategies offered, for instance, by the Google Geocoding API.

This choice also appears as an important shortcoming of our original approach, and the choice of metric for string comparison should be improved in further work on this project.

= Analysis =

In this semester project, the main focus was not on a specific theme or analysis, and we did not begin with a specific research question to answer. However, with the main source that we chose, we expected from the beginning to get a rich overview of the book business in Paris at that time. Still today, it is common knowledge that Paris has a rich cultural life, especially in the book industry. A French-speaking Swiss writer, for example, could only be considered famous if their book had gained recognition in France; and for that, the book had to be printed by one of the Paris publishing houses.

So, even without an academic research question, the small narrative around our project raised a small question: where should you go if you are an aspiring writer and want to publish your newly written book? This in turn raises another, smaller question: where are the book neighbourhoods of Paris? We present these preliminary results in this part.

For the analysis of our results, we only look at two specific types of crafts and workshops: first, the booksellers, which appear together with the publishers in our data, and second, the printers' workshops.

== Maps ==

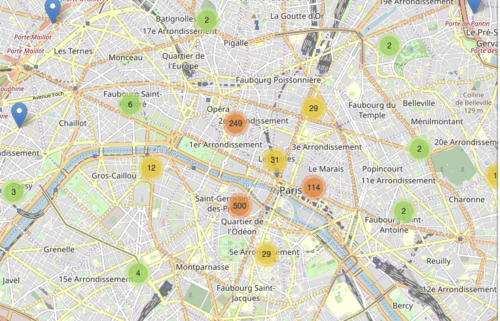

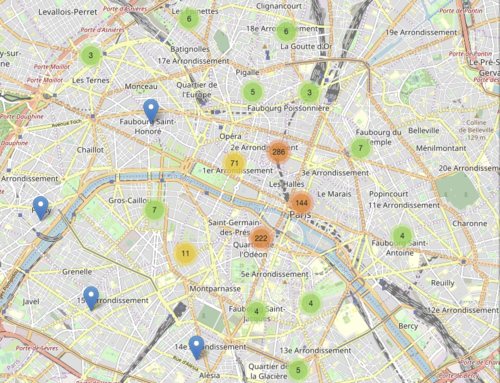

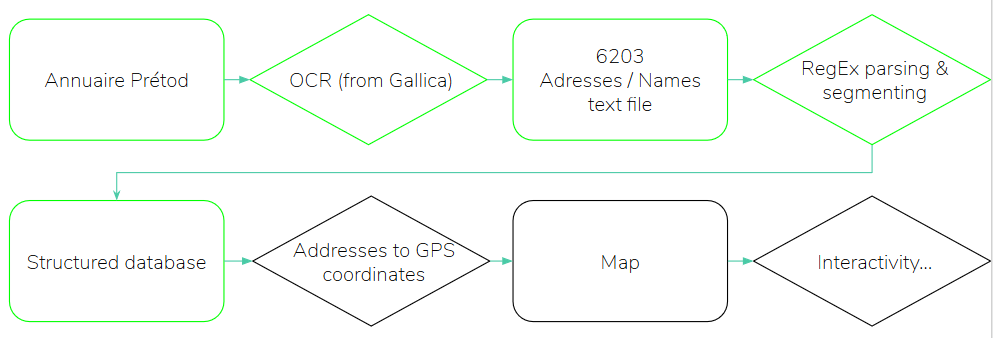

=== Bookshops and Publishers ===

[[File:LibrairesEditeursToday.png|500px]] [[File:LibrairesEditeursOld.png|500px]]

=== Printers ===

[[File:ImprimeursToday.png|500px]] [[File:ImprimeursOld.png|500px]]

== Brief Conclusions ==

The clusters in the two maps appear in roughly the same areas. Adjustments to the code and the clustering could make this a bit clearer, but we can still see that the two types of places aggregate in the same areas: between the Ist, IVth and VIth arrondissements of Paris.

== Today ==

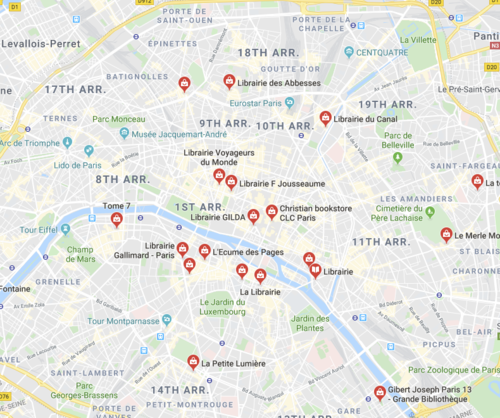

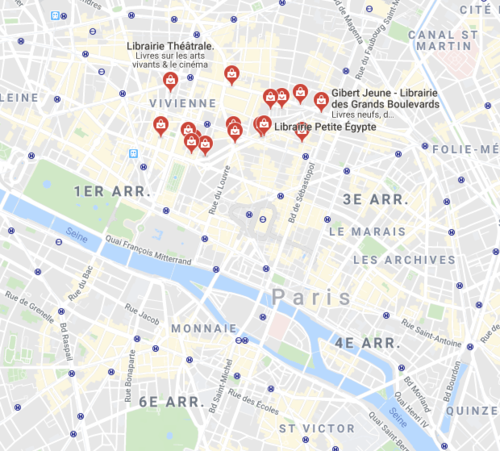

Even if our points can be shown on a modern map, this does not let us compare them with the modern locations of such places. Although these are obviously not rigorous results, we wanted to show that a lot of bookshops are still present in the area today. In the image below, we present the result obtained on Google when searching for bookshops ("librairies") in Paris, which is not very telling.

[[File:LibrairiesGoogleMaps.png|500px]]

However, with more precise searches for "librairies" with specific area names such as "Saint-Germain", "Le Marais" or "Le Sentier", we can see that there are still plenty of them in the area, and that in a single neighbourhood you can find more bookshops than in cities like Geneva or Lausanne.

[[File:LibrairiesMarais.png|500px]][[File:LibrairiesSentiers.png|500px]][[File:LibrairiesStGermain.png|500px]]

With a more rigorous visualisation of today's book industry, it might be possible to show the evolution of this industry and perhaps, by extension, to give an account of the intellectual life and consumption of people.

=Project calendar =

Latest revision as of 17:49, 18 December 2019

Goal of the project

This project aims at presenting an interactive map of the book industry in the middle of the XIXth century, based on Pretod's directory of Paris typographers.

"Welcome to the middle of the XIXth century.

You are an aspiring author, living in Paris as a contemporary of Balzac, Sue and Hugo. You have just finished your project and urgently need to find workshops to print, edit and polish your work. In the big city of Paris, you will need to choose carefully every detail of your print preferences: the choice is large, but you are searching for very specific tools, skills and craftsmanship. Should your work contain images, unusual typography or handwriting, this app will give you the best information on where to look. Discover our map: navigate through time and space using our time slider and Leaflet visualisation. Search for the perfect workshop by clicking on the control panel and interacting with the markers that become visible when zooming in on their clusters.

Finally, reach your destination by checking the locations on our old maps of Paris."

By navigating our interactive map, the user can see the different places (workshops, bookshops, etc.) at their approximate location, categorized by year and type of activity. The user can also see detailed information about each workshop, extracted from the original document.

Data Presentation

Prétod directory

Our primary source for this work is Pretod's yearbook. Four of his directories, published between 1844 and 1847, are available on Gallica as images, ALTO OCR files and text files. Each document lists the names and addresses of different types of activities related to typography, printing, editing and book production.

The documents are between 116 and 174 pages long, divided into sections (named after the type of workshop they address), and follow a similar structure across the years, with some exceptions.

As of December 2019, very little information about the author can be found on the internet. We know from his documents that he was a typographer and lived in Paris, according to the address shown on the front page of the documents.

Old Paris maps

Our second main source is a set of three Paris maps from the same period (around 1845), found on Gallica, the BnF website: "Nouveau plan de Paris en relief" (1846), "Nouveau plan illustré et historique de Paris" by J.B. Noëllat (1846) and "Plan itinéraire de Paris" by J. Andriveau Goujon (1850). The maps are not shown in their original form in the app: they have been geometrically distorted to match the GPS and Leaflet representation system as closely as possible.

The maps differ in what they offer the reader. While the first one is a colourful, aesthetic illustration of Paris 'intra-muros', the other two show the geography of Paris and the layout of its streets in more detail, but lack the colours that the reader may appreciate in the first one. The "Plan itinéraire de Paris" also provides additional information, such as a directory of public places and their location on the map.

Project process description

The overall pipeline is schematized in the following diagram.

Data extraction and database construction

OCR

The documents by Prétod had already been processed by OCR and were available as text files for download on Gallica. Although the quality of the OCR is far from perfect, it allowed us to start the whole pipeline without major problems.

Text to database parsing and segmenting

The documents contain only two distinct layouts: one for the printers ("imprimeurs", whose names appear in UPPERCASE letters and who have a specific "Prote." field) and one for all the other workshops.

For each document, the different workshops were first manually segmented into separate source files, originally because of the different structures of the "imprimeurs" and the other activities. Even after lengthy checks, we had a different number of files per year. With regular expressions, it was then possible to extract the features of interest: names, addresses, prote and comments.
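As an illustration of the regular-expression step, here is a minimal sketch in Python. The entry layout ("NAME, street, number. Prote. X. comment"), the pattern `ENTRY_RE` and the function `parse_entry` are assumptions made for this example; the actual expressions used in the project are not given on this page.

```python
import re

# Hypothetical entry layout (an assumption, not the project's actual format):
#   "NAME, street, number. Prote. Someone. free-text comment"
ENTRY_RE = re.compile(
    r"^(?P<name>[^,]+),\s*"                      # name: everything up to the first comma
    r"(?P<address>.+?,\s*\d+(?:\s*bis)?)\.\s*"   # address: street, then a house number
    r"(?:Prote\.\s*(?P<prote>[^.]+)\.\s*)?"      # optional "Prote." field (printers only)
    r"(?P<comment>.*)$"                          # whatever remains is the comment
)

def parse_entry(line):
    """Return a dict with name, address, prote and comment, or None if no match."""
    match = ENTRY_RE.match(line.strip())
    if match is None:
        return None
    # Empty or missing groups are normalised to None.
    return {key: (value.strip() if value else None)
            for key, value in match.groupdict().items()}

printer = parse_entry("DUPONT, rue de la Harpe, 45. Prote. Martin. Impressions en couleurs.")
bookshop = parse_entry("Durand, quai des Augustins, 17. Librairie ancienne.")
```

In this sketch the "Prote." group is optional, so the same pattern covers both layouts; with real OCR output, one pattern per layout would probably be easier to maintain.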

Mapping data

Paris has changed over time, and some of the streets in our documents no longer exist. Some addresses have therefore changed between then and now. Two options are available for converting the addresses to a set of coordinates:

- Using a conversion file for old Paris addresses from the EPFL DH lab

- Using the Google Geocoding API through the usual GeoPy queries

As the performance of the first option was low, we opted for the Google API and counted on its ability to convert old names, or even places that no longer exist, to conventional GPS coordinates.
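The geocoding step can be sketched as follows. This is only an illustration: the helper names `build_query` and `geocode_addresses` are hypothetical, and the geocoder is passed in as a callable so that the sketch runs without network access or an API key.

```python
def build_query(address, city="Paris", country="France"):
    """Append city and country so the geocoder searches in the right place."""
    return f"{address}, {city}, {country}"

def geocode_addresses(addresses, geocode):
    """Map each address to (latitude, longitude), or None when nothing is found.

    `geocode` is any callable that takes a query string and returns an object
    with `latitude` and `longitude` attributes, or None (as GeoPy geocoders do).
    """
    coordinates = {}
    for address in addresses:
        location = geocode(build_query(address))
        if location is None:
            coordinates[address] = None
        else:
            coordinates[address] = (location.latitude, location.longitude)
    return coordinates
```

With GeoPy, the callable could be `GoogleV3(api_key="...").geocode` (from `geopy.geocoders`), where the key is a placeholder to be replaced by a real one.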

Maps

The old maps of Paris do not natively match the OSM maps and the Leaflet plugin that allow for the interactive display of the data. Not only the metrics but also the precision of the maps differ. To fit the geometry of the old maps to the modern one, the maps were georeferenced with the online tool Georeferencer, and tiles were extracted from the modified version.

Leaflet plugin

In the app, we use a Leaflet plugin to display the maps and the content of the different layers.

A first slider allows the user to filter the data by a range of years. At each modification, the maps are dynamically regenerated to show only the locations (markers) of the relevant workshops.

The locations are indicated by single markers but, when zooming out, nearby ones are clustered and appear as a circle indicating the mean location of the cluster and its size. This allows for a smoother visualisation.

On the right side, a control panel allows the user to choose which activities (types of workshop) are displayed on the map. Once again, the data is dynamically added or removed when using these controls.

Finally, when clicking on a single marker, the user can see all the data for the corresponding workshop: name, address, comment, year and prote (if applicable).

This way, the user can filter the data of the directory ("annuaire") interactively and select the workshops of interest.

Interactivity shortcomings

Compared to our goal, we can already identify a few shortcomings and missing features, especially regarding the user experience:

1. Given the narrative context in which we situate our app, we are unable to suggest relevant workshops or to select them based on the type of book the user is interested in, or on other features.

2. The comments contain rich information, and some tag-based interactivity (cf. point 1), based on NLP applied to the comment fields, is still to be implemented in the app.

3. Leaflet controls only allow for one-dimensional filtering (here, the type of workshop and the year range, each on its own), so some features are missing, such as 2D filtering (printers of 1844, editors of 1845 and so on).

4. Interactivity between the elements themselves is also missing. For instance, a workshop could link to other workshops that share common properties (tag, district, etc.) or to its occurrences in other years.
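The 2D filtering mentioned in point 3 could, for instance, be done on the data side before the markers are handed to the map. The sketch below is an assumption about how this might look; the field names `activity` and `year` and the function `filter_workshops` are hypothetical.

```python
def filter_workshops(workshops, selections):
    """Keep the workshops whose (activity, year) pair is one of `selections`."""
    wanted = set(selections)
    return [w for w in workshops if (w["activity"], w["year"]) in wanted]

# Toy records with hypothetical field names.
workshops = [
    {"name": "A", "activity": "imprimeur", "year": 1844},
    {"name": "B", "activity": "imprimeur", "year": 1845},
    {"name": "C", "activity": "editeur", "year": 1845},
]

# "Printers of 1844, editors of 1845": a selection a single slider plus a
# single type control cannot express.
selected = filter_workshops(workshops, [("imprimeur", 1844), ("editeur", 1845)])
```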

Performance assessment

Throughout the performance assessment, we first estimate the quality of the pipeline as if every step were independent, so as to identify the steps that can be improved later. We discuss this model and its hypothesis in the discussion below.

For simplicity, our main criterion for declaring two values equal is an exact match between the compared values. We will see later that this simple method has drawbacks and would need to be refined for a more advanced study.

Each time a ratio is provided, the calculation was done on a sample of the dataset whose size is shown in the denominator.

OCR

We started with the OCR of pages 20 to 25 of the 1844 directory (20 addresses), measuring the number of correctly transcribed addresses over the total number of addresses.

OCR_addresses = 11/20

We now continue with the performance of the OCR for the recognition of names. We assume that this performance is independent of that of the addresses, since the position in the text is different and the names do not span several lines as the addresses do, which seems to be a problem for the OCR.

The protes have the same typography as the names in the book, so it can be assumed that their recognition rate is the same as for the names of printers.

We can now statistically evaluate the chances of having a perfect result (correct address and correct name) or a totally false result, assuming the independence of the recognition of addresses and names and without considering the protes.

perfect_chances = 11/20 * 10/21 = 0.2619047619047619

false_chances = 9/20 * 11/21 = 0.2357142857142857

false_chances + perfect_chances = 0.4976190476190476

In almost half of the cases, the result is therefore either entirely correct or entirely wrong; in the remaining cases, only one of the two values is correct.

If we now take into account the "protes":

perfect_protect_chances = 11/20 * 11/20 * 10/21

In this case, about 1/7 of the values are perfect.
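The products above can be checked with exact fractions. The sketch below simply reproduces the formulas as they are written in this section, under the same independence assumption; the variable names `ocr_addresses` and `ocr_names` are introduced here for readability.

```python
from fractions import Fraction

ocr_addresses = Fraction(11, 20)  # address recognition rate measured above
ocr_names = Fraction(10, 21)      # name recognition rate used in the formulas

# Address and name both correct, assuming independence.
perfect_chances = ocr_addresses * ocr_names              # 11/42
# Address and name both wrong.
false_chances = (1 - ocr_addresses) * (1 - ocr_names)    # 9/20 * 11/21 = 33/140
# Formula for the protes, reproduced as written in the text.
perfect_protect_chances = ocr_addresses * ocr_addresses * ocr_names  # 121/840

# Distance between the last value and the 1/7 quoted in the text.
gap_to_one_seventh = abs(perfect_protect_chances - Fraction(1, 7))   # 1/840
```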

We retain the most important value to characterise OCR performance: address recognition, at 11/20.

Let's now look at how well our algorithm identifies names, comments and addresses and places them correctly in the dataframe. To do this, we manually compare each line of the text file with the database on a small sample. Although this performance depends on the quality of the OCR, it is independent of the previous measurement, which only assessed the accuracy of address transcription and not the general segmentation. The measurement is carried out on 40 printers' workshops from 1844, starting on page 26 of the Prétod directory.

text_to_DF = 36/40

From address to GPS coordinates

To convert the addresses into coordinates, we have two options at our disposal:

- matching the addresses with the old Paris database from the DHLab

- using the Google Geocoding API with GeoPy

Identification by address directory of old Paris

Let's first consider the old Paris address database. To match the addresses between this database and our list of addresses, we required an exact match of the address content (address indicator, street name and number). We will see later how we could have chosen a better option here.

We see in test_GEO.csv that only 29 lines were written after the matching against the old Paris address directory, i.e. a ratio of 29/6203, less than 1/200.

Among these 29 lines, only 13 are actual address matches; the others contain errors in their address.

13/6203 = 0.002095760116072868

Given the unsatisfactory results of this matching method, we decided to use the Google Geocoding API for our app. Again, this result is discussed later.

Identification by Google Geocoding API

Let's assume that the Google Geocoding API is an independent process. If this were indeed the case, one could naively compute the number of correct coordinates returned for a sample of known addresses and coordinates, such as the ones from the old Paris address database of the DHLab.

However, the API clearly implements a matching strategy that tends to cluster the query inputs and identify any address with a close known equivalent. For instance, a query with mistakes such as "Sorbonne, Pzarés, 34..." will be recognised as very similar to "Sorbonne, Paris, 34" and to correctly written addresses. Therefore, the performance of the API is not at all independent of the other elements of the pipeline, and one cannot measure the quality of the API alone.

At this point, we need to abandon the idea of estimating the overall performance by combining the performance measures of individual pipeline blocks, and consider a more general estimator for the whole pipeline.

Performance assessment discussion

As raised above and during the project presentation, assessing performance by assuming statistically independent transformations throughout the pipeline rests on a wrong hypothesis, and more direct assessment methods should be defined.

A very simple measure of the global quality of the application is the ratio of the number of correctly placed points to the size of the dataset.

By sampling 50 (almost) randomly selected markers on the map and manually checking the accuracy of each address, we find that this estimator yields an overall performance of 36/50 = 0.72, which is much higher than what we estimated from the individual pipeline blocks.
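Since the sample is small, it can help to report the ratio together with an uncertainty range. The sketch below computes the global estimator and, as an optional addition that is not part of the original assessment, a Wilson score interval at an assumed 95% level. It also assumes the markers were drawn approximately at random.

```python
import math
from typing import Tuple


def global_estimator(correct: int, sampled: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Return (ratio, lower, upper) for the share of correctly placed markers.

    The bounds are a Wilson score interval; z = 1.96 corresponds to an
    approximate 95% confidence level (an assumption made for illustration).
    """
    if sampled <= 0 or not 0 <= correct <= sampled:
        raise ValueError("need 0 <= correct <= sampled and sampled > 0")
    p = correct / sampled
    denom = 1 + z ** 2 / sampled
    centre = (p + z ** 2 / (2 * sampled)) / denom
    half_width = z * math.sqrt(p * (1 - p) / sampled + z ** 2 / (4 * sampled ** 2)) / denom
    return p, centre - half_width, centre + half_width


# Values from the manual check described above: 36 correct markers out of 50.
ratio, lower, upper = global_estimator(36, 50)
print(f"ratio={ratio:.2f}, interval=({lower:.2f}, {upper:.2f})")
```

With only 50 markers the interval is wide, so the estimate should be read as an order of magnitude rather than a precise figure.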

The main explanation that can be given to this result is that the estimator of the google API performance proposed above is wrong and has no statistical value.

It should be understood that the previous attempt at estimating the performance of each step of the pipeline aimed at better describing the process and the confidence we have in it, and built its estimate on the hypothesis of independent steps. The comparison with the global result clearly shows the inconsistency of this approach.

Another important matter during the development of the pipeline, and even more so for its performance evaluation, is the means of conversion from addresses to GPS coordinates. We saw that matching addresses between the two databases performs very poorly, and that the old Paris address database therefore accounts for only a very small share of the identified coordinates. For simplicity, we always assumed that the performance of each module should be evaluated by exact matches between the compared contents, such as address strings. There is no guarantee, however, that this method is optimal; in fact, some simple experiments with the Google Geocoding API lead us to think that other matching strategies can be much more effective. The results of the previous section should therefore always be read keeping in mind that the classification into "good" or "bad" performance is based on exact word matching rather than smarter strategies, whereas the global estimator that we introduced accounts for the smart strategies provided, for instance, by the Google Geocoding API.

This choice also appears as an important shortcoming of our original approach, and the choice of metric for string comparison should be improved in further work on this project.
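As one possible direction, exact string comparison could be replaced by a similarity score. The sketch below uses `difflib.SequenceMatcher` from the Python standard library. The normalisation step, the 0.8 threshold and the helper names are assumptions chosen for illustration, not a tested recommendation.

```python
from difflib import SequenceMatcher
from typing import Iterable, Optional


def normalise(address: str) -> str:
    """Lower-case the address, drop commas and collapse repeated spaces."""
    return " ".join(address.lower().replace(",", " ").split())


def similarity(a: str, b: str) -> float:
    """Similarity between two addresses, from 0.0 (different) to 1.0 (identical)."""
    return SequenceMatcher(None, normalise(a), normalise(b)).ratio()


def best_match(query: str, candidates: Iterable[str], threshold: float = 0.8) -> Optional[str]:
    """Return the most similar candidate, or None if none reaches the threshold."""
    best = max(candidates, key=lambda c: similarity(query, c), default=None)
    if best is None or similarity(query, best) < threshold:
        return None
    return best


# An OCR-damaged query can still be matched to its clean counterpart.
print(best_match("Sorbonne, Pzarés, 34", ["Sorbonne, Paris, 34"]))
```

The threshold trades missed matches against false matches, so it would need to be calibrated on a manually checked sample of addresses.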

Analysis

In this semester project, the main focus was not on a specific theme or analysis, and we did not begin with a specific research question to answer. However, with the main source that we chose, we expected from the beginning to get a rich overview of the book business in Paris at that time. Still today, it is common knowledge that Paris has a rich cultural life, especially in the book industry. A French-speaking Swiss writer, for example, could only be considered famous if their book had gained recognition in France, and to achieve that, the book had to be printed by one of the Paris publishing houses.

So, even without an academic research question, the small narrative around our project raised a question: where should you go if you are an aspiring writer and want to publish your newly written book? This in turn raises a smaller question: where are the book neighbourhoods of Paris? We present these first results in this part. For the analysis of our results, we only look at two specific types of crafts and workshops: first, the booksellers, which appear together with publishers in our data, and second, the printers' workshops.

Maps

Bookshops and Publishers

Printers

Brief Conclusions

The clusters in the two maps appear in roughly the same areas. Adjustments to the code and the clustering could make this a bit clearer, but we can still see that the two types of places aggregate in the same areas, between the Ist, IVth and VIth arrondissements of Paris.

Today

Even though our points can be shown on a modern map, they do not let us compare with the present-day locations of such places. Although these are obviously not rigorous results, we wanted to show that a lot of bookshops are still present in the area today. In the image below, we present the result that you get on Google if you look for libraries in Paris, which is not very telling.

However, with a more precise search for "librairies" combined with a specific area name such as "Saint-Germain", "Le Marais" or "le Sentier", we can see that there are still plenty of them in the area, and that in a single neighbourhood you can find more bookshops than in cities like Geneva or Lausanne.

A more rigorous visualisation of today's book industry might make it possible to show the evolution of this industry and, by extension, to give an account of the intellectual life and consumption of people.

Project calendar

| Deadline | Focus |
|---|---|
| 25.11 | Mapping a minimal set of data |
| 2.12 | Prototype of web application; database ready from Annuaire Pretod; getting descriptions ready for the map; extracting sizes of printers' workshops |
| 9.12 | Implementation of search results and visualisation of book neighbourhoods in the city; adding data on publishers from the BnF |
| 16.12 | Improvement of UI and final app design |

Because of the troubles we encountered with the data from the annuaire, we ultimately used neither the extra information about the size of the workshops nor the data about every book published by each workshop.