Deciphering Venetian handwriting: Difference between revisions

Jeremy.mion (talk | contribs) No edit summary |


==Introduction== | ==Introduction== | ||

The goal of this project is to create a pipeline that allows reestablishing the mapping between the | The cadasters are essential documents that describe the land in a reliable and precise manner. The shape of the lots, buildings, bridges, and layout of other urban works are meticulously documented and annotated with a unique number. The "[http://www.archiviodistatovenezia.it/siasve/cgi-bin/pagina.pl?Chiave=7053&ChiaveAlbero=7059&ApriNodo=0&Tipo=fondo Sommarioni]" are the key to reading the map, as they contain the parcel number (reference to the map), the owner's name, the toponym, the intended use of the land or building, and the surface area of the land. The Napoleonic [https://fr.wikipedia.org/wiki/Cadastre_de_France cadaster] is an official record of the entire French Empire. On the 15th of September 1807, a new law ordered that the French Empire be meticulously measured and documented. This allowed the government to tax its subjects for the land that they owned. More historical information can be found in this article from [https://www.lemonde.fr/talents-fr/article/2007/03/19/la-naissance-du-cadastre-en-1807_884751_3504.html Le Monde]. | ||

These documents now allow historians to research the evolution of the city. The cadaster that this project will focus on is the Venetian cadaster created between 1807 and 1816 as a result of the law previously mentioned. | |||

These documents are a very useful source of information for historians studying these periods of history. To help them in this task, large amounts of historical documents have been digitized in the scope of the [https://www.timemachine.eu/ Venice Time Machine] project. The "Sommarioni" have been the focus of previous projects that manually transcribed the content of these records. Unfortunately, during this process the link to the page that contained the information was lost. A previous attempt to reestablish this link was made in the [http://veniceatlas.epfl.ch/tag/ocr/ Mapping Cadasters, DH101 project]. | |||

The motivation of this project was to attempt to improve the result that they obtained by trying a new approach. | |||

The goal of this project is to create a pipeline that allows reestablishing the mapping between the digital scans of the "Sommarioni" and the digital version of it as an excel spreadsheet. | |||

To produce this pipeline, we combine a deep neural network for handwriting recognition, a CycleGAN to extract patches, classical image processing techniques, and unsupervised machine learning. | |||

The pipeline outputs the spreadsheet enriched with links to the original source document. If our matching procedure used the Possessore field to identify the match, a [https://images.center/iiif_sommarioni/reg1-0003/704,2578,1119,132/full/0/default.jpg link] to that patch is added to the Excel document. This is useful to check whether the automatic matching is correct. A second image [https://images.center/iiif_sommarioni/reg1-0003/0,2578,6000,132/full/0/default.jpg link] is added to show the entire line in the source document. | |||

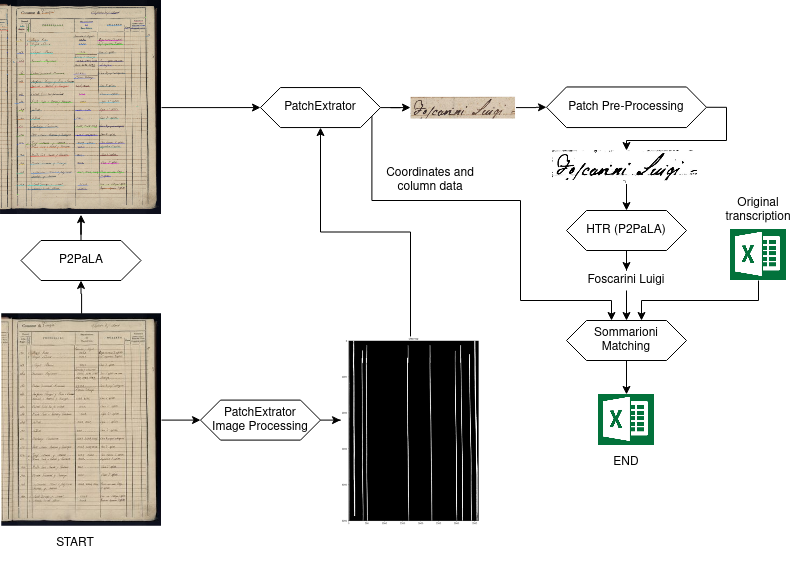

[[File:Sommarioni_Pipeline.png|center|Pipeline of the extraction process.]] | |||

==Planning== | ==Planning== | ||


===Week 09=== | ===Week 09=== | ||

* Input : Sommarioni images | * Input : Sommarioni images | ||

* Output : Patch of pixels containing text with coordinate of the patch in the Sommarioni | * Output : Patch of pixels containing text with coordinate of the patch in the Sommarioni | ||

* Step 1 : Segment hand written text regions in Sommarioni images | * Step 1 : Segment hand written text regions in Sommarioni images ✅ | ||

* Step 2 : Extraction of the patches | * Step 2 : Extraction of the patches ✅ | ||

===Week 10=== | ===Week 10=== | ||

* Input : transcription (Excel File), tuples (page id, patch) extracted in week 9 | * Input : transcription (Excel File), tuples (page id, patch) extracted in week 9 | ||

* Output : line in the transcription -> page id | * Output : line in the transcription -> page id | ||

* Step 1 : HTR recognition in the patch and cleaning : (patch, text) | * Step 1 : HTR recognition in the patch and cleaning : (patch, text) ✅ | ||

* Step 2 : Find matching pair between recognized text and transcription | * Step 2 : Find matching pair between recognized text and transcription ✅ | ||

* Step 3 : New excel file with the new page id column | * Step 3 : New excel file with the new page id column ✅ | ||

===Week 11=== | ===Week 11=== | ||

* Step 1 : Apply the pipeline validated on week 10 on the whole dataset | * Step 1 : Apply the pipeline validated on week 10 on the whole dataset ✅ | ||

* Step 2 : Evaluate the quality and, based on that, decide on the tasks for the next weeks | * Step 2 : Evaluate the quality and, based on that, decide on the tasks for the next weeks ✅ | ||

===Week 12=== | ===Week 12=== | ||

* Depending on the quality of the matching | * Depending on the quality of the matching | ||

** Improve image segmentation | ** Improve image segmentation ✅ | ||

** More precise matching (excel cell) -> (page id, patch) in order to have the precise box of each written text | ** More precise matching (excel cell) -> (page id, patch) in order to have the precise box of each written text ❌ | ||

** Use an IIIF image viewer to show the results of the project in a more polished way | ** Use an IIIF image viewer to show the results of the project in a more polished way ❌ | ||

==Methodology == | ==Methodology == | ||

The project can be summarized as a 4 steps pipeline as shown on figure | The project can be summarized as a 4-step pipeline, as shown in the figure in the introduction. | ||

=== Step 1 - Text detection=== | === Step 1 - Text detection=== | ||

The first part of the project | The first part of the project consists of extracting the areas of the page image that contain text. This step is required because our handwriting recognition model takes a single line of text as input. To extract the patches, we first identify the location of the text on the page. This information is stored in the standard image metadata format called [http://www.primaresearch.org/tools/PAGELibraries PAGE]. The location of each text line is stored as the baseline running under the text. To extract the baselines we use the [https://github.com/lquirosd/P2PaLA P2PaLA] repository, a [[:wikipedia:Document layout analysis|Document layout analysis]] tool based on pix2pix and CycleGAN. More information on the network can be found in the [https://arxiv.org/pdf/1703.10593.pdf Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks] paper. Since there is no ground truth for the locations of baselines in the Sommarioni dataset, we used the pre-trained model provided with [https://github.com/lquirosd/P2PaLA P2PaLA]. We do not have a metric to measure the quality of this step of our pipeline, so we conducted a qualitative visual inspection of the results. The output is remarkably good given that the model was not trained on data from this dataset. A few false positives were found, but no false negatives. False positives are not an issue, since the next steps in our pipeline remove most of them. | ||

[[File:Sommarioni_Line_detection.png|thumb|center|Fig. 1: Page with output of the baseline detection]] | [[File:Sommarioni_Line_detection.png|thumb|center|Fig. 1: Page with output of the baseline detection]] | ||

===Step 2 - Patch extraction=== | ===Step 2 - Patch extraction=== | ||

Once the baselines | Once the baselines are identified, we need to extract the areas that contain text (cropping the image of the page to contain only a single line of text). No preexisting tool satisfied our quality requirements. Therefore, we created [https://github.com/Jmion/PatchExtractor PatchExtractor], a [[:wikipedia:Python (programming language)|python]] program that extracts the patches using as input the source image and the baseline file produced by [https://github.com/lquirosd/P2PaLA/tree/master P2PaLA]. [https://github.com/Jmion/PatchExtractor PatchExtractor] uses image processing to extract the columns from the source image. The information about the column in which a patch is located is crucial: after [[:wikipedia:Handwriting recognition|HTR]], we can use it to match the columns of the spreadsheet with their equivalent columns in the picture. | ||

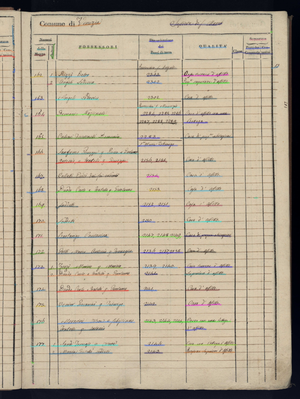

[[File:Censo-stabile_Sommarioni-napoleonici_reg-1_0015_013.jpg|thumb|center|Fig. 2: Original source image of the page]] | |||

[[ | A [[:wikipedia:Hough transform|Hough transform]] was considered and tested as a potential method to extract the vertical lines, but too many issues arose when filtering the lines it produced, and no useful information could be obtained from this approach. | ||

Using a simple pixel mask is sufficient and avoids issues with geometric distortions caused by the page shape while being scanned. | |||

The advanced column extractor can produce a clean binary mask of the location of the columns, as seen in Fig. 3. To achieve this, several steps are applied, including: | The advanced column extractor can produce a clean binary mask of the location of the columns, as seen in Fig. 3. To achieve this, several steps are applied, including: | ||

* Applying a Gabor | * Applying a [[:wikipedia:Gabor filter|Gabor filter]] (linear feature extractor) | ||

* Using a contrast threshold | * Using a contrast threshold (Binary and [https://en.wikipedia.org/wiki/Otsu%27s_method Otsu]) | ||

* Connected component size filtering (removing small connected components) | * [[:wikipedia:Gaussian blur|Gaussian blur]] | ||

* | * [[:wikipedia:Affine transformation|Affine transformation]] | ||

* [[:wikipedia:Bitwise operation|Bitwise operations]] | |||

* [[:wikipedia:Component (graph theory)|Connected component]] size filtering (removing small connected components) | |||

* [[:wikipedia:Cropping (image)|Cropping]] | |||

These steps transform the original picture into a column mask of the region of interest (ROI). The ROI is the part of the page that contains the text without the margins. | These steps transform the original picture into a column mask of the region of interest (ROI). The ROI is the part of the page that contains the text without the margins. We extract the ROI from the coordinates produced with [https://github.com/lquirosd/P2PaLA/tree/master P2PaLA]. | ||
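Two of the steps listed above, Otsu thresholding and connected component size filtering, can be illustrated with a minimal pure-Python sketch. It is not the PatchExtractor implementation: the function names, the nested-list image representation, the 4-connectivity and the assumption that ink is darker than paper are all choices made for this illustration.

```python
from collections import deque


def otsu_threshold(pixels):
    """Return the grey level (0-255) that maximises the between-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(256):
        w_bg += hist[t]
        if w_bg == 0:
            continue
        w_fg = total - w_bg
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var > best_var:
            best_var, best_t = var, t
    return best_t


def remove_small_components(mask, min_size):
    """Keep only the 4-connected foreground components with at least min_size pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            component, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:  # breadth-first flood fill of one component
                cy, cx = queue.popleft()
                component.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) >= min_size:
                for cy, cx in component:
                    out[cy][cx] = 1
    return out


def column_mask(gray, min_size):
    """Binarise a greyscale image (dark ink = foreground) and drop small specks."""
    t = otsu_threshold([p for row in gray for p in row])
    binary = [[1 if p <= t else 0 for p in row] for row in gray]
    return remove_small_components(binary, min_size)
```

On a real scan the same idea would normally be applied with an image processing library after the Gabor filtering and blurring steps; the sketch only shows how thresholding followed by size filtering removes isolated specks while keeping long ruling lines.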

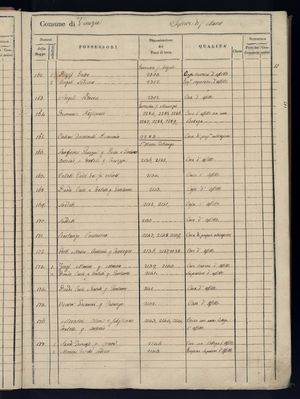

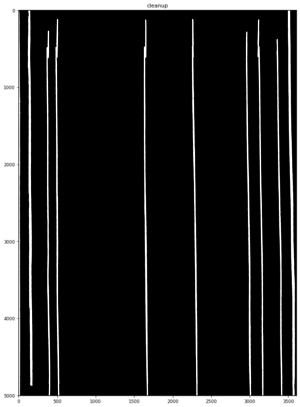

[[File:Sommarioni_Column_mask.png|thumb|center|Fig. 3: Binary column mask used by PatchExtractor to identify the column numbers]] | [[File:Sommarioni_Column_mask.png|thumb|center|Fig. 3: Binary column mask used by PatchExtractor to identify the column numbers]] | ||


[[File:Sommarioni_patch_1.png|thumb|center|Fig. 4: Neighboring patches that P2PaLA detected as a single baseline]] | [[File:Sommarioni_patch_1.png|thumb|center|Fig. 4: Neighboring patches that P2PaLA detected as a single baseline]] | ||

With the knowledge of the location of the columns PatchExtractor fixes these issues and produces 2 distinct images as can be seen in Fig. 5 | With the knowledge of the location of the columns [https://github.com/Jmion/PatchExtractor PatchExtractor] fixes these issues and produces 2 distinct images as can be seen in Fig. 5 | ||

[[File:Sommarioni_Patch_1.2.png]] | [[File:Sommarioni_Patch_1.2.png]] | ||

[[File:Sommarioni_Patch_1.1.png]] | [[File:Sommarioni_Patch_1.1.png]] | ||

The output of | [https://github.com/Jmion/PatchExtractor PatchExtractor] produces one patch per column and row containing text, and identifies the column from which each patch was extracted. | ||
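The splitting of a detected baseline at column boundaries can be sketched as follows. This is a simplified illustration rather than the PatchExtractor code: the function name and the representation of columns as a sorted list of `(left, right)` pixel ranges are assumptions.

```python
def split_patch_by_columns(x_start, x_end, column_bounds):
    """Split the horizontal range [x_start, x_end) at the column boundaries.

    column_bounds is a sorted list of (left, right) pixel ranges; the index of
    a range in the list is used as the column number.
    Returns a list of (column_index, left, right) tuples, one per overlapped column.
    """
    pieces = []
    for index, (left, right) in enumerate(column_bounds):
        lo, hi = max(x_start, left), min(x_end, right)
        if hi > lo:  # the baseline overlaps this column
            pieces.append((index, lo, hi))
    return pieces
```

A baseline that spans two neighbouring cells, as in Fig. 4, is thus returned as two pieces, each tagged with its own column index.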


A possible area of research that would probably increase the quality of the column extraction and patch numbering would be to train a [[:wikipedia:Convolutional neural network|Convolutional neural network]] to identify the ROI. Using the location of the text patches is better than nothing, but a known issue with this approach is that some scans contain part of the text of the neighboring page. This enlarges the ROI to include both pages, and a line is usually detected at the seam between the two. Creating a training dataset for this task should be quite cheap in human labor, since the segmentation mask is very simple. | |||

The resulting output can then begin the pre-processing for the [[:wikipedia:Handwriting recognition|HTR]]. | |||

===Step 3 - Handwritten Text Recognition=== | ===Step 3 - Handwritten Text Recognition=== | ||

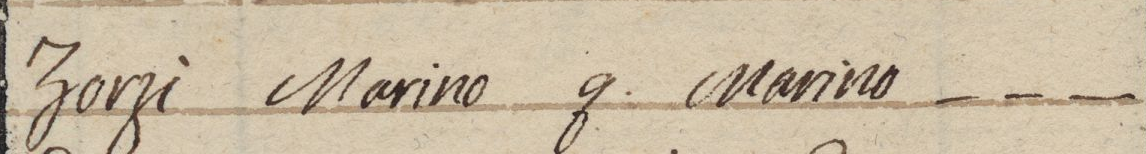

The third step of the pipeline is the handwritten text recognizer(HTR | The third step of the pipeline is the handwritten text recognizer (HTR) system. It takes as input the patches extracted during step 2 and produces the text. | ||

The HTR is a deep learning model. The deep learning architecture chosen is the PyLaia architecture that is a mix of Convolutional and 1D recurrent layers based on "Are Multidimensional Recurrent Layers Really Necessary for Handwritten Text Recognition?" (2017) by J. Puigcerver. | The HTR is a deep learning model. The deep learning architecture chosen is the PyLaia architecture that is a mix of Convolutional and 1D recurrent layers based on [http://www.jpuigcerver.net/pubs/jpuigcerver_icdar2017.pdf "Are Multidimensional Recurrent Layers Really Necessary for Handwritten Text Recognition?"] (2017) by J. Puigcerver [3]. | ||

We did not change the PyLaia architecture but we took it as a framework to train a new model. We first pre-train a model on the IAM dataset. The IAM dataset is a gold standard dataset for HTR. | We did not change the PyLaia architecture but we took it as a framework to train a new model. We first pre-train a model on the IAM dataset[5]. The [https://fki.tic.heia-fr.ch/databases/iam-handwriting-database IAM dataset] is a gold standard dataset for HTR. | ||

We then used this model as the starting point to train on a specific dataset of handwritten text from Venice. This process is called transfer learning. | We then used this model as the starting point to train on a specific dataset of handwritten text from Venice. This process is called transfer learning. | ||

To evaluate our models we used two standard metrics for HTR systems: the Word Error Rate (WER) and the Character Error Rate (CER). | To evaluate our models we used two standard metrics for HTR systems: the [[:wikipedia:Word error rate|Word Error Rate]] (WER) and the Character Error Rate (CER). | ||
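Both metrics are commonly defined as the Levenshtein (edit) distance between the reference and the recognized text, divided by the length of the reference, counted in characters for CER and in words for WER. The sketch below follows that common definition; it is not taken from the project code, and it assumes a non-empty reference.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (strings or lists of words)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (x != y)))  # substitution or match
        prev = cur
    return prev[-1]


def cer(reference, hypothesis):
    """Character Error Rate: character edits divided by reference length."""
    return levenshtein(reference, hypothesis) / len(reference)


def wer(reference, hypothesis):
    """Word Error Rate: word edits divided by the number of reference words."""
    ref_words, hyp_words = reference.split(), hypothesis.split()
    return levenshtein(ref_words, hyp_words) / len(ref_words)
```

Because a single wrong character makes a whole word wrong, the WER of a system is typically higher than its CER.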

We applied some pre-processing before feeding the patches to the HTR model. We used the pre-processing techniques that are used in PyLaia. The first thing is to enhance the image. | We applied some pre-processing before feeding the patches to the HTR model, using the same pre-processing techniques as PyLaia. The first step is to enhance the image by applying traditional computer vision techniques that remove the background and clean the patches. We then resize the patches so that they all have the same height. | ||

Then we can feed the patches in our system. These pre-processing steps are used before training but also on the | Then we can feed the patches into our system. These pre-processing steps are used before training, and also on the patches extracted in step 2 when we apply the HTR. | ||

We save the output in csv files containing 2 columns : the name of the patch and the text recognized by the HTR. | We save the output in csv files containing 2 columns : the name of the patch and the text recognized by the HTR. | ||

[[File:Sommarioni_step3-pipeline.png|thumb|center|Fig. 5: The patch extracted in step 2 is first preprocessed and then the HTR recognizes the text in the patch. This patch is taken from the 2nd page of the first register (reg-1_0004_002).]] | |||

===Step 4 - Sommarioni matching=== | ===Step 4 - Sommarioni matching=== | ||


The fourth step consists of the actual matching. It takes as input the text recognized by the HTR with some data about the patch itself coming from the step 2 and the excel file containing the transcription. The goal of this step is to establish a mapping between the images and the excel file. | The fourth step consists of the actual matching. It takes as input the text recognized by the HTR with some data about the patch itself coming from the step 2 and the excel file containing the transcription. The goal of this step is to establish a mapping between the images and the excel file. | ||

The main challenges comes from the fact that there are errors and inconsistencies from every previous steps that we need to correct or mitigate. This is during the implementation of this part that we really realized some special cases that are in the | ====Challenges==== | ||

The main challenge comes from the fact that every previous step introduces errors and inconsistencies that we need to correct or mitigate. It was during the implementation of this part that we became aware of some special cases in the Sommarioni. The detected special cases are the following : | |||

* Blank tables : tables that | * [https://images.center/iiif_sommarioni/reg1-0001/full/full/0/default.jpg Coverta], [https://images.center/iiif_sommarioni/reg1-0002/full/full/0/default.jpg anteporta] and incipit | ||

* | * [https://images.center/iiif_sommarioni/reg1-0200/full/full/0/default.jpg Blank tables] : tables that contain no data. | ||

* [https://images.center/iiif_sommarioni/reg1-0199/full/full/0/default.jpg Special tables] | |||

The errors and inconsistencies from the previous steps are the following : | The errors and inconsistencies from the previous steps are the following : | ||

* Columns numbers errors (from step 2) | |||

* HTR errors (from step 3) | * HTR errors (from step 3) | ||

* | * Patches do not contain a full cell of the table | ||

During this step we also realized some facts in the transcription excel file : | |||

* The Excel file is not completely sequential | |||

* Register 7 is not transcribed in the Excel File | |||

====Data wrangling and cleaning==== | |||

To handle these challenges we cleaned the data and corrected or normalized the data that could be fixed based on a statistical analysis. Coverta, anteporta and incipit are simple to handle: the naming of the Sommarioni images allows us to simply remove them. For the blank tables, the special tables and the column number errors, we take a statistical approach. The full exploratory data analysis is available on [https://github.com/basbeu/MatchingSommarioni/blob/master/Patch_statistics.ipynb github]. | |||

From step 2 we have the column number of each patch, and from step 3 we have a string of characters for each patch. From this information we build a feature vector representing each page. The dimension of the feature vector is equal to the number of columns detected in the page, and each component is the average length of the strings found in that column on that page. Since valid tables are very similar to one another, and tables are a way to structure information, patterns do appear in this representation of pages. | |||
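The construction of the page feature vector can be sketched as below. The function name and the `(column_index, text)` input format are assumptions made for this illustration; columns without any patch are given an average length of 0.

```python
from collections import defaultdict


def page_feature_vector(patches, n_columns):
    """Average recognized-string length per column for one page.

    patches is an iterable of (column_index, text) pairs for the page;
    n_columns is the number of columns detected on that page.
    """
    lengths = defaultdict(list)
    for column, text in patches:
        lengths[column].append(len(text))
    return [
        sum(lengths[c]) / len(lengths[c]) if lengths[c] else 0.0
        for c in range(n_columns)
    ]
```

For example, a page with two short numbers in column 0 and one owner name in column 2 yields a vector with small values in the first component and a large value in the third.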

To analyse the feature vectors, we visualized them using PCA. The PCA plot of each register shows one large cluster. We tried several clustering algorithms and two projection techniques (PCA and t-SNE), but we could only clearly define two clusters: a cluster of valid pages without column errors, and a cluster of outliers spread across the space. Figure 6 illustrates this for register 01. | |||

The blank pages and special pages clearly have a different distribution of average string length per column. Column errors are trickier to detect. They are often a complete shift of the columns. A shift to the right occurs when an extra column is detected on the left of the image, for example because something else is visible on the left of the photograph. A shift to the left is the opposite: the first detected column is directly the first column of data. | |||

We want to fix the shifted pages so that only the blank and special pages are removed. We first group the feature vectors into two clusters using the KMeans algorithm. We then iterate over the vectors in the cluster marked as outliers, shifting each vector to the left and to the right. If a shifted vector falls in the valid cluster, we correct the column number of every patch of the page accordingly. | |||
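The shift test can be sketched as follows, assuming the two KMeans centroids have already been computed (for instance with a clustering library) and that all feature vectors have been padded to the same length. The function names and the zero padding used when shifting are assumptions of this sketch, not details taken from the project code.

```python
import math


def nearest(vector, centroids):
    """Index of the centroid closest to vector (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda k: math.dist(vector, centroids[k]))


def shift(vector, offset):
    """Move every component by offset positions (+1 right, -1 left), padding with zeros."""
    shifted = [0.0] * len(vector)
    for i, value in enumerate(vector):
        j = i + offset
        if 0 <= j < len(vector):
            shifted[j] = value
    return shifted


def column_correction(vector, centroids, valid_cluster):
    """Return the offset (0, -1 or +1) that puts the page in the valid cluster.

    Returns None when neither the original nor a shifted vector is assigned to
    the valid cluster, in which case the page is treated as blank or special.
    """
    for offset in (0, -1, 1):
        if nearest(shift(vector, offset), centroids) == valid_cluster:
            return offset
    return None
```

The returned offset would then be added to the column number of every patch of the page; pages for which `None` is returned are dropped.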

We wanted to fix the shifted pages because the column number is very meaningful information for the actual matching. With this information we can also clean the columns further. For example, we know that the columns Numero della mappa and Subalterno should in principle contain a number. | |||

[[File:Report_kmeans_pca_register_1.png |thumb|center|Fig. 6: Each point represents the feature vector of a page from the first register.]] | |||

====Matching==== | |||

The matching is rule-based: we iterate over the lines of the Excel file and, at each step, look for the patches that contain the information of the current line. In the Sommarioni, the columns Numero della mappa and Subalterno form a key. With perfectly aligned columns, perfect HTR and all patches containing text extracted, the matching would only be a lookup of the patch containing the numero della mappa and the subalterno. | |||

We cannot make these assumptions. We therefore based our matching on two columns: Possessore and Numero della mappa. Numero della mappa has the advantage of being part of the key. We search for an exactly equal numero della mappa, which means that HTR errors can have a big impact on this result. Possessore values are not unique at all, but they have the advantage of being rather long strings to compare, so we do not require equality and can thereby reduce the impact of HTR errors. The search on Possessore is implemented in an information retrieval fashion: we retrieve the patch that has the smallest distance to the query. The distance used is the Levenshtein distance. It allows us to mitigate HTR errors, but there is still no guarantee that a patch contains all the content of the Possessore cell in the Excel file. So we look for the minimal distance over substrings of the query, with the minimal substring length as a tunable parameter. The example below illustrates the need for flexibility when comparing a Possessore Excel cell with the text in patches : | |||

{|class="wikitable" | |||

! style="text-align:center;"|Possessore cell content | |||

! Text in a corresponding patch | |||

! Levenshtein distance | |||

! Minimal Levenshtein distance on substring (minimum substring size 10) | |||

|- | |||

|TODARINI Luigi q. Giuseppe, e Ferdinando q. Teodoro, MOLIN Marco q. Gerolamo, RETI Giuseppe, e DOMENICHINI Fratelli q. Domenico | |||

|Todarini Luigi, per Giuseppel, e | |||

|97 | |||

|8 | |||

|- | |||

|} | |||
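The substring variant of the distance described above can be sketched as a brute-force search over all substrings of the query of at least a given length. This is an illustration under assumptions, not the project's implementation: the function names are hypothetical, and a production version would likely use a faster approximate-matching routine.

```python
def levenshtein(a, b):
    """Edit distance between two strings."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def min_substring_distance(query, text, min_len=10):
    """Smallest Levenshtein distance between text and any substring of query
    that has at least min_len characters (the whole query is always tried)."""
    best = levenshtein(query, text)
    for start in range(len(query)):
        for end in range(start + min_len, len(query) + 1):
            best = min(best, levenshtein(query[start:end], text))
    return best
```

With this measure, a patch that contains only the first line of a long Possessore cell is no longer penalized for the missing remainder of the cell.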

Our final matching strategy is the following : | |||

* Look for the numero della mappa | |||

** We only search in the patches belonging to the corresponding column | |||

** We search for equality | |||

* If there is only one result, the matching is done | |||

* Else we look for the Possessore | |||
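The strategy listed above can be sketched as a single function. Several details are assumptions made for this illustration: patches are represented as dictionaries with `page`, `column` and `text` keys, "only one result" is read as "only one candidate page", the column indices are placeholders, and when several pages share the numero della mappa the Possessore search is restricted to those pages. The `distance` argument can be any string distance, such as the substring Levenshtein distance described earlier.

```python
def match_row(numero, possessore, patches, distance, numero_col=0, possessore_col=5):
    """Return (page, matching_type) for one line of the transcription.

    numero_col and possessore_col are hypothetical column indices.
    """
    # 1. Exact search on the numero della mappa, restricted to its column.
    hits = [p for p in patches
            if p["column"] == numero_col and p["text"].strip() == str(numero)]
    pages = {p["page"] for p in hits}
    if len(pages) == 1:
        return next(iter(pages)), "NUMERO_DELLA_MAPPA"

    # 2. Otherwise fall back on the Possessore, using the smallest distance.
    candidates = [p for p in patches if p["column"] == possessore_col]
    if pages:
        candidates = [p for p in candidates if p["page"] in pages]
        matching_type = "NUMERO_DELLA_MAPPA & POSSESSORE"
    else:
        matching_type = "POSSESSORE"
    if not candidates:
        return None, None
    best = min(candidates, key=lambda p: distance(possessore, p["text"]))
    return best["page"], matching_type
```

Returning the matching type alongside the page keeps the result interpretable, since a reader can see which rule produced each match.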

We also tried to exploit the order of the Excel file. As it is ordered by numero della mappa, we could in principle know the order in which the pages have to be matched. There is one big exception: part of register 05 has numero della mappa values larger than those of register 6-bis. | |||

Using the order is not easy, as it is not trivial to know when there is a change of page; moreover, some pages are dropped during the cleaning and will never be matched. We tried to exploit the ordering by defining a window in which the matching page should be, making a guess within the window if nothing is matched. This degraded the results. The window functionality is still in the code, but we use it with a window of 0 in our final version. | |||

====Output==== | |||

The output of step 4 is the final output of the project. We output a CSV/Excel file containing the original data and additional columns containing the matching and information about the matching. These can help the reader of the file understand why a line is matched to a given page or patch. They could also be useful to someone who wants to explore the problem further, or serve as a new starting point to enhance the results. | |||

To make our work more readable and accessible, we also added two columns containing IIIF links to the matched patch and line. This makes it possible to quickly check a particular matching in its context. | |||

We add the following columns : | |||

{|class="wikitable" | |||

! style="text-align:center;"|Column | |||

! Description | |||

|- | |||

|matching_numero_della_mappa | |||

|List of candidate pages in which we found a patch by a "numero della mappa" search | |||

|- | |||

|- | |||

|matching_possessore | |||

|String of characters from the HTR output that had the minimal distance with the possessore | |||

|- | |||

|- | |||

|matching_type | |||

|Contains either POSSESSORE, NUMERO_DELLA_MAPPA or NUMERO_DELLA_MAPPA & POSSESSORE, indicating how the matching was established | |||

|- | |||

|- | |||

|matching_page | |||

|Name of the page that is matched | |||

|- | |||

|- | |||

|matching_patch | |||

|Link IIIF to the patch that is matched | |||

|- | |||

|- | |||

|matching_line | |||

|Link IIIF to the line containing the matching patch | |||

|- | |||

|} | |||
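The two IIIF link columns follow the IIIF Image API URL pattern `{base}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}`, where the region is given as `x,y,w,h` in pixels. The sketch below rebuilds the two example links given in the introduction; the function names are hypothetical, and the page width of 6000 pixels is simply read off the example line link.

```python
def iiif_region_url(base, identifier, x, y, w, h):
    """IIIF Image API URL for a rectangular region at full size, no rotation."""
    return f"{base}/{identifier}/{x},{y},{w},{h}/full/0/default.jpg"


def iiif_line_url(base, identifier, y, h, page_width):
    """URL for the full-width strip of the page that contains the patch."""
    return iiif_region_url(base, identifier, 0, y, page_width, h)


BASE = "https://images.center/iiif_sommarioni"
patch_link = iiif_region_url(BASE, "reg1-0003", 704, 2578, 1119, 132)
line_link = iiif_line_url(BASE, "reg1-0003", 2578, 132, 6000)
```

Keeping the vertical position and height of the patch while widening the region to the whole page is what lets the reader see the matched cell in the context of its table row.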

==Quality assessment== | ==Quality assessment== | ||

===HTR evaluation=== | |||

The HTR was first pre-trained on IAM and then trained on a specific Venetian dataset. In both cases we used the standard approach of separating the dataset into training, validation and testing sets. | |||

To assess the quality on the testing set, we used two metrics: the Character Error Rate (CER) and the Word Error Rate (WER). These metrics are standard for assessing HTR systems. | |||

For IAM we used a standard split used in research papers, which can be downloaded [https://www.prhlt.upv.es/~jpuigcerver/iam_splits.tar.gz here]. We used the puigcerver split. | |||

For the Venetian dataset, we split the data uniformly at random into the three sets with the following ratios: 10% validation set, 20% test set and 70% training set. | |||
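A uniform random 70/20/10 split can be sketched as below. The function name and the fixed seed are assumptions of this illustration; integer arithmetic is used so that the three subsets always cover the dataset exactly.

```python
import random


def split_dataset(items, seed=0):
    """Shuffle items and split them into 70% training, 20% test, 10% validation."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded for reproducibility
    n_train = len(items) * 7 // 10
    n_test = len(items) * 2 // 10
    train = items[:n_train]
    test = items[n_train:n_train + n_test]
    validation = items[n_train + n_test:]  # the remainder, roughly 10%
    return train, test, validation
```

Fixing the seed makes the split reproducible, which matters when results from different training runs are compared on the same test set.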

{|class="wikitable" | |||

! style="text-align:center;"|Step - dataset | |||

! CER | |||

! WER | |||

|- | |||

|Pre-training - IAM dataset | |||

|0.0716 | |||

|0.228 | |||

|- | |||

|- | |||

|Training - Venetian Hand Written Text | |||

|0.05097 | |||

|0.192 | |||

|- | |||

|} | |||

These results are satisfying. The results on IAM are very close to, and even slightly better than, the ones given as reference by PyLaia. The CER and WER decrease further after the transfer learning, as intended. | |||

===Matching evaluation=== | |||

The matching is the final output of the project. Therefore, its quality is an indicator of the quality of the whole project. | |||

To assess the matching, we manually built a test set. The test set consists of a copy of the Excel file with an extra column called gt_page. For a selection of lines, this column contains the image filename of the Sommarioni page in which the line is found. | |||

We selected 10 images per register, taking care to select images at random from the beginning, the middle and the end of each register. We then manually inspected the images, found the corresponding Excel lines and annotated them. The test set contains 1009 lines, which corresponds to 4.3% of the 23428 lines of the Excel file. | |||

The accuracy on the test set is 0.57. | |||

It means that the pipeline makes sense but can be improved. The results are not very impressive: we think that a system with such an accuracy is not very usable. Nevertheless, it can be handy for finding patches/lines via the IIIF links, and at least the output is very interpretable. | |||

If a user reading the transcribed file wants to go back to the source, we think that it is very fast via the IIIF links to see the matching. In the case of a matching success, the user will save a lot of manual searching time. In case of failure, the user will not lose too much time as we think that he will realize very quickly that the matching is not correct. | |||

===Limitations=== | |||

We already mentioned some limitations of the system. Let us summarize them : | |||

* Patch extractor : we do not have a metric to assess the quality of this module, but we know that the number of columns detected is not always the same. | |||

* Usability : the output does not have a very high accuracy, which limits its usefulness, but the project is at least a good first step towards solving this matching task. | |||

* Running time : The whole pipeline takes several hours to run completely. We think that this is good enough for a task that is not intended to be run several times. It was clearly not the focus of the project. Many tasks could be parallelized to decrease the overall running time. | |||

=== Idea of improvements === | |||

The project was limited in time, so we did not explore every possibility and every idea we had. Possible improvements are listed below : | |||

* Add a cropping step on the Sommarioni image to extract only the table; this could be done by a CNN-like deep neural network if one builds a supervised dataset. | |||

* Build a ground truth for the patch extractor to better evaluate its performance and, based on that, improve it or choose another technique. | |||

* Use a supervised method to better classify the feature vectors during the data wrangling phase of step 4. | |||

* After the matching, add a step to smooth the results based on the sequence. | |||

==License== | |||

All contributions that we have made to the project are released under an [https://opensource.org/licenses/MIT MIT license]. | |||

==Links== | ==Links== | ||

* [1][https://github.com/Jmion/VeniceTimeMachineSommarioniHTR Github link to pipeline] | |||

* [2][https://arxiv.org/pdf/1703.10593.pdf Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks] | |||

* [3][https://ieeexplore.ieee.org/document/8269951 Are Multidimensional Recurrent Layers Really Necessary for Handwritten Text Recognition?] | |||

* [4][http://www.archiviodistatovenezia.it/siasve/cgi-bin/pagina.pl?Chiave=7053&ChiaveAlbero=7059&ApriNodo=0&Tipo=fondo Archivio di Stato di Venezia] | |||

* [5][https://fki.tic.heia-fr.ch/databases/iam-handwriting-database IAM dataset] | |||

Latest revision as of 13:19, 14 December 2020

Introduction

The cadasters are essential documents that describe the land in a reliable and precise manner. The shape of the lots, buildings, bridges, and layout of other urban works are meticulously documented and annotated with a unique number. The "Sommarioni" are the key to reading the map, as they contain the parcel number (reference to the map), the owner's name, the toponym, the intended use of the land or building, and the surface area of the land. The Napoleonic cadaster is an official record of the entire French Empire. On the 15th of September 1807, a new law ordered that the French Empire be meticulously measured and documented. This allowed the government to tax its subjects for the land that they owned. More historical information can be found in this article from Le Monde.

These documents now allow historians to research the evolution of the city. The cadaster that this project will focus on is the Venetian cadaster created between 1807 and 1816 as a result of the law previously mentioned.

These documents are a very useful source of information for historians studying these periods. To help them in this task, large numbers of historical documents have been digitized within the scope of the Venice Time Machine project. The "Sommarioni" have been the focus of previous projects that manually transcribed the content of these records. Sadly, during this process the link to the page that contained the information was lost. A previous attempt to reestablish this link was made in the Mapping Cadasters, DH101 project. The motivation of this project was to improve on the results they obtained by trying a new approach.

The goal of this project is to create a pipeline that reestablishes the mapping between the digital scans of the "Sommarioni" and their digital transcription, stored as an Excel spreadsheet. To build this pipeline, we combine a deep neural network for handwriting recognition, a CycleGAN to extract patches, classical image processing techniques, and unsupervised machine learning.

The pipeline adds links to the original source document to the transcription. If our matching procedure used the Possessore field to identify the match, a link to that patch is added to the Excel document. This is useful for checking whether the automatic matching is correct. A second image link is added to show the entire line in the source document.

Planning

| Week | Task |

|---|---|

| 09 | Segment patch of text in Sommarioni : (page id, patch) |

| 10 | Mapping transcription (excel file) -> page id (proof of concept) |

| 11 | Mapping transcription (excel file) -> page id (on the whole dataset) |

| 12 | Depending on the quality of the results : improve the mapping of page id, more precise matching, web viewer |

| 13 | Final results, final evaluation & final report writing |

| 14 | Final project presentation |

Week 09

- Input : Sommarioni images

- Output : Patches of pixels containing text, with the coordinates of each patch in the Sommarioni

- Step 1 : Segment hand written text regions in Sommarioni images ✅

- Step 2 : Extraction of the patches ✅

Week 10

- Input : transcription (Excel File), tuples (page id, patch) extracted in week 9

- Output : line in the transcription -> page id

- Step 1 : HTR recognition in the patch and cleaning : (patch, text) ✅

- Step 2 : Find matching pair between recognized text and transcription ✅

- Step 3 : New excel file with the new page id column ✅

Week 11

- Step 1 : Apply the pipeline validated on week 10 on the whole dataset ✅

- Step 2 : Evaluate the quality and, based on that, decide on the tasks for the next weeks ✅

Week 12

- Depending on the quality of the matching

- Improve image segmentation ✅

- More precise matching (excel cell) -> (page id, patch) in order to have the precise box of each written text ❌

- Use an IIIF image viewer to present the results of the project in a more polished way ❌

Methodology

The project can be summarized as a four-step pipeline, as shown in the figure in the introduction.

Step 1 - Text detection

The first part of the project consists of extracting the areas of the page image that contain text. This step is required because our handwriting recognition model takes a single line of text as input. To extract the patches, we first identify the location of the text on the page. This information is stored in the standard image metadata format called PAGE, where the location of a line of text is recorded as the baseline under it. To extract the baselines we use the P2PaLA repository, a document layout analysis tool based on pix2pix and CycleGAN. More information on the network being used can be found in the Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks paper [2]. Since there is no ground truth for the locations of baselines in the Sommarioni dataset, we used the pre-trained model provided with P2PaLA. We do not have a metric to measure the quality of this step of our pipeline, so we conducted a qualitative visual inspection of the results. The output is remarkably good given that the model was not trained on data from this dataset. A few false positives were found, but no false negatives. False positives are not an issue, since the next steps in our pipeline remove most of them.

Step 2 - Patch extraction

Once the baselines are identified, we need to extract the areas that contain text (cropping the image of the page so that each crop contains a single line of text). No preexisting tool satisfied our quality requirements, so we created PatchExtractor. PatchExtractor is a Python program that extracts the patches, taking as input the source image and the baseline file produced by P2PaLA. PatchExtractor uses some advanced image processing to extract the columns from the source image. Knowing which column a patch is located in is crucial: after HTR, we can use this location information to match the columns of the spreadsheet with their equivalent columns in the picture.

A Hough transform was considered and tested as a potential method to extract the vertical lines, but too many issues appeared when filtering the lines it produced. No useful information could be obtained from this approach.

Using a simple pixel mask is sufficient and avoids issues with geometric distortions caused by the page shape while being scanned.

The advanced column extractor can produce a clean binary mask of the location of the columns, as seen in Fig. 3. To achieve this, several steps are applied, including:

- Applying a Gabor filter (linear feature extractor)

- Using a contrast threshold (Binary and Otsu)

- Gaussian blur

- Affine transformation

- Bitwise operations

- Connected component size filtering (removing small connected components)

- Cropping

These steps transform the original picture into a column mask of the region of interest (ROI). The ROI is the part of the page that contains the text without the margins. We extract the ROI from the coordinates produced by P2PaLA.

This mask is then used to identify which column a baseline is in.

There is an extra challenge that we can address with the knowledge of the location of the columns. Sometimes the baseline detection produces a single baseline for 2 columns that are close to each other, as can be seen in Fig. 4.

With the knowledge of the location of the columns, PatchExtractor fixes these issues and produces 2 distinct images, as can be seen in Fig. 5.
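The column assignment and the splitting of a baseline that spans two columns can be illustrated with a minimal sketch. It assumes the column mask has already been reduced to a sorted list of x coordinates of the vertical separators; the function names and this representation are hypothetical and are not taken from PatchExtractor.

```python
from bisect import bisect_right


def column_of(x, boundaries):
    """Return the 0-based index of the column that contains coordinate x.

    boundaries is a sorted list of x coordinates of the vertical
    separators, including the left and right edges of the table.
    """
    return bisect_right(boundaries, x) - 1


def split_baseline(x_start, x_end, boundaries):
    """Cut the horizontal extent of a baseline at every separator it crosses.

    Returns a list of (column_index, segment_start, segment_end).
    """
    segments = []
    start = x_start
    for b in boundaries:
        if start < b < x_end:
            segments.append((column_of(start, boundaries), start, b))
            start = b
    segments.append((column_of(start, boundaries), start, x_end))
    return segments
```

With separators at `[0, 100, 250, 400]`, a baseline from x=120 to x=380 is cut at x=250 into one segment for column 1 and one for column 2. A real implementation would probably also ignore very small overlaps with a neighboring column.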

PatchExtractor produces one patch per column and row containing text, and identifies the column from which each patch was extracted.

A possible area of research that would probably increase the quality of the column extraction and patch numbering would be to train a convolutional neural network to identify the ROI. Using the location of the text patches is better than nothing, but a known issue with this approach is that some scans contain part of the text of the neighboring page. This enlarges the ROI to include both pages, and a line is usually detected at the seam between the two. This is an unwanted side effect of this approach. Creating a training dataset for this task should be quite cheap in human labor, since the segmentation mask is very simple.

The resulting output can then begin the pre-processing for the HTR.

Step 3 - Handwritten Text Recognition

The third step of the pipeline is the handwritten text recognizer (HTR) system. It takes as input the patches extracted during step 2 and produces the text.

The HTR is a deep learning model. The deep learning architecture chosen is the PyLaia architecture that is a mix of Convolutional and 1D recurrent layers based on "Are Multidimensional Recurrent Layers Really Necessary for Handwritten Text Recognition?" (2017) by J. Puigcerver [3].

We did not change the PyLaia architecture but used it as a framework to train a new model. We first pre-trained a model on the IAM dataset [5], which is a gold standard dataset for HTR. We then took this model as a starting point to train on a specific dataset made of handwritten text from Venice. This process is called transfer learning. To evaluate our models we used two standard metrics for HTR systems: the Word Error Rate (WER) and the Character Error Rate (CER).
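For reference, both metrics are edit distances normalized by the length of the reference transcription, counted in characters for CER and in words for WER. The sketch below computes them for a single line; it is an illustration and not the evaluation code used in the project, and evaluation tools usually aggregate the edit counts over the whole test set instead of averaging per line.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (strings or lists of words)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        curr = [i]
        for j, y in enumerate(b, 1):
            curr.append(min(prev[j] + 1,              # deletion
                            curr[j - 1] + 1,          # insertion
                            prev[j - 1] + (x != y)))  # substitution
        prev = curr
    return prev[-1]


def cer(reference, hypothesis):
    """Character Error Rate of one recognized line."""
    return levenshtein(reference, hypothesis) / len(reference)


def wer(reference, hypothesis):
    """Word Error Rate of one recognized line (whitespace tokenization)."""
    ref_words = reference.split()
    return levenshtein(ref_words, hypothesis.split()) / len(ref_words)
```

For example, recognizing "cosa con corte" instead of "casa con corte" gives one wrong character out of 14 and one wrong word out of 3.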

We applied some pre-processing before feeding the patches to the HTR model, using the pre-processing techniques that are used in PyLaia. The first step is to enhance the image by applying traditional computer vision techniques that remove the background and clean the patches. Then we resize the patches so that they all have the same height.

Then we can feed the patches to our system. These pre-processing steps are applied before training, and also to the patches extracted in step 2 when we use the HTR. We save the output in CSV files containing 2 columns : the name of the patch and the text recognized by the HTR.

Step 4 - Sommarioni matching

The fourth step consists of the actual matching. It takes as input the text recognized by the HTR, some data about each patch coming from step 2, and the Excel file containing the transcription. The goal of this step is to establish a mapping between the images and the Excel file.

Challenges

The main challenges come from the fact that every previous step introduces errors and inconsistencies that we need to correct or mitigate. It is during the implementation of this part that we discovered some special cases in the Sommarioni. The detected special cases are the following :

- Coverta, anteporta and incipit

- Blank tables : tables that contain no data.

- Special tables

The errors and inconsistencies from the previous steps are the following :

- Column number errors (from step 2)

- HTR errors (from step 3)

- Patches do not contain a full cell of the table

During this step we also noticed the following about the transcription Excel file :

- The Excel file is not completely sequential

- Register 7 is not transcribed in the Excel File

Data wrangling and cleaning

To handle those challenges we cleaned the data and corrected or normalized the data that could be fixed based on a statistical analysis. Coverta, anteporta and incipit are simple to handle: the naming of the Sommarioni images allows us to simply remove them and not take them into account. For the blank tables, the special tables and the column number errors, we take a statistical approach. The full exploratory data analysis is available on GitHub [1].

From step 2, we have the column number of each patch, and from step 3 we have a string of characters for each patch. From this information we build a feature vector representing each page. The dimension of the feature vector is equal to the number of columns detected in the page. Each component is equal to the average length of the strings of characters in that column of the page. Since the valid tables are very similar, and tables are a way to structure information, we can indeed see patterns in this representation of pages.
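The construction of these page feature vectors can be sketched as follows. The input format (a list of `(page_id, column_index, text)` tuples) and the function name are assumptions made for the illustration, and the sketch takes the number of columns to be the highest detected column index plus one, with 0.0 for columns that contain no patch.

```python
from collections import defaultdict


def page_feature_vectors(patches):
    """Map each page to its vector of average text length per column.

    patches is an iterable of (page_id, column_index, text) tuples.
    """
    lengths = defaultdict(lambda: defaultdict(list))
    for page, col, text in patches:
        lengths[page][col].append(len(text))

    vectors = {}
    for page, cols in lengths.items():
        n_cols = max(cols) + 1
        vectors[page] = [
            sum(cols[c]) / len(cols[c]) if c in cols else 0.0
            for c in range(n_cols)
        ]
    return vectors
```

A page with two short numbers in column 0 and one owner name in column 2 yields a vector such as `[3.0, 0.0, 11.0]`.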

To analyse the feature vectors we visualized them using PCA. The PCA plot for each register shows one big cluster. We tried several clustering algorithms and two projection techniques (PCA and t-SNE), but we are only able to clearly define two clusters: the valid cluster, containing valid pages without column errors, and another cluster of outliers spread across the space. Figure 6 illustrates this with register 01.

The blank pages and special pages clearly have a different distribution of average string length per column. Column errors are a bit trickier to detect. They are often a complete shift of the columns. A shift to the right occurs when an extra column is detected on the left of the image, for example because something else is visible on the left of the photograph. A shift to the left is the opposite: it occurs when the first column detected is directly the first column of data.

We want to fix the shifted pages so that we then remove only the blank and special pages. We first group the feature vectors into two clusters using the KMeans algorithm. After this first step we iterate over the vectors that are in the cluster marked as outlier. We shift each vector to the left and to the right, and observe whether the shifted vector now falls in the valid cluster. If it does, we fix the column number for every patch of the page.
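The shift test can be sketched as below, assuming the two cluster centroids have already been obtained (for instance from KMeans) and that all vectors have the same length. The names, the zero padding and the nearest-centroid rule are choices made for this illustration, not a description of the project's code.

```python
import math


def shift(vector, offset):
    """Move every component by offset positions (positive = to the right).

    Components pushed outside the vector are dropped and the freed
    positions are filled with 0.0, so the length is unchanged.
    """
    n = len(vector)
    out = [0.0] * n
    for i, value in enumerate(vector):
        j = i + offset
        if 0 <= j < n:
            out[j] = value
    return out


def nearest(vector, centroids):
    """Name of the centroid closest to vector (Euclidean distance)."""
    return min(centroids, key=lambda name: math.dist(vector, centroids[name]))


def repair_shift(vector, centroids, valid="valid"):
    """Return the column offset that brings the page into the valid cluster.

    0 means the page is already valid, -1 or +1 is the correction to add
    to the column index of every patch, None means no shift helps.
    """
    if nearest(vector, centroids) == valid:
        return 0
    for offset in (-1, 1):
        if nearest(shift(vector, offset), centroids) == valid:
            return offset
    return None
```

Pages for which `repair_shift` returns `None` are the ones that remain in the outlier cluster and are dropped as blank or special pages.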

We wanted to fix the shifted pages because the column number is very meaningful information for the actual matching. With this information we can also clean the columns further. For example, we know that the columns Numero della mappa and Subalterno should, in principle, contain a number.

Matching

The matching is rule-based: we iterate over the Excel file and, at each step of the iteration, look for the patches that contain the information of the Excel line. In the Sommarioni, the columns Numero della mappa and Subalterno form a key. With perfectly aligned columns, perfect HTR and all patches containing text extracted, the matching would only be a lookup of the patch containing the Numero della mappa and the Subalterno. Given the errors described above, we cannot assume this. We therefore based our matching on two columns : Possessore and Numero della mappa. Numero della mappa has the advantage of being part of the key. We search for an equal Numero della mappa, which means that HTR errors can have a big impact on this result. Possessore values are not unique at all, but they have the advantage of being rather long strings to compare, so we do not look for equality and can in that way reduce the impact of the HTR errors. The search on Possessore is implemented in an Information Retrieval fashion: we retrieve the patch that has the smallest distance to the query. The distance used is the Levenshtein distance. It allows us to mitigate the HTR errors, but there is still a challenge: there is no guarantee that a patch contains all the content of the Possessore cell in the Excel file. So we look for the minimal distance over substrings of the query. The minimal length of the substring is a parameter to tune. The example below illustrates the need for flexibility when comparing a Possessore Excel cell with the text in patches :

| Possessore cell content | Text in a corresponding patch | Levenshtein distance | Minimal Levenshtein distance on substring (minimum substring size 10) |

|---|---|---|---|

| TODARINI Luigi q. Giuseppe, e Ferdinando q. Teodoro, MOLIN Marco q. Gerolamo, RETI Giuseppe, e DOMENICHINI Fratelli q. Domenico | Todarini Luigi, per Giuseppel, e | 97 | 8 |
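One possible reading of the "minimal Levenshtein distance on substring" is sketched below: the patch text is compared with every contiguous substring of the Possessore cell that is at least `min_len` characters long, and the smallest distance is kept. This is an interpretation written for illustration; the function name, the handling of short queries and the case-sensitive comparison are assumptions, so the sketch is not guaranteed to reproduce the values in the table.

```python
def min_substring_distance(query, patch_text, min_len=10):
    """Smallest Levenshtein distance between patch_text and any contiguous
    substring of query whose length is at least min_len.

    If query is shorter than min_len, the whole query is compared.
    """
    if not query:
        return len(patch_text)
    min_len = min(min_len, len(query))
    best = None
    for start in range(len(query) - min_len + 1):
        # One dynamic-programming pass per start position: after reading
        # i characters, prev[-1] is the distance between
        # query[start:start + i] and patch_text.
        prev = list(range(len(patch_text) + 1))
        for i, x in enumerate(query[start:], 1):
            curr = [i]
            for j, y in enumerate(patch_text, 1):
                curr.append(min(prev[j] + 1,
                                curr[j - 1] + 1,
                                prev[j - 1] + (x != y)))
            prev = curr
            if i >= min_len and (best is None or prev[-1] < best):
                best = prev[-1]
    return best
```

A patch that contains only the beginning of a long cell then gets a small distance, whereas the plain Levenshtein distance is dominated by the missing remainder of the cell. The cost grows quadratically with the length of the query, which is acceptable for cells of around a hundred characters.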

Our final matching strategy is the following :

- Look for the numero della mappa

- We only search in the patches belonging to the corresponding column

- We search for equality

- If there is only one result, the matching is done

- Else we look for the Possessore
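The strategy above can be sketched as a single function. The patch representation (dictionaries with `page`, `column` and `text` keys), the column indices and the rule used to set the matching type are assumptions made for this illustration; in particular, restricting the Possessore search to the candidate pages when several pages share the same number is only one plausible choice. The distance function is passed as a parameter so that any string distance can be plugged in.

```python
def match_row(numero, possessore, patches, distance,
              numero_col=0, possessore_col=2):
    """Return (page, matching_type) for one line of the transcription.

    patches is a list of dicts with keys "page", "column" and "text".
    distance is a function (query, text) -> number, smaller is closer.
    """
    # 1. Equality search on the "numero della mappa" column only.
    candidates = {
        p["page"] for p in patches
        if p["column"] == numero_col and p["text"].strip() == str(numero)
    }
    if len(candidates) == 1:
        return next(iter(candidates)), "NUMERO_DELLA_MAPPA"

    # 2. Otherwise fall back to the closest Possessore patch.
    pool = [
        p for p in patches
        if p["column"] == possessore_col
        and (not candidates or p["page"] in candidates)
    ]
    if not pool:
        return None, None
    best = min(pool, key=lambda p: distance(possessore, p["text"]))
    kind = "NUMERO_DELLA_MAPPA & POSSESSORE" if candidates else "POSSESSORE"
    return best["page"], kind
```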

We also tried to exploit the order of the Excel file. As it is ordered by Numero della mappa, we could in principle know the order in which the pages have to be matched. There is one big exception: part of register 05 has Numero della mappa values larger than those of register 6-bis. Using the order is not easy, since it is not trivial to know when there is a change of page; moreover, some pages are dropped during the cleaning and will never be matched. We tried to exploit the ordering by defining a window in which the matching page should be; if nothing is matched, we make a guess within the window. This degraded the results. The window functionality is still in the code, but we use it with a window of 0 in our final version.

Output

The output of step 4 is the final output of the project. We output a CSV/Excel file containing the original data and additional columns containing the matching and information about the matching. This can help the reader of the file understand why a line is matched to a given page or patch. It could also be useful to someone who wants to explore the problem further, or serve as a new starting point to improve the results.

In order to make our work more readable and accessible, we also added two columns containing IIIF links to the matched patch and line. This allows a particular match to be checked quickly in its context.

We add the following columns :

| Column | Description |

|---|---|

| matching_numero_della_mappa | List of candidate pages in which we found a patch by a "numero della mappa" search |

| matching_possessore | String of characters from the HTR output that had the minimal distance with the possessore |

| matching_type | Contains either POSSESSORE, NUMERO_DELLA_MAPPA or NUMERO_DELLA_MAPPA & POSSESSORE, indicating how the matching was established |

| matching_page | Name of the page that is matched |

| matching_patch | Link IIIF to the patch that is matched |

| matching_line | Link IIIF to the line containing the matching patch |
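As an illustration of what such a link looks like, the sketch below builds an IIIF Image API URL for a rectangular region of a page, following the `{identifier}/{region}/{size}/{rotation}/{quality}.{format}` pattern of the IIIF Image API 2.x (version 3 uses `max` instead of `full` for the size). The base URL and the image identifier are placeholders, not the server used by the project.

```python
from urllib.parse import quote


def iiif_region_url(base_url, identifier, x, y, width, height):
    """Build an IIIF Image API 2.x URL for the pixel region x,y,width,height."""
    region = f"{x},{y},{width},{height}"
    # The identifier must be URL-encoded, including any "/" it contains.
    encoded_id = quote(identifier, safe="")
    return f"{base_url.rstrip('/')}/{encoded_id}/{region}/full/0/default.jpg"


# Hypothetical server and identifier, for illustration only.
print(iiif_region_url("https://example.org/iiif", "reg01/page 12.jpg", 10, 20, 300, 40))
```

The patch link and the line link differ only in the region: the bounding box of the patch for the first, and a box spanning the full width of the table at the same height for the second.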

Quality assessment

HTR evaluation

The HTR was first pre-trained on IAM and then trained on a specific Venetian dataset. In both cases we used the standard approach of separating the dataset into training, validation and testing sets. To assess the quality on the testing set we used two metrics: the Character Error Rate (CER) and the Word Error Rate (WER). These metrics are standard for assessing HTR systems. For IAM we used the puigcerver split, a standard split used in research papers. For the Venetian dataset, we split the data uniformly at random into the three sets with the following ratios : 70% training set, 10% validation set and 20% test set.
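A uniform random 70/10/20 split can be sketched as follows. The function name and the fixed seed are assumptions made for reproducibility of the example; this is not the script used in the project.

```python
import random


def split_dataset(items, seed=0, train=0.7, val=0.1):
    """Shuffle items and split them into (train, validation, test) lists.

    The test set receives whatever remains after train and validation.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])
```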

| Step - dataset | CER | WER |

|---|---|---|

| Pre-training - IAM dataset | 0.0716 | 0.228 |

| Training - Venetian handwritten text | 0.05097 | 0.192 |

These results are satisfying. The results on IAM are very close to, and even slightly better than, the ones given as reference for PyLaia. The CER and WER are even lower after the transfer learning, as intended.

Matching evaluation

The matching is the final output of the project. Therefore, its quality is an indicator of the quality of the whole project. To assess the matching, we manually built a test set. The test set consists of a copy of the Excel file with an extra column called gt_page. For a selection of lines, this column contains the image filename of the Sommarioni page in which the line is found.

We selected 10 images per register, taking care to select images at random at the beginning, in the middle and at the end of the register. We then manually inspected the images, found the corresponding Excel lines and annotated them. The test set contains 1009 lines, which corresponds to 4.3 % of the 23428 lines of the Excel file.

The accuracy on the test set is 0.57. This means that the pipeline makes sense but can be improved. The results are not very impressive: we think that a system with such an accuracy is not very usable. Nevertheless, it can be handy for finding some patches or lines via the IIIF links, and the output is at least very interpretable. If a user reading the transcribed file wants to go back to the source, checking the match via the IIIF links is very fast. When the matching succeeds, the user saves a lot of manual searching time. When it fails, the user does not lose much time, as we think they will realize very quickly that the matching is not correct.
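The accuracy is the fraction of annotated lines whose matched page equals the ground-truth page. A minimal sketch of this computation is given below; the input format is an assumption, and so is the choice to count a line with no match as an error.

```python
def matching_accuracy(rows):
    """Accuracy over annotated lines.

    rows is an iterable of (gt_page, matching_page) pairs. Lines whose
    gt_page is None are not part of the test set and are skipped; a line
    with no match (matching_page is None) counts as an error.
    """
    annotated = [(gt, pred) for gt, pred in rows if gt is not None]
    if not annotated:
        raise ValueError("no annotated line in the input")
    return sum(gt == pred for gt, pred in annotated) / len(annotated)
```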

Limitations

We already mentioned some limitations of the system. Let us summarize them :

- Patch extractor : we do not have a metric to assess the quality of this module, but we know that the number of columns detected is not always the same.

- Usability : the accuracy of the output is not very high, which limits its usefulness, but the project is at least a good first step towards solving this matching task.

- Running time : the whole pipeline takes several hours to run completely. We think that this is good enough for a task that is not intended to be run several times. It was clearly not the focus of the project. Many tasks could be parallelized to decrease the overall running time.

Idea of improvements

The project was strictly limited in time, so we did not explore every possibility and every idea we had. The possible improvements are summarized below :

- Add a cropping step on the Sommarioni image to extract only the table. This could be done with a CNN-like deep neural network if one builds a supervised dataset.

- Build a ground truth for the patch extractor to better evaluate its performance and, based on that, possibly improve it or choose another technique.

- Use a supervised method to better classify the feature vectors during the data wrangling phase of step 4.

- After the matching add a step to smooth the results based on the sequence.

License

All contributions that we have made to the project are released under an MIT license.