Venice2020 Building Heights Detection: Difference between revisions

| (447 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

= Introduction = | = Introduction = | ||

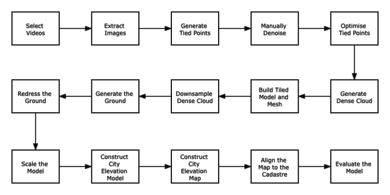

In this project, our main goal is to obtain the height information of buildings in Venice city. In order to achieve this goal, we construct a point cloud model of Venice from Google Earth and YouTube drone videos with the help of photogrammetry tools. Initially, we experimented on drone videos from YouTube. Since our goal is to detect the height of all the buildings in Venice, it is important for us to collect images of all the buildings in the city. However, in YouTube videos, we only found some landmark architecture whose height information is already available on the Internet. Hence, we switched the data scource to Google Earth images. With our current image source, we have successfully calculated the heights of Venice buildings including unknown buildings and famous ones. We also evaluated both subjective and objective quality of our City Elevation Map, which will be discussed in the following sections. The Venice 3d model along with height information can be used to create an virtual experience. Besides, the City Elevation map can be useful for urban planning and security purposes in the future. | |||

Venice, one of the most extraordinary cities in the world, was built on 118 islands in the middle of the Venetian Lagoon at the head of the Adriatic Sea in Northern Italy. The planning of the city, to be floating in a lagoon of water, reeds, and marshland always amazes | = Motivation = | ||

Venice, one of the most extraordinary cities in the world, was built on 118 islands in the middle of the Venetian Lagoon at the head of the Adriatic Sea in Northern Italy. The planning of the city, to be floating in a lagoon of water, reeds, and marshland always amazes travellers and architects. The beginning of the long and rich history of Venice was on March 25th 421 AD (At high noon). Initially, the city was made using mud and woods. The revolution of the city plan has changed over time since it was built. Today, "the floating city of Italy" is called "the sinking city". Over the past 100 years, the city has sunk 9 inches. According to environmentalists, because of global warming, the sea level will rise, which will eventually cover this beautiful city in upcoming years. To us engineers, it is important to keep track of the building height changes of Venice in order to take relevant actions in time to save the beautiful city. Since changes in natural disasters are sometimes unpredictable, to save the present beauty of Venice, we can recreate the Venice visiting experience virtually as a part of [https://en.wikipedia.org/wiki/Venice_Time_Machine Venice Time Machine]. This in the future will be relevant for travellers, historians, architects, and other enthusiasts in the world. Apart from the facts mentioned above, building height is one of the most important pieces of information to consider for urban planning, economic analysis and digital twin implementation. | |||

= Milestones = | |||

= | === Milestone 1 === | ||

* Get familiar with [https://github.com/openMVG/openMVG OpenMVG], [http://www.open3d.org/ Open3D], [https://pyntcloud.readthedocs.io/en/latest/ pyntcloud], [https://www.agisoft.com/ Agisoft Metashape], [https://www.blender.org/ Blender], [https://www.cloudcompare.org/main.html CloudCompare] and [https://www.qgis.org/en/site/ QGIS] | |||

* Collect high resolution venice drone videos on youtube and Google Earth images as supplementary materials | |||

=== Milestone 2 === | |||

* Align photos of Venice, generate sparse point clouds made up of only high-quality tie points and repeatedly optimize point cloud models by reconstruction uncertainty filtering, projection accuracy filtering and reprojection error filtering | |||

* Remove outliers automatically and manually, and build dense point clouds based on sparse cloud | |||

* Build Venice 3D model (mesh) and tiled model according to dense point cloud data | |||

=== Milestone 3 === | |||

* Generate ground plane with the largest support in the point cloud and redress the plane by translation and rotation | |||

* Construct City Elevation Model by a point-to-plane method and generate City Elevation Model based on z-coordinate after redressing | |||

=== Milestone 4 === | |||

* Align the City Elevation Map to the reference cadaster using QGIS's georeferencing and evaluate the subjective visual quality of the map | |||

* Evaluate the accuracy of height calculation of both Google Earth based Venice model and Youtube based Venice model | |||

= Methodology = | = Methodology = | ||

| Line 20: | Line 35: | ||

== Images Aquisition == | == Images Aquisition == | ||

[[File:a.png|thumb|upright=1.5|right|Figure 1: YouTube Video]] | [[File:a.png|x250px|thumb|upright=1.5|right|Figure 1: YouTube Video]] | ||

Point cloud denotes a 3D content representation which is commonly preferred due to its high efficiency and relatively low complexity in the acquisition, storage, and rendering of 3D models. In order to generate Venice dense point cloud models, sequential images from different angles in different locations need to be collected first based on the following two sources. | Point cloud denotes a 3D content representation which is commonly preferred due to its high efficiency and relatively low complexity in the acquisition, storage, and rendering of 3D models. In order to generate Venice dense point cloud models, sequential images from different angles in different locations need to be collected first based on the following two sources. | ||

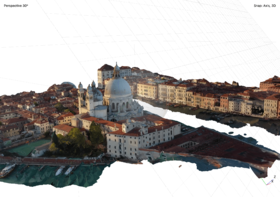

[[File:b.png|thumb|upright=1.5|right|Figure 2: Google Earth]] | [[File:b.png|x250px|thumb|upright=1.5|right|Figure 2: Google Earth]] | ||

=== Youtube Video Method === | === Youtube Video Method === | ||

| Line 32: | Line 47: | ||

=== Google Earth Method === | === Google Earth Method === | ||

In order to access the comprehensive area of Venice, we decided to use Google Earth as a complementary tool: we could | In order to access the comprehensive area of Venice, we decided to use Google Earth as a complementary tool: we could obtain images of every building we want at each angle. With Google Earth, we are able to have much more access to aerial images of Venice, not only will these images serve as images set to generate dense point cloud models in order to calculate building heights, but we can also use the overlapping part in different point cloud models to evaluate point cloud models in a cross-comparison way. | ||

In a nutshell, we apply a photogrammetric reconstruction tool to generate point cloud models. Photogrammetry is the art and science of extracting 3D information from photographs. As for the Youtube-based method, we will only target famous buildings which appear in different drone videos and select suitable images to form the specific building's image set. With regard to the Google Earth method, we will obtain images of the whole of Venice manually in a scientific trajectory of the photogrammetry route. | In a nutshell, we apply a photogrammetric reconstruction tool to generate point cloud models. Photogrammetry is the art and science of extracting 3D information from photographs. As for the Youtube-based method, we will only target famous buildings which appear in different drone videos and select suitable images to form the specific building's image set. With regard to the Google Earth method, we will obtain images of the whole of Venice manually in a scientific trajectory of the photogrammetry route. | ||

== Point Cloud | == Point Cloud Generation == | ||

Sparse cloud is generated after aligning the multi-angle photos, which can observe overlapping photo pairs. Then the dense point cloud uses the estimated camera positions generated during tie points based matching and the depth map for each camera, which can have greater density than LiDAR point clouds.[1] | |||

== Point Cloud Optimization == | |||

=== Outlier Removal === | === Outlier Removal === | ||

When collecting data from scanning devices, the resulting point cloud tends to contain noise and artifacts that one would like to remove. Apart from selecting points in Agisoft Metashape manually, we also use Open3D to deal with the noises and artefacts. In the Open3D, there are two ways to detect the outliers and remove them: '''statistical outlier removal''' and '''radius outlier removal'''. Radius outlier removal removes points that have few neighbors in a given sphere around them, and statistical outlier removal removes points that are further away from their neighbors compared to the average for the point cloud. We set the number of the neighbors to be 50, radius to be 0.01. Automatic outlier removal could remove around 75% percent of noise, but the effect isn't perfect, which is not as good as the manually gradual selection. Therefore, Automatic outlier removal could serve as a prepossessing step for the final de-noise, after automatic outlier removal, we still need to manually de-noise the point cloud. | |||

=== Error Reduction === | === Error Reduction === | ||

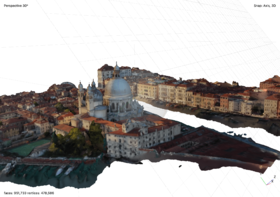

[[File:methodology.png|x195px|thumb|upright=1.5|right|Figure 3: YouTube Video]] | |||

'''The first phase''' of error reduction is to remove points that are the consequence of poor camera geometry. Reconstruction uncertainty is a numerical representation of the uncertainty in the position of a tie point based on the geometric relationship of the cameras from which that point was projected or triangulated, which is the ratio between the largest and smallest semi axis of the error ellipse created when triangulating 3D point coordinates between two images. Points constructed from image locations that are too close to each other have a low base-to-height ratio and high uncertainty. Removing these points does not affect the accuracy of optimization but reduces the noise in the point cloud and prevents points with large uncertainty in the z-axis from influencing other points with good geometry or being incorrectly removed in the reprojection error step. A reconstruction uncertainty of 10, which can be selected with the gradual selection filter and set to this value or level, is roughly equivalent to a good base-to-height ratio of 1:2.3 , whereas 15 is roughly equivalent to a marginally acceptable base-to-height ratio of 1:5.5. The reconstruction uncertainty selection procedures are repeated two times to reduce the reconstruction uncertainty toward 10 without having to delete more than 50 percent of the tie points each time.[1] | |||

'''The second phase''' of error reduction phase removes points based on projection accuracy. Projection accuracy is essentially a representation of the precision with which the tie point can be known given the size of the key points that intersect to create it. The key point size is the standard deviation value of the Gaussian blur at the scale at which the key point was found. The smaller the mean key point value, the smaller the standard deviation and the more precisely located the key point is in the image. The highest accuracy points are assigned to level 1 and are weighted based on the relative size of the pixels. A tie point assigned to level 2 has twice as much projection inaccuracy as level 1. The projection accuracy selection procedure is repeated without having to delete more than 50% of the points each time until level 2 is reached and there are few points selected. [1] | |||

'''The final phase''' of error reduction phase is removing points based on reprojection error. This is a measure of the error between a 3D point's original location on the image and the location of the point when it is projected back to each image used to estimate its position. Error-values are normalized based on key point size. High reprojection error usually indicates poor localization accuracy of the corresponding point projections at the point matching step. Reprojection error can be reduced by iteratively selecting and deleting points, then optimizing until the unweighted RMS reprojection error is between 0.13 and 0.18 which means that there is 95% confidence that the remaining points meet the estimated error.[1] | |||

== Plane Generation == | |||

[[File:ground.png|thumb|400px|upright=1.5|right|Figure 4: Plane Generation]] | |||

To calculate the height of the building, we have to find the ground of the Venice PointCloud model, which could be retrieved by a tool provided by Open3D. Open3D supports segmentation of geometric primitives from point clouds using "Random Sample Consensus", which is an iterative method to estimate parameters of a mathematical model from a set of observed data that contains outliers when outliers are to be accorded no influence on the values of the estimates. To find the ground plane with the largest support in the point cloud, we use segment_plane by specifying "distance_threshold". The support of a hypothesised plane is the set of all 3D points whose distance from that plane is at most some threshold (e.g. 10 cm). After each run of the RANSAC algorithm, the plane that had the largest support is chosen. All the points supporting that plane may be used to refine the plane equation, which is more robust than just using the neighbouring points by performing PCA or linear regression on the support set. | |||

== Plane Redressing == | |||

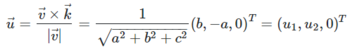

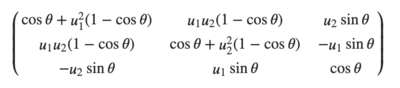

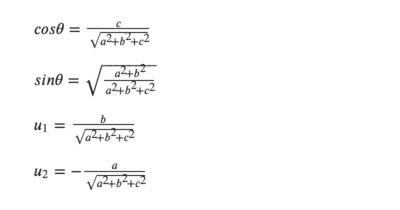

After selecting the most convenient plane and its supporting points, we define the plane equation in the 3D space. We want to build a PointCloud model where the ''Z'' coordinate of a point equals the height of this point in real world, so the next step is to redress the plane by projecting it on an ''X-O-Y'' space. For the projection calculation, we used the projection matrix mentioned below. | |||

Here, the plane equation is ''ax + by + cz + d = 0'' | |||

The redressing was performed in two steps, first translation and then rotation. | |||

For translation, the plane intersects ''Z''-axis at ''(0, 0, -d/c)''. So the translation is [[File:C.png|200px|center|]] which passes through the origin and vector ''v = ''(a, b, c)''<sup>T</sup>'' | |||

= | For the rotation, the angle between ''v and k = ''(0, 0, 1)''<sup>T</sup>''' is given by: | ||

[[File:D.png|200px|center|]] | |||

The axis of rotation has to be orthogonal to v and k. so its versor is: | |||

[[File:E.png|350px|center|]] | |||

The rotation is represented by the matrix: | |||

[[File:planeredress.png|400px|center]] | |||

Please note that: | |||

[[File:u1u2.png|400px|center|]] | |||

== Height Detection == | |||

=== Point-to-Plane Based Height Calculation and City Elevation Model Construction === | |||

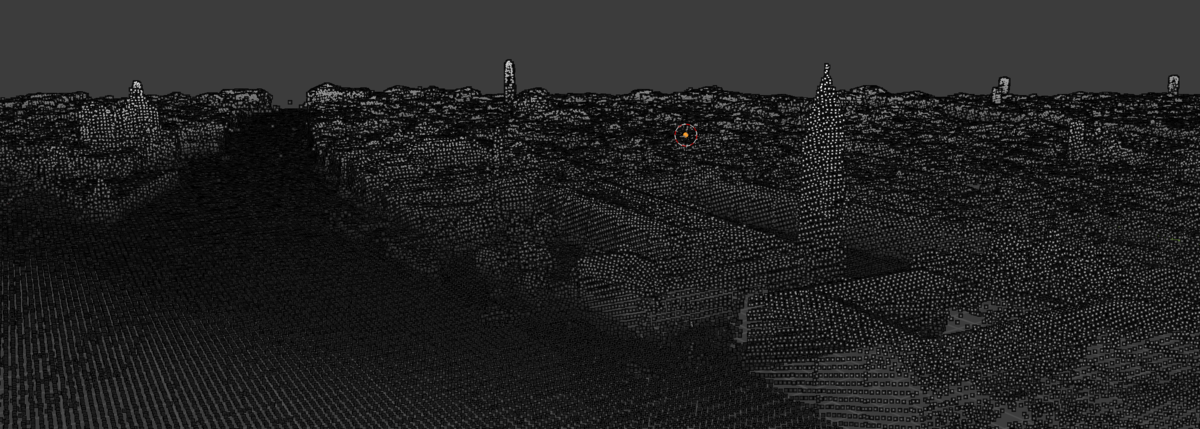

Once the plane equation is obtained in the previous step, we could start calculating the height of buildings. In the first step, we calculate the relative height of the buildings based on the formula of point-to-plane distance. The coordinate information of each point could be accessed by the 'points' attribute of the ‘PointCloud’ object with the help of 'Open3D'. Since we already have the plane equation, using this formula, we could obtain the distances between each point and the plane and save them in a (n, 1) array. | |||

For the | To visualise the height of the building, the first method is to change the colors of the points in the PointCloud model. For each point in the ‘PointCloud’, it has a ‘Colors' attribute. By calling this attribute, an (n, 3) array of value range [0, 1], containing RGB colors information of the points will be returned. Therefore, by normalising the ‘distances array’ values to the range of [0,1] and expanding the 'distances array' to three dimensions by replication, we get a (n, 3) ‘height array' in the form of (n, 3) 'colors array’. | ||

Basically, we transform the height information of each point to its 'colors' information, expressing the height by its color, higher points have lighter colors and vice versa. This model provides us with an intuitive sense of the building heights, which is more qualitative than quantitative. | |||

[[File:venice_blacknwhite.png|thumb|1200px|upright=1.5|center|Figure 5: City Elevation Model]] | |||

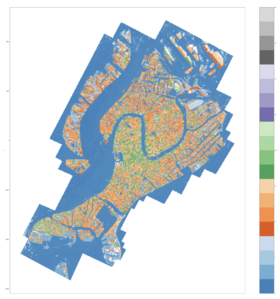

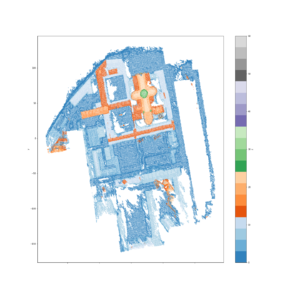

=== Coordinate Based Height Calculation and City Elevation Map Construction === | |||

To show the building heights more quantitatively, we decided to construct a City Elevation Map, which could be achieved by plotting the height information of points in a 2D map. However, there is a prerequisite: we should get the actual height information of every point. After implementing "Plane Redressing", we assume that the z-coordinate can be approximated as the height. But before directly using it, we still need to scale the model so that the height of buildings in the model matches the actual height of buildings. For instance, we know the actual height of a reference point in the real world, and we could also obtain the height of this reference point in the PointCloud model, therefore, we could obtain the scale factor in this PointCloud model. For all the other buildings in the model, the actual height equals the height in the PointCloud model multiplying the scale factor. | |||

To put it into practice, we need to set a reference point in the model, "Campanile di San Marco" is the best choice. The height of the highest building in Venice, "Campanile di San Marco", is 98.6 metres. In this step, the built-in function "Scale" from Open3D is adopted, and we finally obtained a 1:1 Venice PointCloud model where the z-coordinate of a point equals the actual height of this point in the real world. | |||

{|class="wikitable" style="text-align:right; font-family:Arial, Helvetica, sans-serif !important;;" | |||

[[File: | |- | ||

|[[File:Height_tab20c.png|280px|center|thumb|upright=1.5|Figure 6: Venice]] | |||

|[[File:Youtube1_height_map.png|300px|center|thumb|upright=1.5|Figure 7: San Marco]] | |||

|[[File:Youtube2_height_map.png|300px|center|thumb|upright=1.5|Figure 8: San Giorgio Maggiore]] | |||

|[[File:Youtube3_height_map.png|240px|center|thumb|upright=1.5|Figure 9: Santa Maria della Salute]] | |||

|} | |||

== Cadaster Alignment == | |||

[[File:alignment.png|400px|right|thumb|upright=1.5|Alignment|Figure 10: Alignment]] | |||

In order to map our City Elevation Map to the cadaster map, we use QGIS for georeferencing[https://www.sciencedirect.com/topics/social-sciences/georeferencing], which is the process of assigning locations to geographical objects within a geographic frame of reference. QGIS enables us to manually mark ground control points in both maps: Firstly, we select a representative location from the city elevation map, then we select the same location in the reference cadastre map. We set around ten pairs of ground control points to align the two maps, and the Venice zone EPSG 3004 should be chosen as the coordinate reference system when it comes to the transformation setting. The blue layer is the cadaster map while the red layer is our city elevation map. The georeferencing result is acceptable, where our height map matches the cadaster mostly accurately without distortion. | |||

= Quality Assessment = | |||

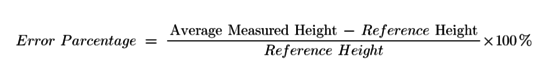

For each building, the average building height was obtained using Interactive point selection method of Open3D. To evaluate the precision of our model, we compared our average measured height with the actual building height. | |||

[[File:Error_rate.PNG|550px|center]] | |||

Then we take the absolute value of each building's error rate to compute the mean error. | |||

For each model, | |||

[[File:Mean.PNG|180px|center]] where n is the total number of buildings in the model | |||

== Objective Assessment of Google-Earth-Based Venice Model == | |||

The mean error of the building height measurement in the Google Earth based Venice Model is '''3.3%''' according to landmarks and other buildings below. | |||

In the | [[File:venice_whole.png|1200px|center|thumb|upright=1.5|Figure 11: The Whole Venice]] | ||

=== Assessment of Landmarks === | |||

In order to obtain the height information of below specific buildings in the model, we use function 'pick_points(pcd)' in Open3D to selct the vertex of building. Then we can get coordinate of the vertex and the z-coordinate represents the corresponding building's height. | |||

{| class="wikitable" style="text-align:center;" | |||

|- style="font-weight:bold; vertical-align:middle; background-color:#EAECF0;" | |||

! colspan="5" | | |||

|- style="font-weight:bold; vertical-align:middle; background-color:#EAECF0;" | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | Name of Buildings | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | Average Measured Height | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | Reference Height | |||

| Error | |||

| Pictures | |||

|- style="background-color:#F8F9FA;" | |||

| style="vertical-align:middle; font-family:Arial, Helvetica, sans-serif !important;;" | Basilica di San Marco | |||

| style="vertical-align:middle; font-family:Arial, Helvetica, sans-serif !important;;" | 44m | |||

| style="font-family:Arial, Helvetica, sans-serif !important;; color:#36B;" | 43m | |||

| style="vertical-align:middle;" | 2.32% | |||

| style="vertical-align:middle;" | [[File:BasilicadiSanMarco.png|200px|center]] | |||

|- style="background-color:#F8F9FA;" | |||

| style="vertical-align:middle; font-family:Arial, Helvetica, sans-serif !important;;" | Campanile di San Marco | |||

| style="vertical-align:middle; font-family:Arial, Helvetica, sans-serif !important;;" | 99m | |||

| style="font-family:Arial, Helvetica, sans-serif !important;; color:#36B;" | 98.6m | |||

| style="vertical-align:middle;" | 0.40% | |||

| style="vertical-align:middle;" | [[File:CampanilediSanMarco.png|200px|center]] | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | Chiesa di San Giorgio Maggiore | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | 42m | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | NA | |||

| NA | |||

| rowspan="2" | [[File:ChiesadiSanGiorgioMaggiore.png|200px|center]] | |||

|- style="background-color:#F8F9FA;" | |||

| style="vertical-align:middle; font-family:Arial, Helvetica, sans-serif !important;;" | Campanile di San Giorgio Maggiore | |||

| style="vertical-align:middle; font-family:Arial, Helvetica, sans-serif !important;;" | 72m | |||

| style="font-family:Arial, Helvetica, sans-serif !important;; color:#36B;" | 63m | |||

| style="vertical-align:middle;" | 12.5% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | Basilica di Santa Maria della Salute | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | 64m | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | NA | |||

| NA | |||

| rowspan="2" | [[File:BasilicadiSantaMariadellaSalute.png|200px|center]] | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | Campanile di Santa Maria della Salute | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | 49m | |||

| style="font-family:Arial, Helvetica, sans-serif !important;;" | NA | |||

| NA | |||

|- style="background-color:#F8F9FA;" | |||

| style="vertical-align:middle; font-family:Arial, Helvetica, sans-serif !important;;" | Basilica dei Santi Giovanni e Paolo | |||

| style="vertical-align:middle; font-family:Arial, Helvetica, sans-serif !important;;" | 54m | |||

| style="font-family:Arial, Helvetica, sans-serif !important;; color:#36B;" | 55.4m | |||

| style="vertical-align:middle;" | 2.52% | |||

| style="vertical-align:middle;" | [[File:BasilicadeiSantiGiovanniePaolo.png|200px|center]] | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Campanile di Chiesa di San Geremia | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 50m | |||

| style="background-color:#F8F9FA; color:#36B;" | 50m | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 0 | |||

| [[File:CampanilediChiesadiSanGeremia.png|200px|center]] | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Campanile dei Gesuiti | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 43m | |||

| style="background-color:#F8F9FA; color:#36B;" | 40m | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 7.50% | |||

| [[File:CampaniledeiGesuiti.png|200px|center]] | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Campanile San Francesco della Vigna | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 76m | |||

| style="background-color:#F8F9FA; color:#36B;" | 69m | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 10.14% | |||

| [[File:CampanileSanFrancescodellaVigna.png|200px|center]] | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Chiesa del Santissimo Redentore | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 47m | |||

| style="background-color:#F8F9FA; color:#36B;" | 45m | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4.44% | |||

| [[File:ChiesadelSantissimoRedentore.png|200px|center]] | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Hilton Molino Stucky Venice | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 45m | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | NA | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | NA | |||

| [[File:HiltonMolinoStuckyVenice.png|200px|center]] | |||

|} | |||

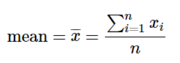

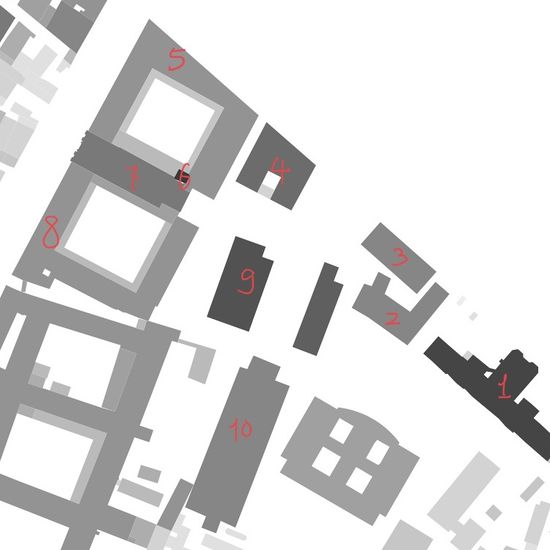

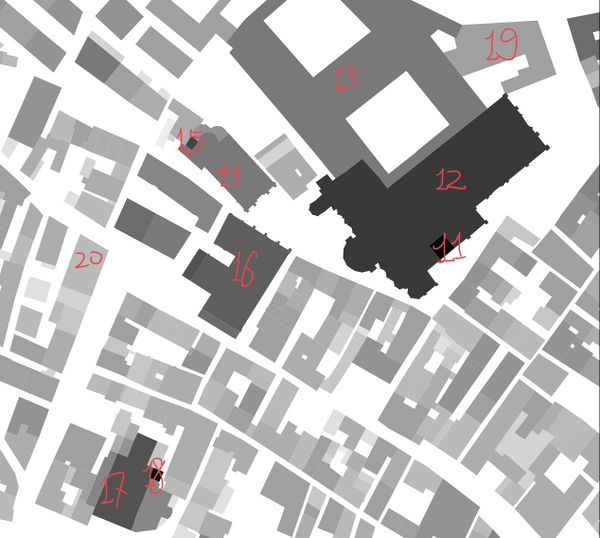

=== Assessment of other Buildings === | |||

== | {| class="wikitable" style="text-align:center;" | ||

|- style="text-align:left;" | |||

! colspan="4" | [[File:Assess2.jpg|550px|center|Figure 14: Buildings near Basilica dei Santi Giovanni e Paolo]] | |||

! colspan="4" | [[File:Assess3.jpg|600px|center|Figure 15: Buildings near Basilica dei Frari]] | |||

|- style="font-weight:bold; vertical-align:middle; background-color:#EAECF0;" | |||

| colspan="4" | Buildings near Basilica dei Santi Giovanni e Paolo | |||

| colspan="4" | Buildings near Basilica dei Frari | |||

|- style="font-weight:bold; vertical-align:middle; background-color:#EAECF0;" | |||

| Index | |||

| Average Measure Height | |||

| Reference Height | |||

| Error | |||

| Index | |||

| Average Measure Height | |||

| Reference Height | |||

| Error | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 1 | |||

| 28.25m | |||

| 28.1m | |||

| 0.53% | |||

| 11 | |||

| 63m | |||

| 63.07m | |||

| 0.11% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 2 | |||

| 19.5m | |||

| 19.44m | |||

| 0.30% | |||

| 12 | |||

| 31m | |||

| 30.94m | |||

| 0.19% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 3 | |||

| 18.5m | |||

| 18.77m | |||

| 1.43% | |||

| 13 | |||

| 20m | |||

| 20.8m | |||

| 3.84% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 4 | |||

| 23m | |||

| 22.59m | |||

| 1.81% | |||

| 14 | |||

| 20m | |||

| 21.28m | |||

| 6.01% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 5 | |||

| 17m | |||

| 16.95m | |||

| 0.29% | |||

| 15 | |||

| 26m | |||

| 28.22m | |||

| 7.86% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 6 | |||

| 31m | |||

| 30.83m | |||

| 0.55% | |||

| 16 | |||

| 24m | |||

| 24.92m | |||

| 3.69% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 7 | |||

| 20.5m | |||

| 21.02m | |||

| 2.47% | |||

| 17 | |||

| 26m | |||

| 26.4m | |||

| 1.51% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 8 | |||

| 17m | |||

| 17.24m | |||

| 1.39% | |||

| 18 | |||

| 46m | |||

| 46.58m | |||

| 1.24% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 9 | |||

| 27.5m | |||

| 26.31m | |||

| 4.52% | |||

| 19 | |||

| 15m | |||

| 15.87m | |||

| 5.48% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 10 | |||

| 19.5m | |||

| 19.41m | |||

| 0.46% | |||

| 20 | |||

| 10m | |||

| 10.56m | |||

| 5.30% | |||

|} | |||

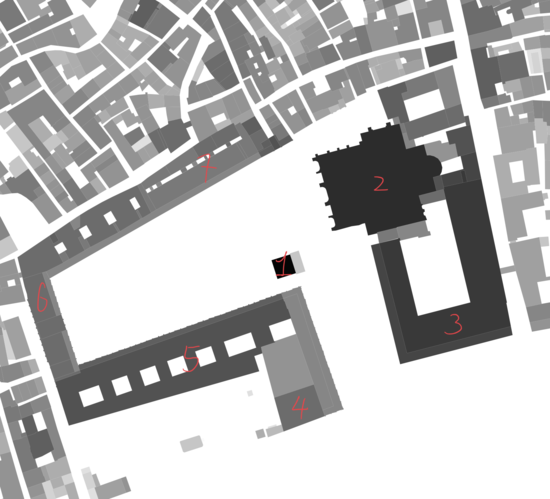

== Objective Assessment of Youtube-Video-Based Venice Model == | |||

{| class="wikitable" style="text-align:center;" | |||

|- | |||

! colspan="4" | [[File:Assess4.png|550px|center|Figure 14: Buildings near Basilica Cattedrale Patriarcale di San Marco]] | |||

! colspan="4" | [[File:Assess5.png|600px|center|Figure 15: Buildings near Basilica di Santa Maria della Salute]] | |||

|- style="font-weight:bold;"vertical-align:middle; font-size:16px; background-color:#EAECF0;" | |||

| colspan="4" | Buildings near Basilica Cattedrale Patriarcale di San Marco | |||

| colspan="4" | Buildings near Basilica di Santa Maria della Salute | |||

|- style="font-weight:bold;"vertical-align:middle; font-size:16px; background-color:#F8F9FA;" | |||

| Index | |||

| Average Measure Height | |||

| Reference Height | |||

| style="font-weight:bold;" | Error | |||

| Index | |||

| Average Measure Height | |||

| Reference Height | |||

| Error | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 1 | |||

| 99m | |||

| 98.6m | |||

| 0.40% | |||

| 8 | |||

| 21m | |||

| 21.76m | |||

| 3.49% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 2 | |||

| 44m | |||

| 43m | |||

| 2.32% | |||

| 9 | |||

| 19m | |||

| 20.12m | |||

| 5.56% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 3 | |||

| 30m | |||

| 30.59m | |||

| 1.92% | |||

| 10 | |||

| 11m | |||

| 11.15m | |||

| 1.34% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 4 | |||

| 24.33m | |||

| 23.21m | |||

| 4.82% | |||

| 11 | |||

| 22m | |||

| 23.21m | |||

| 5.21% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 5 | |||

| 26m | |||

| 26.35m | |||

| 1.32% | |||

| 12 | |||

| 11m | |||

| 12.07m | |||

| 8.86% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 6 | |||

| 21m | |||

| 22.21m | |||

| 5.44% | |||

| 13 | |||

| 14m | |||

| 14.18m | |||

| 1.26% | |||

|- style="vertical-align:middle; background-color:#F8F9FA;" | |||

| 7 | |||

| 21m | |||

| 21.53m | |||

| 2.46% | |||

| 14 | |||

| 16m | |||

| 16.98m | |||

| 5.77% | |||

|} | |||

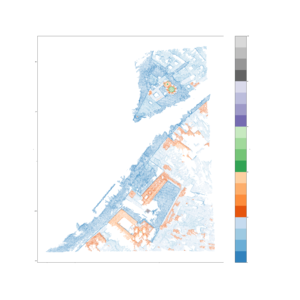

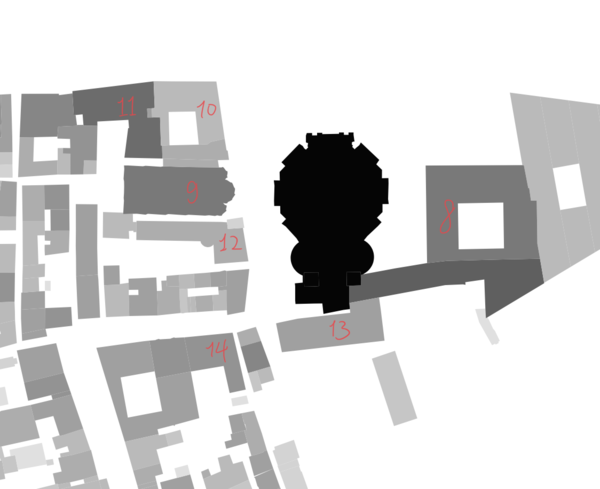

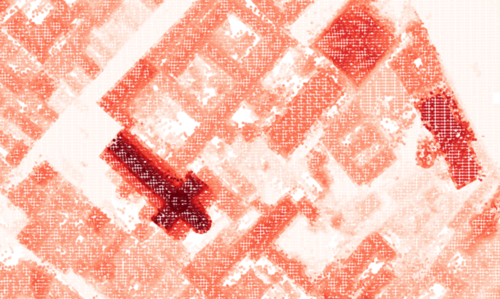

== | == Subjective Assessment of Google-Earth-Based Venice Model == | ||

During the subjective assessment, We gather ten volunteers to give their subjective scores on our part of Digital Elevation map to evaluate the visual quality of the map. Here we use Double Stimulus Impairment Scale (DSIS) which is a type of double stimulus subjective experiment in which pairs of images are shown next to each other to a group of people for the rating. One stimulus is always the reference image, while the other is the image that needs to be evaluated. The participants are asked to rate the images according to a five-levels grading scale according to the impairment that they are able to perceive between the two images (5-Imperceptible, 4-Perceptible but not annoying, 3-Slightly annoying, 2-Annoying, 1-Very annoying). We will compute the Differential Mean Opinion Score (DMOS) for the below two months. The DMOS score can be computed as: | |||

[[File:DMOS.png|150px|center|]] | |||

where N is the number of participants and DV<sub>ij</sub> is the differential score by participant i for the stimulus j. The score of the reference maps are always set to be 5.The DMOS score of the images are: | |||

[[File:DV.png|150px|center|]] | |||

{| class="wikitable" style="text-align:center;" | |||

|- style="font-weight:bold;" | |||

! colspan="2" style="vertical-align:middle; background-color:#EAECF0;" | DMOS | |||

! rowspan="13" style="vertical-align:middle; background-color:#EAECF0;" | [[File:Red_1.png|500px|center|thumb|upright=1.5|Figure 16: Details of the City Elevation Map (First Image)]] | |||

! rowspan="13" style="font-weight:normal; text-align:left;" | [[File:Red_2.png|500px|center|thumb|upright=1.5|Figure 17: Details of the Given Cadaster (First Reference)]] | |||

|- style="font-weight:bold;" | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Participant | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | First Image | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 1 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 2 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 3 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 6 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 7 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 8 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 9 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 10 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Average | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4.4 | |||

|} | |||

{| class="wikitable" style="text-align:center;" | |||

|- style="font-weight:bold; vertical-align:middle; background-color:#EAECF0;" | |||

! colspan="2" | DMOS | |||

! rowspan="13" | [[File:R3.png|500px|center|thumb|upright=1.5|Figure 18: Details of the City Elevation Map (Second Image)]] | |||

! rowspan="13" | [[File:R4.png|500px|center|thumb|upright=1.5|Figure 19: Details of the Given Cadaster (Second Reference)]] | |||

|- style="font-weight:bold;" | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Participant | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Second Image | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 1 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 2 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 3 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 3 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 6 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 3 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 7 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 8 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 9 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 10 | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 5 | |||

|- | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | Average | |||

| style="vertical-align:middle; background-color:#F8F9FA;" | 4.1 | |||

|} | |||

According to the DMOS result, both images have high scores which means acceptable fidelity in terms of subjective quality assessment. | |||

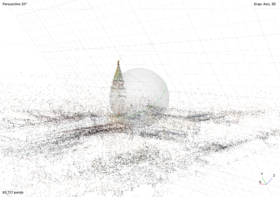

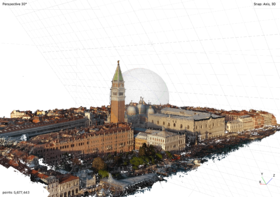

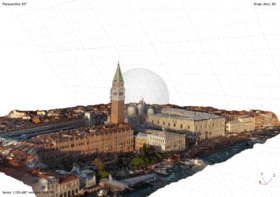

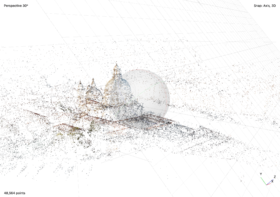

= Display of YouTube-Video-Based Venice Models = | |||

== Basilica Cattedrale Patriarcale di San Marco == | |||

{|class="wikitable" style="text-align:right; font-family:Arial, Helvetica, sans-serif !important;;" | |||

|- | |||

|[[File:tiedpoint2.png|280px|center|thumb|upright=1.5|Figure 20: Tied Point Model]] | |||

|[[File:densecloud2.png|280px|center|thumb|upright=1.5|Figure 21: Dense Cloud Model]] | |||

|[[File:tiled2.png|280px|center|thumb|upright=1.5|Figure 22: Tiled Model]] | |||

|[[File:mesh2.png|280px|center|thumb|upright=1.5|Figure 23: Mesh Model]] | |||

|} | |||

== Basilica di San Giorgio Maggiore == | |||

{|class="wikitable" style="text-align:right; font-family:Arial, Helvetica, sans-serif !important;;" | |||

|- | |||

|[[File:tiedpoint.png|280px|center|thumb|upright=1.5|Figure 24: Tied Point Model]] | |||

|[[File:Densecloud.png|280px|center|thumb|upright=1.5|Figure 25: Dense Cloud Model]] | |||

|[[File:tiled.png|280px|center|thumb|upright=1.5|Figure 26: Tiled Model]] | |||

|[[File:mesh.png|280px|center|thumb|upright=1.5|Figure 27: Mesh Model]] | |||

|} | |||

== Basilica di Santa Maria della Salute == | |||

{|class="wikitable" style="text-align:right; font-family:Arial, Helvetica, sans-serif !important;;" | |||

|- | |||

|[[File:tied_2.png|280px|center|thumb|upright=1.5|Figure 28: Tied Point Model]] | |||

|[[File:dense_2.png|280px|center|thumb|upright=1.5|Figure 29: Dense Cloud Model]] | |||

|[[File:tiled_2.png|280px|center|thumb|upright=1.5|Figure 30: Tiled Model]] | |||

|[[File:mesh_2.png|280px|center|thumb|upright=1.5|Figure 31: Mesh Model]] | |||

|} | |||

= Limitations and Further Work= | |||

= | == Limitations == | ||

* '''Challenges in model implementation from Google Earth Image''' | |||

Using Google Earth images is a solution to the problems mentioned above. But it is not a perfect method either. It seemed that we were in a dilemma when we tried to collect images from Google Earth: When we took screenshots from a long distance from the ground, the resolution of images was very low, the details of the buildings were not well rendered, thus causing the low quality of the PointCloud model. When we took screenshots from a short distance from the ground, we obtained a much better result, the details were much clearer. But to get this result, the number of images we needed to build the PointCloud model increase exponentially, and the calculation was almost impossible for a Personal Computer to afford. | |||

One possible solution is dividing Venice into smaller parts, building a PointCloud for every part, and merging the PointClouds models together in the end. However, this solution requires registration, which is not only quite imprecise, but also hard to implement. | |||

* | * '''Inaccurate Plane Generation and Redressing''' | ||

= | One of the inherent defects in the methodology is 'Plane Generation' and 'Plane Redressing' are imperfect. When we implement 'Plane Redressing', the ideal scenario is that after a single transformation, the plane equation would become ''0x + 0y + z = 0''. However, when we implement the transformation, it is not the case: the coefficients before x and y are not exactly 0. The error caused by this inherent defect could be neutralised by implementing the transformation for 1000 times: When the number of iterations increases, the coefficients before x and y approaches zero infinitely. | ||

== Further Work == | |||

* '''More Precise Georeferencing and Pixel Matching''' | |||

We only choose ten ground control points for QGIS to georeference the raster height map. Generally, The more points you select, the more accurate the image is registered to the target coordinates of the cadaster. In the future, after choosing enough ground control points, we will compare our Venice elevation map with the cadaster pixel by pixel in the same resolution. Both maps use the same color and grey scales. | |||

* '''Building Contour Sharpening''' | |||

In our Venice elevation map, the building footprint is blurred and not well detected in some areas. In the future, we will use Shapely in Python to find the polygons and OpenCV to implement contour approximation. | |||

= Planning = | = Planning = | ||

| Line 162: | Line 577: | ||

| | | | ||

* Configure the environment and master the related softwares and libraries | * Configure the environment and master the related softwares and libraries | ||

* Find suitable drone videos on YouTube and | * Find suitable drone videos on YouTube and extract photos for further photogrammetry work | ||

| align="center" | ✓ | | align="center" | ✓ | ||

|- | |- | ||

| week 8-9 | | week 8-9 | ||

| | | | ||

* Align photos, generate and denoise dense point clouds | * Align photos, generate and denoise dense point clouds, build mesh and tiled models of Venice | ||

* | * Collect screenshots of the whole Venice on Google Earth | ||

| align="center" | ✓ | | align="center" | ✓ | ||

|- | |- | ||

| week 10 | | week 10 | ||

| | | | ||

* | * Generate the plane of point cloud modela | ||

* Prepare for the midterm presentation | * Prepare for the midterm presentation | ||

| align="center" | ✓ | | align="center" | ✓ | ||

| Line 180: | Line 594: | ||

| week 11 | | week 11 | ||

| | | | ||

* | * Construct City Elevation Model | ||

* Redress the plane and generate City Elevation Map | |||

| align="center" | ✓ | |||

|- | |- | ||

| week 12 | | week 12 | ||

| | | | ||

* | * Align our City Elevation Map to the given Venice cadaster | ||

* Assess the visual quality and building's height accuracy of the Google earth based Venice model, and buildings' height accuracy of the Youtube based Venice model | |||

| align="center" | ✓ | |||

|- | |- | ||

| week 13 | | week 13 | ||

| | | | ||

* Write final report, refine the map layers with building height information and the final models | * Write final report, refine the map layers with building height information and the final models | ||

| align="center" | ✓ | |||

|- | |- | ||

| week 14 | | week 14 | ||

| | | | ||

* Refine the final report and | * Refine the final report, code and models | ||

* Prepare for final presentation | * Prepare for the final presentation | ||

| align="center" | ✓ | |||

|- | |- | ||

|} | |} | ||

= References = | |||

[1] https://pubs.er.usgs.gov/publication/ofr20211039 | |||

= Deliverables = | |||

* Source Codes: | |||

[https://github.com/yetiiil/venice_building_height https://github.com/yetiiil/venice_building_height] | |||

* Venice Point Cloud Models: | |||

[https://filesender.switch.ch/filesender2/?s=download&token=0b7afa31-f914-43e5-899d-ba29402f3754 Google Earth Model] | |||

[https://filesender.switch.ch/filesender2/?s=download&token=12fdf964-9d38-42f7-9270-81bbdb0711d7 Basilica di Santa Maria della Salute Model] | |||

[https://filesender.switch.ch/filesender2/?s=download&token=39e18118-9146-412e-881c-6e23409bef9f Basilica di San Giorgio Maggiore Model] | |||

[https://filesender.switch.ch/filesender2/?s=download&token=96271d6e-90c2-4d2c-a2b0-351df08c6945 Basilica Cattedrale Patriarcale di San Marco Model] | |||

In case the files expire, please contact yuxiao.li@epfl.ch | |||

Latest revision as of 11:58, 14 January 2022

Introduction

In this project, our main goal is to obtain the height information of buildings in Venice city. In order to achieve this goal, we construct a point cloud model of Venice from Google Earth and YouTube drone videos with the help of photogrammetry tools. Initially, we experimented on drone videos from YouTube. Since our goal is to detect the height of all the buildings in Venice, it is important for us to collect images of all the buildings in the city. However, in YouTube videos, we only found some landmark architecture whose height information is already available on the Internet. Hence, we switched the data scource to Google Earth images. With our current image source, we have successfully calculated the heights of Venice buildings including unknown buildings and famous ones. We also evaluated both subjective and objective quality of our City Elevation Map, which will be discussed in the following sections. The Venice 3d model along with height information can be used to create an virtual experience. Besides, the City Elevation map can be useful for urban planning and security purposes in the future.

Motivation

Venice, one of the most extraordinary cities in the world, was built on 118 islands in the middle of the Venetian Lagoon at the head of the Adriatic Sea in Northern Italy. The planning of the city, to be floating in a lagoon of water, reeds, and marshland always amazes travellers and architects. The beginning of the long and rich history of Venice was on March 25th 421 AD (At high noon). Initially, the city was made using mud and woods. The revolution of the city plan has changed over time since it was built. Today, "the floating city of Italy" is called "the sinking city". Over the past 100 years, the city has sunk 9 inches. According to environmentalists, because of global warming, the sea level will rise, which will eventually cover this beautiful city in upcoming years. To us engineers, it is important to keep track of the building height changes of Venice in order to take relevant actions in time to save the beautiful city. Since changes in natural disasters are sometimes unpredictable, to save the present beauty of Venice, we can recreate the Venice visiting experience virtually as a part of Venice Time Machine. This in the future will be relevant for travellers, historians, architects, and other enthusiasts in the world. Apart from the facts mentioned above, building height is one of the most important pieces of information to consider for urban planning, economic analysis and digital twin implementation.

Milestones

Milestone 1

- Get familiar with OpenMVG, Open3D, pyntcloud, Agisoft Metashape, Blender, CloudCompare and QGIS

- Collect high resolution venice drone videos on youtube and Google Earth images as supplementary materials

Milestone 2

- Align photos of Venice, generate sparse point clouds made up of only high-quality tie points and repeatedly optimize point cloud models by reconstruction uncertainty filtering, projection accuracy filtering and reprojection error filtering

- Remove outliers automatically and manually, and build dense point clouds based on sparse cloud

- Build Venice 3D model (mesh) and tiled model according to dense point cloud data

Milestone 3

- Generate ground plane with the largest support in the point cloud and redress the plane by translation and rotation

- Construct City Elevation Model by a point-to-plane method and generate City Elevation Model based on z-coordinate after redressing

Milestone 4

- Align the City Elevation Map to the reference cadaster using QGIS's georeferencing and evaluate the subjective visual quality of the map

- Evaluate the accuracy of height calculation of both Google Earth based Venice model and Youtube based Venice model

Methodology

Images Aquisition

Point cloud denotes a 3D content representation which is commonly preferred due to its high efficiency and relatively low complexity in the acquisition, storage, and rendering of 3D models. In order to generate Venice dense point cloud models, sequential images from different angles in different locations need to be collected first based on the following two sources.

Youtube Video Method

Our initial plan was to download drone videos of Venice on the Youtube platform, used FFmpeg to extract images from the video one frame per second, and generate dense point cloud models based on the images that we acquired. However, the videos we could get from drone videos are very limited: only a few of the videos are of high quality. Among those qualified videos, most videos only focused on monumental architecture such as St Mark's Square, St. Mark's Basilica, and Doge's Palace, where other buildings have a different extent of deficiencies from various angles in the point clouds. Therefore, a large proportion of Venice could not be 3D reconstructed if we only adopt Youtube Video Method.

Google Earth Method

In order to access the comprehensive area of Venice, we decided to use Google Earth as a complementary tool: we could obtain images of every building we want at each angle. With Google Earth, we are able to have much more access to aerial images of Venice, not only will these images serve as images set to generate dense point cloud models in order to calculate building heights, but we can also use the overlapping part in different point cloud models to evaluate point cloud models in a cross-comparison way.

In a nutshell, we apply a photogrammetric reconstruction tool to generate point cloud models. Photogrammetry is the art and science of extracting 3D information from photographs. As for the Youtube-based method, we will only target famous buildings which appear in different drone videos and select suitable images to form the specific building's image set. With regard to the Google Earth method, we will obtain images of the whole of Venice manually in a scientific trajectory of the photogrammetry route.

Point Cloud Generation

Sparse cloud is generated after aligning the multi-angle photos, which can observe overlapping photo pairs. Then the dense point cloud uses the estimated camera positions generated during tie points based matching and the depth map for each camera, which can have greater density than LiDAR point clouds.[1]

Point Cloud Optimization

Outlier Removal

When collecting data from scanning devices, the resulting point cloud tends to contain noise and artifacts that one would like to remove. Apart from selecting points in Agisoft Metashape manually, we also use Open3D to deal with the noises and artefacts. In the Open3D, there are two ways to detect the outliers and remove them: statistical outlier removal and radius outlier removal. Radius outlier removal removes points that have few neighbors in a given sphere around them, and statistical outlier removal removes points that are further away from their neighbors compared to the average for the point cloud. We set the number of the neighbors to be 50, radius to be 0.01. Automatic outlier removal could remove around 75% percent of noise, but the effect isn't perfect, which is not as good as the manually gradual selection. Therefore, Automatic outlier removal could serve as a prepossessing step for the final de-noise, after automatic outlier removal, we still need to manually de-noise the point cloud.

Error Reduction

The first phase of error reduction is to remove points that are the consequence of poor camera geometry. Reconstruction uncertainty is a numerical representation of the uncertainty in the position of a tie point based on the geometric relationship of the cameras from which that point was projected or triangulated, which is the ratio between the largest and smallest semi axis of the error ellipse created when triangulating 3D point coordinates between two images. Points constructed from image locations that are too close to each other have a low base-to-height ratio and high uncertainty. Removing these points does not affect the accuracy of optimization but reduces the noise in the point cloud and prevents points with large uncertainty in the z-axis from influencing other points with good geometry or being incorrectly removed in the reprojection error step. A reconstruction uncertainty of 10, which can be selected with the gradual selection filter and set to this value or level, is roughly equivalent to a good base-to-height ratio of 1:2.3 , whereas 15 is roughly equivalent to a marginally acceptable base-to-height ratio of 1:5.5. The reconstruction uncertainty selection procedures are repeated two times to reduce the reconstruction uncertainty toward 10 without having to delete more than 50 percent of the tie points each time.[1]

The second phase of error reduction phase removes points based on projection accuracy. Projection accuracy is essentially a representation of the precision with which the tie point can be known given the size of the key points that intersect to create it. The key point size is the standard deviation value of the Gaussian blur at the scale at which the key point was found. The smaller the mean key point value, the smaller the standard deviation and the more precisely located the key point is in the image. The highest accuracy points are assigned to level 1 and are weighted based on the relative size of the pixels. A tie point assigned to level 2 has twice as much projection inaccuracy as level 1. The projection accuracy selection procedure is repeated without having to delete more than 50% of the points each time until level 2 is reached and there are few points selected. [1]

The final phase of error reduction phase is removing points based on reprojection error. This is a measure of the error between a 3D point's original location on the image and the location of the point when it is projected back to each image used to estimate its position. Error-values are normalized based on key point size. High reprojection error usually indicates poor localization accuracy of the corresponding point projections at the point matching step. Reprojection error can be reduced by iteratively selecting and deleting points, then optimizing until the unweighted RMS reprojection error is between 0.13 and 0.18 which means that there is 95% confidence that the remaining points meet the estimated error.[1]

Plane Generation

To calculate the height of the building, we have to find the ground of the Venice PointCloud model, which could be retrieved by a tool provided by Open3D. Open3D supports segmentation of geometric primitives from point clouds using "Random Sample Consensus", which is an iterative method to estimate parameters of a mathematical model from a set of observed data that contains outliers when outliers are to be accorded no influence on the values of the estimates. To find the ground plane with the largest support in the point cloud, we use segment_plane by specifying "distance_threshold". The support of a hypothesised plane is the set of all 3D points whose distance from that plane is at most some threshold (e.g. 10 cm). After each run of the RANSAC algorithm, the plane that had the largest support is chosen. All the points supporting that plane may be used to refine the plane equation, which is more robust than just using the neighbouring points by performing PCA or linear regression on the support set.

Plane Redressing

After selecting the most convenient plane and its supporting points, we define the plane equation in the 3D space. We want to build a PointCloud model where the Z coordinate of a point equals the height of this point in real world, so the next step is to redress the plane by projecting it on an X-O-Y space. For the projection calculation, we used the projection matrix mentioned below.

Here, the plane equation is ax + by + cz + d = 0 The redressing was performed in two steps, first translation and then rotation.

For translation, the plane intersects Z-axis at (0, 0, -d/c). So the translation is

which passes through the origin and vector v = (a, b, c)T

For the rotation, the angle between v and k = (0, 0, 1)T' is given by:

The axis of rotation has to be orthogonal to v and k. so its versor is:

The rotation is represented by the matrix:

Please note that:

Height Detection

Point-to-Plane Based Height Calculation and City Elevation Model Construction

Once the plane equation is obtained in the previous step, we could start calculating the height of buildings. In the first step, we calculate the relative height of the buildings based on the formula of point-to-plane distance. The coordinate information of each point could be accessed by the 'points' attribute of the ‘PointCloud’ object with the help of 'Open3D'. Since we already have the plane equation, using this formula, we could obtain the distances between each point and the plane and save them in a (n, 1) array.

To visualise the height of the building, the first method is to change the colors of the points in the PointCloud model. For each point in the ‘PointCloud’, it has a ‘Colors' attribute. By calling this attribute, an (n, 3) array of value range [0, 1], containing RGB colors information of the points will be returned. Therefore, by normalising the ‘distances array’ values to the range of [0,1] and expanding the 'distances array' to three dimensions by replication, we get a (n, 3) ‘height array' in the form of (n, 3) 'colors array’.

Basically, we transform the height information of each point to its 'colors' information, expressing the height by its color, higher points have lighter colors and vice versa. This model provides us with an intuitive sense of the building heights, which is more qualitative than quantitative.

Coordinate Based Height Calculation and City Elevation Map Construction

To show the building heights more quantitatively, we decided to construct a City Elevation Map, which could be achieved by plotting the height information of points in a 2D map. However, there is a prerequisite: we should get the actual height information of every point. After implementing "Plane Redressing", we assume that the z-coordinate can be approximated as the height. But before directly using it, we still need to scale the model so that the height of buildings in the model matches the actual height of buildings. For instance, we know the actual height of a reference point in the real world, and we could also obtain the height of this reference point in the PointCloud model, therefore, we could obtain the scale factor in this PointCloud model. For all the other buildings in the model, the actual height equals the height in the PointCloud model multiplying the scale factor.

To put it into practice, we need to set a reference point in the model, "Campanile di San Marco" is the best choice. The height of the highest building in Venice, "Campanile di San Marco", is 98.6 metres. In this step, the built-in function "Scale" from Open3D is adopted, and we finally obtained a 1:1 Venice PointCloud model where the z-coordinate of a point equals the actual height of this point in the real world.

Cadaster Alignment

In order to map our City Elevation Map to the cadaster map, we use QGIS for georeferencing[1], which is the process of assigning locations to geographical objects within a geographic frame of reference. QGIS enables us to manually mark ground control points in both maps: Firstly, we select a representative location from the city elevation map, then we select the same location in the reference cadastre map. We set around ten pairs of ground control points to align the two maps, and the Venice zone EPSG 3004 should be chosen as the coordinate reference system when it comes to the transformation setting. The blue layer is the cadaster map while the red layer is our city elevation map. The georeferencing result is acceptable, where our height map matches the cadaster mostly accurately without distortion.

Quality Assessment

For each building, the average building height was obtained using Interactive point selection method of Open3D. To evaluate the precision of our model, we compared our average measured height with the actual building height.

Then we take the absolute value of each building's error rate to compute the mean error.

For each model,

where n is the total number of buildings in the model

Objective Assessment of Google-Earth-Based Venice Model

The mean error of the building height measurement in the Google Earth based Venice Model is 3.3% according to landmarks and other buildings below.

Assessment of Landmarks

In order to obtain the height information of below specific buildings in the model, we use function 'pick_points(pcd)' in Open3D to selct the vertex of building. Then we can get coordinate of the vertex and the z-coordinate represents the corresponding building's height.

| Name of Buildings | Average Measured Height | Reference Height | Error | Pictures |

| Basilica di San Marco | 44m | 43m | 2.32% | |

| Campanile di San Marco | 99m | 98.6m | 0.40% | |

| Chiesa di San Giorgio Maggiore | 42m | NA | NA | |

| Campanile di San Giorgio Maggiore | 72m | 63m | 12.5% | |

| Basilica di Santa Maria della Salute | 64m | NA | NA | |

| Campanile di Santa Maria della Salute | 49m | NA | NA | |

| Basilica dei Santi Giovanni e Paolo | 54m | 55.4m | 2.52% | |

| Campanile di Chiesa di San Geremia | 50m | 50m | 0 | |

| Campanile dei Gesuiti | 43m | 40m | 7.50% | |

| Campanile San Francesco della Vigna | 76m | 69m | 10.14% | |

| Chiesa del Santissimo Redentore | 47m | 45m | 4.44% | |

| Hilton Molino Stucky Venice | 45m | NA | NA | |

Assessment of other Buildings

| Buildings near Basilica dei Santi Giovanni e Paolo | Buildings near Basilica dei Frari | ||||||

| Index | Average Measure Height | Reference Height | Error | Index | Average Measure Height | Reference Height | Error |

| 1 | 28.25m | 28.1m | 0.53% | 11 | 63m | 63.07m | 0.11% |

| 2 | 19.5m | 19.44m | 0.30% | 12 | 31m | 30.94m | 0.19% |

| 3 | 18.5m | 18.77m | 1.43% | 13 | 20m | 20.8m | 3.84% |

| 4 | 23m | 22.59m | 1.81% | 14 | 20m | 21.28m | 6.01% |

| 5 | 17m | 16.95m | 0.29% | 15 | 26m | 28.22m | 7.86% |

| 6 | 31m | 30.83m | 0.55% | 16 | 24m | 24.92m | 3.69% |

| 7 | 20.5m | 21.02m | 2.47% | 17 | 26m | 26.4m | 1.51% |

| 8 | 17m | 17.24m | 1.39% | 18 | 46m | 46.58m | 1.24% |

| 9 | 27.5m | 26.31m | 4.52% | 19 | 15m | 15.87m | 5.48% |

| 10 | 19.5m | 19.41m | 0.46% | 20 | 10m | 10.56m | 5.30% |

Objective Assessment of Youtube-Video-Based Venice Model

| Buildings near Basilica Cattedrale Patriarcale di San Marco | Buildings near Basilica di Santa Maria della Salute | ||||||

| Index | Average Measure Height | Reference Height | Error | Index | Average Measure Height | Reference Height | Error |

| 1 | 99m | 98.6m | 0.40% | 8 | 21m | 21.76m | 3.49% |

| 2 | 44m | 43m | 2.32% | 9 | 19m | 20.12m | 5.56% |

| 3 | 30m | 30.59m | 1.92% | 10 | 11m | 11.15m | 1.34% |

| 4 | 24.33m | 23.21m | 4.82% | 11 | 22m | 23.21m | 5.21% |

| 5 | 26m | 26.35m | 1.32% | 12 | 11m | 12.07m | 8.86% |

| 6 | 21m | 22.21m | 5.44% | 13 | 14m | 14.18m | 1.26% |

| 7 | 21m | 21.53m | 2.46% | 14 | 16m | 16.98m | 5.77% |

Subjective Assessment of Google-Earth-Based Venice Model

During the subjective assessment, We gather ten volunteers to give their subjective scores on our part of Digital Elevation map to evaluate the visual quality of the map. Here we use Double Stimulus Impairment Scale (DSIS) which is a type of double stimulus subjective experiment in which pairs of images are shown next to each other to a group of people for the rating. One stimulus is always the reference image, while the other is the image that needs to be evaluated. The participants are asked to rate the images according to a five-levels grading scale according to the impairment that they are able to perceive between the two images (5-Imperceptible, 4-Perceptible but not annoying, 3-Slightly annoying, 2-Annoying, 1-Very annoying). We will compute the Differential Mean Opinion Score (DMOS) for the below two months. The DMOS score can be computed as:

where N is the number of participants and DVij is the differential score by participant i for the stimulus j. The score of the reference maps are always set to be 5.The DMOS score of the images are:

| DMOS | |||

|---|---|---|---|

| Participant | First Image | ||

| 1 | 5 | ||

| 2 | 4 | ||

| 3 | 4 | ||

| 4 | 4 | ||

| 5 | 5 | ||

| 6 | 4 | ||

| 7 | 5 | ||

| 8 | 5 | ||

| 9 | 4 | ||

| 10 | 4 | ||

| Average | 4.4 | ||

| DMOS | |||

|---|---|---|---|

| Participant | Second Image | ||

| 1 | 5 | ||

| 2 | 4 | ||

| 3 | 3 | ||

| 4 | 4 | ||

| 5 | 4 | ||

| 6 | 3 | ||

| 7 | 5 | ||

| 8 | 4 | ||

| 9 | 4 | ||

| 10 | 5 | ||

| Average | 4.1 | ||

According to the DMOS result, both images have high scores which means acceptable fidelity in terms of subjective quality assessment.

Display of YouTube-Video-Based Venice Models

Basilica Cattedrale Patriarcale di San Marco

Basilica di San Giorgio Maggiore

Basilica di Santa Maria della Salute

Limitations and Further Work

Limitations

- Challenges in model implementation from Google Earth Image

Using Google Earth images is a solution to the problems mentioned above. But it is not a perfect method either. It seemed that we were in a dilemma when we tried to collect images from Google Earth: When we took screenshots from a long distance from the ground, the resolution of images was very low, the details of the buildings were not well rendered, thus causing the low quality of the PointCloud model. When we took screenshots from a short distance from the ground, we obtained a much better result, the details were much clearer. But to get this result, the number of images we needed to build the PointCloud model increase exponentially, and the calculation was almost impossible for a Personal Computer to afford.

One possible solution is dividing Venice into smaller parts, building a PointCloud for every part, and merging the PointClouds models together in the end. However, this solution requires registration, which is not only quite imprecise, but also hard to implement.

- Inaccurate Plane Generation and Redressing

One of the inherent defects in the methodology is 'Plane Generation' and 'Plane Redressing' are imperfect. When we implement 'Plane Redressing', the ideal scenario is that after a single transformation, the plane equation would become 0x + 0y + z = 0. However, when we implement the transformation, it is not the case: the coefficients before x and y are not exactly 0. The error caused by this inherent defect could be neutralised by implementing the transformation for 1000 times: When the number of iterations increases, the coefficients before x and y approaches zero infinitely.

Further Work

- More Precise Georeferencing and Pixel Matching

We only choose ten ground control points for QGIS to georeference the raster height map. Generally, The more points you select, the more accurate the image is registered to the target coordinates of the cadaster. In the future, after choosing enough ground control points, we will compare our Venice elevation map with the cadaster pixel by pixel in the same resolution. Both maps use the same color and grey scales.

- Building Contour Sharpening

In our Venice elevation map, the building footprint is blurred and not well detected in some areas. In the future, we will use Shapely in Python to find the polygons and OpenCV to implement contour approximation.

Planning

| Week | Tasks | Completion |

|---|---|---|

| week 5 |

|

✓ |

| week 6-7 |

|

✓ |

| week 8-9 |

|

✓ |

| week 10 |

|

✓ |

| week 11 |

|

✓ |

| week 12 |

|

✓ |

| week 13 |

|

✓ |

| week 14 |

|

✓ |

References

[1] https://pubs.er.usgs.gov/publication/ofr20211039

Deliverables

- Source Codes:

https://github.com/yetiiil/venice_building_height

- Venice Point Cloud Models:

Basilica di Santa Maria della Salute Model

Basilica di San Giorgio Maggiore Model

Basilica Cattedrale Patriarcale di San Marco Model

In case the files expire, please contact yuxiao.li@epfl.ch