Extracting Toponyms from Maps of Jerusalem

Revision as of 23:30, 14 December 2023

Rubric

Written deliverables (Wiki writing) (40%)

Project plan and milestones (10%) (>300 words)

Motivation and description of the deliverables (10%) (>300 words)

Detailed description of the methods (10%) (>500 words)

Quality assessment and discussion of limitations (10%) (>300 words)

The indicated number of words is a minimal bound. Detailed description can in particular be extended if needed.

Project Timeline

| Timeframe | Task | Completion |
|---|---|---|
| Week 4 | | ✓ |
| Week 5 | | ✓ |
| Week 6 | | ✓ |
| Week 7 | | ✓ |
| Week 8 | | ✓ |
| Week 9 | | ✓ |
| Week 10 | | ✓ |
| Week 11 | | ✓ |
| Week 12 | | |
| Week 13 | | |
| Week 14 | | |

Introduction

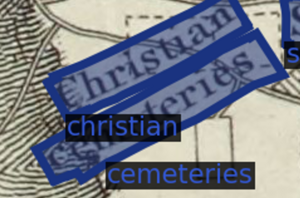

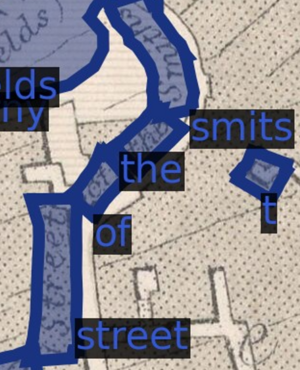

We aim to programmatically and accurately extract toponyms (place names) from historical maps of Jerusalem. Building on mapKurator, a scene text recognition pipeline developed specifically for toponym extraction by the University of Minnesota's Knowledge Computing Lab, we develop a novel label extraction and processing pipeline that can substantially improve accuracy relative to mapKurator's spotter module alone. We then evaluate how well our pipeline generalizes, describe the limitations of our approach, and suggest directions for future improvements in accuracy and end-user interactivity.

Motivation

Developing an accurate, accessible, and generally applicable tool for extracting map toponyms remains an unrealized goal. Despite the boon that deep learning has brought to scene text recognition (STR) over the past decade, STR in general remains difficult because of the sheer variety of shapes, fonts, colors, languages, and text backgrounds encountered.[1] For historical maps, and for Jerusalem especially, these difficulties are compounded because cartographers do not necessarily agree on toponym spellings (as with toponyms transliterated from Arabic) or, as with many biblical toponyms, even on the precise location of certain places.[2] Properly collecting toponym locations and spellings from historical maps of Old Jerusalem would thus allow researchers to study how these ambiguities have manifested as agreement or disagreement in geographic depiction over time.

In an effort to automate text recognition on historical map archives (our exact use case), the University of Minnesota's Knowledge Computing Lab has developed an end-to-end toponym extraction and rectification pipeline called mapKurator.[3] While we build on their methods, we identify three issues with simply running mapKurator on historical maps of Jerusalem to achieve our intended result. First, some elements of the full mapKurator pipeline (namely the use of OpenStreetMap to correct extracted labels) are unsuitable for historical maps of Jerusalem because of the ambiguities described above. Second, the performance of the chief text extraction tool in mapKurator depends heavily on its model weights, which users may lack the computational power or ground truth labels to train. Third, mapKurator's text spotting tool extracts only individual words, so further processing is required to capture multi-word toponyms. We therefore augment mapKurator's text spotting tool with a pipeline that repeatedly samples each map and then recovers proper toponyms from those samples.

The contributions of this project are thus inroads into the collection of accurate toponyms from historical maps of Jerusalem and a general, accessible pipeline that can be mounted on a pre-trained STR label extractor to improve accuracy.

Deliverables

- A novel, general-use, and computationally accessible toponym extraction pipeline capable of augmenting pre-trained SOTA neural network models.

- A set of X ground truth toponyms across Y historical maps of Jerusalem.

- A set of X extracted toponyms across Y historical maps of Jerusalem.

- A set of X labels denoting font similarity across Y images of toponyms.

These deliverables are available via the Git repository linked at the bottom of this page.

Methodology

MapKurator

Pyramid Scan

Extracted Label Processing

Introduction & Definitions

Subword Deduplication

Nested Word Flattening

Single-Line Sequence Recovery

Multi-Line Sequence Recovery

Evaluation Metrics

We have not yet described how we build our ground truth label set G. Using the online image annotation software VIA, we manually annotate X labels across Y maps. These Y maps were chosen for their high resolution, their preponderance of toponyms placed directly over the locations they name (as opposed to inside legends), and their visual and linguistic diversity. Ground truth labels are annotated at the line level rather than the toponym level. For example, if "Church of" appears directly above "the Flagellation", we annotate each as a separate ground truth label, but manually indicate that they belong to the same toponym by assigning them a shared group id. Line-level annotations with the option of multiline combination allow us to report accuracy statistics at both the line and multiline levels, shedding light on how our pipeline performs in different contexts.
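To make the group-id scheme concrete, here is a minimal Python sketch of how line-level annotations might be combined into multiline toponyms. It is not the project's code: it assumes the VIA annotations have already been parsed into hypothetical `(text, box, group_id)` records with axis-aligned `(x0, y0, x1, y1)` boxes, and it assumes that lines of one toponym are read top to bottom, then left to right. The function name `merge_lines` is illustrative.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x0, y0, x1, y1)


def merge_lines(lines: List[Tuple[str, Box, Optional[int]]]) -> List[Tuple[str, Box]]:
    """Combine line-level labels that share a group id into toponym-level labels.

    Records are hypothetical (text, box, group_id) tuples; a group_id of None
    marks a single-line toponym that is passed through unchanged.
    """
    groups: Dict[int, List[Tuple[str, Box]]] = defaultdict(list)
    merged: List[Tuple[str, Box]] = []
    for text, box, gid in lines:
        if gid is None:
            merged.append((text, box))
        else:
            groups[gid].append((text, box))
    for members in groups.values():
        # Assumed reading order: top to bottom, then left to right.
        members.sort(key=lambda m: (m[1][1], m[1][0]))
        text = " ".join(t for t, _ in members)
        # The multiline label's box is the union box of its lines.
        box = (min(b[0] for _, b in members), min(b[1] for _, b in members),
               max(b[2] for _, b in members), max(b[3] for _, b in members))
        merged.append((text, box))
    return merged
```

For example, the two lines "Church of" and "the Flagellation" annotated with the same group id collapse into a single "Church of the Flagellation" label whose box spans both lines, while ungrouped lines are kept as they are. Keeping the line-level records and deriving the multiline set from them is what lets both levels be scored from a single annotation pass.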

To evaluate Ω for a given map, we first retain only those extracted and processed labels that fall within the crop of the map containing all of its ground truth toponyms in G. We then compute all pairwise geometric IoUs between this subset of Ω and G, find the one-to-one matching that maximizes the sum of these IoUs, and divide this sum by the number of extracted labels (for precision) or by the number of ground truth labels (for recall). Text-based accuracy statistics, meanwhile, report the sum of normalized Levenshtein edit distances over the same geometrically matched pairs, divided by the pertinent number of labels.
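The evaluation procedure can be sketched in Python as follows. This is a minimal, self-contained sketch under stated assumptions, not the project's implementation: labels are assumed to be already cropped and to carry axis-aligned `(x0, y0, x1, y1)` boxes rather than the polygons mapKurator produces; edit distance is assumed to be normalized by the longer string; and text is scored as one minus that normalized distance, an assumption made so that, like IoU, higher is better and unmatched labels contribute zero. The IoU-maximizing one-to-one matching uses the Hungarian algorithm (`scipy.optimize.linear_sum_assignment` with `maximize=True` would be the usual library alternative). All function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x0, y0, x1, y1)
Label = Tuple[Box, str]                  # (bounding box, transcribed text)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def levenshtein(s: str, t: str) -> int:
    """Edit distance with unit-cost insertions, deletions and substitutions."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, 1):
        cur = [i]
        for j, ct in enumerate(t, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (cs != ct)))
        prev = cur
    return prev[-1]


def text_score(s: str, t: str) -> float:
    """Assumed score: 1 - edit distance / length of the longer string."""
    longest = max(len(s), len(t))
    return 1.0 - levenshtein(s, t) / longest if longest else 1.0


def min_cost_assignment(cost: List[List[float]]) -> List[int]:
    """Hungarian algorithm for an n x m cost matrix with n <= m.
    Returns assign, where assign[i] is the column matched to row i."""
    n, m = len(cost), len(cost[0])
    inf = float("inf")
    u, v = [0.0] * (n + 1), [0.0] * (m + 1)  # dual potentials
    p, way = [0] * (m + 1), [0] * (m + 1)    # p[j]: 1-based row holding column j
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv, used = [inf] * (m + 1), [False] * (m + 1)
        while True:  # grow an alternating tree until a free column is reached
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):  # shift potentials by the smallest slack
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:  # augment along the alternating path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assign = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assign[p[j] - 1] = j - 1
    return assign


def evaluate(pred: Sequence[Label], gt: Sequence[Label]) -> Dict[str, float]:
    """Geometric and textual precision/recall from an IoU-maximizing 1-to-1 matching."""
    keys = ["geometric_precision", "geometric_recall", "textual_precision", "textual_recall"]
    if not pred or not gt:
        return dict.fromkeys(keys, 0.0)
    ious = [[iou(pb, gb) for gb, _ in gt] for pb, _ in pred]
    # The solver needs rows <= columns, so put the smaller label set on the rows.
    flip = len(pred) > len(gt)
    rows = [list(col) for col in zip(*ious)] if flip else ious
    # Maximizing the IoU sum is the same as minimizing its negation.
    assign = min_cost_assignment([[-x for x in row] for row in rows])
    pairs = [(j, i) if flip else (i, j) for i, j in enumerate(assign)]
    # Drop zero-overlap pairs: the solver pairs those arbitrarily.
    pairs = [(i, j) for i, j in pairs if ious[i][j] > 0]
    geo = sum(ious[i][j] for i, j in pairs)
    txt = sum(text_score(pred[i][1], gt[j][1]) for i, j in pairs)
    return dict(zip(keys, [geo / len(pred), geo / len(gt), txt / len(pred), txt / len(gt)]))
```

As a usage example, with ground truth boxes for "Church of" and "Flagellation" and predictions "Church of", "Flagelation" (same boxes) plus one spurious "noise" box elsewhere, `evaluate` returns a geometric recall of 1.0 and a geometric precision of 2/3: the spurious label lowers precision but not recall, which is the asymmetry the two denominators are meant to capture.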

Results

| | Geometric precision | Textual precision | Geometric recall | Textual recall |
|---|---|---|---|---|
| Baseline | | | | |
| Pyramid + Processing | | | | |

| | Geometric precision | Textual precision | Geometric recall | Textual recall |
|---|---|---|---|---|
| Baseline | | | | |
| Pyramid + Processing | | | | |

| | Geometric precision | Textual precision | Geometric recall | Textual recall |
|---|---|---|---|---|
| Baseline | | | | |
| Pyramid + Processing | | | | |

Limitations and Future Work

- Our results should be shown relative to those on the maps evaluated in the mapKurator paper.

- Only X maps and Y labels are available as ground truth.

- The font similarity labels are imbalanced and may not generalize.

- There is no OpenStreetMap equivalent for historical Jerusalem, and developing one would require a custom set of ground truth possibilities.

Github Repository

Acknowledgements

We thank Professor Frédéric Kaplan, Sven Najem-Meyer, and Beatrice Vaienti of the DHLAB for their valuable guidance over the course of this project.

References

Webpages

Chief Literature

- Kim, Jina, et al. "The mapKurator System: A Complete Pipeline for Extracting and Linking Text from Historical Maps." arXiv preprint arXiv:2306.17059 (2023).

- Li, Zekun, et al. "An automatic approach for generating rich, linked geo-metadata from historical map images." Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 2020.

Footnotes

- [1] Liao, Minghui, et al. "Scene text recognition from two-dimensional perspective." Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 33. No. 01. 2019.

- [2] Smith, George Adam. Jerusalem: The Topography, Economics and History from the Earliest Times to AD 70. Vol. 2. Cambridge University Press, 2013.

- [3] Kim, Jina, et al. "The mapKurator System: A Complete Pipeline for Extracting and Linking Text from Historical Maps." arXiv preprint arXiv:2306.17059 (2023).