Extracting Toponyms from Maps of Jerusalem


Revision as of 14:23, 20 December 2023

Introduction

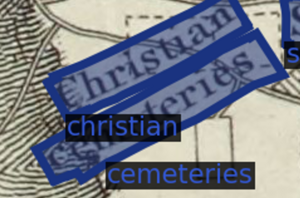

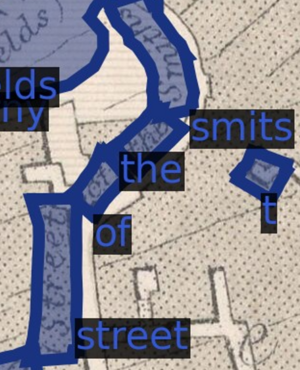

We aim to programmatically and accurately extract toponyms (place names) from historical maps of Jerusalem. Building on mapKurator, a scene text recognition pipeline developed specifically for toponym extraction by the University of Minnesota's Knowledge Computing Lab, we develop a novel label extraction and processing pipeline capable of significant accuracy improvements relative to mapKurator's spotter module alone. We then explore how well our pipeline generalizes, describe the limitations of our approach, and suggest possibilities for future progress in accuracy or end-user interactivity.

Motivation

Development of an accurate, accessible, and generally applicable tool for extracting map toponyms remains an unrealized goal. Indeed, despite the boon that deep learning has brought to scene text recognition (STR) over the past decade, STR in general remains difficult due to the sheer variety of shapes, fonts, colors, languages, and text backgrounds encountered.[1] In the context of historical maps, and of Jerusalem especially, STR issues are compounded by the fact that cartographers do not necessarily agree on toponym spellings (in the case of toponyms transliterated from Arabic, for example) or, as is true of biblical toponyms, even on the precise location of certain places.[2] Properly collecting toponym locations and spellings from historical maps of Old Jerusalem would thus permit researchers to understand how such ambiguities have manifested as agreement or disagreement in geographic depiction throughout time.

In an effort to automate text recognition on historical map archives - our exact use case - the University of Minnesota's Knowledge Computing Lab has developed an end-to-end toponym extraction and rectification pipeline dubbed mapKurator.[3] While we do build on their methods, we identify three issues with simply applying mapKurator to historical maps of Jerusalem to achieve our intended result. First, some elements of the full mapKurator pipeline (namely the use of OpenStreetMap to correct extracted labels) are not suitable for historical maps of Jerusalem due to the ambiguities described above. Second, the success of the chief tool used to extract text in mapKurator depends heavily on its model weights - weights that users may not have the computational power or ground truth labels to train. Third, mapKurator's text spotting tool only extracts individual words, requiring further processing to capture multi-word toponyms. We therefore augment mapKurator's text spotting tool with a pipeline involving repeated sampling and methods for recovering proper toponyms from said sampling.

The contributions of this project are thus inroads into the collection of accurate toponyms from historical maps of Jerusalem and a general, accessible pipeline that can be mounted on a pre-trained STR label extractor to improve accuracy.

Deliverables

- A novel, general-use, and computationally accessible toponym extraction pipeline capable of improving accuracy for pre-trained SOTA neural network models.

- A set of X ground truth toponyms across 3 historical maps of Jerusalem.

- A set of X extracted toponyms across 3 historical maps of Jerusalem.

- A set of X labels denoting font similarity across 3 images of toponyms.

These deliverables are available via the Git repository linked at the bottom of this page.

Methodology

MapKurator

Pyramid Scan

Extracted Label Processing

Introduction & Definitions

Subword Deduplication

Nested Word Flattening

Sequence Recovery

Font Similarity

Spline Connection Cost

We assume that word labels which belong to a sequence should be connectable via relatively short and non-curved splines. To implement this assumption, for each subword-deduplicated and nested-word-flattened word label $\psi \in \Psi$, we first apply PCA to the points constituting bounding box $P_{\psi}$ to recover unit vector $\vec{d}_{\psi}$, the principal component basis for a given label's bounding box. Furthermore, for each $P_{\psi}$, we create the vectors $\vec{e}_{\psi,p}$ between successive points $p \in \Z$ along $P_{\psi}$, recovering directional vectors along each label's bounding box. We then use these bounding-box vectors to adjust the principal component direction vector in preparation for spline calculation as follows:

Define $RES_p = |\vec{d}_{\psi} \cdot \vec{e}_{\psi,p}| (1 - |\vec{d}_{\psi} \cdot \vec{e}_{\psi,p}|)$. For bounding-box vectors parallel or orthogonal to $\vec{d}_{\psi}$, this value will be 0 (the parallel case requires $\vec{e}_{\psi,p}$ to be normalized to unit length inside the dot product, so that $|\vec{d}_{\psi} \cdot \vec{e}_{\psi,p}| = 1$). Our goal is to maximize the number of vectors (nearly or exactly) parallel or orthogonal to $\vec{d}_{\psi}$, as such a direction would represent a more suitable principal direction for a given bounding box. Thus, our specific objective function is $\argmin_{d}\sum_{p}w_p(RES_p)^2$, where $w_p$ is the length of $\vec{e}_{\psi,p}$ (it is more important for longer bounding-box vectors to agree with the principal component direction than shorter ones). We retrieve the optimal principal component direction $\vec{d}_{\psi}^*$ via the Gauss-Newton algorithm for solving non-linear least squares problems.
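The direction refinement described above can be sketched in a few lines of NumPy. This is a minimal illustration and not the project's implementation: it assumes 2D polygons, parametrizes the unit direction by a single angle, normalizes each edge vector before taking the dot product, and approximates the Jacobian by central differences. The function names (`pca_direction`, `unit_edges`, `weighted_residuals`, `refine_direction`) are hypothetical.

```python
import numpy as np

def pca_direction(polygon):
    """Unit vector of the first principal component of the polygon's points."""
    pts = np.asarray(polygon, dtype=float)
    centered = pts - pts.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[0]

def unit_edges(polygon):
    """Unit vectors between successive polygon points, plus their lengths."""
    pts = np.asarray(polygon, dtype=float)
    edges = np.roll(pts, -1, axis=0) - pts
    lengths = np.linalg.norm(edges, axis=1)
    keep = lengths > 0  # drop degenerate (repeated) points
    return edges[keep] / lengths[keep, None], lengths[keep]

def weighted_residuals(theta, edges, lengths):
    """sqrt(w_p) * RES_p for the direction d = (cos theta, sin theta)."""
    d = np.array([np.cos(theta), np.sin(theta)])
    a = np.abs(edges @ d)
    return np.sqrt(lengths) * a * (1.0 - a)

def refine_direction(polygon, d0, iters=50, h=1e-6):
    """Gauss-Newton on the angle of d, starting from direction d0."""
    edges, lengths = unit_edges(polygon)
    theta = float(np.arctan2(d0[1], d0[0]))
    for _ in range(iters):
        r = weighted_residuals(theta, edges, lengths)
        # Central-difference Jacobian (assumption: no analytic derivative).
        jac = (weighted_residuals(theta + h, edges, lengths)
               - weighted_residuals(theta - h, edges, lengths)) / (2.0 * h)
        denom = float(jac @ jac)
        if denom < 1e-12:  # flat Jacobian: already at a stationary point
            break
        theta -= float(jac @ r) / denom  # one-parameter Gauss-Newton step
    return np.array([np.cos(theta), np.sin(theta)])
```

Starting the refinement from `pca_direction(polygon)` mirrors the order of operations in the text. Because the residual is non-smooth where an edge becomes exactly orthogonal to the direction, the finite-difference step `h` bounds the attainable precision in this sketch.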

Next, for each $\vec{d}_{\psi}^*$, we define a primary and secondary axis relative to the associated bounding box centroid $\bar{P}_{\psi}$ (the latter axis being orthogonal to $\vec{d}_{\psi}^*$). We call this local axis the bounding box's anchor. If the variance of the principal component for a given bounding box is six times larger than the variance of the secondary component, we assume the polygon may have sufficient size to warrant different local axes at different locations (for example, the word may be curved), and thus, we divide the bounding box to permit multiple anchor points. These anchor points are points through which splines are drawn.

We create cubic Bézier splines to measure the cost of connecting word labels. Assume we are drawing a spline from anchor point $\xi_1$ to anchor point $\xi_2$ in distinct word labels $wl_1$ and $wl_2$. The first control point $cp_1$ is the centroid of $\xi_1$, and the fourth control point $cp_4$ is the centroid of $\xi_2$. The second control point $cp_2$ is $cp_1$ plus the product of (1) the distance from $cp_1$ to the furthest point on $wl_1$'s bounding box in $\xi_1$'s principal direction and (2) $\xi_1$'s principal direction vector. The third control point $cp_3$ is $cp_4$ minus the product of (1) the distance from $cp_4$ to the furthest point on $wl_2$'s bounding box in $\xi_2$'s principal direction and (2) $\xi_2$'s principal direction vector. We are, in effect, drawing a curve between two polygons that respects their orientation. After drawing such splines between all pairwise combinations of word labels (with geometrically distant pairs excluded to reduce computational cost), we can finally calculate the cost of connecting word labels via spline.
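As a rough illustration of the control-point construction, the sketch below builds the four control points for two anchors and samples the resulting curve. It is written under stated assumptions: the principal directions are flipped so that both point from the first label toward the second (the text does not say how sign ambiguity is resolved), and "furthest point in the principal direction" is read as the largest projection onto that direction. The helper names `reach`, `control_points`, and `cubic_bezier` are hypothetical.

```python
import numpy as np

def reach(polygon, centroid, direction):
    """Largest projection of the polygon's points onto `direction`,
    measured from `centroid`."""
    pts = np.asarray(polygon, dtype=float)
    return float(np.max((pts - centroid) @ direction))

def control_points(poly1, c1, d1, poly2, c2, d2):
    """Four cubic Bezier control points joining anchor 1 to anchor 2."""
    c1, d1, c2, d2 = (np.asarray(v, dtype=float) for v in (c1, d1, c2, d2))
    heading = c2 - c1
    # Assumption: orient both unit directions from label 1 toward label 2.
    if d1 @ heading < 0:
        d1 = -d1
    if d2 @ heading < 0:
        d2 = -d2
    cp2 = c1 + reach(poly1, c1, d1) * d1   # leave label 1 along its axis
    cp3 = c2 - reach(poly2, c2, -d2) * d2  # enter label 2 along its axis
    return np.array([c1, cp2, cp3, c2])

def cubic_bezier(ctrl, n=100):
    """Sample n points of the cubic Bezier curve defined by 4 control points."""
    p0, p1, p2, p3 = ctrl
    t = np.linspace(0.0, 1.0, n)[:, None]
    return ((1 - t) ** 3 * p0 + 3 * (1 - t) ** 2 * t * p1
            + 3 * (1 - t) * t ** 2 * p2 + t ** 3 * p3)
```

For two collinear, identically oriented boxes the control points fall on one line and the sampled curve is a straight segment, which is the low-cost case the method is designed to reward.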

For a given spline, let $C$ be its maximum curvature, $AD$ the Euclidean distance between the two connected anchor points' centroids, and $GD$ the minimum Euclidean distance between the two connected bounding boxes. The greater a spline's maximum curvature and the greater the distance between two polygons, the less likely it is that the two polygons deserve to be grouped into a sequence. However, both quantities should be scaled by centroid distance to achieve scale invariance: curvature has units of inverse length and is multiplied by $AD$, while $GD$ is a length and is divided by $AD$. Hence, the Spline Connection Cost is equal to $C \cdot AD \cdot \frac{GD}{AD} = C \cdot GD \in [0,\infty)$. The smaller this value, the more it makes sense for two word labels to belong to the same sequence.
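The cost can be approximated numerically as in the sketch below. It assumes the maximum curvature is estimated by sampling the standard planar curvature formula $\kappa(t) = |x'y'' - y'x''| / (x'^2 + y'^2)^{3/2}$ along the curve, and that the two bounding boxes do not overlap, so that the minimum gap is attained between a vertex of one polygon and an edge of the other. All function names are hypothetical.

```python
import numpy as np

def max_curvature(ctrl, n=400):
    """Approximate maximum curvature of a cubic Bezier by sampling n points."""
    p0, p1, p2, p3 = (np.asarray(p, dtype=float) for p in ctrl)
    t = np.linspace(0.0, 1.0, n)[:, None]
    # First and second derivatives of the cubic Bezier curve.
    d1 = 3 * ((1 - t) ** 2 * (p1 - p0) + 2 * (1 - t) * t * (p2 - p1)
              + t ** 2 * (p3 - p2))
    d2 = 6 * ((1 - t) * (p2 - 2 * p1 + p0) + t * (p3 - 2 * p2 + p1))
    cross = d1[:, 0] * d2[:, 1] - d1[:, 1] * d2[:, 0]
    speed = np.linalg.norm(d1, axis=1)
    ok = speed > 1e-12  # skip points where the curve momentarily stalls
    if not ok.any():
        return 0.0
    return float(np.max(np.abs(cross[ok]) / speed[ok] ** 3))

def _point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b."""
    ab = b - a
    denom = float(ab @ ab)
    s = 0.0 if denom == 0.0 else float(np.clip((p - a) @ ab / denom, 0.0, 1.0))
    return float(np.linalg.norm(p - (a + s * ab)))

def polygon_gap(poly_a, poly_b):
    """Minimum distance between two non-overlapping polygons (GD)."""
    a = np.asarray(poly_a, dtype=float)
    b = np.asarray(poly_b, dtype=float)
    best = np.inf
    for src, dst in ((a, b), (b, a)):
        for p in src:
            for i in range(len(dst)):
                q0, q1 = dst[i], dst[(i + 1) % len(dst)]
                best = min(best, _point_segment_distance(p, q0, q1))
    return float(best)

def spline_connection_cost(ctrl, poly_a, poly_b):
    """(C * AD) * (GD / AD), which simplifies to C * GD."""
    ctrl = np.asarray(ctrl, dtype=float)
    ad = float(np.linalg.norm(ctrl[3] - ctrl[0]))
    c = max_curvature(ctrl)
    gd = polygon_gap(poly_a, poly_b)
    if ad == 0.0:  # coincident anchors: fall back to the simplified form
        return c * gd
    return (c * ad) * (gd / ad)
```

Keeping the two scaled factors explicit makes the scale-invariance argument visible in code, even though the product reduces to `c * gd`.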

Combining Word Labels Using Font and Spline Metrics

With both font similarity and spline connection cost metrics in hand, we identify those word label pairs for which spline connection cost is less than 0.5 and for which font similarity is greater than 0.5. We then combine labels pairwise, prioritizing the smallest spline connection costs; combined labels inherit the maximum font similarity scores and the minimum spline connection costs of their constituent labels with respect to those labels' neighbors, while text is concatenated starting with the leftmost polygon's text in a given pair. This process is performed iteratively until no combinations can be made, at which point the resultant set Θ is assumed to be equivalent to G (some issues with this assumption are discussed in the limitations section below).
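The iterative combination step can be illustrated with a small greedy loop. This is a sketch under simplifying assumptions rather than the project's code: labels are keyed by integer ids and reduced to a left x-coordinate plus text, pairwise metrics are stored in dictionaries keyed by `frozenset({i, j})`, and merged text is joined with a single space. The function name `merge_labels` and its parameters are hypothetical.

```python
from itertools import count

def merge_labels(labels, cost, sim, max_cost=0.5, min_sim=0.5):
    """Greedily merge label pairs, cheapest spline connection first.

    labels: {id: (x_left, text)} with integer ids
    cost, sim: {frozenset({i, j}): float} pairwise metrics
    """
    labels, cost, sim = dict(labels), dict(cost), dict(sim)
    fresh = count(max(labels) + 1)  # ids for newly created labels
    while True:
        candidates = [(c, pair) for pair, c in cost.items()
                      if c < max_cost and sim.get(pair, 0.0) > min_sim]
        if not candidates:
            return labels
        _, pair = min(candidates, key=lambda item: item[0])
        left, right = sorted(pair, key=lambda k: labels[k][0])  # leftmost first
        new = next(fresh)
        labels[new] = (labels[left][0],
                       labels[left][1] + " " + labels[right][1])
        # The merged label inherits min cost / max similarity to each neighbor.
        for other in labels:
            if other in (left, right, new):
                continue
            keys = [frozenset((k, other)) for k in (left, right)]
            costs = [cost[k] for k in keys if k in cost]
            sims = [sim[k] for k in keys if k in sim]
            if costs:
                cost[frozenset((new, other))] = min(costs)
            if sims:
                sim[frozenset((new, other))] = max(sims)
        # Retire the two constituents and every pair that mentions them.
        del labels[left], labels[right]
        cost = {p: v for p, v in cost.items() if not p & {left, right}}
        sim = {p: v for p, v in sim.items() if not p & {left, right}}
```

For example, with labels "Church", "of", and "the" chained by low costs and high font similarity, and a distant "Gate" whose only connection costs 0.9, the loop would return "Church of the" and "Gate" as separate labels.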

Evaluation Metrics

Unmentioned thus far is how we develop our ground truth label set G. Using the online image annotation software VIA, we manually annotate X labels across Y maps. These Y maps were chosen for their high resolution, their preponderance of toponyms located directly over place locations (as opposed to inside legends), their visual diversity, and their linguistic diversity. Ground truth labels are annotated at the line level rather than the toponym level. For example, if "Church of" appears directly above "the Flagellation", we annotate each as a separate ground truth label - though we manually indicate that they belong to the same toponym by assigning a group id. Line-level annotations with the option for multiline combination allow us to report accuracy statistics at both the line and multiline level, shedding light on the power of our pipeline in different contexts.

To evaluate Θ for a given map, we first retain only those extracted and processed labels located in the same pixel space as a crop of the map which has all possible ground truth toponyms in G. We then calculate all pairwise geometrical IoUs between these subsets of Θ and G, find the 1-to-1 matched set that maximizes the sum of said IoUs, and divide this sum by the number of extracted labels (in the case of precision) or the number of ground truth labels (in the case of recall). Text-based accuracy statistics, meanwhile, report the sum of normalized Levenshtein edit distances from the 1-to-1 geometrically matched set divided by the pertinent number of labels.
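A compact sketch of this evaluation follows. Several simplifications are assumptions on our part: labels are axis-aligned boxes `(x_min, y_min, x_max, y_max)` rather than arbitrary polygons, the IoU-maximizing 1-to-1 matching is found with SciPy's `linear_sum_assignment`, and text is scored as one minus the normalized Levenshtein distance so that higher is better. Matched pairs with zero IoU are excluded from the text score, and both label lists are assumed to be non-empty. The function names are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def box_iou(a, b):
    """IoU of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    inter = max(iw, 0.0) * max(ih, 0.0)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def levenshtein(s, t):
    """Classic dynamic-programming edit distance."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, 1):
        cur = [i]
        for j, ct in enumerate(t, 1):
            cur.append(min(prev[j] + 1,                  # deletion
                           cur[j - 1] + 1,               # insertion
                           prev[j - 1] + (cs != ct)))    # substitution
        prev = cur
    return prev[-1]

def text_similarity(s, t):
    """1 - normalized edit distance (assumed scoring convention)."""
    longest = max(len(s), len(t))
    return 1.0 if longest == 0 else 1.0 - levenshtein(s, t) / longest

def evaluate(extracted, truth):
    """extracted, truth: non-empty lists of (box, text) pairs."""
    iou = np.array([[box_iou(e[0], g[0]) for g in truth] for e in extracted])
    rows, cols = linear_sum_assignment(iou, maximize=True)  # best 1-to-1 match
    geo = float(sum(iou[r, c] for r, c in zip(rows, cols)))
    txt = float(sum(text_similarity(extracted[r][1], truth[c][1])
                    for r, c in zip(rows, cols) if iou[r, c] > 0))
    return {
        "geometric_precision": geo / len(extracted),
        "geometric_recall": geo / len(truth),
        "textual_precision": txt / len(extracted),
        "textual_recall": txt / len(truth),
    }
```

Dividing the same matched sums by the number of extracted labels or by the number of ground truth labels is what distinguishes precision from recall here, as in the description above.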

Results

| | Precision (Geometric) | Precision (Textual) | Recall (Geometric) | Recall (Textual) |
|---|---|---|---|---|
| Baseline | | | | |
| Pyramid + Processing | | | | |

| | Precision (Geometric) | Precision (Textual) | Recall (Geometric) | Recall (Textual) |
|---|---|---|---|---|
| Baseline | | | | |
| Pyramid + Processing | | | | |

| | Precision (Geometric) | Precision (Textual) | Recall (Geometric) | Recall (Textual) |
|---|---|---|---|---|
| Baseline | | | | |
| Pyramid + Processing | | | | |

Limitations and Future Work

One chief limitation of our project is the limited evidence pointing toward its generalizability. With only X ground truth labels developed from 3 maps – maps chosen in part for their relatively high resolution – and marked inter-map variation in accuracy boosts, it is not evident that our pipeline would assuredly yield significant benefits across other historical maps of Jerusalem, not to mention other maps in general. Furthermore, defining our baseline as the accuracy of mapKurator’s pre-trained extraction module on historical maps of Jerusalem is problematic given that it was developed using a different map archive. A more appropriate evaluation regime would involve applying our pipeline to the same set of maps and labels the mapKurator team used to create and evaluate their model, thus expanding the number and diversity of ground truth labels, decoupling the marginal effect of our pipeline from the marginal effect of substituting the mapKurator’s map archive with another, and (ideally) making a stronger case for our pipeline’s generalizability. Though this evaluation regime is outside the scope of this Jerusalem-based project, we consider it to be the most important step toward the further validation of our approach.

Certain stages of our pipeline could benefit from further tuning. Our spline-based approach toward single-line sequence recovery does not accommodate cases where the centroids of two consecutive words are vertically mismatched (such as when a small, short word follows a larger word and both are bottom-justified). One of our chosen maps uses a pervasive graphical element to represent trees that is consistently mistaken by the mapKurator spotter tool for a letter of text. Many such unresolved corner cases persist due to time limitations; addressing them would boost accuracy statistics. So too would developing further labels for the font discriminator tool, which still misclassifies approximately X% of label pairs despite some Y manually labelled comparisons, likely due to imbalance in the number of matching fonts in the label set. In fact, given the narrow map coverage and imbalanced label set used to train the discriminator, it may still take considerable signal from the map the text is pulled from rather than from the type of font alone. Identifying and tackling each processing stage’s distinct imperfections is a necessary if time-intensive path toward making our pipeline production ready.

Finally, we recognize that certain elements of the mapKurator pipeline which we disregard due to their inability to directly apply to our map archive would be beneficial if adapted for our project. We refer primarily to the PostOCR module, in which extracted text is mapped to verified toponyms from OpenStreetMap using a fuzzy query function (the issue with directly using this module is described in the Motivation section above). One can imagine emulating this process with a different set of “valid” toponyms: simply run the spotter module over a vast set of Jerusalem maps, apply our label processing pipeline, retain a set of the most prolific toponyms (or perhaps words) across all maps as the substitute for OpenStreetMap’s dictionary, and revisit all extracted toponyms to modify or at least annotate any which exhibit minor spelling changes from this novel dictionary. Such a process would invoke the same sampling justification behind the pyramid scanning regime to create a global, archive-wide dictionary in an unsupervised manner, create a lens through which to view potential spelling disagreements, and facilitate an environment in which our pipeline actually improves as more unlabeled data is processed.

We believe the pipeline we have produced to be useful and sound despite the limitations listed above, and are eager to see how resolving them might further improve the extraction of toponyms from historical maps.

Project Timeline

| Timeframe | Task | Completion |
|---|---|---|
| Week 4 | | ✓ |
| Week 5 | | ✓ |
| Week 6 | | ✓ |
| Week 7 | | ✓ |
| Week 8 | | ✓ |
| Week 9 | | ✓ |
| Week 10 | | ✓ |
| Week 11 | | ✓ |
| Week 12 | | ✓ |
| Week 13 | | ✓ |
| Week 14 | | ✓ |

GitHub Repository

Acknowledgements

We thank Professor Frédéric Kaplan, Sven Najem-Meyer, and Beatrice Vaienti of the DHLAB for their valuable guidance over the course of this project.

References

Webpages

Chief Literature

- Kim, Jina, et al. "The mapKurator System: A Complete Pipeline for Extracting and Linking Text from Historical Maps." arXiv preprint arXiv:2306.17059 (2023).

- Li, Zekun, et al. "An automatic approach for generating rich, linked geo-metadata from historical map images." Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 2020.

Footnotes

1. Liao, Minghui, et al. "Scene text recognition from two-dimensional perspective." Proceedings of the AAAI conference on artificial intelligence. Vol. 33. No. 01. 2019.

2. Smith, George Adam. Jerusalem: The Topography, Economics and History from the Earliest Times to AD 70. Vol. 2. Cambridge University Press, 2013.

3. Kim, Jina, et al. "The mapKurator System: A Complete Pipeline for Extracting and Linking Text from Historical Maps." arXiv preprint arXiv:2306.17059 (2023).