Opera Rolandi archive: Difference between revisions

| Line 77: | Line 77: | ||

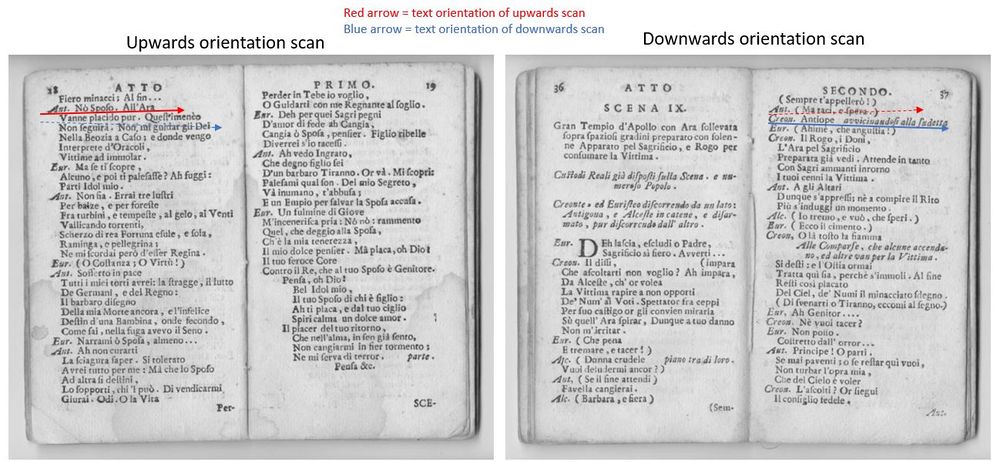

Indeed, as the digital images have different orientations due to the scanning, it was difficult to rearrange the words of our libretto into the correct sentences based uniquely on the x and y coordinates of the boxes surrounding our words. Different solutions have been proposed, like grouping the vertical coordinates into bins to reflect the sentences boxes, but this won't work as it still depends on the orientation of the document (one sentence can go upwards or downwards, depending on if the book is slightly turned upwards or downwards). | Indeed, as the digital images have different orientations due to the scanning, it was difficult to rearrange the words of our libretto into the correct sentences based uniquely on the x and y coordinates of the boxes surrounding our words. Different solutions have been proposed, like grouping the vertical coordinates into bins to reflect the sentences boxes, but this won't work as it still depends on the orientation of the document (one sentence can go upwards or downwards, depending on if the book is slightly turned upwards or downwards). | ||

[[File:OrientationScans.JPG|1000px|center|thumb|Orientation of texts in different scans]]


Revision as of 12:47, 12 December 2020

Abstract

The Fondazione Giorgio Cini has digitized 36000 pages from Ulderico Rolandi's opera libretti collection. This collection contains contemporary works of 17th- and 18th-century composers. These libretti are diverse in content, which opens up many possibilities for analysis.

This project concentrates on a way to illustrate, through network visualization, the characters’ interactions in Rolandi's libretti collection as well as the importance of each character in the acts they appear in. To achieve this, we retrieved the relevant information using the DHLAB deep learning model dhSegment-torch and Google Vision OCR. We started from one opera libretto of Rolandi’s collection, Antigona (RecordID MUS0030011), and generalized the approach to another libretto, Gli americani (RecordID MUS0010824).

Planning

| Week | To do |
|---|---|
| 12.11. (week 9) | Step 1: Segmentation model training, fine tuning & testing |
| 19.11. (week 10) | Step 2: Information extraction & cleaning |
| 26.11. (week 11) | Finishing Step 2 |
| 03.12. (week 12) | Step 3: Information storing & network visualization |
| 10.12. (week 13) | Finishing Step 3 and finalizing the report and wiki page (Step 4: Generalization) |
| 17.12. (week 14) | Final Presentation |

Step 1

- Train model on diverse random images of Rolandi’s libretti collection (better for generalization aspects)

- Test model on diverse random images of Rolandi’s libretti collection

- Test on a single chosen libretto:

  - If the results are bad, train the model on more images coming from this libretto

  - If the results are still bad (and the planning allows it), try to help the model by pre-processing the images beforehand, e.g. converting them to black and white (a filter that accentuates shades of black)

- Choose a well-formatted and not too damaged libretto (to be able to do step 2)

Step 2

- Extract essential information from the libretto with OCR:

  - names, scenes and descriptions

  - if the results are bad, apply pre-processing to make the text sharper for reading

- If OCR extracts variants of the same name:

  - perform clustering to give them a common name

  - apply distance measuring techniques

  - find the real name (not just the abbreviation) of the character using the introduction at the start of the scene

Step 3

For one libretto:

- Extract the information below and store it in a tree format (json file):

- Assessment of extraction and cleaning results

- Create the relationship network:

  - nodes = characters’ names

  - links = interactions

  - weight of the links = importance of a relationship

  - weight of the nodes = speech weight of a character + normalization so that all the scenes have the same weight/importance

Step 4: Optional

- See how our network algorithm generalizes (depending on the success of step 1) to five libretti of Rolandi’s collection.

- If it does not generalize well, strengthen our deep learning model with more images coming from these libretti.

Description of the Methods

Motivation

Our initial motivation was to use the whole Rolandi libretti collection to create a timeline visualization of opera's literary movements. As each movement has its own themes and characteristics, we wanted to extract the most common topics of each libretto, using OCR and NLP methods, and propose them as new metadata to the Fondazione Giorgio Cini. As NLP algorithms are most accurate on English texts, we wanted to first translate each libretto from Italian to English using Linguee's DeepL tool. At that stage we encountered a first problem, which prevented us from extracting the complete texts.

Indeed, as the digital images have different orientations due to the scanning, it was difficult to rearrange the words of a libretto into the correct sentences based solely on the x and y coordinates of the boxes surrounding the words. Different solutions were proposed, such as grouping the vertical coordinates into bins that reflect the lines of text, but this does not work because it still depends on the orientation of the document: a line can drift upwards or downwards depending on how the book is tilted in the scan.

The second problem that we encountered was the complexity of scraping the libretti. First, extracting each page scan of a single libretto takes a significant amount of time. Second, it was difficult to decide which pages to keep for the analysis (only the pages that contain the story, without the pages containing metadata).

Therefore we changed the goal of our project and settled on creating a visualization tool that illustrates the evolution of the characters’ interactions and importance within each libretto. The interest of this type of network analysis has already been shown in Literary Lab Pamphlet 2 by Franco Moretti, one of a series of studies from the Stanford Literary Lab on how to quantify novels and plays.

This new goal lets us set aside our first problem, the orientation of the scans, as the importance of each character is measured only through the occurrences of its name at the beginning of a line of dialogue. Regarding the second problem, we decided to focus on a small subset of the Rolandi libretti collection to ensure that the tool is accurate.

Dataset

- Choice of datasets (text format, legibility of the names, clean separation of scenes and names)

- Choice to build everything based on Antigona

Segmentation

- Choice to segment only the scenes and the abbreviations of the characters' names

- Choice not to focus on the dialogues (the orientation of the scan disturbs the extraction of the box coordinates and therefore the reordering of the boxes into a logical sequence)

- Examples of the segmentations performed (green background, red names, ...) + number of examples used for training and validation

- Using the dh_segment model, we return for each pixel of the testing images the probability of belonging to the green, red, yellow or blue class. This map of class probabilities has the same dimensions as the image from which the words are going to be extracted.

OCR

- Use the Google Vision API to extract all the words of our scans

- We store each word and its box coordinates in a csv file
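As an illustration of the storage step, here is a minimal sketch of writing words and their boxes to CSV and reading them back. The column names (`word`, `x_min`, `y_min`, `x_max`, `y_max`) and the sample values are assumptions made for this example; the actual layout of the project's csv file is not specified here, and the call to the Google Vision API is deliberately left out.

```python
import csv
import io

# Hypothetical OCR output: (word, x_min, y_min, x_max, y_max) in pixels.
words = [
    ("SCENA", 410, 120, 520, 150),
    ("Ant.", 95, 300, 150, 325),
]


def write_words_csv(rows, handle):
    """Write one CSV row per word with the corners of its bounding box."""
    writer = csv.writer(handle)
    writer.writerow(["word", "x_min", "y_min", "x_max", "y_max"])
    writer.writerows(rows)


def read_words_csv(handle):
    """Read the rows back, converting the coordinates to integers."""
    reader = csv.DictReader(handle)
    return [
        (r["word"], int(r["x_min"]), int(r["y_min"]), int(r["x_max"]), int(r["y_max"]))
        for r in reader
    ]


# An in-memory buffer stands in for the csv file on disk.
buffer = io.StringIO()
write_words_csv(words, buffer)
buffer.seek(0)
restored = read_words_csv(buffer)
```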

Combination of OCR and Segmentation to Find Words of Interest

Using the per-pixel class probabilities computed by dh_segment, we can now create a mask to extract the words belonging to each class. As the probability is distributed among all classes, one has to define an ocr_threshold, the probability above which a pixel is considered to belong to a class. The resulting values of 1 and 0 are stored as a mask for each attribute.

We define a range of the image from which to extract the words for each attribute (e.g. to extract the names, we look at the leftmost part of the left page and the leftmost part of the right page). We use this trick for two reasons: first, it helps us order our words, finding the ones from the first page and then the ones from the second page; second, it speeds up our algorithm, which no longer has to go through the whole image. We nevertheless choose a fairly large range to be sure that we do not miss any data. The picture below illustrates this. TODO insert schema.

Then we focus on each word and its box. We compute the mean of the class probability over the pixels of a smaller box, scaled down by a fixed ratio and centered on the true box. We don't read all the pixels of the box because the pixels further away from the center are less likely to belong to the class, and in the end only the central ones matter. This gives us more precise results. TODO create and insert schema.

We then compute the mean value of the corresponding pixels in the thresholded mask (recall that its values are either 0 or 1). We define a mean_threshold for each attribute, the mean value above which the word in the box is kept as being part of the class. We only keep these words for our analysis. TODO create and insert schema.

After that, we return for each extracted word of interest the x and y coordinates of the top left corner of its box, taken from the OCR results. We first order the words based on their x coordinates, to begin with the left page and then the right page. This is done using the ranges of the image defined above for each attribute. Then we order the words based on their y coordinates, from top to bottom.
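The thresholding step can be sketched as follows. This is a minimal pure-Python illustration, not the project's code: the function name `binarize`, the sample probability map and the threshold value 0.5 are assumptions chosen for the example.

```python
def binarize(prob_map, ocr_threshold):
    """Return a 0/1 mask: 1 where the class probability reaches the threshold."""
    return [[1 if p >= ocr_threshold else 0 for p in row] for row in prob_map]


# Hypothetical 3x4 probability map for one class (e.g. the names).
name_probs = [
    [0.10, 0.20, 0.85, 0.90],
    [0.05, 0.60, 0.95, 0.40],
    [0.00, 0.10, 0.30, 0.20],
]

# One such mask would be computed per attribute (name, scene, description).
name_mask = binarize(name_probs, ocr_threshold=0.5)
```

A higher ocr_threshold keeps fewer pixels in the mask, which trades recall for precision when the words are filtered afterwards.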
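The box filtering described above can be sketched as follows, assuming the mask is a list of rows of 0/1 values and a box is given as `(x_min, y_min, x_max, y_max)` in pixels. The helper names, the ratio of 0.5 and the threshold of 0.5 are illustrative assumptions, not the values used in the project.

```python
def shrink_box(x_min, y_min, x_max, y_max, ratio):
    """Return a box with the same centre whose sides are scaled by `ratio`."""
    cx, cy = (x_min + x_max) / 2, (y_min + y_max) / 2
    half_w = (x_max - x_min) * ratio / 2
    half_h = (y_max - y_min) * ratio / 2
    return (int(round(cx - half_w)), int(round(cy - half_h)),
            int(round(cx + half_w)), int(round(cy + half_h)))


def box_mean(mask, box):
    """Mean of the mask values inside the box (upper bounds are exclusive)."""
    x0, y0, x1, y1 = box
    values = [v for row in mask[y0:y1] for v in row[x0:x1]]
    return sum(values) / len(values) if values else 0.0


def keep_word(mask, box, ratio, mean_threshold):
    """Keep the word if the centre of its box is mostly inside the class mask."""
    return box_mean(mask, shrink_box(*box, ratio)) >= mean_threshold


# Hypothetical 4x8 mask; the word box covers the whole image.
mask = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0, 0, 0],
    [0, 0, 1, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]
word_box = (0, 0, 8, 4)
full_mean = box_mean(mask, word_box)
kept = keep_word(mask, word_box, ratio=0.5, mean_threshold=0.5)
```

In this example the mean over the full box stays below the threshold while the mean over the centered sub-box is above it, which is the situation the smaller box is meant to handle.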

In the end, we have for each page the extracted words and their respective attribute (name, scene, description) in the order of appearance.
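The ordering can be sketched as follows, assuming each word comes with the top left corner of its box and that each attribute has one horizontal search range per page. The function name, the tuple layout and the sample coordinates are hypothetical.

```python
def order_words(words, left_range, right_range):
    """Order words by page (left page first), then from top to bottom.

    words: list of (text, x, y) with the top-left corner of each box.
    left_range, right_range: (x_start, x_end) search ranges for the attribute.
    Words outside both ranges are dropped.
    """
    def page_index(x):
        if left_range[0] <= x < left_range[1]:
            return 0
        if right_range[0] <= x < right_range[1]:
            return 1
        return None

    kept = [(page_index(x), y, text) for text, x, y in words
            if page_index(x) is not None]
    return [text for _, _, text in sorted(kept, key=lambda item: (item[0], item[1]))]


# Hypothetical name abbreviations found on a double-page scan.
found = [("Cre.", 1250, 200), ("Ant.", 90, 400), ("Ism.", 110, 150), ("rumore", 600, 300)]
ordered = order_words(found, left_range=(50, 300), right_range=(1200, 1450))
```

Sorting on the page index rather than on the raw x coordinate avoids mixing the two pages when boxes on the same page have slightly different x values.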

Creating the Network of a Libretto

From the list of all the ordered attributes of the libretto, we want to create a network that will be used to visualize the relationships between the characters in time. There are many challenges here.

Let's start with the problem of retrieving the names. In the dialogues, only abbreviations indicate which character is talking, whereas we want the full names. Luckily, just below each scene heading, a short description gives the place and lists the characters who appear in the scene by their complete names. We use this to recover the full name from each abbreviation.

We proceed as follows: first, from each abbreviation we found, we create a pattern that we use to match the full name. For example, from Ant we create the pattern An.*t.*, and from Aut we get Au.*t.*. The pattern means that we look for a word that begins with An, followed by zero or more characters, followed by t, followed by zero or more characters. We try to match these patterns against the words that appear in the descriptions. Once we have found the full name for every abbreviation, a problem still remains: we might get several variants of the same name, for example Antigona and Autigona!

Because the OCR might have detected variants of the same name, we want to clean this up. One could first think of clustering, for example with K-means, but this doesn't work because we don't know a priori the number of clusters, which corresponds to the number of characters in the libretto. Techniques such as K-medoids could be a possible solution, but we would have to think about how to implement them for strings. Because of time constraints, we chose another approach: similarity distances, and in our case the Levenshtein edit distance. This distance counts the number of edits (deletion, insertion, replacement) needed to turn one string into the other; if it is less than or equal to two, we decide that both strings refer to the same name. For example, Antigona and Autigona are at a distance of one and are thus grouped together. We then need to decide which name is the true one. We select the one that appears most often, assuming that the OCR is right most of the time.

For the scenes, we face another problem: we need a way to count and order them correctly. In each act, the scenes run from SCENA PRIMA to the last one of the act, and the very last scene of the libretto is SCENA ULTIMA. Moreover, the scenes are numbered with Roman numerals. Simply extracting the number is complicated for several reasons. First, we would need to convert the Roman numeral into an index, which could probably be done with a dictionary that maps the values. Second, because the typography of the scene headings is widely spaced (see figure TODO add figure), the OCR sometimes considers each letter of the numeral to be a different word, and we are back to one of our first problems: which word appears first in the real libretto. So we need another algorithm. We observe that the OCR detects the word SCENA quite well. We therefore assume that every time we find a word SCENA tagged as a scene, we increment the scene counter and add a new scene at this place. To find where a new act begins, and thus where to restart the counter, we simply look for a word PRIMA tagged as a scene. This defines where an act starts.
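The pattern construction can be sketched with Python's `re` module. The rule used here, keeping the first two letters together and allowing any characters after each remaining letter, is an assumption that reproduces the An.*t.* example; the helper names and the sample description words are hypothetical.

```python
import re


def abbreviation_pattern(abbreviation, prefix_len=2):
    """Build a regex such as An.*t.* from an abbreviation such as 'Ant.'."""
    letters = abbreviation.rstrip(".")
    head, tail = letters[:prefix_len], letters[prefix_len:]
    body = re.escape(head) + ".*" + "".join(re.escape(c) + ".*" for c in tail)
    return re.compile(body, re.IGNORECASE)


def find_full_names(abbreviation, description_words):
    """Return the description words that match the abbreviation pattern entirely."""
    pattern = abbreviation_pattern(abbreviation)
    return [w for w in description_words if pattern.fullmatch(w)]


# Hypothetical words taken from the scene descriptions, including an OCR variant.
description_words = ["Antigona", "Creonte", "Ismene", "Autigona"]
matches_ant = find_full_names("Ant.", description_words)
matches_aut = find_full_names("Aut", description_words)
matches_cre = find_full_names("Cre.", description_words)
```

Because OCR variants of the abbreviation lead to OCR variants of the full name, the output of this step still has to be cleaned.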
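The grouping by edit distance can be sketched as follows. The greedy strategy (visit the names from most to least frequent and attach each one to the first representative within the distance limit) is one possible reading of the description above, not necessarily the project's implementation; the function names and the sample counts are assumptions.

```python
from collections import Counter


def levenshtein(a, b):
    """Number of single-character insertions, deletions and replacements."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # deletion
                current[j - 1] + 1,            # insertion
                previous[j - 1] + (ca != cb),  # replacement (free if equal)
            ))
        previous = current
    return previous[-1]


def canonical_names(names, max_distance=2):
    """Map every variant to the most frequent name within max_distance edits."""
    mapping, representatives = {}, []
    for name, _ in Counter(names).most_common():
        match = next((r for r in representatives
                      if levenshtein(name, r) <= max_distance), None)
        if match is None:
            representatives.append(name)
            match = name
        mapping[name] = match
    return mapping


# Hypothetical full names recovered from the descriptions, with OCR variants.
names = ["Antigona"] * 3 + ["Autigona"] + ["Creonte"] * 2 + ["Creoute"]
mapping = canonical_names(names)
```

Visiting the names in order of frequency means the most common spelling of each group becomes its representative, in line with the assumption that the OCR is right most of the time.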

Finally, with all this, we get a tree where we have the acts, scenes, names of characters appearing in the scenes and their number of occurrences in the scene. TODO add figure of tree
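The scene counting and the resulting tree can be sketched as follows. The sketch assumes that the input is the ordered list of (attribute, word) pairs, that SCENA is read before PRIMA within a heading, and that the tree is a list of acts, each act a list of scenes, each scene a dictionary of name counts. All of these representation choices are assumptions made for the example.

```python
def build_tree(tokens):
    """Build acts -> scenes -> {name: occurrences} from ordered tagged words.

    tokens: ordered (attribute, text) pairs, attribute in {"scene", "name"}.
    """
    acts = []
    for attribute, text in tokens:
        if attribute == "scene" and text == "SCENA":
            # Every SCENA opens a new scene in the current act.
            if not acts:
                acts.append([])
            acts[-1].append({})
        elif attribute == "scene" and text == "PRIMA":
            # The scene just opened is the first one of a new act,
            # unless it already is the first scene of the current act.
            if acts and len(acts[-1]) > 1:
                first_scene = acts[-1].pop()
                acts.append([first_scene])
        elif attribute == "name" and acts and acts[-1]:
            scene = acts[-1][-1]
            scene[text] = scene.get(text, 0) + 1
        # Other scene words (e.g. split Roman numerals) are ignored.
    return acts


# Hypothetical ordered output of the extraction step.
tokens = [
    ("scene", "SCENA"), ("scene", "PRIMA"),
    ("name", "Antigona"), ("name", "Creonte"), ("name", "Antigona"),
    ("scene", "SCENA"), ("scene", "II"),
    ("name", "Ismene"),
    ("scene", "SCENA"), ("scene", "PRIMA"),
    ("name", "Creonte"),
]
tree = build_tree(tokens)
```

The scene number is given by the position of the scene in its act, so the Roman numerals never need to be parsed.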

Graph Representation of the Network

Now that we have the tree of the libretto, we can build our network visualization.

We create an interactive graph using D3.js. (TODO add link). Most of the code comes from here.

We need to slightly reshape our previous tree into the following format:

- A key "nodes" which contains, for each character:

  - key id: the name of the character

  - key act_N: the weight of the node, being the number of times the character appears in act N. There is one act_N key per act of the libretto.

- A key "links" which contains, for each pair of characters:

  - key source: a character name

  - key target: another character name

  - key act_N: the weight of the link, being the number of scenes of act N in which the source and target characters appear together. There is one act_N key per act of the libretto.
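The conversion from the tree to this format can be sketched as follows, assuming the tree is a list of acts, each act a list of scenes, and each scene a dictionary mapping a character name to its number of occurrences. This tree representation, the function name and the sample data are assumptions; only the key names `nodes`, `links`, `id`, `source`, `target` and `act_N` come from the description above.

```python
import json
from itertools import combinations


def tree_to_graph(acts):
    """Convert acts -> scenes -> {name: count} into the nodes/links format."""
    nodes, links = {}, {}
    act_keys = [f"act_{n}" for n in range(1, len(acts) + 1)]
    for key, scenes in zip(act_keys, acts):
        for scene in scenes:
            # Node weight: occurrences of the character in the act.
            for name, count in scene.items():
                node = nodes.setdefault(name, {"id": name})
                node[key] = node.get(key, 0) + count
            # Link weight: number of scenes shared by the two characters.
            for source, target in combinations(sorted(scene), 2):
                link = links.setdefault(
                    (source, target), {"source": source, "target": target})
                link[key] = link.get(key, 0) + 1
    # Give every node and link a weight for every act, 0 when absent.
    for item in list(nodes.values()) + list(links.values()):
        for key in act_keys:
            item.setdefault(key, 0)
    return {"nodes": list(nodes.values()), "links": list(links.values())}


# Hypothetical tree with two acts.
tree = [
    [{"Antigona": 2, "Creonte": 1}, {"Antigona": 1, "Ismene": 1}],
    [{"Creonte": 1, "Antigona": 3}],
]
graph = tree_to_graph(tree)
graph_json = json.dumps(graph, indent=2)
```

Sorting the names before pairing them gives each pair a single orientation, so a link is never stored twice with source and target swapped.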

That way, we can visualize the importance of each character and of each link between characters by looking at the size of the node or link. One can also choose which libretto and which acts to visualize. Here is the example of the network of Antigona:

TODO insert picture of Antigone network

We see that over the whole libretto, all the characters interact with one another and that TODO

Generalization

- Tried implementing all of the above for a new libretto, Gli

- Problems encountered:

  - The printing format is not the same (e.g. the text is concentrated in 2/5 of the page)

  - The lines of text are very close to one another

  - Many unnecessary words are extracted by the OCR, so the threshold hyperparameters are too low.

  - We try to extract too many character names. The top_N hyperparameter, the number of most common extracted abbreviations to keep, is too high.

Quality Assessment

- Accuracy of the segmentation on the test set?

- zss, which measures an edit distance between tree structures:

  - We computed the total edit distance between the tree structure extracted from the libretto and a ground truth tree built manually.

  - We computed the edit distance per act between the extracted tree and the ground truth one. We expect a high edit distance whenever our model misses a scene or adds a wrong one, as this shifts the names (numbers) of the following scene nodes by 1 and thus attributes the children of the tree (the characters) to the wrong scenes. This plot therefore points at the added or removed scene.

What Still Needs To Be Done

TODO Elisa