Europeana: A New Spatiotemporal Search Engine

Xinyi.ding


Revision as of 13:09, 21 December 2022

Introduction and Motivation

Europeana, as a container of Europe’s digital cultural heritage, covers themes such as art, photography, and newspapers. Because its collections are so diverse, it is difficult to present every kind of material in a way that suits its content. Searching for a specific topic can require several steps, and the results may still not match what the user intended. After studying the structure of Europeana in depth, we decided to create a new search engine that presents resources according to their content. Given our time frame and the size of our group, we chose the Newspapers theme as the content for our engine, and to narrow the task further, we selected the newspaper La clef du cabinet des princes de l'Europe as our target.

La clef du cabinet des princes de l'Europe was the first magazine in Luxembourg. It appeared monthly from July 1704 to July 1794. Europeana holds 1,317 issues of La clef du cabinet des princes de l'Europe, each with 75 to 85 pages. To reduce the data to a scale our laptops could handle, we randomly selected 8,000 pages from the whole time span of the magazine.

To present this magazine better in our engine, we mainly implemented OCR, text analysis, database design, and webpage design.

OCR (optical character recognition) is the electronic or mechanical conversion of images of typed, handwritten, or printed text into machine-encoded text. Converting physical material into digital form in this way not only helps preserve historical and cultural heritage but also makes computer-based analysis of it possible. In our work, we used OCR to convert the magazine's page images into text and stored the text in a database, which makes the material much easier to process.

For the text analysis part of our work, we applied three methods to the extracted text: named entity recognition, LDA (latent Dirichlet allocation) topic modeling, and n-grams.

To present the magazine, we developed a webpage that provides the search and analysis functions. The webpage aims to be interactive and to give users an efficient way to reach the content they want.

Deliverables

- The 8,000 page images of La clef du cabinet des princes de l'Europe (July 1704 to July 1794), downloaded from Europeana's website.

- The OCR results for the 8,000 pages, in text format.

- The dataset of the text and the text-analysis results based on LDA, named entities, and n-grams.

- The webpage presenting the contents and analysis results for La clef du cabinet des princes de l'Europe.

- The GitHub repository containing all the code for the project.

Methodologies

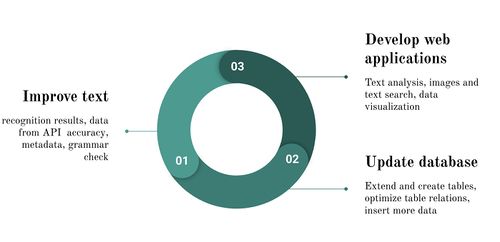

This project consists of three main parts: text processing, database development, and web applications, which were developed and improved in parallel. The toolkit includes Python for text processing and web applications, MySQL for the database, and Flask as the webpage framework. The final dataset covers 100 newspaper issues comprising 7,950 pages, in four versions: the page images, the text provided by Europeana, the text after OCR, and the text after OCR and grammar checking.
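The four versions of each page map naturally onto one table. The sketch below is a hypothetical schema, not the project's actual one: the table name, column names, and the `search` helper are assumptions, and it uses Python's built-in `sqlite3` as a stand-in for MySQL so that it runs without a database server.

```python
import sqlite3

# Hypothetical schema: one row per page, one column per version of the page.
SCHEMA = """
CREATE TABLE IF NOT EXISTS pages (
    id             INTEGER PRIMARY KEY,
    issue_id       TEXT    NOT NULL,  -- Europeana identifier of the issue
    pub_date       TEXT    NOT NULL,  -- ISO date, so string order = time order
    page_number    INTEGER NOT NULL,
    image_url      TEXT,              -- version 1: page image
    europeana_text TEXT,              -- version 2: text supplied by Europeana
    ocr_text       TEXT,              -- version 3: text after our OCR
    corrected_text TEXT,              -- version 4: OCR text after grammar checking
    UNIQUE (issue_id, page_number)
)
"""


def search(conn: sqlite3.Connection, keyword: str,
           start_date: str, end_date: str) -> list[tuple[str, str, int]]:
    """Return (issue_id, pub_date, page_number) for pages whose corrected
    text contains `keyword` and whose date lies in [start_date, end_date]."""
    cur = conn.execute(
        "SELECT issue_id, pub_date, page_number FROM pages "
        "WHERE corrected_text LIKE ? AND pub_date BETWEEN ? AND ? "
        "ORDER BY pub_date, page_number",
        (f"%{keyword}%", start_date, end_date),  # bound parameters, no SQL injection
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO pages (issue_id, pub_date, page_number, corrected_text) "
        "VALUES (?, ?, ?, ?)",
        [("issue-a", "1704-07-01", 1, "Nouvelles de Hollande"),
         ("issue-b", "1750-03-01", 5, "Nouvelles de Pologne")],
    )
    print(search(conn, "Pologne", "1740-01-01", "1760-12-31"))
    # prints [('issue-b', '1750-03-01', 5)]
```

Keeping all four versions in one row makes it easy to compare Europeana's text, the raw OCR, and the corrected OCR for the same page. When porting to MySQL, a `DATE` column would replace the ISO string, and drivers such as mysql-connector-python use `%s` placeholders instead of `?`.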

1. Text processing

1.1 Data acquisition

Using the API provided by Europeana's staff, we acquired the relevant data with a web crawler. We first obtain the unique identifier of each issue, then use it to retrieve the image URL and the OCR text provided by Europeana. We also collect the publication date and page number of every image, which lets us locate and retrieve each page later. The crawling results are shown below.
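The crawling step can be sketched as a loop that turns each issue identifier into one record per page. The API given by Europeana's staff is not documented here, so `fetch_json` and the JSON keys it returns (`date`, `pages`, `image`, `text`) are hypothetical placeholders; only the record fields (identifier, date, page number, image URL, Europeana text) come from the description above.

```python
from dataclasses import dataclass
from typing import Callable, Iterator


@dataclass
class PageRecord:
    issue_id: str        # unique identifier of the issue
    pub_date: str        # publication date of the issue
    page_number: int     # 1-based position of the page within the issue
    image_url: str       # URL of the page image, used later for OCR
    europeana_text: str  # OCR text supplied by Europeana ("" if missing)


def crawl_issue(issue_id: str,
                fetch_json: Callable[[str], dict]) -> Iterator[PageRecord]:
    """Yield one PageRecord per page of an issue.

    `fetch_json` is a hypothetical callable that requests the metadata of
    one issue and returns the decoded JSON; the keys read below are
    assumptions, not Europeana's documented response format.
    """
    issue = fetch_json(issue_id)
    for number, page in enumerate(issue["pages"], start=1):
        yield PageRecord(
            issue_id=issue_id,
            pub_date=issue["date"],
            page_number=number,
            image_url=page["image"],
            europeana_text=page.get("text", ""),
        )
```

Passing the fetcher in as a function keeps the parsing logic testable offline: a dictionary lookup such as `fake.__getitem__` can stand in for the network call, while the real crawler would wrap an HTTP request with the API key.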

1.2 Optical character recognition (OCR)

1.2.1 Ground truth and model

Corpus building:

Transcription rules:

Model:

Results:

1.2.2 OCR Procedure

The images used for OCR were crawled from the Europeana API. They have a resolution of 72 dpi and are grayscale on a white background. Such a low resolution noticeably distorts the shapes of letters. However, only a few lines in the images are blurry or affected by glare, so noise is not a major problem for OCR. In addition, because the newspaper's layout is simple and clear, the segmentation results are good.

Based on the above, the first step is binarization, which converts the grayscale images into black-and-white (BW) images. Comparing the BW images with the originals shows that characters in the binarized images are fainter and less distinct. For this reason, the original images are used for segmentation.
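The binarization method used in the project is not named. As an illustration only, the sketch below applies Otsu's method, a common global-threshold technique, to a grayscale page stored as rows of integers from 0 to 255 (a stand-in for a real image library). A single global threshold can turn faint strokes white, which is one possible reason for the fainter characters observed above.

```python
def otsu_threshold(pixels: list[list[int]]) -> int:
    """Return the gray level t that maximises the between-class variance
    when pixels <= t form one class and pixels > t form the other."""
    hist = [0] * 256
    for row in pixels:
        for value in row:
            hist[value] += 1
    total = sum(hist)
    grand_sum = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight_low = 0  # number of pixels with value <= t
    sum_low = 0     # sum of their gray levels
    for t in range(256):
        weight_low += hist[t]
        sum_low += t * hist[t]
        weight_high = total - weight_low
        if weight_low == 0:
            continue
        if weight_high == 0:
            break
        mean_low = sum_low / weight_low
        mean_high = (grand_sum - sum_low) / weight_high
        # Between-class variance, up to the constant factor 1 / total**2.
        var = weight_low * weight_high * (mean_low - mean_high) ** 2
        if var > best_var:
            best_t, best_var = t, var
    return best_t


def binarize(pixels: list[list[int]]) -> list[list[int]]:
    """Map each pixel to black (0) or white (255) using Otsu's threshold."""
    t = otsu_threshold(pixels)
    return [[255 if value > t else 0 for value in row] for row in pixels]
```

In practice an image library would load the page and apply an equivalent built-in threshold; the pure-Python version only makes the computation explicit.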

Figure 3: Original Image; Figure 4: Binarized Image

The next step is to segment pages into lines and regions. At last, images
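The segmentation tool is not named either. One minimal way to split a page into text lines is a horizontal projection profile: measure the "ink" (darkness) in each pixel row and treat consecutive inked rows as one line. The sketch works directly on grayscale values, consistent with the decision to segment the original images; the `min_ink` threshold is an assumed tuning parameter, and this is a swapped-in illustration rather than the project's method.

```python
def segment_lines(pixels: list[list[int]],
                  min_ink: int = 1) -> list[tuple[int, int]]:
    """Return (top, bottom) row ranges of text lines, bottom exclusive.

    Each row's ink is the sum of (255 - value) over its pixels, so pure
    white rows score 0 and dark rows score high. Rows with ink >= min_ink
    are treated as part of a text line.
    """
    profile = [sum(255 - value for value in row) for row in pixels]
    lines: list[tuple[int, int]] = []
    top = None
    for y, ink in enumerate(profile):
        if ink >= min_ink and top is None:
            top = y                      # a new line starts on this row
        elif ink < min_ink and top is not None:
            lines.append((top, y))       # the current line ended on row y - 1
            top = None
    if top is not None:                  # a line touches the bottom edge
        lines.append((top, len(profile)))
    return lines
```

Raising `min_ink` makes light specks or faint glare rows count as background. Regions within a line band could be found the same way with a vertical profile over its columns.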

1.3 Grammar checker

To improve the OCR results, this project used the grammar checker API to refine the text. For each request, the grammar checker server returns a JSON file containing all suggested modifications for the text. Using the offset and length fields in the JSON file, we locate the word to be modified in the original text. For every modification, we replace the original word with the first suggested value.
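The correction step can be sketched as follows. The grammar checker is not named above; the response shape assumed here (a top-level `matches` list whose entries carry `offset`, `length`, and a `replacements` list of `{"value": ...}` objects) follows LanguageTool's JSON output and is an assumption, as is the helper name `apply_first_suggestions`. Edits are applied from the end of the text backwards, so a replacement that changes the text length never shifts the offsets of matches still to be processed.

```python
import json


def apply_first_suggestions(text: str, response_json: str) -> str:
    """Replace each flagged span with the checker's first suggestion.

    Assumes a LanguageTool-style response:
    {"matches": [{"offset": int, "length": int,
                  "replacements": [{"value": str}, ...]}, ...]}
    and that matches do not overlap.
    """
    matches = json.loads(response_json).get("matches", [])
    # Work right to left: replacing a later span never shifts earlier offsets.
    for match in sorted(matches, key=lambda m: m["offset"], reverse=True):
        suggestions = match.get("replacements") or []
        if not suggestions:
            continue  # flagged, but no replacement offered: keep the original
        start = match["offset"]
        end = start + match["length"]
        text = text[:start] + suggestions[0]["value"] + text[end:]
    return text
```

Always taking the first suggestion is simple but can introduce errors when the checker's top suggestion is wrong for 18th-century French spelling, so the corrected text is stored alongside the raw OCR rather than replacing it.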

1.4 Text Analysis

- Named entity

- N-gram
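For the n-gram part, a minimal frequency count over the corrected text could look like the sketch below. The tokenization (lower-cased runs of word characters, so "l'Europe" becomes `l` and `europe`) and the function names are assumptions; a real pipeline would likely also remove French stop words before counting.

```python
import re
from collections import Counter


def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    """All contiguous n-token windows, in order."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def top_ngrams(text: str, n: int = 2,
               k: int = 10) -> list[tuple[tuple[str, ...], int]]:
    """The k most frequent n-grams of `text`, with their counts."""
    # \w matches Unicode letters, so accented French words stay whole.
    tokens = re.findall(r"\w+", text.lower())
    return Counter(ngrams(tokens, n)).most_common(k)
```

For example, in "Le roi de France et le roi de Prusse" the bigrams ("le", "roi") and ("roi", "de") each occur twice, so they head the list.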

2. Database development

2.1 Local MySQL database setup

2.2 MySQL database design

2.3 MySQL interaction

3. Webpage applications

Quality Assessment

Limitations

Project Plan and Milestones

| Date | Task | Completion |
|---|---|---|
| By Week 3 | | ✓ |
| By Week 5 | | ✓ |
| By Week 6 | | ✓ |
| By Week 7 | | ✓ |
| By Week 8 | | ✓ |
| By Week 9 | | ✓ |
| By Week 10 | | ✓ |
| By Week 11 | | ✓ |
| By Week 12 | | ✓ |
| By Week 13 | | ✓ |
| By Week 14 | | ✓ |

GitHub Repository

https://github.com/XinyiDyee/Europeana-Search-Engine