Marino Sanudo's Diary


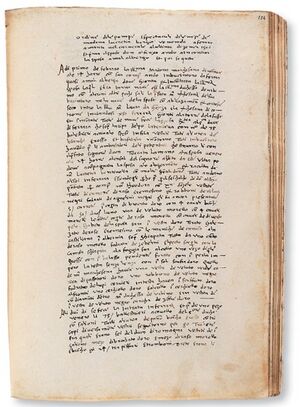

[[File:Libretto.jpg|300px|thumb|left|A page of the diary]]


Revision as of 14:13, 15 December 2024

Introduction

The project focused on analyzing the diaries of Marino Sanudo, a key historical source for understanding the Renaissance period. The primary goal was to create an index of people and places mentioned in the diaries, pair these entities, and analyze the potential relationships between them.

Historical Context

Who Was Marino Sanudo?

Marino Sanudo (1466–1536) was a Venetian historian, diarist, and politician whose extensive diaries, the Diarii, provide a meticulous chronicle of daily life, politics, and events in Renaissance Venice. Sanudo devoted much of his life to recording the intricacies of Venetian society, governance, and international relations, making him one of the most significant chroniclers of his era.

The Importance of His Diaries

Sanudo’s Diarii span nearly four decades, comprising 58 volumes of detailed observations. These writings offer invaluable insights into the political maneuvers of the Venetian Republic, social customs, and the geographical scope of Renaissance trade and diplomacy. His work captures not only significant historical events but also the daily rhythms of Venetian life, painting a vivid picture of one of the most influential states of the time.

Relevance Today

Studying Marino Sanudo’s diaries remains highly relevant for modern historians, linguists, and data analysts. They provide a primary source for understanding Renaissance politics, diplomacy, and social hierarchies. Furthermore, the diaries’ exhaustive detail lends itself to contemporary methods of analysis, such as network mapping and data visualization, enabling new interpretations and uncovering hidden patterns in historical relationships. By examining the interconnectedness of individuals and places, Sanudo’s work sheds light on the broader dynamics of Renaissance Europe, offering lessons that resonate even in today’s globalized world.

Project Plan and Milestones

The project was organized on a weekly basis to ensure steady progress and a balanced workload. Each phase was carefully planned with clearly defined objectives and milestones, promoting effective collaboration and equitable division of tasks among team members.

The first milestone (07.10 - 13.10) involved deciding on the project's focus. After thorough discussions, we collectively chose to analyze Marino Sanudo’s diaries, given their historical significance and potential for data-driven exploration. This phase established a shared understanding of the project and laid the foundation for subsequent work.

The second milestone (14.10) focused on optimizing the extraction of indexes for names and places from the diaries. This required refining our methods for data extraction and ensuring accuracy in capturing and categorizing entities. Alongside this, we worked on identifying the geolocations of the places mentioned in the index, using historical and modern mapping tools to ensure precise identification.

The final stages of the project (19.12) marked the transition to analyzing relationships between the extracted names and places. Using the indexed data, we explored potential connections, identifying patterns and trends that revealed insights into Renaissance Venice's social, political, and geographic networks. We collaboratively built a wiki page to document our research and created a dedicated website to present our results in an accessible and visually engaging manner. This phase also included preparing the final presentation, with every team member contributing to summarizing and showcasing the work.

By adhering to this structured approach and dividing tasks equitably, we achieved a comprehensive analysis of Sanudo’s diaries, combining historical research with modern digital tools to uncover new insights into his world.

| Week | Task |
|---|---|
| 07.10 - 13.10 | Define project and structure work |
| 14.10 - 20.10 | Manually write a place index |
| 21.10 - 27.10 | Autumn vacation |
| 28.10 - 03.11 | Work on the name dataset |
| 04.11 - 10.11 | Finish the geolocation |
| 11.11 - 17.11 | Midterm presentation on 14.11 |
| 18.11 - 24.11 | Find naive relationship |
| 25.11 - 01.12 | Standardization of the text |
| 02.12 - 08.12 | Find relationship based on the distance |
| 09.12 - 15.12 | Finish writing the wiki |
| 16.12 - 22.12 | Deliver GitHub + wiki on 18.12 |

Methodology

Data preparation

Because our project analyzed a printed book, the first step was to obtain a text version of it. After exploring several sources, including [1], we identified three websites from which the text could be downloaded. We selected the version available on [2] because it offered a more comprehensive set of tools for our analysis. After downloading it, we compared it with the versions from Google Books and HathiTrust and confirmed that it best suited our needs.

We then decided to focus our analysis on the indices included in each volume, which listed the names of people and places alongside the corresponding column numbers.

Indices of places

Our primary focus was on places in Venice. The index of places was significantly shorter than the index of names, which allowed us to analyze it manually. Its entries were arranged under headings whose indentation often indicated a hierarchy: an indented entry belongs to the broader area listed above it at the preceding indentation level.

Dataset structure

The generated dataset consists of the following features:

id: A unique identifier assigned to each entry.

place: The primary name of the location mentioned in the index.

alias: Any alternative names or variations associated with the place.

volume: The specific volume of the book in which the place is mentioned.

column: The column of the volume in which the place is mentioned, as given in the index.

parents: The broader location or hierarchical category to which the place belongs, derived from the indentation structure in the index.

This structured dataset captures both the explicit details from the index and the inferred hierarchical relationships, making it suitable for further analysis and exploration.
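The parent hierarchy can be derived mechanically once each index line is stored together with its indentation level. The following is a minimal sketch, not the project's code: it assumes the place index was transcribed as hypothetical `(indent, place)` pairs in index order, and it stores the full chain of broader locations in `parents` (the last element is the immediate parent).

```python
from dataclasses import dataclass, field


@dataclass
class PlaceEntry:
    id: int
    place: str
    parents: list[str] = field(default_factory=list)


def assign_parents(lines: list[tuple[int, str]]) -> list[PlaceEntry]:
    """Derive 'parents' from indentation; lines are (indent_level, place) pairs."""
    stack: list[tuple[int, str]] = []  # ancestors of the current line
    entries = []
    for i, (indent, place) in enumerate(lines):
        # Leave any branch that sits at the same or a deeper indentation level.
        while stack and stack[-1][0] >= indent:
            stack.pop()
        entries.append(PlaceEntry(id=i, place=place, parents=[p for _, p in stack]))
        stack.append((indent, place))
    return entries


index = [(0, "Venezia"), (1, "San Marco"), (2, "Procuratie"), (1, "Rialto"), (0, "Padova")]
for entry in assign_parents(index):
    print(entry.id, entry.place, entry.parents)
```

Keeping the whole chain makes it easy to query, for instance, every place listed under Venice; storing only the immediate parent would save space but require walking the hierarchy at query time.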

Indices of names

The index of names proved significantly more challenging to analyze than the index of places, as it spanned approximately 80 pages. To tackle this, we first provided examples of the desired output and then used an Italian-trained OCR model to process the text and generate a preliminary table of names. Unlike traditional OCR, which only returns plain text, this example-guided approach produced output already structured for our project and allowed a more accurate extraction.

Dataset Structure

The dataset generated for people consists of the following features:

id: A unique identifier assigned to each entry.

name: The primary name of the person.

alias: Any alternative names or variations associated with the person.

volume: The specific volume of the book in which the person is mentioned.

column: The column of the volume in which the person is mentioned, as given in the index.

parents: The broader family or hierarchical category to which the person belongs.

description: Additional details or notes about the person.

This structured dataset preserves both explicit information and contextual relationships, enabling in-depth analysis and exploration.

Cleaning and Error Correction

After the automated extraction, the output was manually reviewed and corrected. Given that OCR often makes mistakes when generating text from images, our goal was to improve the dataset's quality without conducting a complete manual overhaul. Instead, we focused on correcting the most frequent and disruptive errors. A common issue encountered was the misinterpretation of page numbers as names, which led to cascading errors that required cleaning. Another frequent error involved the inclusion of dashes ("-") generated by the OCR, which needed removal.
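The two most frequent fixes described above can be automated before the manual pass. This is a hypothetical sketch assuming rows are dictionaries with a `name` field: it drops rows whose name consists only of digits (a misread page number) and strips OCR dashes. It does not repair the cascading errors such rows caused, which still required manual review, and it would also remove legitimate hyphens in compound names.

```python
import re

# Runs of hyphens or en/em dashes (with surrounding spaces) inserted by the OCR.
DASH_RE = re.compile(r"\s*[-\u2013\u2014]+\s*")
# A "name" made only of digits is most likely a page number read as text.
PAGE_NUMBER_RE = re.compile(r"^\s*\d+\s*$")


def clean_rows(rows: list[dict]) -> list[dict]:
    cleaned = []
    for row in rows:
        name = row.get("name") or ""
        if PAGE_NUMBER_RE.match(name):
            continue  # drop rows whose name is a misread page number
        cleaned.append({**row, "name": DASH_RE.sub(" ", name).strip()})
    return cleaned


rows = [{"name": "123"}, {"name": "Contarini - Zorzi"}, {"name": "- Loredan"}]
print(clean_rows(rows))  # [{'name': 'Contarini Zorzi'}, {'name': 'Loredan'}]
```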

Observations

Several observations emerged during the analysis. The first surname listed under a heading often applied to subsequent names following the same indentation, providing insight into familial groupings. In some cases, ellipses (...) were used in place of names. This practice was historically employed for names that were unknown at the time of writing, with the intention that they could be added later if discovered, or to deliberately anonymize individuals. These findings enriched our understanding of the index, offering valuable context for both its historical and structural significance.

Handling Numeric Characters

During the analysis of the "description" column, numeric characters were frequently observed as a result of incorrect OCR processing. Many of these numbers corresponded to page references and needed to be transferred to the "pages" column. However, the column also contained numbers unrelated to pagination, such as quantities (e.g., "he had 3 children") or historical years. Historical years were often recognizable by their formatting: they were typically enclosed in round brackets or preceded by Italian prepositions commonly used for time references, such as "nel," "da," or "di." Additionally, volume 5 contains at most 1074 columns, so any larger number could not be a column reference and was treated as a historical year.

Extraction Methodology

To address these inconsistencies, a systematic extraction process was designed. All numbers with three or four digits were identified within the "description" column. Numbers exceeding the maximum column count of 1074 were flagged as historical years, as were those preceded by prepositions indicating temporal references. Valid page numbers were transferred to the "pages" column, and once extracted, these numbers were removed from the "description" column to eliminate redundancy. This process relied on a combination of regular expressions and filtering techniques to ensure precision.
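The extraction rules above translate directly into a regular-expression pass. A minimal sketch, assuming each description is a plain string: `MAX_COLUMN` and the prepositions come from the text, while the bracket check and the whitespace clean-up are our own choices. Two-digit numbers are never matched, in line with the decision to keep them out of the automated process.

```python
import re

MAX_COLUMN = 1074  # highest column number in volume 5, as stated above
TEMPORAL_PREPOSITIONS = {"nel", "da", "di"}
# Three- or four-digit numbers that are not part of a longer digit run;
# two-digit numbers are deliberately left alone.
NUMBER_RE = re.compile(r"(?<!\d)(\d{3,4})(?!\d)")


def is_year(description: str, match: re.Match) -> bool:
    """Year rules: larger than MAX_COLUMN, in round brackets, or after a preposition."""
    if int(match.group(1)) > MAX_COLUMN:
        return True
    before = description[: match.start()]
    after = description[match.end():]
    if before.rstrip().endswith("(") and after.lstrip().startswith(")"):
        return True
    words = re.findall(r"\w+", before.lower())
    return bool(words) and words[-1] in TEMPORAL_PREPOSITIONS


def extract_pages(description: str) -> tuple[list[int], str]:
    """Move page references out of a description; keep years in place."""
    pages: list[int] = []

    def replace(match: re.Match) -> str:
        if is_year(description, match):
            return match.group(0)  # years stay in the description
        pages.append(int(match.group(1)))
        return ""  # page references are removed to avoid redundancy

    cleaned = NUMBER_RE.sub(replace, description)
    cleaned = re.sub(r"\s{2,}", " ", cleaned).strip(" ,;")
    return pages, cleaned


print(extract_pages("eletto (1050) e nel 900, 452"))  # ([452], 'eletto (1050) e nel 900')
```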

Limitations

Handling two-digit numbers posed significant challenges. Distinguishing between numbers representing pagination and those used for quantifications (e.g., "2 children") required manual inspection, which was impractical at scale. Consequently, two-digit numbers were excluded from the automated process to minimize errors. Although this approach may have resulted in some missing pagination data, it effectively avoided inaccuracies caused by misclassification. This method underscored the need to balance accuracy and practicality in processing complex datasets while acknowledging the inherent limitations of such efforts.

Text Management

After completing the processing of the indexes, the final step in preparing the data was to work on the full text. Although we considered using an alternative OCR system, the OCR provided by Internet Archive proved particularly useful. This system included OCR pages in JSON format, which provided the start and end coordinates for each page. Thanks to this feature, it was possible to split the text into individual pages, a crucial step given the need to organize the data by columns.
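Splitting by page only needs the per-page offsets. The sketch assumes, hypothetically, that the page file is a JSON list whose entries begin with the start and end character offsets of each page within the full OCR text; the exact layout of the Internet Archive file should be checked before reusing it.

```python
import json


def split_pages(full_text: str, page_index_json: str) -> list[str]:
    """Cut the full OCR text into pages using per-page (start, end) offsets."""
    page_index = json.loads(page_index_json)
    # Only the first two values of each entry are used: start and end offsets.
    return [full_text[entry[0]:entry[1]] for entry in page_index]


pages = split_pages("AAAABBBCC", "[[0, 4], [4, 7], [7, 9]]")
print(pages)  # ['AAAA', 'BBB', 'CC']
```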

Once the text was divided into pages, the columns had to be identified and aligned. A manual review of the OCR revealed that the text columns of interest only began after page 25. From that point onward, the two columns on each page were numbered consecutively, starting with 5 and 6. This numbering was chosen to be consistent with the original printed text, so that the columns align with the column references used in the indices.

Through this methodology, each page was associated with a "start column" and an "end column," ensuring accurate structuring of the data in alignment with the original document format.
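Under the assumption that every page after the front matter carries exactly two columns with no gaps (no plates or unnumbered pages), the start and end columns follow from the page index by simple arithmetic. Which page index counts as the first text page (here a hypothetical `FIRST_TEXT_PAGE = 26`) depends on how the split pages are numbered.

```python
from typing import Optional

FIRST_TEXT_PAGE = 26  # hypothetical: the first page after page 25
FIRST_COLUMN = 5      # the first text page carries columns 5 and 6


def page_columns(page: int, first_page: int = FIRST_TEXT_PAGE,
                 first_column: int = FIRST_COLUMN) -> Optional[tuple[int, int]]:
    """Return (start_col, end_col) for a page, assuming two columns per page."""
    if page < first_page:
        return None  # front matter before the columns of interest
    start_col = first_column + 2 * (page - first_page)
    return start_col, start_col + 1


print(page_columns(26), page_columns(27), page_columns(10))  # (5, 6) (7, 8) None
```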

Finding the Geolocation

Extraction of Coordinates

Pipeline

Load and preprocess data

Nominatim API: Extract coordinates for "famous places."

Venetian Church Dictionary: Match coordinates for churches.

Catastici/Sommarioni: Match coordinates for private houses.

ChatGPT API: Fill in missing data.

Process: Each step enriches the dataset with newly found coordinates. Subsequent steps only process entries lacking geolocation, avoiding overwriting or misclassification of previous entries.
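The "fill only what is missing" rule of the pipeline can be expressed as a loop over ordered steps. In this sketch each step is a hypothetical callable returning a `(lat, lon)` pair or `None`; the real steps (Nominatim, church dictionary, Catastici, ChatGPT) would be plugged in as such functions. The coordinates in the usage part are toy values.

```python
from typing import Callable, Optional

Coords = tuple[float, float]
Step = Callable[[dict], Optional[Coords]]


def run_cascade(entries: list[dict], steps: list[tuple[str, Step]]) -> list[dict]:
    """Apply geolocation steps in order; later steps only see entries still lacking coordinates."""
    for step_name, step in steps:
        for entry in entries:
            if entry.get("coords") is not None:
                continue  # never overwrite a result from an earlier step
            coords = step(entry)
            if coords is not None:
                entry["coords"] = coords
                entry["source"] = step_name  # remember which step found it
    return entries


# Usage with toy steps and toy coordinates.
toy_famous = {"Rialto": (1.0, 1.0)}
entries = [{"name": "Rialto"}, {"name": "Chiesa di San Zaccaria"}]
steps = [
    ("nominatim", lambda e: toy_famous.get(e["name"])),
    ("churches", lambda e: (2.0, 2.0) if "chiesa" in e["name"].lower() else None),
]
run_cascade(entries, steps)
```

Recording the `source` of each coordinate also makes it easy to evaluate each step separately, as in the considerations below.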

Considerations

Nominatim API: Returns few but highly accurate results.

Venetian Church Dictionary: Highly effective in associating churches, although some errors may arise depending on thresholds.

Catastici/Sommarioni: Currently yields no results, likely due to limited testing capability on the full dataset, volume-specific content variations, and the temporal mismatch between Marino Sanudo's document and the Catastici data.

ChatGPT API: Associates most remaining entries with coordinates; some errors occur, though their number is not high.

Nominatim API Trial

Nominatim is a geocoding API that processes either structured or free-form textual descriptions. For this study, the free-form query method was used.

Key Findings:

Using 'name' and 'city' fields yielded accurate but limited results (6/65). Including 'alias' and 'father' fields in the query reduced accuracy significantly. The API works best with simple and precise input, whereas long or complex phrases hinder matching.
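A free-form Nominatim query built only from the 'name' and 'city' fields, the configuration that gave the most accurate results, might look like the sketch below. It uses the public `/search` endpoint with the `q`, `format` and `limit` parameters; the default city "Venezia" and the User-Agent string are assumptions. Nominatim's usage policy for the public server requires an identifying User-Agent and at most one request per second.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

NOMINATIM_SEARCH = "https://nominatim.openstreetmap.org/search"


def nominatim_url(name: str, city: str = "Venezia") -> str:
    """Build a free-form query from only the 'name' and 'city' fields."""
    params = {"q": f"{name}, {city}", "format": "json", "limit": 1}
    return NOMINATIM_SEARCH + "?" + urllib.parse.urlencode(params)


def geocode(name: str, city: str = "Venezia",
            user_agent: str = "sanudo-geocoding-sketch") -> Optional[tuple[float, float]]:
    """Return (lat, lon) of the best match, or None if Nominatim finds nothing.

    Callers should pause at least one second between calls (usage policy).
    """
    request = urllib.request.Request(nominatim_url(name, city),
                                     headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        results = json.load(response)
    if not results:
        return None
    return float(results[0]["lat"]), float(results[0]["lon"])


print(nominatim_url("Ponte di Rialto"))
# https://nominatim.openstreetmap.org/search?q=Ponte+di+Rialto%2C+Venezia&format=json&limit=1
```

Adding 'alias' or 'father' to the `q` string would reproduce the longer queries that, as noted above, matched worse.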

Venetian Church Dictionary

This dictionary aids in geolocating churches by matching entries from the dataset with those in the dictionary using Italian and Venetian labels.

Steps:

Filter dataset entries whose 'name' field contains "chiesa" (church) or a synonym.

Keep only dictionary churches that have a geolocation and a non-null 'venetianLabel' or 'italianLabel'.

Assess the similarity between strings using Sentence-BERT (SBERT).

Advantages:

Restricting the search to church-related entries minimizes unrelated matches.

SBERT captures semantic similarities, such as between "Chiesa di San Marco" and "Basilica di San Marco."
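The church-matching steps could be sketched as follows. To keep the example self-contained, the encoder is passed in as a function mapping a list of strings to vectors; with the sentence-transformers library one could pass, for example, `SentenceTransformer("paraphrase-multilingual-MiniLM-L12-v2").encode`. The synonym list, the dictionary field `coords` and the 0.8 threshold are hypothetical, and results depend on the threshold.

```python
import math
from typing import Callable, Sequence

# Hypothetical list of words marking a church-like entry in the 'name' field.
CHURCH_WORDS = ("chiesa", "basilica", "monastero", "convento")

Encoder = Callable[[list[str]], Sequence[Sequence[float]]]


def is_church(name: str) -> bool:
    lowered = name.lower()
    return any(word in lowered for word in CHURCH_WORDS)


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def match_churches(entries: list[dict], dictionary: list[dict],
                   encode: Encoder, threshold: float = 0.8) -> list[dict]:
    # Candidate labels: dictionary churches with coordinates and a non-null label.
    labelled = [
        (label, item["coords"])
        for item in dictionary
        if item.get("coords") is not None
        for label in (item.get("venetianLabel"), item.get("italianLabel"))
        if label
    ]
    if not labelled:
        return entries
    label_vectors = encode([label for label, _ in labelled])
    for entry in entries:
        if not is_church(entry["name"]):
            continue  # only church-like entries are compared
        (vector,) = encode([entry["name"]])
        scores = [cosine(vector, lv) for lv in label_vectors]
        best = max(range(len(scores)), key=scores.__getitem__)
        if scores[best] >= threshold:
            entry["coords"] = labelled[best][1]
            entry["matched_label"] = labelled[best][0]
    return entries
```

The library also provides `sentence_transformers.util.cos_sim` for batched cosine similarity; the plain-Python `cosine` here only avoids the dependency.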

Catastici and Sommarioni

This step aimed to associate private houses ("casa") with coordinates. However, no results were obtained due to several challenges: hardware limitations that prevented the generation of string embeddings for the large dataset within a reasonable timeframe, the possibility that the specific volume analyzed (vol. 5) lacked relevant entries, and a temporal mismatch between the analyzed Marino Sanudo document and the Catastici records.

To address these challenges, a dictionary was created from the Catastici database to facilitate semantic similarity assessments with entries from the name index.

From the JSON file catastici_text_data_20240924.json, the property’s function, owner’s name, and coordinates were extracted. A structured dataframe was created by combining these tags. The geometry field was split into two columns for latitude and longitude. Duplicates were removed based on the function and owner’s name, keeping only the first occurrence for each combination. A new column, named "name," was generated by concatenating the function and owner’s name. Subsequently, only entries related to private houses ("casa") were retained for further analysis.
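The preparation steps above could be written as follows. This sketch is hypothetical: the field names `function`, `owner` and `geometry`, and a geometry made of two values, are assumptions about the JSON layout. The two values are named `lat` and `lon` after the columns described in the text, but they are still in the dataset's local coordinate system and need conversion before use. In pandas, the de-duplication step corresponds to `df.drop_duplicates(subset=["function", "owner"], keep="first")`.

```python
import json


def build_house_records(raw_json: str) -> list[dict]:
    """Flatten Catastici records, drop duplicates, keep only private houses."""
    seen: set[tuple[str, str]] = set()
    records = []
    for item in json.loads(raw_json):
        function = (item.get("function") or "").strip()
        owner = (item.get("owner") or "").strip()
        if (function, owner) in seen:
            continue  # keep only the first occurrence of each (function, owner)
        seen.add((function, owner))
        first, second = item["geometry"]  # hypothetical: a two-value coordinate pair
        records.append({
            "function": function,
            "owner": owner,
            "lat": first,   # still in the local reference system
            "lon": second,
            "name": f"{function} {owner}".strip(),
        })
    # Retain only private houses ("casa") for the semantic matching step.
    return [r for r in records if "casa" in r["function"].lower()]
```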

Since the dataset used a different local coordinate system, it was necessary to convert any matched coordinates to the appropriate reference system.

Despite following this methodology, no valid results were obtained. The primary reasons were hardware constraints, the volume-specific nature of the analyzed data, and the temporal mismatch between the Catastici records and the Marino Sanudo document.

This step underscored the limitations of the Catastici dataset for this project and highlighted the need for improved computational resources and greater dataset compatibility to achieve better outcomes in future research.

ChatGPT API

For the remaining entries, ChatGPT was combined with geocoding APIs like OpenCage or Nominatim to infer coordinates.

Advantages:

Combines ChatGPT's descriptive power with the precision of geocoding APIs.

Limitations:

Requires external geocoding APIs, as ChatGPT alone cannot retrieve real-time coordinates.

Finding the relationship

Naive Relationships

In this analysis, a simple method was used to establish relationships by considering places and people mentioned within the same column. This approach assumes that if a place and a person appear in the same column, they might be related. However, this assumption has limitations.

Co-occurrence in the Same Column: Places and people mentioned in the same column were linked, based on the assumption that their proximity suggests some form of relationship. However, we cannot be certain whether this proximity indicates an actual relationship or whether they are simply listed together without a true connection.

Limitations:

Temporal Gaps: It is not possible to determine whether the relationship exists because the place and the person appear in the same column, or whether it pertains to places or people mentioned on previous or subsequent pages.

Context: Without additional context or a deeper understanding of the index structure, we cannot definitively determine whether the place and person are directly related or merely listed next to each other by chance.

In summary, this naive approach relies on the proximity of entries within the index to suggest relationships, but it lacks the ability to verify the true nature of these connections. More advanced methods would be required to establish more accurate relationships.
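The naive co-occurrence rule amounts to a join on volume and column. The sketch assumes each row has a hypothetical `columns` list (a person or place can be indexed under several columns) and returns `(person, place, column)` triples.

```python
from collections import defaultdict


def naive_relationships(people: list[dict], places: list[dict]) -> list[tuple[str, str, int]]:
    """Link every person and place that the indices list under the same (volume, column)."""
    places_by_column: dict[tuple[int, int], list[str]] = defaultdict(list)
    for place in places:
        for column in place["columns"]:
            places_by_column[(place["volume"], column)].append(place["place"])
    pairs = set()
    for person in people:
        for column in person["columns"]:
            for place_name in places_by_column.get((person["volume"], column), []):
                pairs.add((person["name"], place_name, column))
    return sorted(pairs)


people = [{"name": "Marco Contarini", "volume": 5, "columns": [12, 40]},
          {"name": "Zuan Loredan", "volume": 5, "columns": [99]}]
places = [{"place": "Rialto", "volume": 5, "columns": [12]},
          {"place": "Padova", "volume": 5, "columns": [40, 99]}]
print(naive_relationships(people, places))
```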

Improved Relationship Analysis

Goal of the Analysis

The goal of the improved relationship analysis was to determine whether a person and a place occurred together more frequently than just a few times in the dataset. This could provide useful insights, especially if these relationships were later weighted for further investigation. To achieve this, the first step was to select relevant text for each entry by evaluating the structure and content in the 'merged_data' dataframe.

Identifying Relevant Text

We began by checking, for each row, whether the column reference in the 'pages' column matched the page's 'start_col' or 'end_col'. If it matched 'start_col', the second half of the text of the previous page was prepended to the text of the current page. If it matched 'end_col', the first half of the text of the next page was appended to it. This made it possible to build a relationships table linking people and places within the dataset.
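The window-building rule above can be sketched as follows, assuming the pages are dictionaries with `start_col`, `end_col` and `text` in page order. Halves are cut at the character midpoint, which may split a word; this is a simplification, not necessarily what the project did.

```python
from typing import Optional


def relevant_text(pages: list[dict], column: int) -> Optional[str]:
    """Return the text window for an index column reference.

    pages: dicts with 'start_col', 'end_col' and 'text', in page order.
    """
    for i, page in enumerate(pages):
        text = page["text"]
        if column == page["start_col"]:
            if i > 0:  # prepend the second half of the previous page
                previous = pages[i - 1]["text"]
                text = previous[len(previous) // 2:] + " " + text
            return text
        if column == page["end_col"]:
            if i + 1 < len(pages):  # append the first half of the next page
                following = pages[i + 1]["text"]
                text = text + " " + following[: len(following) // 2]
            return text
    return None  # the column is not covered by any page
```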

Handling Names and Places

One challenge we faced was that the names of people or places were not always directly referenced in the text but often appeared with additional attributes, aliases, or titles. Therefore, we had to extract all the data related to a place and a name and construct two lists: one for the words by which places were likely to be called in the text and another for the words associated with names. This strategy allowed us to leave the original text intact and only evaluate the presence of at least one token from the place list and one from the name list, enabling us to read the output in a way that retained both syntactic and semantic meaning.

Refining the Process

To ensure accuracy, we first processed the lists by removing short words, stop words, numbers, NaN values, and special characters. Our initial trial checked whether a 'name' token and a 'place' token appeared within the same sentence. However, this approach yielded only a small number of matches (209 out of 3,170), many of which were insignificant.

The second trial broadened the search by also using the 'alias_place' tokens for places and the 'description' tokens for names. This significantly increased the number of results, to more than 1,000.
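The token preparation and the first-trial sentence check could look like this. The stop-word list and the minimum length of three characters are hypothetical placeholders; the second trial would simply feed more fields (such as 'alias_place' or 'description') into `tokens`.

```python
import re

# Small hypothetical stop-word list; a full Italian list would be used in practice.
STOP_WORDS = {"del", "della", "che", "con", "per", "nel", "una"}
MIN_LENGTH = 3  # hypothetical cut-off for "short words"
WORD_RE = re.compile(r"[^\W\d_]+")  # letters only: drops numbers and special characters


def tokens(values) -> set[str]:
    """Turn a list of field values (name, alias, description...) into match tokens."""
    result = set()
    for value in values:
        if value is None or value != value:  # skip None and NaN
            continue
        for word in WORD_RE.findall(str(value).lower()):
            if len(word) >= MIN_LENGTH and word not in STOP_WORDS:
                result.add(word)
    return result


def sentences_with_both(text: str, name_tokens: set[str], place_tokens: set[str]) -> list[str]:
    """Keep sentences containing at least one name token and one place token."""
    hits = []
    for sentence in re.split(r"(?<=[.;!?])\s+", text):
        words = set(WORD_RE.findall(sentence.lower()))
        if words & name_tokens and words & place_tokens:
            hits.append(sentence)
    return hits
```

Because the check only looks for token overlap, the original text stays untouched and the matching sentences can be read as written.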

Improving Token Matching

To further refine the process, the third trial introduced a more tolerant method: assessing token similarity with the Levenshtein distance, i.e. the minimum number of single-character insertions, deletions, and substitutions needed to turn one string into another. Because OCR errors usually alter only a few characters, this measure helps match misspelled tokens. Applying Levenshtein’s ratio produced a similarity value between 0 and 1 for each pair of tokens, and pairs above a set threshold were considered a match. This approach further increased the number of matches, bringing the total to 1,727 out of 3,170.
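A pure-Python version of the distance and a normalized ratio makes the matching rule concrete. Here the ratio is `1 - distance / max(len(a), len(b))` and the 0.8 threshold is hypothetical, since the text does not state the value used. Note that `Levenshtein.ratio` in the python-Levenshtein package uses a different normalization (based on insertions and deletions only), so thresholds are not interchangeable between the two.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # deletion
                current[j - 1] + 1,            # insertion
                previous[j - 1] + (ca != cb),  # substitution (free if equal)
            ))
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Normalize the distance to [0, 1]; 1.0 means identical strings."""
    if not a and not b:
        return 1.0
    return 1 - levenshtein(a, b) / max(len(a), len(b))


def fuzzy_in(word: str, vocabulary: set[str], threshold: float = 0.8) -> bool:
    """True if the word is close enough to any token of the list."""
    return any(similarity(word, token) >= threshold for token in vocabulary)


print(fuzzy_in("contarinl", {"contarini"}))  # True: one OCR substitution
```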

Categorizing Relationships

Once the matches were identified, the next step was to categorize the relationships between people and places. We used the minimum and maximum sentence boundaries identified earlier to pass these sentences to a large language model (LLM) system for relationship categorization. The LLM categorized the relationships into predefined types, such as a person nominated in that place but not physically present, a person belonging to the place, or a person working or living there. In cases where the model could not find a clear connection, such as in sentences like “John was appointed to the city council,” the system might return an empty string, indicating no clear relationship. For instances where the relationship was unclear but still relevant, such as “Jane recently visited her uncle’s house in Paris,” the model would categorize the relationship as "other," implying that the relationship could not be easily classified into the predefined labels.
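The categorization step depends on a fixed label set and on how the model's answer is parsed. The sketch below shows only that protocol: the label names, their wording and the prompt are hypothetical, and the call to the LLM itself is omitted because the source does not specify the model or API. An empty answer maps to "no relationship", and any unexpected answer is folded into "other", which is a design choice rather than the project's documented behavior.

```python
from typing import Optional

# Hypothetical label names for the categories described above.
RELATION_LABELS = {
    "nominated": "nominated for the place but not physically present",
    "belongs": "belongs to the place",
    "works_or_lives": "works or lives in the place",
    "other": "related, but none of the labels above fits",
}


def build_prompt(person: str, place: str, sentences: list[str]) -> str:
    """Ask for exactly one label, or an empty answer when there is no relationship."""
    options = "\n".join(f"- {key}: {text}" for key, text in RELATION_LABELS.items())
    passage = " ".join(sentences)
    return (
        f"Passage: {passage}\n"
        f"Person: {person}\nPlace: {place}\n"
        "Classify the relationship between the person and the place using one label:\n"
        f"{options}\n"
        "Answer with the label only, or with an empty answer if there is no clear relationship."
    )


def parse_label(response: str) -> Optional[str]:
    label = response.strip().lower()
    if not label:
        return None  # empty answer: no clear relationship
    # Design choice: unexpected answers are folded into "other" rather than dropped.
    return label if label in RELATION_LABELS else "other"
```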

Outcome and Significance

This method significantly improved the relationship analysis by providing a more structured way to understand and categorize interactions between people and places within the dataset. The categorization made the results more readable and allowed for deeper investigations in subsequent stages.

Result

Conclusion and further work

Achieved Results

Our work led to the reorganization and in-depth analysis of a complex corpus, making the data more accessible and comprehensible for further investigation. The extraction and structuring of information from indices and texts, the identification of relationships between people and places, and the application of advanced techniques such as textual similarity calculations allowed us to create richer and more useful datasets.

Challenges Addressed

The project highlighted several difficulties, including OCR-related errors, data misalignments, and semantic ambiguities. These obstacles were tackled using a methodical approach, including data filtering, targeted manual review, and the application of language models to improve the interpretation of relationships.

Contribution to Research

This work offers a contribution to computational historical analysis by demonstrating how modern tools and methodologies can be applied to historical materials to extract structured information. The identification and categorization of relationships between people and places provide a foundation for further studies on the social, cultural, and political contexts of the analyzed periods. This approach aims to complement traditional research methods by offering additional insights derived from large and complex datasets.

Future Perspectives

So far, our work has focused on analyzing only volume 5, but the developed method is scalable and can be extended to cover other volumes of the same corpus or similar materials. By employing a pipeline that integrates natural language processing techniques, structured data extraction, and relationship analysis, we have created a solid foundation for expanding the project. In the future, we could expand the analysis to additional volumes, enhance the pipeline to handle even more complex data, and develop interactive tools for exploring and visualizing the results.

In summary, our work has demonstrated the potential of technology to support humanities research, contributing to a deeper understanding of complex and historically significant materials.

GitHub repositories

Link: https://github.com/dhlab-class/fdh-2024-student-projects-marcopolo

Website

Link: https://fht-epfl.github.io/marcopolo-sanudo-fdh/

References

Sanudo Diarii Volume 5 https://books.google.ch/books?id=cm6srb292ToC&redir_esc=y

Hero video 1 http://www.unabibliotecaunlibro.it/video?ID=227&PID=64

Hero video 2 https://www.youtube.com/watch?v=JphHw6iU4m8

Website design: