WikiBio: Difference between revisions

Michal.bien (talk | contribs) (→XLNet) |

Revision as of 20:23, 19 November 2020

Motivation

The motivation for our project was to explore the possibilities of natural-language generation in the context of biography generation. Structured data is easy to obtain from Wikidata, but not every Wikidata entry has a corresponding Wikipedia page. This project showcases how the structured data from Wikidata entries can be used to generate realistic biographies in the format of Wikipedia pages.

Project plan

| Week | Goals | Result |
|---|---|---|
| 1 | Exploring data sources | Selected Wikipedia + Wikidata |
| 2 | Matching textual and structured data | Wikipedia articles matched with Wikidata |
| 3 | First trained model prototype | GPT-2 was trained on English data |
| 4 | Identify major modelling problems | GPT-2 was trained on Italian data; issues with Wikipedia page completion and Italian model performance |
| 5 | Code clean-up, midterm preparation | Improved SPARQL request |
| 6 | Try the XLNet model, more input data, explore evaluation methods | Worse results with XLNet (even with more input data); subjective quality assessment and BLEU/GLEU methods |
| 7 | Start evaluation surveys and automatic evaluation, improve GPT-2 input data | ... |
| 8 | Productionalization, finish evaluation | ... |
| 9 | Productionalization, evaluation analysis | ... |
| 10 | Final presentation | ... |

Data sources

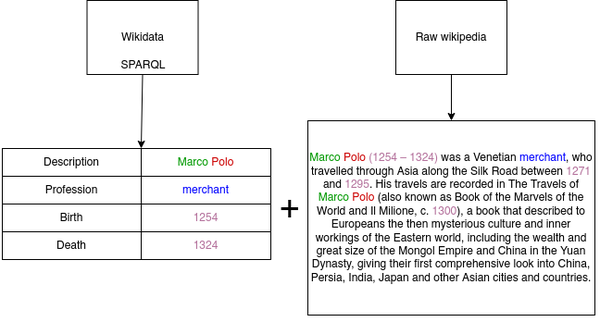

In this project, we make use of two different data sources:

- Wikidata is used to gather structured information about the people who lived in the Republic of Venice. Several pieces of information are extracted from their Wikidata entries, such as birth and death dates, professions and family names. To gather this data, a customizable SPARQL query is run against the official Wikidata SPARQL API.

- Wikipedia is used to match the Wikidata entries with the unstructured text of the article about that person. The "Wikipedia" package for Python is used to find the matching pairs and then to extract the Wikipedia articles matching the entries.
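As a rough illustration of the Wikidata step, the sketch below builds a customizable SPARQL query and sends it to the public endpoint using only the Python standard library. It is not the project's actual query. The function names `build_people_query` and `run_query` are hypothetical, the default item `Q4948` is assumed to be the Republic of Venice and should be verified before use, and linking people to the place through country of citizenship (`P27`) is also an assumption.

```python
import json
import urllib.parse
import urllib.request

WIKIDATA_SPARQL_ENDPOINT = "https://query.wikidata.org/sparql"


def build_people_query(place_qid: str = "Q4948", limit: int = 100) -> str:
    """Build a SPARQL query for humans linked to a place.

    Assumptions: place_qid defaults to Q4948 (believed to be the Republic
    of Venice; verify before use) and people are linked via P27.
    """
    return f"""
SELECT ?person ?personLabel ?birth ?death ?occupationLabel ?familyNameLabel WHERE {{
  ?person wdt:P31 wd:Q5 ;
          wdt:P27 wd:{place_qid} .
  OPTIONAL {{ ?person wdt:P569 ?birth . }}
  OPTIONAL {{ ?person wdt:P570 ?death . }}
  OPTIONAL {{ ?person wdt:P106 ?occupation . }}
  OPTIONAL {{ ?person wdt:P734 ?familyName . }}
  SERVICE wikibase:label {{ bd:serviceParam wikibase:language "en" . }}
}}
LIMIT {int(limit)}
""".strip()


def run_query(query: str, endpoint: str = WIKIDATA_SPARQL_ENDPOINT) -> list:
    """Send the query to the endpoint and return the result bindings."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query, "format": "json"})
    request = urllib.request.Request(
        url,
        headers={
            # A descriptive User-Agent is expected by the Wikidata service.
            "User-Agent": "wikibio-sketch/0.1 (illustrative example)",
            "Accept": "application/sparql-results+json",
        },
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)["results"]["bindings"]
```

Calling `run_query(build_people_query(limit=10))` would return one dictionary per result row. Because each optional property can have several values, a person with multiple occupations appears in multiple rows and would need to be grouped afterwards.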

Generation methods

The output of the above data sources is prepared jointly in the following manner:

- All the structured entries are transformed to text by putting a custom control token in front of each of them and then concatenating them together

- The resulting text is the training sample for the model
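The preparation steps above can be sketched as follows. The control tokens are taken from the example input shown in the GPT-2 section; the function names `serialize_entry` and `build_training_sample` are hypothetical, and appending the article text directly after `<|summary|>` is an assumption about how the training sample is completed.

```python
from typing import Iterable


def serialize_entry(
    name: str,
    description: str,
    professions: Iterable[str],
    birth: int,
    death: int,
) -> str:
    """Turn one structured entry into a control-token prompt."""
    fields = [
        ("<|start|>", name),
        ("<|description|>", description),
        ("<|professions|>", ", ".join(professions)),
        ("<|birth|>", str(birth)),
        ("<|death|>", str(death)),
    ]
    # Each value is preceded by its control token; fields are joined by spaces.
    prompt = " ".join(f"{token} {value}" for token, value in fields)
    # The trailing token marks where the biography text starts.
    return f"{prompt} <|summary|>"


def build_training_sample(prompt: str, article_text: str) -> str:
    """Assumed layout: the matched Wikipedia text follows the prompt."""
    return f"{prompt} {article_text.strip()}"


prompt = serialize_entry("Marco Polo", "Painter", ["Painter", "Writer"], 1720, 1793)
print(prompt)
```

At generation time only the prompt is given to the model, which is then expected to continue with the biography text.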

We considered two language model architectures: XLNet and GPT-2. The comparison of the models and the reasons for selecting GPT-2 are described below.

XLNet

XLNet is a bi-directional language model released in 2019, mostly used for tasks like sentiment analysis, classification and word correction. However, it was interesting for us due to its ability to train on and generate text samples of virtually unlimited length.

Pros:

- variable text length

- modern architecture

Cons:

- bi-directionality is not of much use here and takes more memory

- considerably lower performance on text generation tasks than GPT-2

Finally, our tests have shown that we should not consider XLNet for our biography generation effort, for a number of reasons:

- the model imposes no limit on text sample length, but GPU RAM is limited, so a cap of ~1500 tokens is required anyway

- the model performs poorly with short inputs

- the text is grammatically correct, but its coherence with the semi-structured data is very poor, presumably due to the bi-directional nature of the model

GPT-2

Example generation output

- Input: <|start|> Marco Polo <|description|> Painter <|professions|> Painter, Writer <|birth|> 1720 <|death|> 1793 <|summary|>

- Output: Marco Polo (1720 – 1793) was a German painter of a distinguished life of high quality. In 1740 he was the first to paint in an Italian Renaissance style. He served as the painter of Giovanni Venez in Venice; his brother was Giovanni Magnan and daughter was a painter of his own time, Marco Polo. During his career he collaborated with the great Venetian painter Giovanni Battista Gugliati in the work for the Porte della Prudina, which was published in 1714. After his death, he would leave his paintings at Venice for the Palace of Santa Martina.