Deciphering Venetian handwriting: Difference between revisions

Jeremy.mion (talk | contribs) No edit summary |

Jeremy.mion (talk | contribs) No edit summary |

||

| Line 56: | Line 56: | ||

==Methodology == | ==Methodology == | ||

1 | ===1=== | ||

The first part of the project consisted in extracting the areas on the image of the page that contain text. This is a required step since our hand writing recognition model requires as an input a single line of text. To extract the patches the first step is to identify the location of the text on the page. This information will be stored in the standard image metadata description called [http://www.primaresearch.org/tools/PAGELibraries PAGE]. The location will be stored by storing the baseline under some text. To extract the baseline we used the [https://github.com/lquirosd/P2PaLA P2PaLA] repository. It is a Document Layout Analysis tool based on Neural Networks. Since there is no ground truth for the locations of baselines in the Sommarioni dataset we used the pre-trained model provided with P2PaLA. Since there is no groundtruth we do not have a metric to measure the quality of this step of our pipeline. We conducted qualitative visual inspection of results. The output is remarkably good given that it was not trained on data from this dataset. A few false positives were found, but no false negatives. | |||

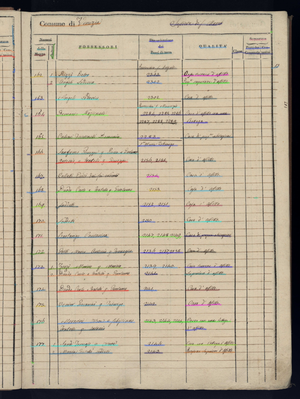

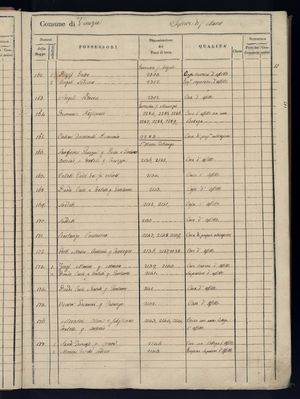

[[File:Sommarioni_Line_detection.png|thumb|center|Fig. 1: Page with output of the baseline detection]] | [[File:Sommarioni_Line_detection.png|thumb|center|Fig. 1: Page with output of the baseline detection]] | ||

2 | ===2=== | ||

Once the baselines were identified we needed to extract the areas that contained text (cropping the image of the page to contain only a single sentence). To do this no preexisting tool satisfied the quality requirements that we were looking for. We therefor created PatchExtractor. PatchExtractor is a python program that extracts the patches using as input the source image and the baseline file produced by P2PaLA. PatchExtractor uses some advanced image processing to extract the columns from the source image. The information about the column that the patch is located in is crucial since after HTR we can use this information to match the columns of the spreadsheet with there equivalent column in the picture. | |||

[[File:Censo-stabile_Sommarioni-napoleonici_reg-1_0015_013.jpg|thumb|center|Fig. 2: Original source image of the page]] | [[File:Censo-stabile_Sommarioni-napoleonici_reg-1_0015_013.jpg|thumb|center|Fig. 2: Original source image of the page]] | ||

Revision as of 15:19, 4 December 2020

Introduction

The goal of this project is to create a pipeline that re-establishes the mapping between the original Venetian cadastre "Sommarioni" and its digital version, an Excel spreadsheet. When the transcription was entered into the spreadsheet, the link to the original pages was lost. The purpose of this project is to take the spreadsheet and restore the link to the source document. To achieve this we use two deep neural networks: one identifies the areas of the image that contain text, the other processes the handwriting and produces the text.

Planning

| Week | Task |
|---|---|
| 09 | Segment patches of text in the Sommarioni: (page id, patch) |
| 10 | Mapping transcription (Excel file) -> page id (proof of concept) |
| 11 | Mapping transcription (Excel file) -> page id (on the whole dataset) |
| 12 | Depending on the quality of the results: improve the mapping of page id, more precise matching, web viewer |
| 13 | Final results, final evaluation & final report writing |
| 14 | Final project presentation |

Week 09

- Input : Sommarioni images

- Output : Patches of pixels containing text, with the coordinates of each patch in the Sommarioni

- Step 1 : Segment handwritten text regions in Sommarioni images

- Step 2 : Extraction of the patches

Week 10

- Input : transcription (Excel File), tuples (page id, patch) extracted in week 9

- Output : line in the transcription -> page id

- Step 1 : Run HTR on each patch and clean the output : (patch, text)

- Step 2 : Find matching pairs between the recognized text and the transcription

- Step 3 : Write a new Excel file with the new page id column
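Step 2 can be illustrated with a minimal sketch based on Python's standard `difflib`. This is only an assumption about how the matching could be done, not the method used in the project; the function name `best_match`, the `min_ratio` threshold and the sample names are hypothetical.

```python
from difflib import SequenceMatcher


def best_match(recognized, candidates, min_ratio=0.6):
    """Return (index, ratio) of the most similar candidate, or None if below min_ratio."""
    def normalize(text):
        # Lower-case and collapse whitespace so formatting noise does not affect the score.
        return " ".join(text.lower().split())

    target = normalize(recognized)
    scored = [(SequenceMatcher(None, target, normalize(c)).ratio(), i)
              for i, c in enumerate(candidates)]
    if not scored:
        return None
    ratio, index = max(scored)
    return (index, ratio) if ratio >= min_ratio else None


# Hypothetical transcription rows and a noisy HTR output.
rows = ["Pietro Zen", "Giovanni Contarini", "Marco Polo"]
print(best_match("Giovani Contarinj", rows))
```

A similarity threshold keeps poor HTR output from being attached to an unrelated spreadsheet row; the value would have to be tuned on real data.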

Week 11

- Step 1 : Apply the pipeline validated in week 10 to the whole dataset

- Step 2 : Evaluate the quality and, based on that, decide on the tasks for the following weeks

Week 12

- Depending on the quality of the matching :

- Improve image segmentation

- More precise matching (Excel cell) -> (page id, patch) in order to have the precise box of each written text

- Use a IIIF image viewer to present the results of the project more attractively

Methodology

1

The first part of the project consisted of extracting the areas of the page image that contain text. This step is required because our handwriting recognition model takes a single line of text as input. To extract the patches, we first identify the location of the text on the page. This information is stored in PAGE (http://www.primaresearch.org/tools/PAGELibraries), a standard XML-based format for describing page image metadata. Each text location is stored as the baseline running under the text. To extract the baselines we used P2PaLA (https://github.com/lquirosd/P2PaLA), a Document Layout Analysis tool based on neural networks. Since there is no ground truth for the baseline locations in the Sommarioni dataset, we used the pre-trained model provided with P2PaLA, and for the same reason we have no metric to measure the quality of this step of the pipeline. Instead, we inspected the results visually. The output is remarkably good given that the model was not trained on data from this dataset: we found a few false positives but no false negatives.
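As an illustration of how the baselines can be read back from a PAGE file, here is a minimal sketch using only the Python standard library. It assumes the usual PAGE layout in which each `TextLine` holds a `Baseline` element with a `points` attribute of space-separated `x,y` pairs; the function name `read_baselines` and the sample document are hypothetical and not part of the project code.

```python
import xml.etree.ElementTree as ET


def read_baselines(page_xml: str) -> list[list[tuple[int, int]]]:
    """Return one list of (x, y) points per Baseline element in a PAGE XML string."""
    root = ET.fromstring(page_xml)
    baselines = []
    for elem in root.iter():
        # Compare local names so the sketch does not depend on the schema version
        # embedded in the XML namespace.
        if elem.tag.rsplit("}", 1)[-1] != "Baseline":
            continue
        points = [tuple(int(float(v)) for v in pair.split(","))
                  for pair in elem.get("points", "").split()]
        if points:
            baselines.append(points)
    return baselines


# Hypothetical, heavily reduced PAGE document used only for the demonstration.
SAMPLE = """<PcGts xmlns="http://schema.primaresearch.org/PAGE/gts/pagecontent/2013-07-15">
  <Page imageFilename="page.jpg" imageWidth="2000" imageHeight="3000">
    <TextRegion id="r1">
      <TextLine id="l1"><Baseline points="100,200 400,205 700,210"/></TextLine>
      <TextLine id="l2"><Baseline points="100,300 700,310"/></TextLine>
    </TextRegion>
  </Page>
</PcGts>"""

for baseline in read_baselines(SAMPLE):
    print(baseline)
```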

2

Once the baselines were identified, we needed to extract the areas that contain text, cropping the page image so that each patch contains a single line of text. No pre-existing tool satisfied our quality requirements, so we created PatchExtractor, a Python program that extracts the patches from the source image and the baseline file produced by P2PaLA. PatchExtractor also uses image processing to extract the columns from the source image. Knowing which column a patch lies in is crucial: after HTR, this information lets us match the columns of the spreadsheet with their equivalent columns in the picture.
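The basic cropping idea can be sketched as follows. This is not PatchExtractor's actual code: the function name `crop_patch` and the `above` and `below` margins (how many pixels to keep over and under the baseline) are hypothetical values chosen for illustration.

```python
import numpy as np


def crop_patch(image, baseline, above=40, below=10):
    """Crop the axis-aligned box around a baseline, clipped to the image bounds."""
    xs = [x for x, _ in baseline]
    ys = [y for _, y in baseline]
    height, width = image.shape[:2]
    x0, x1 = max(min(xs), 0), min(max(xs) + 1, width)
    # Most of the handwriting sits above the baseline, so keep more pixels there.
    y0, y1 = max(min(ys) - above, 0), min(max(ys) + below + 1, height)
    return image[y0:y1, x0:x1]


# Hypothetical blank page standing in for a Sommarioni scan.
page = np.zeros((300, 500), dtype=np.uint8)
patch = crop_patch(page, [(100, 200), (400, 205)])
print(patch.shape)
```

An axis-aligned box is the simplest choice; a strongly slanted baseline would need a rotated or polygonal crop instead.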

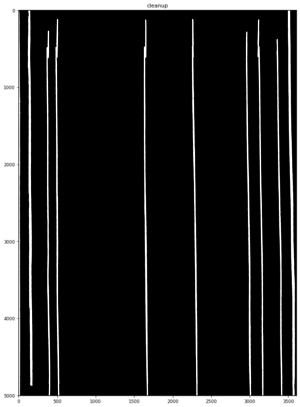

The advanced column extractor produces a clean binary mask of the location of the columns, as seen in Fig. 3. To transform the original picture into a column mask of the region of interest (ROI), it applies several steps, including:

- Applying a Gabor kernel (linear feature extractor)

- Using a contrast threshold

- Connected component size filtering (removing small connected components)

- Gaussian blur

- Affine transformations

- Bit-wise operations

- Cropping

The ROI is the part of the page that contains the text, without the margins.
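The first two steps of the list can be sketched with NumPy alone. The source does not state which library or parameters PatchExtractor uses, so the kernel size, `sigma`, `wavelength`, `gamma` and the relative threshold below are all hypothetical, and the remaining steps (component filtering, blur, affine transformations, bit-wise operations, cropping) are left out.

```python
import numpy as np


def gabor_kernel(size=21, sigma=4.0, theta=0.0, wavelength=10.0, gamma=0.5):
    """Real part of a Gabor filter; theta=0 responds most to vertical strokes."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot**2 + (gamma * y_rot) ** 2) / (2 * sigma**2))
    return envelope * np.cos(2 * np.pi * x_rot / wavelength)


def filter_same(image, kernel):
    """Correlate image with kernel, zero-padded so the output keeps the input shape."""
    kh, kw = kernel.shape
    padded = np.pad(image.astype(float), ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    windows = np.lib.stride_tricks.sliding_window_view(padded, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def line_mask(image, rel_threshold=0.5):
    """Keep pixels whose Gabor response exceeds a fraction of the maximum response."""
    response = filter_same(image, gabor_kernel())
    return response > rel_threshold * response.max()


# Hypothetical test image: a single vertical ruling on an empty page.
page = np.zeros((60, 60))
page[:, 30] = 1.0
mask = line_mask(page)
print(np.flatnonzero(mask[30]))
```

The Gabor filter is orientation-selective, which makes it a natural fit for the long vertical rulings that separate the columns of a register page.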

This mask is then used to identify which column a baseline is in.
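A minimal sketch of this lookup is given below. It assumes that the mask is non-zero inside the columns and zero on the separators between them, which the source does not specify; the function names `column_ranges` and `column_of` and the `min_fill` parameter are hypothetical.

```python
import numpy as np


def column_ranges(mask, min_fill=0.5):
    """Return (start, end) x-ranges of columns, end exclusive, from a binary column mask."""
    # A pixel column belongs to a table column if enough of its rows are set.
    filled = (mask > 0).mean(axis=0) >= min_fill
    # Pad with False so every run has a rising and a falling edge.
    edges = np.diff(np.concatenate(([False], filled, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    return list(zip(starts.tolist(), ends.tolist()))


def column_of(baseline, ranges):
    """Index of the column containing the baseline's horizontal midpoint, or None."""
    xs = [x for x, _ in baseline]
    mid = (min(xs) + max(xs)) / 2
    for index, (start, end) in enumerate(ranges):
        if start <= mid < end:
            return index
    return None


# Hypothetical mask with three columns separated by narrow gaps.
mask = np.zeros((100, 300), dtype=np.uint8)
mask[:, 10:90] = 1
mask[:, 100:200] = 1
mask[:, 210:290] = 1
ranges = column_ranges(mask)
print(ranges)
print(column_of([(110, 50), (190, 52)], ranges))
```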

Knowing the location of the columns also lets us fix an additional problem: the baseline detection sometimes returns a single baseline for two columns that are close to each other, as can be seen in Fig. 4.

Using the column locations, PatchExtractor splits such a baseline and produces two distinct images, as can be seen in Fig. 5.
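One way to perform such a split is sketched below, assuming the columns are available as `(start, end)` x-ranges with an exclusive end. The function name `split_baseline` is hypothetical, and the linear interpolation of the baseline height at the cut positions is an assumption, not a description of PatchExtractor's internals.

```python
import numpy as np


def split_baseline(baseline, ranges):
    """Split a baseline into one clipped segment per column range it overlaps."""
    points = sorted(baseline)
    xs = np.array([x for x, _ in points], dtype=float)
    ys = np.array([y for _, y in points], dtype=float)
    segments = []
    for start, end in ranges:
        left, right = max(start, xs[0]), min(end - 1, xs[-1])
        if left >= right:
            continue  # the baseline does not reach into this column
        inner = [(x, y) for x, y in zip(xs, ys) if left < x < right]
        # np.interp gives the baseline height at the two cut positions.
        cut = [(left, np.interp(left, xs, ys))] + inner + [(right, np.interp(right, xs, ys))]
        segments.append([(int(round(x)), int(round(y))) for x, y in cut])
    return segments


# Hypothetical baseline that runs across two neighbouring columns.
columns = [(10, 90), (100, 200)]
for segment in split_baseline([(20, 100), (180, 116)], columns):
    print(segment)
```

Each returned segment can then be cropped on its own, giving one patch per column.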

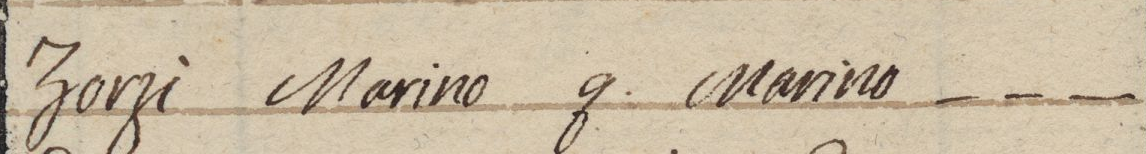

PatchExtractor produces one patch per column and row containing text, and records the column from which each patch was extracted. The resulting patches can then be pre-processed for HTR.