Opera Rolandi archive

| Line 86: | Line 86: | ||

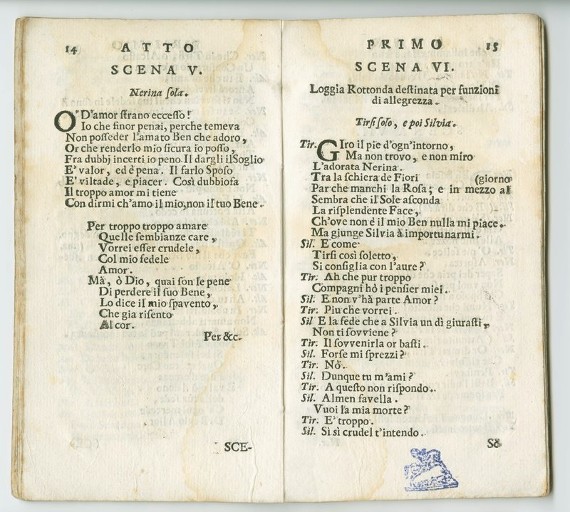

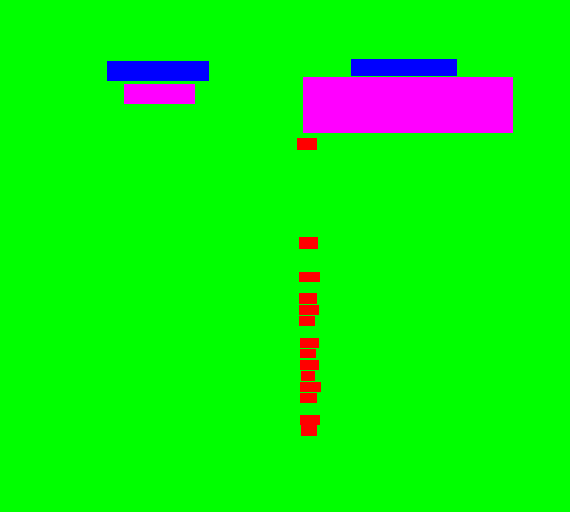

[[File:Page.jpg|upright=0.5]] [[File:Page seg.png|upright=0.5]] | [[File:Page.jpg|upright=0.5]] [[File:Page seg.png|upright=0.5]] | ||

The scenes are in blue, the descriptions in pink, the names in red and the background in green. | |||

We randomly choose 52 images of the collection, so that we have as many different types of libretti as possible. The layouts between two libretti can vary a lot, so it is important to have that diversity. | |||

We use one third of the input/output pairs for validation. | |||

We then train the model, which achieves an iou of 0.550 after 3162 iterations. | |||

We now have to find the libretto we want to test it on. To do so, we look a bit into the dataset and we find a libretto that we know : Antigone. | |||

There are six different Antigone libretto, so we test our model on all of them. | |||

The model outputs a numpy array for each image, of size ''dimensions of the image * 4". It contains for each of our four classes (background, name, scene, description) the probability that a pixel is in that class. | |||

We treat this output to display the result of the segmentation, such that a pixel is in a class if its probability of being in the class is more than a threshold. We look at the results for our 6 Antigone libretto, and this is what the best one looks like, for an arbitrary page : | |||

[[File:ant_name.jpg|upright=0.5]] [[File:ant_scene.jpg|upright=0.5]] [[File:ant_des.jpg|upright=0.5]] | |||

=== OCR === | === OCR === | ||

Revision as of 16:57, 7 December 2020

Abstract

The Fondazione Giorgio Cini has digitized 36,000 pages from Ulderico Rolandi's collection of opera libretti. This collection contains contemporary works of 17th- and 18th-century composers. The libretti are diverse in content, which offered us many possibilities for analysis.

This project concentrated on illustrating the interactions between characters in Rolandi's libretti through network visualization. We also highlighted the importance of each character within the libretto in which they appear. To achieve this, we retrieved the relevant information using deep learning models and OCR. We started from a subset of Rolandi's libretti collection and then generalized the algorithm to the whole collection.

Planning

| Week | To do |
|---|---|
| 12.11. (week 9) | Step 1: Segmentation model training, fine-tuning & testing |
| 19.11. (week 10) | Step 2: Information extraction & cleaning |
| 26.11. (week 11) | Finishing Step 2 |
| 03.12. (week 12) | Step 3: Information storing & network visualization |
| 10.12. (week 13) | Finishing Step 3, finalizing report and wiki page (Step 4: Generalization) |
| 17.12. (week 14) | Final presentation |

Step 1

- Train the model on diverse random images from Rolandi's libretti collection (better for generalization)
- Test the model on diverse random images from Rolandi's libretti collection
- Test on a single chosen libretto:
  - If the results are bad, train the model on more images from that libretto
  - If the results are still bad (and the schedule allows it), help the model by pre-processing the images beforehand, e.g. converting them to black and white (a filter that accentuates shades of black)
  - Choose a well-formatted, not too damaged libretto (so that Step 2 is feasible)
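The black-and-white pre-processing mentioned above could be done with a global threshold. Below is a minimal sketch assuming a page loaded as a grayscale `uint8` NumPy array; Otsu's method is our own choice of filter (the plan does not name one), and the function names are hypothetical.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the gray level (0-255) maximizing between-class variance (Otsu's method)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                  # share of pixels at or below each level
    mu = np.cumsum(prob * np.arange(256))    # cumulative mean intensity
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    # Between-class variance; levels where one class is empty get 0.
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))


def binarize(gray: np.ndarray) -> np.ndarray:
    """Map a uint8 grayscale page to black ink (0) on white paper (255)."""
    t = otsu_threshold(gray)
    return np.where(gray > t, 255, 0).astype(np.uint8)
```

A global threshold is simple, but stains or uneven lighting on old pages may call for a local (adaptive) threshold instead.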

Step 2

- Extract essential information from the libretto with OCR:
  - names, scenes and descriptions
  - if the results are bad, pre-process the images to make the text sharper for reading
- If the OCR extracts variants of the same name:
  - perform clustering to give them a common name
  - apply distance-measuring techniques
  - find the character's real name (not just the abbreviation) using the introduction at the start of the scene
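The name-clustering idea above could look like the following sketch. It groups OCR variants greedily when their normalized Levenshtein distance is at most a threshold and names each group after its most frequent spelling. The threshold of 0.34, the normalization rules and all function names are illustrative assumptions, not values tuned on the Rolandi libretti. Abbreviations such as "CRE." are too far from the full name to be caught this way, which is why the plan also relies on the scene introduction.

```python
from __future__ import annotations

from collections import Counter


def normalize(name: str) -> str:
    """Uppercase and strip spaces and trailing abbreviation dots (e.g. 'Creonte.' -> 'CREONTE')."""
    return name.strip().rstrip(".").upper()


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def normalized_distance(a: str, b: str) -> float:
    """Edit distance scaled to [0, 1] by the length of the longer string."""
    if not a and not b:
        return 0.0
    return levenshtein(a, b) / max(len(a), len(b))


def cluster_names(ocr_names: list[str], threshold: float = 0.34) -> dict[str, str]:
    """Map each normalized name variant to the most frequent spelling of its cluster."""
    counts = Counter(normalize(n) for n in ocr_names)
    clusters: list[list[str]] = []
    # Frequent spellings are visited first, so they become the cluster seeds.
    for name, _ in counts.most_common():
        for cluster in clusters:
            if normalized_distance(name, cluster[0]) <= threshold:
                cluster.append(name)
                break
        else:
            clusters.append([name])
    return {variant: cluster[0] for cluster in clusters for variant in cluster}


mapping = cluster_names(["Creonte", "Creonte", "Creonte.", "Cre0nte",
                         "Antigone", "Antigone", "Antigon"])
```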

Step 3

For one libretto:

- Store the information extracted in Step 2 in a tree format (JSON file)
- Assess the extraction and cleaning results
- Create the relationship network:
  - nodes = characters' names
  - links = interactions
  - weight of the links = importance of a relationship
  - weight of the nodes = speech weight of a character, normalized so that all the scenes have the same weight/importance
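Step 3 leaves the exact definitions open, so the following is only a sketch under our own assumptions. The JSON tree schema (libretto → scenes → speeches) is hypothetical; two characters are linked when they both speak in a scene; a link's weight counts such shared scenes; and a character's speech weight is their share of the words spoken in each scene, summed over scenes so that every scene contributes a total weight of 1. The speeches in the example are placeholder text, not data from a libretto.

```python
import json
from collections import defaultdict
from itertools import combinations


def build_network(libretto: dict) -> tuple[dict, dict]:
    """Return (node_weights, edge_weights) for one libretto stored as a scene tree."""
    node_weights = defaultdict(float)
    edge_weights = defaultdict(int)
    for scene in libretto["scenes"]:
        # Speech weight = number of words each character says in this scene.
        words = defaultdict(int)
        for speech in scene["speeches"]:
            words[speech["name"]] += len(speech["text"].split())
        total = sum(words.values())
        if total == 0:
            continue
        # Normalize per scene so that every scene contributes a total weight of 1.
        for name, count in words.items():
            node_weights[name] += count / total
        # Link every pair of characters who speak in the same scene.
        for a, b in combinations(sorted(words), 2):
            edge_weights[(a, b)] += 1
    return dict(node_weights), dict(edge_weights)


# Hypothetical JSON tree with placeholder speeches.
toy = json.loads("""
{"title": "toy example",
 "scenes": [
  {"scene": "1", "speeches": [{"name": "ANTIGONE", "text": "lorem ipsum dolor"},
                              {"name": "ISMENE", "text": "sit"}]},
  {"scene": "2", "speeches": [{"name": "ANTIGONE", "text": "amet"},
                              {"name": "CREONTE", "text": "lorem ipsum"},
                              {"name": "ISMENE", "text": "dolor"}]}
 ]}
""")
nodes, edges = build_network(toy)
```

The resulting dictionaries can be passed to any graph library for visualization, with node and link weights mapped to sizes and widths.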

Step 4: Optional

- Depending on the success of Step 1, see how our network algorithm generalizes to five of Rolandi's libretti.
- If it does not generalize well, strengthen our deep learning model with more images from these libretti.

Description of the Methods

Dataset

Segmentation

Our goal is to segment each image into the following regions:

- Scenes: where a new scene is indicated on the page
- Descriptions: the text just below the scene heading, which gives information about the place, the characters in the scene, etc.
- Names: the name of the character, placed right next to their speech
- Background: everything else, which is not of interest to us

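To do that, we use dhSegment, a generic deep-learning tool for document segmentation.

We need to create a training set to train the model for our specific task: libretto segmentation.

We use GIMP to annotate pages by hand, producing pairs of images (the original page and its color-coded mask) like this:

[Figure: a libretto page (Page.jpg) and its annotated segmentation mask (Page seg.png)]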

The scenes are in blue, the descriptions in pink, the names in red and the background in green.
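To check the annotations, or to compare them with predictions, each mask has to be read as a per-pixel class label. A minimal NumPy sketch follows. The exact RGB values are assumptions (the text only names the colors, and "pink" is taken to be magenta here), and the class indices follow the order background, name, scene, description used for the model output.

```python
import numpy as np

# Assumed annotation colors (RGB); adjust to the exact values used in GIMP.
CLASS_COLORS = {
    0: (0, 255, 0),      # background: green
    1: (255, 0, 0),      # name: red
    2: (0, 0, 255),      # scene: blue
    3: (255, 0, 255),    # description: pink (assumed magenta)
}


def mask_to_labels(mask: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) RGB mask into an (H, W) array of class indices.

    Pixels matching no class color get -1, so stray brush colors are easy to spot.
    """
    labels = np.full(mask.shape[:2], -1, dtype=np.int64)
    for index, color in CLASS_COLORS.items():
        labels[np.all(mask == np.array(color), axis=-1)] = index
    return labels
```

Counting the `-1` pixels of each mask is a cheap way to catch antialiased edges left by the brush tool before training.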

We randomly choose 52 images from the collection so as to include as many different types of libretti as possible: layouts can vary a lot from one libretto to another, so this diversity is important. We use one third of the input/output pairs for validation.
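The selection and split described above amount to the following sketch. The page identifiers, the seed and the function name are hypothetical, and the validation share is rounded down (17 of the 52 images).

```python
from __future__ import annotations

import random


def split_pages(pages: list[str], n_images: int = 52,
                val_fraction: float = 1 / 3, seed: int = 0):
    """Randomly pick n_images pages and split them into (train, validation) lists."""
    rng = random.Random(seed)                # fixed seed so the split is reproducible
    chosen = rng.sample(pages, n_images)     # sampling without replacement
    n_val = int(len(chosen) * val_fraction)  # one third, rounded down
    return chosen[n_val:], chosen[:n_val]


# Hypothetical identifiers standing in for the 36,000 digitized pages.
all_pages = [f"page_{i:05d}.jpg" for i in range(36000)]
train, val = split_pages(all_pages)
```

Sampling pages uniformly favors long libretti; sampling one page per randomly chosen libretto would be a way to push diversity further.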

We then train the model, which reaches an IoU (intersection over union) of 0.550 after 3162 iterations.
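For reference, intersection over union compares the predicted and annotated pixels of each class. The sketch below computes it per class and averages over the classes present in either label map. This is the standard definition, not necessarily the exact variant computed during our training runs, and the function name is hypothetical.

```python
import numpy as np


def mean_iou(pred: np.ndarray, target: np.ndarray, n_classes: int = 4) -> float:
    """Mean intersection over union between two (H, W) label maps."""
    ious = []
    for c in range(n_classes):
        p, t = pred == c, target == c
        union = np.logical_or(p, t).sum()
        if union == 0:  # class absent from both maps: skip instead of dividing by zero
            continue
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious))
```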

We now have to choose the libretto to test the model on. To do so, we browse the dataset and pick a libretto we know: Antigone.

There are six different Antigone libretti, so we test our model on all of them.

For each image, the model outputs a NumPy array of shape height × width × 4. For every pixel, it contains the probability that the pixel belongs to each of our four classes (background, name, scene, description).

We post-process this output to display the segmentation: a pixel is assigned to a class if its probability for that class exceeds a threshold. We inspect the results for our six Antigone libretti; here is what the best one looks like on an arbitrary page:
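The thresholding step can be written in a few lines of NumPy. This sketch assumes the probabilities are stored as an (H, W, 4) array in the class order background, name, scene, description. The default threshold of 0.5 is a placeholder, since the value we used is not stated here, and the function name is hypothetical.

```python
import numpy as np

CLASSES = ("background", "name", "scene", "description")


def class_masks(probs: np.ndarray, threshold: float = 0.5) -> dict:
    """Turn an (H, W, 4) probability array into one boolean mask per class.

    A pixel belongs to a class when its probability for that class exceeds the
    threshold, so a pixel may fall in several masks (low threshold) or in none.
    """
    if probs.ndim != 3 or probs.shape[-1] != len(CLASSES):
        raise ValueError(f"expected shape (H, W, {len(CLASSES)}), got {probs.shape}")
    return {name: probs[..., i] > threshold for i, name in enumerate(CLASSES)}
```

An alternative is `probs.argmax(axis=-1)`, which assigns every pixel to exactly one class instead.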

[Figure: segmentation of an Antigone page, showing the predicted names (ant_name.jpg), scenes (ant_scene.jpg) and descriptions (ant_des.jpg)]