Rolandi Librettos

Revision as of 12:01, 9 December 2020 by Aurel.mader

Introduction

The Fondo Ulderico Rolandi is one of the greatest collections of librettos (the text booklets of operas) in the world. The collection, which is owned by the Fondazione Cini, consists of around 32'000 librettos spanning the period from the 16th to the 20th century. It is being digitized and made accessible to the public in the online archives of the Fondazione Cini, where 1'110 librettos are currently available.

Project Abstract

The Rolandi Librettos can be considered a collection of many unstructured documents, each of which describes an opera performance. Each document contains information about entities such as the place, the time and the people (e.g. the composer and the actors) involved in the opera. In our project we want to extract as much of this entity information as possible, such as the title of the opera, when and in which city it was performed, and who composed it. By extracting the entity information and linking it to internal and external entities, we can construct one comprehensive data set that describes the Rolandi Collection. Linking to external entities connects our data set to the real world: for example, every city name can be linked to a real place and assigned geographical coordinates (longitude and latitude). Links within the data set would in turn allow us to trace popular operas that were performed several times in different places, or famous directors who directed many operas in different places. In a last step, we want to construct one comprehensive end product that represents the Rolandi Collection as a whole: a visualization of the distribution of the librettos in space and time that potentially also indicates the links between entities.

Planning

The project plan and the tasks assigned to each week are listed below:

| Timeframe | Task | Completion |
|---|---|---|
| Week 4 (07.10) | Evaluate which APIs to use (IIIF)<br />Write a scraper to collect the IIIF manifests from the libretto website | ✅ |
| Week 5 (14.10) | Process the images: apply Tesseract OCR<br />Extract dates and clean the dataset to create the initial DataFrame | ✅ |
| Week 6 (21.10) | Design and develop the initial structure of the visualization (using the dates)<br />Run a manual sanity check on the initial DataFrame<br />Match the list of cities extracted by OCR using search techniques | ✅ |
| Week 7 (28.10) | Remove irrelevant backgrounds from images<br />Extract age and gender from images<br />Design the data model<br />Extract tags, names, birth and death years from the metadata | ✅ |
| Week 8 (04.11) | Get coordinates for each city and translate city names<br />Extract additional metadata (opera title, maestro) from the libretto titles<br />Set up the map and the slider in the visualization, ordered by year | ✅ |
| Week 9 (11.11) | Add metadata to the visualization through an information pane<br />Check in with the Cini Foundation<br />Prepare the wiki outline and the midterm presentation | ✅ |
| Week 10 (18.11) | Compile a list of musical theatres<br />Improve recall and precision of the city information<br />Identify composers and get performers' information<br />Extract corresponding information from the MediaWiki API for entities (theatres etc.) | ✅ |
| Week 11 (25.11) | Integrate the visualization's zoom functionality with the data pipeline to show intra-level information<br />Link similar entities (which directors performed the same play in different cities?) | ✅ |
| Week 12 (02.12) | Serve the website and compute performance metrics for our data analysis<br />Communicate with and get feedback from the Cini Foundation<br />Keep working on the report and the presentation | ✅ |
| Week 13 (09.12) | Finish the project website and work; present our results | ⬜️ |

Methodology

Our data processing pipeline consists of four steps: 1) Data collection 2) Data extraction 3) Data linking 4) Visualization.

Data Collection

First, we use a scraper to obtain the metadata and the images of each libretto from the online libretto archive of the Cini Foundation. In the IIIF framework, every object (in our case, every libretto) is described by a manifest: a .json document that stores metadata and links to a digitized version of the object. With the Python libraries BeautifulSoup and requests we downloaded these manifests and saved them locally. The manifests already contained entity information such as the publication year and a long, detailed title description, which could easily be extracted for each libretto.
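The manifest step can be sketched as follows. This is a minimal illustration, not the project's scraper: it assumes the manifests follow the IIIF Presentation API 2.x layout (`label`, `metadata`, `sequences` → `canvases` → `images`) with plain-string labels and values, and it uses the standard-library `urllib` instead of `requests` so that it runs without extra dependencies. The example manifest and its URLs are hypothetical.

```python
import json
from urllib.request import urlopen


def fetch_manifest(url: str) -> dict:
    """Download one IIIF manifest (a JSON document) and parse it into a dict."""
    with urlopen(url) as response:
        return json.load(response)


def summarize_manifest(manifest: dict) -> dict:
    """Collect the title, the metadata pairs and the image URLs of a IIIF 2.x manifest."""
    # Assumes plain-string labels/values; IIIF also allows language-tagged lists here.
    metadata = {entry["label"]: entry["value"] for entry in manifest.get("metadata", [])}
    image_urls = [
        image["resource"]["@id"]
        for sequence in manifest.get("sequences", [])
        for canvas in sequence.get("canvases", [])
        for image in canvas.get("images", [])
    ]
    return {"title": manifest.get("label"), "metadata": metadata, "images": image_urls}


# Hypothetical manifest; real code would call fetch_manifest("https://example.org/manifest.json").
example = {
    "label": "Example libretto",
    "metadata": [{"label": "Date", "value": "1700"}],
    "sequences": [{"canvases": [
        {"label": "coperta", "images": [{"resource": {"@id": "https://example.org/img/1.jpg"}}]},
    ]}],
}
print(summarize_manifest(example))
```

Keeping download and parsing separate means the saved manifests can be re-parsed offline without hitting the archive again.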

Data Extraction

After obtaining the year, the detailed title description and a link to the digitized images, we were able to extract entity information for each libretto from two main sources: the title description and the copertas of the librettos.

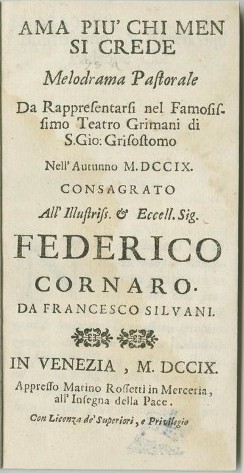

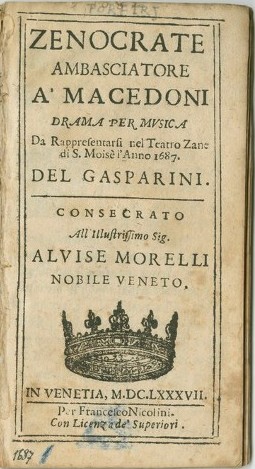

Data Extraction from Copertas

The coperta of a libretto corresponds to a book cover and contains various information about the content and the circumstances of the libretto. Most importantly for us, only the copertas contained information about where the librettos originated. The copertas sometimes also mentioned the composers. To localize the librettos, we therefore had to extract the city information from the copertas.

First, we downloaded the copertas from the Cini online archives. We could download the digitized copertas separately because they were specially tagged in the IIIF manifests.
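Selecting the covers from a manifest might look like the sketch below. We assume here, hypothetically, that a coperta canvas can be recognized because its canvas `label` contains the word "coperta"; the actual tag used in the Cini manifests may differ. `coperta_image_urls` and `download_images` are illustrative names, and the manifest layout is again assumed to be IIIF Presentation 2.x.

```python
from pathlib import Path
from urllib.request import urlretrieve


def coperta_image_urls(manifest: dict, tag: str = "coperta") -> list:
    """Return the image URLs of all canvases whose label mentions the tag (case-insensitive)."""
    urls = []
    for sequence in manifest.get("sequences", []):
        for canvas in sequence.get("canvases", []):
            if tag.lower() in str(canvas.get("label", "")).lower():
                urls.extend(image["resource"]["@id"] for image in canvas.get("images", []))
    return urls


def download_images(urls: list, target_dir: str) -> list:
    """Save each image to target_dir and return the local file paths."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, url in enumerate(urls):
        path = target / f"coperta_{index}.jpg"
        urlretrieve(url, path)
        paths.append(path)
    return paths


# Hypothetical two-page manifest: only the first canvas is tagged as a cover.
manifest = {"sequences": [{"canvases": [
    {"label": "Coperta", "images": [{"resource": {"@id": "https://example.org/img/cover.jpg"}}]},
    {"label": "p. 1", "images": [{"resource": {"@id": "https://example.org/img/p1.jpg"}}]},
]}]}
print(coperta_image_urls(manifest))  # ['https://example.org/img/cover.jpg']
```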

We then made the copertas machine-readable with an optical character recognition (OCR) engine. For this task we chose Tesseract, which has two main advantages: it is easy to use from Python, and it has no rate limits or costs. This mattered because we often reran our code and experimentally also OCRed additional pages, so a rate limit would have set the wrong incentives. On the other hand, the OCR quality of Tesseract was at times very low, and because of this we were not able to extract some entities.
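A minimal OCR sketch with the `pytesseract` wrapper and Pillow, assuming the Tesseract binary and its Italian language data (`ita`) are installed. The `clean_ocr_text` helper is a hypothetical post-processing step added for illustration: it rejoins words hyphenated across line breaks and collapses whitespace before the entity search.

```python
import re


def ocr_image(path: str, lang: str = "ita") -> str:
    """Run Tesseract on one image; needs the tesseract binary plus the chosen language data."""
    # Imported here so that clean_ocr_text() can be used without Tesseract installed.
    import pytesseract
    from PIL import Image

    with Image.open(path) as image:
        return pytesseract.image_to_string(image, lang=lang)


def clean_ocr_text(text: str) -> str:
    """Light normalization of raw OCR output before searching it for entities."""
    text = re.sub(r"-\n(\w)", r"\1", text)  # rejoin words hyphenated across line breaks
    text = re.sub(r"\s+", " ", text)  # collapse line breaks and repeated spaces
    return text.strip()


print(clean_ocr_text("IN VENE-\nZIA\n\nPer  il  Carnovale"))  # IN VENEZIA Per il Carnovale
```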

To extract city information, we used a dictionary approach. We used the Python library geonamescache, which contains lists of cities around the world with information about their population, longitude, latitude and name variations. With geonamescache we compiled a list of Italian cities, which we then searched for in the copertas. Here we already took name variations into account and kept only cities with a modern population greater than 20'000 inhabitants. This procedure yielded a first city extraction, which found a city name for 63% of all our copertas.
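The dictionary search can be sketched as below. `build_city_lookup` and `find_cities` are hypothetical helpers that expect city records shaped like those returned by `geonamescache.GeonamesCache().get_cities()` (keys such as `name`, `countrycode`, `population`, and `alternatenames`, assumed here to be a list of strings); only `load_italian_cities` touches the library itself. Matching whole words with a regular expression is our assumption about how the search could be done, not a description of the project code.

```python
import re


def build_city_lookup(cities: dict, country: str, min_population: int) -> dict:
    """Map every lower-cased name variant of a sufficiently large city to its record."""
    lookup = {}
    for city in cities.values():
        if city["countrycode"] != country or city["population"] <= min_population:
            continue
        for name in [city["name"], *city.get("alternatenames", [])]:
            if name:  # skip empty variants
                lookup[name.lower()] = city
    return lookup


def load_italian_cities(min_population: int = 20_000) -> dict:
    """Build the lookup from the geonamescache gazetteer (library imported lazily)."""
    import geonamescache

    cities = geonamescache.GeonamesCache().get_cities()
    return build_city_lookup(cities, country="IT", min_population=min_population)


def find_cities(text: str, lookup: dict) -> list:
    """Return the canonical names of all lookup cities that occur as whole words in text."""
    lowered = text.lower()
    found = []
    for variant, city in lookup.items():
        if city["name"] not in found and re.search(rf"\b{re.escape(variant)}\b", lowered):
            found.append(city["name"])
    return found


# Toy records in the geonamescache shape (hypothetical values).
toy_cities = {
    "1": {"name": "Venice", "countrycode": "IT", "population": 270_000,
          "alternatenames": ["Venezia", "Venetia"]},
    "2": {"name": "Vienna", "countrycode": "AT", "population": 1_700_000,
          "alternatenames": ["Wien"]},
}
lookup = build_city_lookup(toy_cities, country="IT", min_population=20_000)
print(find_cities("In Venezia, per il Carnovale", lookup))  # ['Venice']
```

Mapping all variants to one canonical record means "Venezia" and "Venetia" both resolve to the same city, which is what later makes the coordinates and the linking consistent.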

We improved the city extraction by 1) also considering incorrectly written or 'fuzzy' city names, 2) adding large European cities and 3) excluding very unlikely city extractions:

1) Given the sub-optimal OCR quality of Tesseract, many city names were misspelled. To account for this, we selected the 10 most common cities from our first extraction and searched the copertas for very similar variations of their names using similarity matching.

2) We extended our city list to central European cities with a modern population greater than 150'000 inhabitants.

3) We ran a sanity check on the extracted cities and excluded cities that were unlikely to be correct.

With these measures we improved the quality of our city extraction and increased the city extraction rate to 73%.
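The fuzzy matching in step 1 can be approximated with the standard-library `difflib`. This sketch assumes a word-window comparison and a similarity threshold of 0.8; both are our own illustrative choices, since the exact similarity measure and threshold used in the project are not specified here.

```python
from collections import Counter
from difflib import SequenceMatcher


def most_common_cities(first_pass: list, k: int = 10) -> list:
    """The k cities found most often by the exact dictionary search."""
    return [name for name, _ in Counter(first_pass).most_common(k)]


def fuzzy_city_matches(text: str, city_names: list, threshold: float = 0.8) -> list:
    """Return the city names that approximately match a word window in the OCR text."""
    words = text.split()
    hits = []
    for name in city_names:
        size = len(name.split())  # compare multi-word names against windows of equal length
        for start in range(len(words) - size + 1):
            candidate = " ".join(words[start:start + size]).strip(".,;:")
            if SequenceMatcher(None, candidate.lower(), name.lower()).ratio() >= threshold:
                hits.append(name)
                break
    return hits


top = most_common_cities(["Venezia", "Bologna", "Venezia", "Roma", "Bologna", "Venezia"], k=2)
print(top)  # ['Venezia', 'Bologna']
print(fuzzy_city_matches("IN VCNEZIA, MDCCXX", top))  # ['Venezia']
```

Restricting the fuzzy search to the most frequent cities keeps the number of false positives down: with a loose threshold, every short city name in a large gazetteer would otherwise match some OCR fragment.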

Data Extraction from Titles

- composer

- location

-- from title information

-- using regex to find chunk of text that contains the location

-- search for location using spacy on the extracted chunk of text

-- use geopy to find latitude and longitude of the extracted location

-- use kmeans to cluster the extracted locations (make invariant to small changes in name)

-- infer latitude, longitude and location of a place if another element in the cluster has it

- title

- genre

- occasion
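The last two location steps (geocoding, and inferring the location and coordinates of a place from another member of its cluster) might be sketched as follows. The cluster labels are assumed to come from the k-means step, which is not shown; the record layout, the `user_agent` string and the function names are hypothetical. `geocode` uses geopy's `Nominatim` geocoder and is imported lazily, so the propagation step runs without network access.

```python
def geocode(name: str):
    """Return (latitude, longitude) for a place name via Nominatim, or None if not found."""
    from geopy.geocoders import Nominatim  # lazy import: needs geopy and network access

    location = Nominatim(user_agent="rolandi-librettos-sketch").geocode(name)
    return (location.latitude, location.longitude) if location else None


def propagate_within_clusters(records: list) -> list:
    """Fill in location and coordinates from an already geocoded member of the same cluster."""
    reference = {}
    for record in records:
        if record["lat"] is not None and record["lon"] is not None:
            reference.setdefault(record["cluster"], record)  # first geocoded member wins
    filled = []
    for record in records:
        ref = reference.get(record["cluster"])
        if ref is not None and (record["lat"] is None or record["lon"] is None):
            record = {**record, "location": ref["location"], "lat": ref["lat"], "lon": ref["lon"]}
        filled.append(record)
    return filled


# Hypothetical records: "Vinezia" failed to geocode but shares cluster 0 with "Venezia".
records = [
    {"raw": "Venezia", "cluster": 0, "location": "Venezia", "lat": 45.44, "lon": 12.33},
    {"raw": "Vinezia", "cluster": 0, "location": None, "lat": None, "lon": None},
    {"raw": "Bologna", "cluster": 1, "location": None, "lat": None, "lon": None},
]
for record in propagate_within_clusters(records):
    print(record["raw"], record["location"], record["lat"], record["lon"])
```

The function returns new dicts instead of mutating the input, so the raw extraction stays available for the evaluation below.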

Metadata extraction from copertas

- place

- composer

Visualization

- place

- time

- linking entities

- others

Quality assessment

Evaluation

First, we evaluate how many entities of each class we could extract, in absolute numbers and as a percentage of the number of available librettos. In information-retrieval terms, this retrieval rate corresponds to the recall plus the error rate of our extraction, i.e. it counts every libretto for which an entity was extracted, whether correct or not. The relative and absolute retrieval rates are shown in the table below:

| Feature Extraction | Cities | Theaters | Composer | Genre | Occasion |

|---|---|---|---|---|---|

| Relative | 72.97% | 76.21% | 27.74% | 95.04% | 1.62% |

| Absolute | 810 | 846 | 308 | 1055 | 18 |
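To make the relation between the retrieval rate and recall explicit: under the simplifying assumption that every one of the $N$ librettos contains a true entity of the class, $N = TP + FN$, and the share of librettos with an extraction splits into the recall and an error term. The relative values in the table correspond to the absolute counts divided by the 1'110 available librettos, e.g. 810 / 1'110 ≈ 72.97% for cities.

```latex
\text{retrieval rate} = \frac{TP + FP}{N}
  = \underbrace{\frac{TP}{TP + FN}}_{\text{recall}} + \frac{FP}{N},
  \qquad \text{if } N = TP + FN
```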

This, however, does not tell us what percentage of the retrieved entities is actually correct. Therefore, in a second step, we analyze which percentage of our entities is correct. To compute this, we randomly selected a subset of 20 librettos and annotated the ground truth by hand. By comparing our extracted entities with this ground truth, we can compute confusion matrices and metrics such as precision and recall.


Given the ground truth and our predicted labels, we can now calculate precision and recall for each feature extraction. Recall refers to the share of true entities that our extraction finds, and precision to the share of predicted entities that are correct (true positives over predicted positives). The table below shows the precision and recall of each feature extraction; the number of observations on which each metric is based is given in brackets.

Please note that for the linking of entities only correctly extracted entities were considered, so the number of observations for an extracted entity and for the linking of that same entity can differ.

| Metric | Cities | Composer | Theaters | Title | Genre | City Localization | Theater Localization | Title Wiki Data Linking |

|---|---|---|---|---|---|---|---|---|

| Precision | 92.3% (N=13) | 100% (N=1) | 83.3% (N=12) | 80% (N=20) | 100% (N=20) | 100% (N=8) | 100% (N=12) | 66.6% (N=20) |

| Recall | 63.2% (N=19) | 25% (N=4) | 77% (N=13) | 100% (N=16) | 100% (N=20) | 100% (N=8) | 0.44% (N=12) | 77% (N=20) |
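The metrics above can be reproduced from hand annotations with a few lines of Python. This is a sketch under our own counting convention (a wrong extraction counts as a false positive and, if the libretto has a true entity, also as a false negative); the function names are illustrative. Reading the bracketed N as TP + FP for precision and TP + FN for recall, the Cities column implies 12 true positives, 1 false positive and 7 false negatives, which the last line checks.

```python
def confusion_counts(truth: list, predicted: list) -> tuple:
    """Count true positives, false positives and false negatives for one entity class.

    Each position is one libretto; None means "no entity" in the ground truth or
    "nothing extracted" in the prediction.
    """
    tp = fp = fn = 0
    for true_value, predicted_value in zip(truth, predicted):
        if predicted_value is not None:
            if predicted_value == true_value:
                tp += 1
            else:
                fp += 1  # extracted something, but not the true entity
        if true_value is not None and predicted_value != true_value:
            fn += 1  # the true entity was missed or replaced by a wrong one
    return tp, fp, fn


def precision(tp: int, fp: int) -> float:
    """Share of predicted entities that are correct: TP / (TP + FP)."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of true entities that were found: TP / (TP + FN)."""
    return tp / (tp + fn) if tp + fn else 0.0


# Hypothetical annotations for four librettos.
truth = ["Venezia", "Bologna", None, "Roma"]
predicted = ["Venezia", "Milano", "Napoli", None]
print(confusion_counts(truth, predicted))  # (1, 2, 2)

# Counts back-calculated from the Cities column (N=13 for precision, N=19 for recall).
print(f"{precision(12, 1):.1%} {recall(12, 7):.1%}")  # 92.3% 63.2%
```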

Reliability

- how reliable the extraction is

- what are the limitations (i.e. theaters changing names etc)

Efficiency of algorithms

- both computational and qualitative

- how well they can generalize to new data

Results

- small analysis of results

Motivation

- speed up metadata extraction

- extend existing metadata

- reduce existing metadata to atomic entities

- visualize metadata in an interactive and understandable way

Realisation

- description of the realization (?)