Switzerland and the Transatlantic Slavery

Amina.matt (talk | contribs) |

Yichen.wang (talk | contribs) |

||

| Line 183: | Line 183: | ||

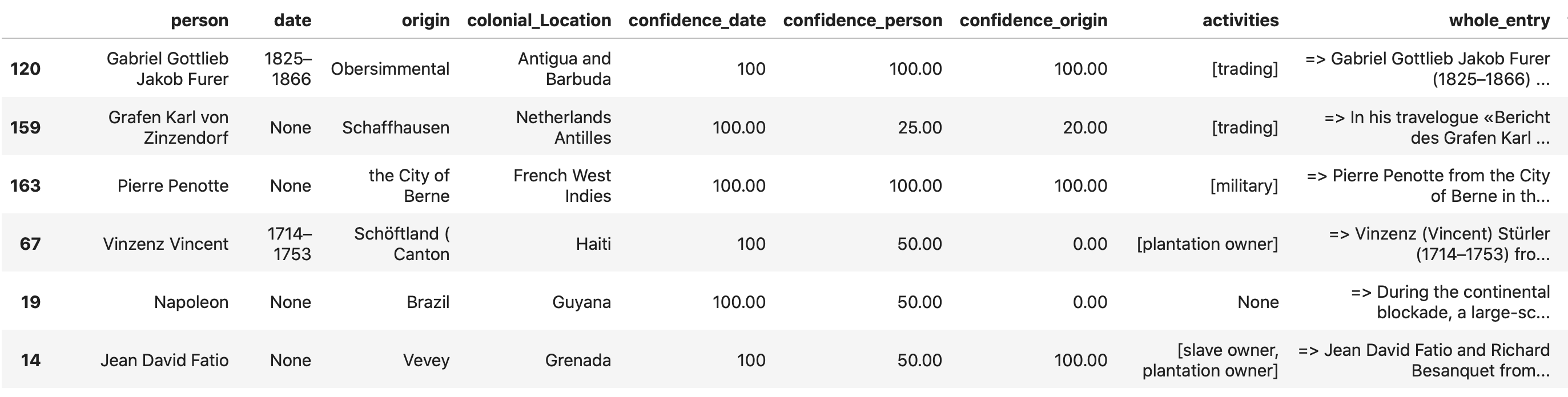

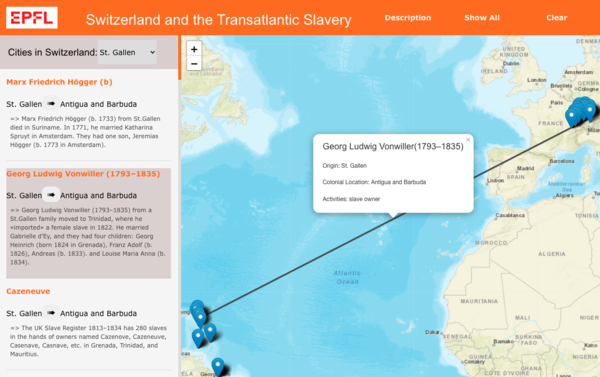

We used '''Javascript''', '''HTML''', and '''CSS''' to implement the visualisation. In order to display the map and draw the connections between places, Javascript library '''Leaflet.js''' is used. We store the extracted information in '''GeoJSON''' format for the map implementation because is a simple open standard format to store both geographical and non-spatial features. | We used '''Javascript''', '''HTML''', and '''CSS''' to implement the visualisation. In order to display the map and draw the connections between places, Javascript library '''Leaflet.js''' is used. We store the extracted information in '''GeoJSON''' format for the map implementation because is a simple open standard format to store both geographical and non-spatial features. | ||

[[File:map01.png|600px|center|thumb|This | [[File:map01.png|600px|center|thumb|This visualization screenshot shows all the connections between Switzerland and colonial locations in our dataset .]] | ||

When the "Show All" button is clicked, the map displays all the connections, then click on each line, key information about the entry will popup. | When the "Show All" button is clicked, the map displays all the connections, then click on each line, key information about the entry will popup. | ||

With the dropdown on the text panel on the left, user can filter the list based on the origin city in Switzerland. | With the dropdown on the text panel on the left, the user can filter the list based on the origin city in Switzerland. Click on the name of the two places will zoom the corresponding location on the map. Click on the arrow will draw the line between the two places. | ||

[[File:Mao03.png|600px|center|thumb|This visualisation screenshot shows one connection of a given entry.]] | [[File:Mao03.png|600px|center|thumb|This visualisation screenshot shows one connection of a given entry.]] | ||

Revision as of 00:13, 22 December 2021

Introduction

In the last decade, the narrative that Switzerland has nothing to do with slave trade, slavery and colonialism has been severely challenged.[1] [2]

Between the 16th and the 19th centuries, a number of Swiss were involved in slavery, the slave trade, and colonial activities. Swiss trading companies, banks, city-states, family enterprises, mercenary contractors, soldiers, and private individuals participated in and profited from the commercial, military, administrative, financial, scientific, ideological, and publishing activities necessary for the creation and the maintenance of the Transatlantic slavery economy. In this project, focusing on the Caribbean Community member states, we are interested in discovering the traces of the colonial past of Switzerland.

Our primary source is the CARICOM Compilation Archive written by Hans Fässler, MA Zurich University, a historian from St. Gallen (Switzerland).

Motivation

The CARICOM Compilation Archive (CCA) is a single-page website with contents categorized by colonial location. Each entry begins with an arrow. The author, Hans Fässler, also discussed with us the issue that the website provider is warning about the growing content of the CCA. Although the archive is a very informative source about the colonial past of Switzerland, its format certainly creates an obstacle for potentially interested readers who want to learn from it in depth. The motivation of this project is to uncover the previously lesser-known history of Switzerland and provide a framework to visualize the content of the archive in a more accessible and more interactive way.

In our project, we extract the following information about each entry in the archive. This set of properties has been validated as relevant and valuable by Hans Fässler:

- Person's name

- City of origin in Switzerland

- Colonial location

- Date of birth and death of the person or the active date in the location

- Colonial activities that this person was involved in

As we discussed with Hans, he keeps the full content of each entry because it contains more detailed information. We would like to build the map visualization on the information we extract. This makes the entries easier to understand and interpret, since the map provides geographic information that helps readers identify the places. The reader also gets a visual connection between the origin in Switzerland and the colonial locations. Finally, based on the extracted information, we can analyze the involvement of the Swiss in the colonial era.

Project Plan and Milestones

Based on the feedback from the midterm presentation, the objectives have been revised. The material traces have been left for further work, and some data analysis on the existing dataset has been suggested instead.

Step I : Information extraction with NLP tools (Stanford NER, NLTK)

Step II : Visualize the connection between Switzerland and Caribbean colonies

Step III : Highlight the material traces (not enough time to work on)

| Date | Task | Completion |
|---|---|---|
| By Week 4 (07.10) | | ✓ |
| By Week 6 (21.10) | | ✓ |
| By Week 10 (25.11) | Step I, Step II | ✓ |
| By Week 11 (02.12) | Step I, Step II | ✓ |
| By Week 12 (09.12) | Step II | ✓ |
| By Week 13 (16.12) | Step II, Overall | ✓ |
| By Week 14 (22.12) | Overall | ✓ |

Methodology

The methodology of our project is divided into three steps: text processing, data enrichment with geographical databases, and data visualization and analysis.

Text processing

In the [primary text source](https://louverture.ch/cca/), each section contains a list of items (entries). Most items are separated by a line break and begin with the string **=>**. Each item references a different actor of the colonial enterprise. The first step is to retrieve each item separately and append its index. This index is used for colonial location retrieval, since the table of contents is mainly organized by colonial location (some sections do not refer explicitly to a geographical location and are treated separately).

The text items themselves are processed with natural language tools such as NLTK for tokenization and Stanford NER for named entity recognition (NER) and BIO tagging.

NER is a text processing method that recognizes and tags words that refer to named entities. In our case we are interested in the 'PERSON' tag (first name or last name), as well as the location (city, region, country) and date tags. In addition, we run [BIO](https://en.wikipedia.org/wiki/Inside–outside–beginning_(tagging)) tagging, where the named entities are labeled based on their position (Beginning-Inside-Outside) with respect to other named entities. This allows the tokens of a person tag to be grouped into a single string. An example for both steps is given below.
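The item retrieval step can be sketched as follows. This is a minimal illustration, not the project's actual code: the function name `split_items`, the dictionary keys, and the choice to attach a section index to each item are assumptions made for the example. It only relies on the fact, stated above, that most items start with the string `=>`.

```python
import re

def split_items(section_text, section_index):
    """Split one archive section into entries that begin with '=>'.

    `section_index` is a hypothetical identifier of the section in the
    table of contents; it is stored with each item so that the colonial
    location can be looked up later.
    """
    # Split wherever a line starts with the '=>' marker.
    parts = re.split(r"(?m)^\s*=>\s*", section_text)
    items = []
    # parts[0] is whatever precedes the first marker (e.g. a section intro).
    for part in parts[1:]:
        text = " ".join(part.split())  # collapse line breaks and extra spaces
        if text:
            items.append({"section": section_index, "text": text})
    return items

sample = "Section intro\n=> First entry from Geneva\n=> Second entry from Basel\n"
for item in split_items(sample, section_index=3):
    print(item)
```

Items that are not introduced by `=>` would be merged into the preceding item by this sketch, which is one reason a manual check of the split remains useful.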

NER-tagging

('=', 'O'),

('>', 'O'),

('Jean', 'PERSON'),

('Huguenin', 'PERSON'),

('(', 'O'),

('1685–1740', 'O'),

(')', 'O'),

('from', 'O'),

('Le', 'O'),

('Locle', 'ORGANIZATION'),

('(', 'ORGANIZATION'),

('Canton', 'ORGANIZATION'),

('of', 'ORGANIZATION'),

('Neuchâtel', 'ORGANIZATION'),

(')', 'O'),

BIO-tagging

('=', 'O'),

('>', 'O'),

('Jean Huguenin', 'PERSON'),

('(', 'O'),

('1685–1740', 'O'),

(')', 'O'),

('from', 'O'),

('Le', 'O'),

('Locle ( Canton of Neuchâtel', 'ORGANIZATION'),
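The grouping shown in the two listings above can be reproduced with a short sketch. It assumes the input is a list of `(token, tag)` pairs such as the one a NER tagger returns, and it simply merges consecutive tokens that share the same non-`O` tag; the function name `merge_entities` is hypothetical and this is not necessarily how the project implements the step.

```python
from itertools import groupby

def merge_entities(tagged_tokens):
    """Merge runs of consecutive tokens that carry the same entity tag."""
    merged = []
    for tag, group in groupby(tagged_tokens, key=lambda pair: pair[1]):
        words = [word for word, _ in group]
        if tag == "O":
            # Tokens outside any entity are kept one by one.
            merged.extend((word, "O") for word in words)
        else:
            # Tokens inside the same entity run become a single string.
            merged.append((" ".join(words), tag))
    return merged

tagged = [
    ("=", "O"), (">", "O"),
    ("Jean", "PERSON"), ("Huguenin", "PERSON"),
    ("(", "O"), ("1685–1740", "O"), (")", "O"),
    ("from", "O"), ("Le", "O"),
    ("Locle", "ORGANIZATION"), ("(", "ORGANIZATION"),
    ("Canton", "ORGANIZATION"), ("of", "ORGANIZATION"),
    ("Neuchâtel", "ORGANIZATION"), (")", "O"),
]
print(merge_entities(tagged))
```

Note that this simplification cannot separate two distinct entities of the same type that directly follow each other, which a full BIO scheme distinguishes with its beginning marker.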

The NER isn't completely reliable, and we can already notice some mislabeling. The limitations of NER are discussed in the Limitations section below.

Retrieving the relevant information requires defining which of the person, location and date tags relate to the main character of the text. Indeed, a description mentions multiple persons, such as relatives or bosses, and multiple locations, such as the place of origin but also, for instance, the place where a brother was baptized. To sort among the possibilities, we use pattern matching to match the structure of the different named entities against a syntax pattern. Our model contains the two schemas described below. With these two schemas we can recognize around 75% of the items retrieved.

Pattern matching is efficient enough that it alone is used to retrieve the origin location. We find the first occurrence of the word 'from' and retrieve the following strings as the location. Our model accounts for several variations (e.g. 'the City of Geneva', 'Le Locle').

The other information relevant to our dataset is the activities in which the main person was involved. The categorization is difficult because many characters are involved in multiple activities and because their relatives' activities are often also recounted in the description. Based on our discussion with Hans Fässler and the study of our primary sources, the following categories are relevant:
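As an illustration of the 'from' rule, the sketch below uses a regular expression that skips an optional "the City of" style prefix and then captures a run of capitalized words. The pattern, the list of prefixes, and the function name `extract_origin` are assumptions made for this example; the project's actual rules may differ.

```python
import re

# 'from', an optional "the", an optional "City/Town/Canton of",
# then one or more capitalized words (accented capitals included).
ORIGIN_PATTERN = re.compile(
    r"\bfrom\s+"
    r"(?:the\s+)?(?:(?:City|Town|Canton)\s+of\s+)?"
    r"([A-ZÀ-Ý][\w.'-]*(?:\s+[A-ZÀ-Ý][\w.'-]*)*)"
)

def extract_origin(text):
    """Return the place name following the first usable 'from', or None."""
    match = ORIGIN_PATTERN.search(text)
    if match is None:
        return None
    # Drop a sentence-final period while keeping abbreviations like "St. Gallen".
    return match.group(1).rstrip(".")

print(extract_origin("Jean Huguenin (1685–1740) from Le Locle (Canton of Neuchâtel)"))
print(extract_origin("a merchant from the City of Geneva, active in trade"))
```

Because the pattern requires a capitalized word, a 'from' followed by a year or a lowercase word is skipped and the next 'from' is tried, which is slightly more permissive than taking strictly the first occurrence.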

trading = ['company', 'companies', 'merchants', 'merchant']

military = ['soldier', 'captain', 'lieutenant', 'commander', 'regiment', 'rebellion', 'troops']

plantation = ['plantation', 'plantations']

slave_trade = ['slave ship', 'slave-ship']

slave_owner = ['slaves', 'slave', 'slave-owner']

racist = ['racism', 'racist', 'races']

The last category is related to the structural contributions of Swiss people. These include participation in "Anti-Black Racism and Ideologies Relevant to Caribbean Economic Space", "Marine Navigation" and "African and European Logistics". The Marine Navigation section primarily concerns the development of navigation tools for colonial powers, and the logistics contributions relate to banking or insurance companies.

Finally, the description contains many details that are worth keeping. Once the relevant information for data analysis and visualization has been extracted, the full entry is added to the dataset. An example is given below.
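One way to apply the keyword lists above is a simple whole-word search over the lowercased entry text. The sketch below is an assumption about how such a categorization could work, not the project's confirmed implementation; the function name `categorize` is hypothetical, while the keyword lists are the ones given above.

```python
import re

CATEGORIES = {
    "trading": ["company", "companies", "merchants", "merchant"],
    "military": ["soldier", "captain", "lieutenant", "commander",
                 "regiment", "rebellion", "troops"],
    "plantation": ["plantation", "plantations"],
    "slave_trade": ["slave ship", "slave-ship"],
    "slave_owner": ["slaves", "slave", "slave-owner"],
    "racist": ["racism", "racist", "races"],
}

def categorize(text):
    """Return every category with at least one keyword present as a whole word."""
    lowered = text.lower()
    found = []
    for category, keywords in CATEGORIES.items():
        # \b keeps 'races' from matching inside a longer word such as 'braces'.
        if any(re.search(r"\b" + re.escape(k) + r"\b", lowered) for k in keywords):
            found.append(category)
    return found

print(categorize("He was a merchant and owned a plantation with slaves."))
```

An entry can receive several categories, which matches the observation that many persons were involved in multiple activities. Note that 'slave ship' also triggers the slave_owner category through the keyword 'slave', so overlapping keywords would need a priority rule in a stricter setup.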

Levels of confidence

For origin, date and person we calculate an accuracy value that indicates the level of confidence we have in the retrieved attribute. Note that there is no confidence level for the colonial location property, as it comes directly from the author and is unambiguous.

Origin accuracy: The origin location is found according to the schemas presented above. However, several locations can appear in the same portion of text, so the location we are looking for might be further away in the text. By counting the Swiss cities present in the text, we compute a level of confidence that is inversely proportional to that count.

Date and person accuracy: Both the date and the person are retrieved from the NER tags, and their accuracy levels are calculated from the tag occurrences. Following the same argument, the accuracy is the inverse of the number of tag occurrences.
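The inverse-count idea can be written down in a few lines. This sketch assumes a proportionality constant of 1 (confidence = 1 / count), counts each distinct Swiss city once, and returns 0 when nothing was found; these choices, the function names, and the `swiss_cities` list are assumptions for illustration.

```python
def inverse_count_confidence(count):
    """Confidence as the inverse of the number of competing candidates."""
    if count <= 0:
        return 0.0  # nothing retrieved, so no confidence
    return 1.0 / count

def origin_confidence(text, swiss_cities):
    """Confidence for the origin, from the distinct Swiss cities in the text."""
    mentioned = {city for city in swiss_cities if city in text}
    return inverse_count_confidence(len(mentioned))

def tag_confidence(tagged_entities, tag):
    """Confidence for a date or person, from the occurrences of its NER tag."""
    count = sum(1 for _, entity_tag in tagged_entities if entity_tag == tag)
    return inverse_count_confidence(count)

cities = ["Geneva", "Basel", "Bern"]
print(origin_confidence("from Geneva, later active in Basel and Geneva", cities))
print(tag_confidence([("Jean Huguenin", "PERSON"), ("(", "O")], "PERSON"))
```

With a single candidate the confidence is 1, and it halves as soon as a second candidate appears, which explains why long entries mentioning many Swiss places score low even when the extracted origin is correct.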

Dataset enrichment with geographical databases

One of the goals of this work is to visualize the archive content on a geographical map. We add geographical information for both colonial and Swiss locations using the following methods.

For colonial locations

Colonial locations are retrieved from the table of contents, which organises the corpus mainly by country. A few exceptions are regions from the Caribbean economic space, states for North America and other indications for structural contributions. Our model geolocalizes countries based on the geographical coordinates of their capitals, and North American states based on their capitals too. Two different datasets are used, one for countries and one for U.S. states.

For the regions from the Caribbean economic space, the French West Indies are mapped to Guadeloupe and the Danish West Indies to the U.S. Virgin Islands. Based on the content of the Southern Africa section, we used South Africa as the reference region, and finally the East Indies are mapped to Indonesia.

The structural contributions are more difficult to map; indeed, as mentioned by the archive author, "they cannot be assigned to one single Caribbean country". We decided to map them to Switzerland, in order to highlight that some contributions didn't take place abroad but were still part of the European colonial project. A finer-grained retrieval would make it possible to extract a more specific location for text items in sections concerning several locations.

For origin locations (Switzerland)

There are 194 origins with no geographic information, which represent 84 different locations. Of these 194 entries without geographical coordinates, 57 were not defined to begin with.
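The mapping rules for colonial locations can be summarized as a lookup table plus a fallback. In the sketch below, the region-to-reference mapping comes from the text above, while the function name `resolve_location`, the `capital_coords` lookup and the set of structural section titles are hypothetical inputs that stand in for the country and U.S. state datasets.

```python
# Regions of the economic space and the reference location used for each.
REGION_TO_REFERENCE = {
    "French West Indies": "Guadeloupe",
    "Danish West Indies": "U.S. Virgin Islands",
    "Southern Africa": "South Africa",
    "East Indies": "Indonesia",
}

def resolve_location(section_title, capital_coords, structural_sections):
    """Return (reference name, (lat, lon) or None) for a section title.

    `capital_coords` maps a country or state name to the coordinates of
    its capital; `structural_sections` lists the section titles that
    describe structural contributions.
    """
    if section_title in structural_sections:
        name = "Switzerland"  # structural contributions are mapped to Switzerland
    else:
        # Regions are replaced by their reference; countries map to themselves.
        name = REGION_TO_REFERENCE.get(section_title, section_title)
    return name, capital_coords.get(name)

# Approximate, illustrative coordinates only.
coords = {"Guadeloupe": (16.0, -61.7), "Switzerland": (46.9, 7.4)}
print(resolve_location("French West Indies", coords, {"Marine Navigation"}))
print(resolve_location("Marine Navigation", coords, {"Marine Navigation"}))
```

Returning `None` for the coordinates when a name is missing from the lookup makes entries without geographic information easy to count afterwards.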

Visualization

We used JavaScript, HTML, and CSS to implement the visualisation. The JavaScript library Leaflet.js is used to display the map and draw the connections between places. We store the extracted information in GeoJSON format for the map implementation because it is a simple open standard format that stores both geographical and non-spatial features.

When the "Show All" button is clicked, the map displays all the connections. Clicking on a line opens a popup with key information about the entry.

With the dropdown on the text panel on the left, the user can filter the list by origin city in Switzerland. Clicking on the name of either place zooms to the corresponding location on the map. Clicking on the arrow draws the line between the two places.
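To make the GeoJSON storage concrete, the sketch below shows how one extracted entry could be turned into a GeoJSON `Feature` whose geometry is a line from the Swiss origin to the colonial location. The field names of `entry` and the property names are assumptions made for illustration, and the sample values are placeholders; only the GeoJSON structure itself (feature, geometry, properties, longitude before latitude) follows the standard.

```python
import json

def connection_feature(entry):
    """Build a GeoJSON Feature linking an origin to a colonial location."""
    return {
        "type": "Feature",
        "geometry": {
            "type": "LineString",
            # GeoJSON positions are [longitude, latitude], in that order.
            "coordinates": [
                [entry["origin_lon"], entry["origin_lat"]],
                [entry["colony_lon"], entry["colony_lat"]],
            ],
        },
        # Non-spatial attributes travel in "properties".
        "properties": {
            "person": entry["person"],
            "origin": entry["origin"],
            "colonial_location": entry["colony"],
        },
    }

def to_feature_collection(entries):
    """Wrap all connection features in a single FeatureCollection."""
    return {
        "type": "FeatureCollection",
        "features": [connection_feature(e) for e in entries],
    }

example = {
    "person": "Example Person",
    "origin": "Geneva", "origin_lat": 46.2, "origin_lon": 6.15,
    "colony": "Haiti", "colony_lat": 18.54, "colony_lon": -72.34,
}
print(json.dumps(to_feature_collection([example]), ensure_ascii=False, indent=2))
```

A file produced this way can be loaded by Leaflet's GeoJSON layer, and the properties are then available for the popup shown when a line is clicked.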

Results

Overall, we extract 464 text items from the division of the initial page. With the combination of NER and BIO tagging and syntax structure pattern matching, we can retrieve 75% of the entries.

More precisely, of this set, 117 items have no usable person name or location, which makes them irrelevant (49 entries have no person defined, in 16 entries neither the person nor the location could be defined, and in 52 cases the person and location are in the wrong order with respect to our schema). We are left with 327 entries.

The average confidence levels are 66%, 52% and 37% for date, person and origin respectively. The origin confidence is low, but this reflects the fact that many Swiss cities are referred to in the text. Indeed, since we used syntax matching, we are fairly confident that this number indicates a high occurrence of Swiss places and the complexity of the text rather than bad text processing.

Limitations

The limitations are presented following the methodology steps.

Text processing

Limitation of NER versus pattern recognition. The results of NER processing are not reliable for all tags. For person names, Stanford NER performs reliably and visual inspection shows good results. However, Stanford NER misses many locations: most of them are either not recognized or miscategorized as organizations. These limitations made it worthwhile to use pattern matching.

Dataset enrichment with geographical databases

Data visualization and analysis

The complete archive has 464 items, i.e. entries about different actors. However, retrieving information such as the name and origin of the actor, as well as his activities and the location of those activities, is difficult. The texts can be quite complex and intricate, 'as were the implications of Switzerland in Black Slavery'[^1].

- David Louis Agassiz (1737–1807), uncle of the racist and glaciologist Louis Agassiz (1807–1873), was a financier who left Switzerland for France in 1747 with his friend Jacques Necker in order to work in the Parisian branch of the Thellusson et Vernet bank (investments in colonial companies, links with the slave trade). Until 1770, David Louis Agassiz cooperated with Pourtalès of Neuchâtel via the company «Joseph Lieutaud et Louis Agassiz». Necker was to become Louis XVI’s Minister of Finance, whereas David Louis Agassiz left for Britain where he acquired a considerable fortune and anglicised his name to Arthur David Lewis Agassiz. He was naturalised by a private Act of Parliament in 1766. Agassiz dealt in cotton, silk, sugar, cocoa, coffee, tobacco, and cochineal and had business relations with France, Spain, Portugal, Italy, Germany, Belgium, Denmark, the Netherlands, Sweden, Switzerland, Russia, North and South America and the East and West Indies. In 1776, Francis Anthony Rougemont (1713–1788) from a Neuchâtel family joined the partnership under the name of «Agassiz, Rougemont et Cie.», a company which had close ties with «MM Pourtalès et Cie.» from Neuchâtel (ownership of plantations on Grenada, indiennes industry, banking). Arthur David Lewis Agassiz’s son Arthur Agassiz (1771–1866), cousin of the racist Louis Agassiz, took over the family business, and later formed a company «Agassiz, Son & Company». In 1823, Arthur Agassiz was working in Port-au-Prince (Haiti) with «Jean Robert Bernard et Cie.».

There are 194 origins with no geographic information, which represent 84 different locations that are too small for our method.

Through processing, the number of workable entries is reduced to TO ADD.

For example, Saint-Aubin, Bournens and Bourmens are too small to be in the Swiss cities dataset.

With respect to the above issues the following ideas could be implemented to improve the accuracy:

1)

The second part that transform

[^1] Hans Fässler

Links

Github repository: Colonial-heritage-in-Switzerland

Primary source: caricom archives

Secondary sources: Dictionnaire historique de la Suisse DHS, swissNAME3d