Jerusalem: locating the colonies and neighborhoods

Introduction

The goal of this project is to study the construction of neighborhoods in Jerusalem over time. We collect information about Jerusalem neighborhoods from four sources: the book Jerusalem and its Environs, the Wikipedia category Neighbourhoods of Jerusalem, the Wikidata list of places in Jerusalem, and the Wikidata entity Neighborhood of Jerusalem. Each source describes the neighborhoods with a different focus. We merge this content through matching methods and present it on an organized, visual web page. Through the map interface and the other features of the site, users can get a clear picture of the Jerusalem community. The fuzzy matching approach we use can also be applied to other cities for which multiple sources of information exist, which shows its potential for reuse.

Motivation

The study of the geography and chronology of neighborhoods in Jerusalem can provide valuable insights into the city's past and present. The location of a neighborhood can often reflect the social, economic, and political forces that shaped it, as well as the cultural traditions and values of its residents.

Examining the founding year of a neighborhood can also provide insight into the city's history and development. Visualizing the location and founding year of neighborhoods in Jerusalem can be a powerful tool for understanding the city's past and present. By mapping and analyzing these data, it is possible to gain a deeper understanding of the cultural, social, and economic dynamics of different neighborhoods and the forces that have shaped them.

A city with a history as rich and varied as Jerusalem's has been described in many different accounts. These accounts, drawn from various sources, are an important basis for studying the city. Integrating the information from these sources is therefore one of the focuses of our research.

Existing interactive maps of Jerusalem neighborhoods usually do not include neighborhoods that once existed but have since disappeared, nor do they record when the neighborhoods were built. Our work fills this gap in the study of the history of the Jerusalem community.

Deliverables

- OCR results of Development of Jerusalem neighborhoods information from Jerusalem and its Environs.

- Crawler results from Wikipedia category Neighbourhoods of Jerusalem, Wikidata list of places in Jerusalem and Wikidata entity Neighborhood of Jerusalem.

- Integrated database with multiple information sources after perfect matching and fuzzy matching.

- An interactive and user-friendly website showing the changes in neighborhoods of Jerusalem, which contains:

- A timeline page that illustrates the evolution of the construction of neighborhoods in Jerusalem over time.

- An inhabitant page and an initiative page that show, respectively, the inhabitants and the initiative information for each neighborhood.

- A dedicated page that contains relevant information for each neighborhood.

- A search function that enables users to search for neighborhoods by name.

Methodology

Data collection

OCR method for paper book

Jerusalem and its Environs is a comprehensive book written by Ruth Kark in 2001 that provides in-depth information about the development and history of Jerusalem neighborhoods throughout different time periods. To extract the relevant data from the book, we use OCR (Optical Character Recognition) to scan the text and then proofread the output manually. Manual proofreading is necessary because some community names contain punctuation and annotations, which OCR sometimes misreads.

The data from the book includes important information about each neighborhood, such as its name, year of foundation, number of inhabitants, and initiating entity. In some cases, additional remarks are also included. This information is particularly valuable for our study, as it allows us to analyze the evolution and growth of different neighborhoods over time.

One of the key aspects of our study is the year of foundation for each neighborhood. This information is crucial for understanding the timeline of development in Jerusalem. However, it is important to note that not every neighborhood in the book has a precise year of construction listed. For neighborhoods where the year of foundation is given as an interval (e.g., "1894-1896") or where the construction year is ambiguous (e.g., "1900s" or "end of Mandate"), we choose the first year of the period for further analysis. This allows us to provide a consistent basis for comparison between neighborhoods and to accurately track the evolution of Jerusalem over time.
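
The first-year rule described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function name `first_year` and the sample strings are assumptions. Entries that contain no digits, such as "end of Mandate", return `None` here and would still need a manually assigned year.

```python
import re
from typing import Optional

# Matches the first run of four digits, e.g. "1894" in "1894-1896" or "1900" in "1900s".
YEAR_PATTERN = re.compile(r"\d{4}")


def first_year(raw: str) -> Optional[int]:
    """Return the first four-digit year found in a foundation-date string, or None."""
    match = YEAR_PATTERN.search(raw)
    return int(match.group()) if match else None


# Sample values in the formats mentioned in the text (hypothetical inputs).
for raw in ["1894-1896", "1900s", "1925", "end of Mandate"]:
    print(raw, "->", first_year(raw))
```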

Crawler method for Wikipedia and Wikidata information

To gather data from the internet, we implement a web crawler using the Wikidata API, the requests package, and the BeautifulSoup parser package. The crawler retrieves data from Wikipedia and Wikidata, which are both valuable sources of information about neighborhoods in Jerusalem.

For data on Wikipedia, we are primarily interested in collecting the coordinates of each neighborhood. This information is useful for mapping the locations of different communities and understanding their spatial relationships within the city.

For data on Wikidata, we focus particularly on neighborhoods that have an "inception" attribute, which serves as another source for the founding year of the neighborhoods. Although the data is limited, this information can be counted as an additional source to help us understand the timeline of development in Jerusalem and to track the evolution of different communities over time.
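
As an illustration of reading the "inception" attribute, the sketch below extracts a year from an entity in the JSON layout returned by the Wikidata `wbgetentities` API, where inception is property `P571`. The function name and the hand-written sample entity are assumptions for demonstration; no network request is made, and the project's crawler may be organized differently.

```python
from typing import Optional

INCEPTION = "P571"  # Wikidata property ID for "inception"


def inception_year(entity: dict) -> Optional[int]:
    """Return the year of the first inception claim that carries a value, or None."""
    for claim in entity.get("claims", {}).get(INCEPTION, []):
        datavalue = claim.get("mainsnak", {}).get("datavalue")
        if datavalue is None:
            # "unknown value" and "no value" statements carry no datavalue.
            continue
        time_string = datavalue["value"]["time"]  # e.g. "+1874-00-00T00:00:00Z"
        sign, rest = time_string[0], time_string[1:]
        year = int(rest.split("-", 1)[0])
        return -year if sign == "-" else year
    return None


# Hand-written sample in the wbgetentities layout (not real crawled data).
sample_entity = {
    "claims": {
        "P571": [
            {"mainsnak": {"datavalue": {"value": {"time": "+1874-00-00T00:00:00Z"}}}}
        ]
    }
}
print(inception_year(sample_entity))
```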

It is important to note that there is often a significant amount of overlap in the information that we collect from the internet. In order to create a cohesive and accurate dataset, we clean and match the data from different sources.

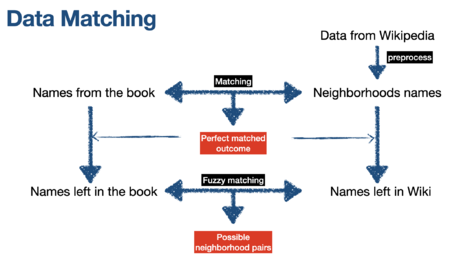

Data matching

Data matching is the process where we identify and combine data from multiple sources in order to create a more complete and accurate dataset.

Preprocessing data from Wikipedia sources

To deal with the overlap between the two Wikipedia sources, we first identify neighborhoods with identical names and merge them. We also check whether two neighborhoods with different names are in fact the same community by testing whether their links redirect to each other. Throughout this process, we retain information about the source of each record. The preprocessed neighborhoods are then matched against the neighborhoods from the book.
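
The merge step can be sketched as below, under the assumption that redirects have already been collected into a mapping from an alternative title to its canonical title. The function name, the source labels, and the sample records are all hypothetical.

```python
def merge_wikipedia_sources(category_pages, list_pages, redirects):
    """Merge two name -> record mappings, folding redirected titles into one entry.

    `redirects` maps an alternative title to its canonical title (assumed input).
    Each merged entry keeps every name seen and the sources it came from.
    """
    merged = {}
    for source_label, pages in (("category", category_pages), ("list", list_pages)):
        for name, record in pages.items():
            canonical = redirects.get(name, name)
            entry = merged.setdefault(
                canonical, {"names": set(), "sources": set(), "fields": {}}
            )
            entry["names"].add(name)
            entry["sources"].add(source_label)
            for key, value in record.items():
                # Keep the first non-empty value seen for each field.
                if value is not None:
                    entry["fields"].setdefault(key, value)
    return merged


# Made-up records for illustration only.
category = {"Example Quarter": {"coordinates": (1.0, 2.0)}, "Old Colony": {"coordinates": None}}
places = {"Example Qtr": {"coordinates": None}, "Old Colony": {"coordinates": (3.0, 4.0)}}
redirects = {"Example Qtr": "Example Quarter"}
merged = merge_wikipedia_sources(category, places, redirects)
```

Keeping the `names` and `sources` sets on each entry preserves the provenance that the text says is retained.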

Matching data from the book and Wikipedia

Perfect matching

For neighborhoods where the name in the book is the same as the name in Wikipedia, we refer to this situation as a perfect match. This means that the information about these communities complements each other in the two sources of information. In these cases, we merge the information from the two sources together to create a more comprehensive dataset.

As a result, 10.3% (17 out of 165) of the neighborhoods from the book are perfectly matched.

Fuzzy matching

For the remaining neighborhoods, we apply fuzzy matching. We use the fuzzywuzzy library for Python to match communities from the book against those from Wikipedia. Fuzzywuzzy uses Levenshtein distance to calculate the similarity between two strings. For each neighborhood from the book, we retrieve the three highest-scoring candidates, sorted by score. Candidates whose score reaches the threshold of 70 are considered successful fuzzy matches. Since the results of fuzzy matching cannot be fully trusted, we also screen them manually.

As a result, 10.9% (18 out of 165) of the neighborhoods from the book are fuzzily matched with neighborhoods from Wikipedia.
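
The top-three selection with a threshold of 70 can be sketched as follows. To keep the example free of third-party dependencies, it uses the standard library's `difflib.SequenceMatcher` as a stand-in for fuzzywuzzy, so the scores may differ from those the project obtained. The function names and sample names are assumptions.

```python
from difflib import SequenceMatcher

THRESHOLD = 70  # minimum score for a candidate to count as a fuzzy match
TOP_K = 3       # number of candidates kept per neighborhood


def similarity(a: str, b: str) -> int:
    """Case-insensitive similarity on a 0-100 scale (difflib stand-in, not Levenshtein)."""
    return round(100 * SequenceMatcher(None, a.lower(), b.lower()).ratio())


def top_candidates(name, choices, limit=TOP_K):
    """Return the `limit` best (choice, score) pairs, highest score first."""
    scored = [(choice, similarity(name, choice)) for choice in choices]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:limit]


def fuzzy_match(book_names, wiki_names, threshold=THRESHOLD):
    """Map each book name to its candidates at or above the threshold."""
    proposals = {}
    for name in book_names:
        kept = [(c, s) for c, s in top_candidates(name, wiki_names) if s >= threshold]
        if kept:
            proposals[name] = kept
    return proposals


# Illustrative spellings; the output is a list of proposals for manual screening.
proposals = fuzzy_match(
    ["Mea Shearim", "Zzzz"],
    ["Mea She'arim", "Talpiot", "Rehavia", "Nahlaot"],
)
print(proposals)
```

The result is a set of proposals rather than final matches, which mirrors the manual screening step described above.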

Database establishment

In this project, we employ MySQL as the database management system. The database is established by installing MySQL on the system and creating a new database. Subsequently, the structure of the database is defined through the creation of tables and the specification of the columns within those tables. The preprocessed data is imported into the tables using the LOAD DATA INFILE statement. Finally, the database is accessed from Python code via the MySQL connector and mysql.connector.connect() function. Throughout the course of the project, SQL queries and the MySQL connector are utilized to retrieve and manipulate data from the database as required.
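
A minimal sketch of the access pattern from Python is shown below. It assumes the `mysql-connector-python` package is installed; the table name `neighborhoods` and the columns `name` and `founding_year` are hypothetical and may not match the project's actual schema.

```python
def connect_to_database(host, user, password, database):
    """Open a MySQL connection (requires the mysql-connector-python package)."""
    import mysql.connector  # imported here so the query helper has no hard dependency

    return mysql.connector.connect(
        host=host, user=user, password=password, database=database
    )


def neighborhoods_founded_by(connection, year):
    """Return (name, founding_year) rows for neighborhoods founded up to `year`.

    The table and column names are assumptions made for this example.
    """
    query = (
        "SELECT name, founding_year FROM neighborhoods "
        "WHERE founding_year <= %s ORDER BY founding_year"
    )
    cursor = connection.cursor()
    try:
        # Parameters are passed separately so the connector handles escaping.
        cursor.execute(query, (year,))
        return cursor.fetchall()
    finally:
        cursor.close()
```

A query of this shape is the kind a timeline view could issue for each selected year.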

Our database is currently not accessible online because we do not have a server to host it. It can therefore only be accessed and modified on the local system where it is installed. Users can reproduce our database by following the instructions on our GitHub page.

Webpage development

Based on the above processed information, we create an interactive website. Below is a description of our website features and interfaces.

Timeline map


Inhabitants map

Initiative map

Jerusalem neighborhoods list

Search function

Result assessment

As a significant portion of our research involves visualizing data, our evaluation of the results is based on our data matching results.

Limitations and Further Work

Limitations

- Our database only contains the founding year of each neighborhood, and does not include the termination year. As a result, all the neighborhoods in our database are displayed on the map, leading to a cluttered appearance. If we had access to the termination year of each neighborhood, we could simplify and improve the clarity of our timeline map.

- Many neighborhoods from the book are not listed on Wikipedia. This may be due to the disappearance of some neighborhoods or the lack of notability for a Wikipedia page. As a result, we are unable to obtain the coordinates of these neighborhoods and include them on the maps. If we had access to additional historical documents, we could better handle our data from the book.

Further Work

Obtaining more resources

- We could acquire more resources from both the internet and books to expand the scope of our source material. For example, the primary source for the founding years is our reference book, with a small amount of additional data from Wikipedia and Wikidata. However, the data on Wikipedia and Wikidata is scattered, and it is difficult to collect all the details with a single crawler, so our dataset is incomplete. We have included a link to Wikipedia on each neighborhood's detail page, and in the future we can try to retrieve more information from Wikidata to make the dataset more complete.

More precise matching method

- Currently, we match neighborhoods based on their names and redirect links, and we manually review the top candidates according to their scores. In the future, with more prior knowledge about Jerusalem, we could improve the matching.

Project Plan and Milestones

| Date | Task | Completion |
|---|---|---|
| By Week 3 | | ✓ |
| By Week 4 | | ✓ |
| By Week 5 | | ✓ |
| By Week 6 | | ✓ |
| By Week 7 | | ✓ |
| By Week 8 | | ✓ |
| By Week 9 | | ✓ |
| By Week 10 | | ✓ |
| By Week 11 | | ✓ |
| By Week 12 | | ✓ |
| By Week 13 | | ✓ |
| By Week 14 | | ✓ |
| By Week 15 | | ✓ |