Data Ingestion of Guide Commerciale

Introduction

Project Timeline & Milestones

| Timeframe | Task | Completion |
|---|---|---|
| Week 4 | | ✓ |
| Week 5 | | ✓ |
| Week 6 | | ✓ |
| Week 7 | | ✓ |
| Week 8 | | ✓ |
| Week 9 | | ✓ |
| Week 10 | | ✓ |
| Week 11 | | ✓ |
| Week 12 | | |
| Week 13 | | |
| Week 14 | | |

Motivation and deliverables

REQUIRED FOR PROJECT: Motivation and description of the deliverables (5%) (>300 words)

Methodology

REQUIRED: Detailed description of the methods (5%) (>500 words)

We approached the problem in two ways: a generalizable pipeline that could process any Guide Commerciale, and a streamlined pipeline that requires more manual annotation but yields better results. We started with the first approach and worked on it for the first couple of months, but came to realize that its margin of error was far too high, which made us pivot to the second approach. The sections below describe each step of both approaches.

Approach 1: General

Pipeline Overview

This process aims to extract, annotate, standardize, and map data from historical documents. By leveraging OCR, named entity recognition (NER), and geocoding, the pipeline converts unstructured text into geographically and semantically meaningful data.

Pipeline

- Process pages by:

  - Convert batch of pages into images

  - Pre-process images for OCR using CV2 and Pillow

  - Use Pytesseract for OCR to convert image to string

- Perform Named Entity Recognition by:

  - Prompt GPT-4o to identify names, professions, and addresses, and to turn these into entries in a table format

  - Standardize addresses that include abbreviations, shorthand, etc.

  - Append "Venice, Italy" to the end of addresses to ensure a feasible location

- Geocode addresses by:

  - Use a geocoding API like LocationHQ to convert the address to coordinates

  - Take top result of search, and append to the map

Divide Up Pages

Since we wanted this solution to work for any Guide Commerciale, not just the ones provided to us, we intended to develop a way to parse a document and identify the pages of interest that contain usable data. We got a working solution that weeded out pages with no information at all, but we never found a reliable way to detect pages with the specific type of data we wanted. One idea was to train a model to identify patterns within each page and group the pages accordingly, but due to timeline restrictions we decided against it. As a result, we never finalized this step for Approach 1 and proceeded with the rest of the pipeline.

Perform OCR

To start, we used PDF Plumber to extract pages from our document and turn them into images. To extract text from these pages, we pre-processed each image with filtering and thresholding using CV2 and Pillow to improve clarity, and then performed OCR using Pytesseract, tweaking certain settings to better accommodate the old script.

Named Entity Recognition

After extracting all text from a page, we prompted GPT-4o to identify names, professions, and addresses, and to turn the text into a table with one entry per person. Since these documents rely on abbreviations and shorthand, we also needed to expand them to their full form. Finally, to make later geocoding possible, we specified that each entry belongs to Venice by appending "Venice, Italy" to the end of each address.
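The standardization step described above can be sketched as follows. This is a minimal illustration, not the project's code: the abbreviation table and the function name `standardize_address` are hypothetical, and a real table would need to distinguish cases such as "San" versus "Santa".

```python
# Hypothetical abbreviation table: these entries are illustrative placeholders,
# not the expansions actually used in the project.
ABBREVIATIONS = {
    "s.": "San",
    "ss.": "Santi",
}

CITY_SUFFIX = "Venice, Italy"


def standardize_address(raw: str) -> str:
    """Expand known abbreviations token by token and append the city suffix."""
    tokens = raw.strip().split()
    # Look each token up case-insensitively; unknown tokens pass through unchanged.
    expanded = [ABBREVIATIONS.get(token.lower(), token) for token in tokens]
    address = " ".join(expanded)
    # Avoid appending the suffix twice if the address already carries it.
    if not address.endswith(CITY_SUFFIX):
        address = f"{address}, {CITY_SUFFIX}"
    return address


if __name__ == "__main__":
    print(standardize_address("s. Martino 2345"))
```

Splitting on whitespace keeps the sketch simple, but it will not expand abbreviations that the OCR has fused with the following word.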

Geocoding

For geocoding, we found LocationHQ to be a feasible solution, as it successfully mapped addresses to nearby locations within Venice. To integrate it into our project, we queried each address in our table. Some queries resolved to a single address, while others returned multiple candidates. Our heuristic in those cases was simply to pick the top candidate, as it appeared to be the closest match each time. Finally, after gathering the coordinates for our addresses, we planned to plot them on a map, but we did not end up pursuing this visualization.
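The top-result heuristic can be sketched independently of any particular geocoding service. In the sketch below, the geocoder is passed in as a plain callable that returns candidates ordered best-first; this interface, the names `geocode_top_result` and `geocode_all`, and the stub with made-up coordinates are all assumptions for illustration and do not reflect the real API's request or response format.

```python
from typing import Callable, Dict, Iterable, Optional, Sequence, Tuple

Coordinates = Tuple[float, float]  # (latitude, longitude)
# Assumed interface: a geocoder returns candidates ordered best match first.
Geocoder = Callable[[str], Sequence[Coordinates]]


def geocode_top_result(address: str, geocoder: Geocoder) -> Optional[Coordinates]:
    """Return the first candidate, or None when the geocoder finds nothing."""
    candidates = geocoder(address)
    return candidates[0] if candidates else None


def geocode_all(
    addresses: Iterable[str], geocoder: Geocoder
) -> Dict[str, Optional[Coordinates]]:
    """Geocode every address, keeping None for addresses that fail to map."""
    return {address: geocode_top_result(address, geocoder) for address in addresses}


def stub_geocoder(address: str) -> Sequence[Coordinates]:
    """Stand-in for a real API call; the coordinates are made up."""
    fake_index = {
        "San Martino 2345, Venice, Italy": [(45.0, 12.0), (45.1, 12.1)],
    }
    return fake_index.get(address, [])


if __name__ == "__main__":
    print(geocode_all(["San Martino 2345, Venice, Italy", "Unknown"], stub_geocoder))
```

Keeping `None` for failed lookups, rather than dropping the address, makes it possible to count later how many entries could not be mapped.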

Approach 2: Streamlined

Pipeline Overview

This approach is a systematic process for extracting, annotating, and analyzing data from historical Venetian Guide Commerciale documents. The aim is to convert unstructured document data into a clean, structured dataset that supports geographic mapping and data analysis.

Pipeline

- Manually inspect entire document for page ranges with digestible data

- Perform text extraction using PDF Plumber

- Semantic annotation with INCEpTION, separating our pages into entries with first names, last names, occupations, addresses, etc.

- Clean and format data?

- What were the steps?

- Map parish to provinces using dictionary

- Plot on a map using parish and number from entry

- Perform data analysis.
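The dictionary-based parish lookup in the list above could look roughly like the sketch below. The table entries, the name `PARISH_TO_PROVINCE`, and the normalization rules are assumptions for illustration; the project's actual dictionary is not reproduced here.

```python
import re
from typing import Optional

# Illustrative entries only: the real dictionary would be built from the source data.
PARISH_TO_PROVINCE = {
    "s. martino": "Castello",
    "s. geremia": "Cannaregio",
}


def normalize_parish(name: str) -> str:
    """Lowercase, restore a missing space after a period, collapse whitespace."""
    # Repairs clumped extractions such as "s.Martino" -> "s. Martino".
    spaced = re.sub(r"\.(?=\S)", ". ", name)
    return " ".join(spaced.lower().split())


def lookup_province(parish: str) -> Optional[str]:
    """Return the mapped province, or None if the parish is not in the table."""
    return PARISH_TO_PROVINCE.get(normalize_parish(parish))


if __name__ == "__main__":
    for parish in ["s.Martino", "S.  Geremia", "unknown parish"]:
        print(parish, "->", lookup_province(parish))
```

Returning `None` for unknown parishes, instead of raising, lets unmapped entries be collected and reviewed by hand.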

Find page ranges of interest

The main difference from Approach 1 is that instead of relying on a program to group our pages, we manually went through the document and identified the range of pages that were of interest to us. This allowed us to enter the next steps of the pipeline with confidence, without the uncertainty of whether a page contained irrelevant data.

Perform Text Extraction

After testing both OCR and direct text extraction, we found that PDF Plumber's function for extracting text directly from the PDF worked much more reliably. We therefore used it for all of our pages, which produced output similar to the OCR but with much higher accuracy. It also removed the need for image pre-processing, making the pipeline much simpler.

Semantic Annotation

For semantic annotation, we moved from GPT-4o to INCEpTION, largely because previous projects had already trained a model for extracting names, addresses, and professions from similar documents. Another major benefit was that we no longer relied on an external API, which removed the cost constraints on our requests. [ADD MORE DETAIL HERE AND REWRITE MAYBE???]

Data Clean Up

[SAHIL WRITE THIS PART PLS]

Plot on a Map

WE HAVEN'T DONE THIS YET

Evaluation of pipeline

In the final stages of the pipeline, there are two types of errors that can occur:

1. The business fails to be mapped to a coordinate.

2. The business successfully maps to a coordinate, but the coordinate is wrong.

Error 1: Initially, roughly 20% of the data could not be mapped.
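One way to keep track of the two error types is to label each geocoded record and report the share of each label. The sketch below assumes a record is a pair of optional coordinates and an optional manual verification flag; this record layout and the label names are hypothetical, and the sample records are invented.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple

Coordinates = Tuple[float, float]
# (coordinates or None, manual check: True / False / None when not checked)
Record = Tuple[Optional[Coordinates], Optional[bool]]


def classify(coords: Optional[Coordinates], verified: Optional[bool]) -> str:
    """Label one record according to the two error types."""
    if coords is None:
        return "unmapped"        # error type 1: no coordinate found
    if verified is False:
        return "wrong_location"  # error type 2: coordinate found but incorrect
    if verified is True:
        return "correct"
    return "unverified"          # mapped, but nobody has checked it yet


def outcome_shares(records: Iterable[Record]) -> Dict[str, float]:
    """Return the fraction of records carrying each label."""
    counts = Counter(classify(coords, verified) for coords, verified in records)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()} if total else {}


if __name__ == "__main__":
    sample = [  # invented records for demonstration
        (None, None),
        ((45.0, 12.0), True),
        ((45.0, 12.0), False),
        ((45.0, 12.0), None),
        ((45.0, 12.0), True),
    ]
    print(outcome_shares(sample))
```

Error 1 can be measured automatically, whereas error 2 requires a manually verified sample, which is why the verification flag is optional.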

Results

Limitations & Future Work

Limitations

OCR Results

In Approach 1, we used the pytesseract library for our OCR, but found it infeasible because of the number of mistakes it made. This led us to switch to PDFPlumber's text extraction utility, which let us extract the text straight from the PDF. The documents we used had already been processed by Google, whose OCR quality is most likely higher than what most people could achieve themselves. A consequence of relying on text extraction is that simply taking photos of a document will not work: the text needs to be embedded in the PDF.

Time and Money

In Approach 1, we intended to use GPT-4o for named entity recognition, as well as a geocoding API for mapping our addresses to the correct locations in Venice. We came to realize that with thousands of entries to process, each possibly requiring multiple requests to each endpoint, this would not be possible without a significant cost in money or time. This was one of the main reasons we pivoted to Approach 2, which cut these dependencies out of our project.

Manual Inspection

For Approach 2 to work, we needed to perform some manual work at two steps in our pipeline. Since our project used a small subset of a large document, this work was manageable with three people, but it is important to keep in mind for larger projects. The first step was after text extraction, where entries that had been split across more than one line had to be manually edited to fit on a single line. The second was during semantic annotation with INCEpTION, where the program sometimes flagged occupations as names, and we had to correct all of these cases by hand.

Language Barrier

Since none of us speak Italian, we could not reliably verify that occupations, names, and other data from our document were correctly annotated. For our annotation work, we proceeded on the assumption that each entry follows the same pattern: the name first, then the occupation, and finally the address.
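The assumed entry pattern (name, then occupation, then address) can be made explicit with a small parser. This is only a sketch of the assumption, not how INCEpTION annotates the text: the comma separator, the `Entry` type, and the example entry are hypothetical.

```python
from typing import NamedTuple, Optional


class Entry(NamedTuple):
    name: str
    occupation: str
    address: str


def parse_entry(line: str, sep: str = ",") -> Optional[Entry]:
    """Split a single-line entry into name, occupation, and address.

    Only the first two separators are used, so the address itself may
    contain further separators. Returns None if the pattern does not fit.
    """
    parts = [part.strip() for part in line.split(sep, 2)]
    if len(parts) != 3 or not all(parts):
        return None
    return Entry(*parts)


if __name__ == "__main__":
    # Invented example entry following the assumed pattern.
    print(parse_entry("Rossi Giovanni, calzolaio, s. Martino 2345"))
```

Entries that return `None` do not fit the assumed pattern and are natural candidates for manual review.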

Metrics

To gain insight into the accuracy of our overall pipeline as well as each step of it, below we have listed each step and the results from manual testing.

Text Extraction

Overview

For our text extraction, we pulled the text directly from our PDF document using PDFPlumber's built-in functions. As these documents had previously been parsed and OCR'd by Google, the accuracy of the OCR is much higher than what we achieved ourselves. Even so, we still noticed errors, and our findings are below.

To measure the performance of our text extraction, we chose five pages at random, manually cross-checked every word on these pages against the original document, and counted the number of errors we found. The criteria for what constitutes an error are as follows:

- If a word does not show up as it is in the original document, it counts as one error.

- If 4 words are clumped together in the PDFPlumber output when they should have spaces in between, this counts as 4 errors.

- If a word has an incorrect letter, it counts as 1 error.

- If any marks are generated that were not intentionally in the old document, that counts as 1 error.

Pages

Page 1:

- Total Words: 312

- Correct Words: 292

- Accuracy: 93.59%

Page 2:

- Total Words: 256

- Correct Words: 254

- Accuracy: 99.22%

Page 3:

- Total Words: 282

- Correct Words: 266

- Accuracy: 94.33%

Page 4:

- Total Words: 278

- Correct Words: 259

- Accuracy: 93.17%

Page 5:

- Total Words: 310

- Correct Words: 300

- Accuracy: 96.77%

Conclusion

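Our final total accuracy comes out to 95.42%, which matches the mean of the five per-page accuracies above. Pooling all five pages instead (1,371 correct words out of 1,438) gives a slightly lower 95.34%.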
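The arithmetic behind the overall figure can be checked with a few lines of Python. The sketch below uses the word counts from the five pages above and computes two aggregates: the mean of the rounded per-page accuracies, and the pooled accuracy over all words. Which of the two was originally intended is an assumption; the variable names are ours.

```python
# (total words, correct words) for Pages 1 to 5, taken from the lists above.
PAGES = [(312, 292), (256, 254), (282, 266), (278, 259), (310, 300)]


def page_accuracy(total: int, correct: int) -> float:
    """Accuracy of one page as a percentage, rounded to two decimals."""
    return round(100 * correct / total, 2)


per_page = [page_accuracy(total, correct) for total, correct in PAGES]

# Mean of the per-page percentages: every page weighs the same.
mean_of_pages = round(sum(per_page) / len(per_page), 2)

# Pooled accuracy: every word weighs the same, so longer pages count more.
total_words = sum(total for total, _ in PAGES)
correct_words = sum(correct for _, correct in PAGES)
pooled = round(100 * correct_words / total_words, 2)

print(per_page)       # [93.59, 99.22, 94.33, 93.17, 96.77]
print(mean_of_pages)  # 95.42
print(pooled)         # 95.34
```

The two aggregates differ because the pages do not all contain the same number of words; the pooled figure is the share of correctly extracted words across the whole sample.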

Most errors occurred where words were written so closely together that Google's OCR merged them into one when they should have been two distinct words. Similarly, some commas were written slightly too far from their word, leading the OCR to separate the comma from the word it belongs to. Only two of the errors were due to a misinterpreted character, which gives us good confidence that the OCR was reliable.

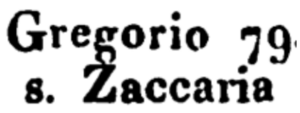

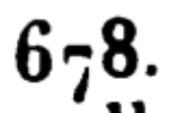

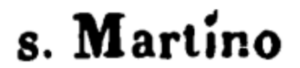

The figures above show examples of the errors we saw during our measuring process. Fig 1 and 2 show the two times that the OCR misinterpreted a character and gave an output that was different from the actual document. In Fig 1, the "g" above the "Z" extended in such a way that the "Z" was read as "Ž", and in Fig 2, the "7" was written so low that the OCR read it as a dash ("-"). As for Fig 3, even though the gap looks clear enough to the human eye, the OCR interpreted these two words as being too close to each other, resulting in "s.Martino" instead of the correct "s. Martino".

Semantic Annotation

Overview

In the end, we decided to use INCEpTION for semantically annotating each of our pages, using a trained model provided to us by the instructors of the course. This model had been used on a similar Guide Commerciale file, and served as a great starting point for us to build off of.

Looking at the performance of this model on five randomly chosen manually cleaned up pages from our text extraction process, we manually checked each entry on these pages, and counted the number of errors we found.

Page 1

- Total entries: 39

- Incorrect entries: 2

- Accuracy: 94.87%

Page 2

- Total entries: 31

- Incorrect entries: 2

- Accuracy: 93.55%

Page 3

- Total entries: 37

- Incorrect entries: 1

- Accuracy: 97.30%

Page 4

- Total entries: 30

- Incorrect entries: 0

- Accuracy: 100%

Page 5

- Total entries: 38

- Incorrect entries: 0

- Accuracy: 100%

Conclusion

Our final total accuracy comes out to 97.14% (170 of 175 entries correct).

Github Repository

Data Ingestion of Guide Commericiale