Extracting Toponyms from Maps of Jerusalem

rubric

Written deliverables (Wiki writing) (40%)

Project plan and milestones (10%) (>300 words)

Motivation and description of the deliverables (10%) (>300 words)

Detailed description of the methods (10%) (>500 words)

Quality assessment and discussion of limitations (10%) (>300 words)

The indicated number of words is a minimal bound. Detailed description can in particular be extended if needed.

Project Timeline

| Timeframe | Task | Completion |
|---|---|---|
| Week 4 | | ✓ |
| Week 5 | | ✓ |
| Week 6 | | ✓ |
| Week 7 | | ✓ |
| Week 8 | | ✓ |
| Week 9 | | ✓ |
| Week 10 | | ✓ |
| Week 11 | | ✓ |
| Week 12 | | |
| Week 13 | | |
| Week 14 | | |

Introduction

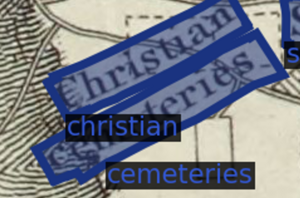

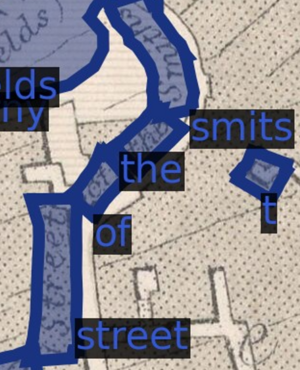

We aim to programmatically and accurately extract toponyms (place names) from historical maps of Jerusalem. With the help of a scene text recognition tool built by the Machines Reading Maps (MRM) team specifically for toponym extraction, we develop a novel label extraction and processing pipeline capable of significant accuracy improvements relative to MRM's mapKurator spotter module alone. We then explore the success of our pipeline during generalization, describe the limitations of our approach, and suggest possibilities for future progress in accuracy or end-user interactivity.

Motivation

Despite the deep-learning-induced boon to scene text recognition (STR) seen in the past decade, STR remains difficult due to the sheer variety of shapes, fonts, colors, languages, and text backgrounds encountered. [1] In the context of historical maps, and of Jerusalem especially, STR issues are compounded by the fact that cartographers do not necessarily agree on toponym spellings (in the case of transliterated Arabic-language toponyms, for example) or, as is true for many biblical toponyms, even on the exact location of certain places.

Jerusalem makes the task doubly difficult, as some biblical sites have no agreed-upon location.

Not everyone has the computational resources to train a state-of-the-art (SOTA) model.

The full mapKurator pipeline, in particular its OpenStreetMap matching step, is not suitable in this setting.

We have the ability to study evolution and disagreement in Jerusalem toponyms by language, time, and location, while at the same time creating an accessible pipeline valuable in other contexts.

Deliverables

- A novel, general-use, and computationally accessible toponym extraction pipeline capable of augmenting pre-trained SOTA neural network models.

- A set of X ground truth toponyms across Y historical maps of Jerusalem.

- A set of X extracted toponyms across Y historical maps of Jerusalem.

- A set of X labels denoting font similarity across Y images of toponyms.

Methodology

MapKurator

Pyramid

Text Rectification

Let $G_{j,k}$ represent subset $k$ of ground truth label $G_j$. Note that because we do not define $G_{j,k}$ to be a proper subset, it is possible that $G_{j,k} = G_j$. Now let the set $S_{j,k} = \{L_1, L_2, ..., L_{p_{j,k}}\}$ refer to the $p_{j,k}$ extracted labels that correspond to $G_{j,k}$ in its entirety. The goal of the Text Rectification stage is to retain the single most accurate extracted label $L_i$ in a given $S_{j,k}$ and exclude the rest. In other words, we filter $S_{j,k}$ such that there remains just one "representative" for $G_{j,k}$.

To organize our extracted labels into the sets $S_{j,k}$, we vectorize each extracted bounding box $P_i$ according to its bottom-left and top-right Cartesian coordinates and run DBSCAN on the resulting four-dimensional vectors. \textcolor{red}{Tack on DBSCAN hyperparameters. Also, is this still true? ->} Outliers are slotted into their own individual $S_{j,k}$.
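The grouping step described above can be sketched as follows. This is a minimal, dependency-free illustration and not the project's actual implementation: the function names `dbscan` and `group_boxes` are hypothetical, and the values of `eps` and `min_samples` are placeholders, since the hyperparameters used in the pipeline are not stated here.

```python
from math import dist

def dbscan(points, eps, min_samples):
    """Minimal DBSCAN: returns one cluster id per point, -1 for noise."""
    labels = [None] * len(points)  # None means "not yet visited"

    def neighbours(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # provisional noise; may become a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbours(j)
            if len(reachable) >= min_samples:
                queue.extend(reachable)  # j is a core point: keep expanding
        cluster += 1
    return labels

def group_boxes(boxes, eps=10.0, min_samples=2):
    """Group (x_min, y_min, x_max, y_max) vectors into candidate sets S_{j,k}.

    eps and min_samples are illustrative placeholders, not the pipeline's values.
    Outliers are placed in singleton sets, as described in the text.
    """
    labels = dbscan(boxes, eps, min_samples)
    next_id = max(labels, default=-1) + 1
    groups = {}
    for idx, lab in enumerate(labels):
        if lab == -1:
            lab = next_id
            next_id += 1
        groups.setdefault(lab, []).append(idx)
    return list(groups.values())

# Three near-identical detections of one toponym and one isolated detection.
boxes = [(0, 0, 100, 20), (2, 1, 101, 22), (1, 0, 99, 19), (300, 300, 400, 330)]
print(group_boxes(boxes))
```

Because each box is treated as a single four-dimensional point, two detections fall in the same set only when both of their corners nearly coincide, which is what distinguishes duplicate detections from partially overlapping ones.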

To filter our $S_{j,k}$ collections down to their most appropriate representatives, we first attempt to retain the label inside $S_{j,k}$ with the highest $C_i$. \textcolor{red}{Go into RANSACK.}

Let $\sigma_{j,k}^{*}$ be the single label from set $S_{j,k}$ after Text Rectification has occurred.
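As a rough sketch of the first filtering rule, the snippet below keeps the highest-scoring label from each set. It assumes that $C_i$ is a per-label confidence score attached to each extracted label; the `Label` class, its field names, and the `rectify` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass

@dataclass
class Label:
    text: str     # recognized string T_i
    box: tuple    # bounding box P_i as (x_min, y_min, x_max, y_max)
    score: float  # assumed to play the role of C_i

def rectify(sets):
    """Return one representative per set: the label with the highest score.

    Ties are resolved in favour of the label that appears first in the set.
    """
    return [max(s, key=lambda label: label.score) for s in sets if s]

sets = [
    [Label("JERUSALEM", (0, 0, 90, 10), 0.91), Label("JERUSALFM", (1, 0, 91, 11), 0.62)],
    [Label("Siloam", (5, 5, 9, 9), 0.40)],
]
print([label.text for label in rectify(sets)])
```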


Text Amalgamation

Let $A_{j} = \{\sigma_{j,1}^{*}, \sigma_{j,2}^{*}, ...,\sigma_{j,r_j}^{*}\}$ refer to the $r_j$ extracted and Text-Rectified labels corresponding to subsets of $G_j$. The goal of the Text Amalgamation stage is to retain a single label from $A_{j}$: the label $\alpha_{j}^{*} = G_{j}$.

This process is performed iteratively. The first step in the amalgamation sequence consists of computing pairwise geometric and textual intersection over minimum (IoM) values between all $\sum_{j=1}^{M}r_j$ labels in the set $R = \{\sigma_{1,1}^*, \sigma_{1,2}^*, ..., \sigma_{1, r_1-1}^*, \sigma_{1, r_1}^*, \sigma_{2, 1}^*, ..., \sigma_{M, r_M-1}^*, \sigma_{M, r_M}^*\}$. For example, suppose we are comparing $\sigma_{a,b}^*$ and $\sigma_{c,d}^*$. In this case, geometric IoM equals the area of $P_{a,b} \cap P_{c,d}$ divided by the area of the smaller polygon. Textual IoM, meanwhile, equals the number of non-unique shared characters in $T_{a,b}$ and $T_{c,d}$ divided by the length of the longer string.

Pairs with geometric IoM value $\gamma_{geom} > 0.75$ and textual IoM value $\gamma_{text} > 0.5$ are considered to exhibit a subset-parent relationship. They are therefore amalgamated, meaning that (1) a new label $\sigma_{a,b,c,d}^*$ is added to $R$ with $P_{a,b,c,d} = P_{a,b} \cup P_{c,d}$ and $T_{a,b,c,d}$ equal to the longer of the strings $T_{a,b}$ and $T_{c,d}$, and (2) both $\sigma_{a,b}^*$ and $\sigma_{c,d}^*$ are dropped from $R$. Once every pair satisfying the $\gamma_{geom}$ and $\gamma_{text}$ conditions has been amalgamated, the sequence begins anew with the updated $R$. The amalgamation stage terminates when $R$ ceases to yield possible amalgamations.
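The amalgamation loop can be illustrated with the simplified sketch below. It makes several assumptions that are not part of the description above: polygons are reduced to axis-aligned rectangles, the union $P_{a,b} \cup P_{c,d}$ is approximated by the rectangle enclosing both boxes, "non-unique shared characters" is read as a multiset intersection of characters, and a label takes part in at most one merge per pass. All function names are hypothetical.

```python
from collections import Counter
from itertools import combinations

def area(box):
    """Area of an (x_min, y_min, x_max, y_max) rectangle; 0 if degenerate."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])

def geometric_iom(a, b):
    """Intersection area divided by the area of the smaller rectangle."""
    inter = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    smaller = min(area(a), area(b))
    return area(inter) / smaller if smaller > 0 else 0.0

def textual_iom(s, t):
    """Shared characters (counted with repetition) over the longer length,
    following the denominator given in the text."""
    shared = sum((Counter(s) & Counter(t)).values())
    longer = max(len(s), len(t))
    return shared / longer if longer else 0.0

def amalgamate(labels, geom_min=0.75, text_min=0.5):
    """Repeatedly merge (text, box) pairs that exceed both thresholds."""
    labels = list(labels)
    merged_any = True
    while merged_any:
        merged_any = False
        used, new = set(), []
        for i, j in combinations(range(len(labels)), 2):
            if i in used or j in used:
                continue  # assumption: one merge per label per pass
            (ti, bi), (tj, bj) = labels[i], labels[j]
            if geometric_iom(bi, bj) > geom_min and textual_iom(ti, tj) > text_min:
                text = ti if len(ti) >= len(tj) else tj  # keep the longer string
                box = (min(bi[0], bj[0]), min(bi[1], bj[1]),
                       max(bi[2], bj[2]), max(bi[3], bj[3]))  # enclosing rectangle
                new.append((text, box))
                used.update((i, j))
                merged_any = True
        labels = [lab for k, lab in enumerate(labels) if k not in used] + new
    return labels

labels = [
    ("JERUSA", (0, 0, 60, 10)),
    ("JERUSALEM", (0, 0, 90, 10)),
    ("Mount of Olives", (200, 50, 320, 65)),
]
print(amalgamate(labels))
```

In this example the fragment "JERUSA" lies entirely inside the box of "JERUSALEM" (geometric IoM of 1.0) and shares six of the nine characters of the longer string (textual IoM of about 0.67), so the two are merged, while "Mount of Olives" is left untouched. Each merge reduces the number of labels by one, so the loop always terminates.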

Once both rectification and amalgamation have occurred, the set of labels $L_1, L_2, ..., L_N$ has been condensed to $\alpha_{1}^*, \alpha_{2}^*, ..., \alpha_{M}^*$.

Word Combination

Evaluation

Results

Limitations

Future work

GitHub Repository

References

Literature

- Kim, Jina, et al. "The mapKurator System: A Complete Pipeline for Extracting and Linking Text from Historical Maps." arXiv preprint arXiv:2306.17059 (2023).

- Li, Zekun, et al. "An automatic approach for generating rich, linked geo-metadata from historical map images." Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 2020.

Webpages

Acknowledgements

We thank Professor Frédéric Kaplan, Sven Najem-Meyer, and Beatrice Vaienti of the DHLAB for their valuable guidance over the course of this project.

- [1] Liao, Minghui, et al. "Scene text recognition from two-dimensional perspective." Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 33. No. 01. 2019.