Tracking a Historic Market Crash through Articles

Introduction and Motivation

The impact of economic crises on the socio-economic fabric of society is immense, with widespread and far-reaching effects on social stability and people's standard of living, as they can cause markets to shrink, businesses to lose capacity and unemployment to soar. While the recovery period from economic crises can span several years and the economic damage can be comparable to that of a major natural disaster, we recognize that predicting the emergence of economic crises with complete accuracy is a challenging goal. We therefore turn to the potential link between financial newspapers and the early warning signs of economic crises. While we cannot expect to flawlessly predict crises through the news media, we believe that by analyzing textual data from financial newspapers, we can reveal some early signs of market trends that could be useful in mitigating the losses that individuals, businesses, and countries may suffer during economic crises.

The news media, as one of the main channels of information for the public, not only reflects the current economic situation, but also plays a crucial role in shaping the public perception of the economic situation. Therefore, the news media is not only a recorder of the economy, but the information it conveys may also influence the future direction of the economy.

As the world's largest economy, the United States has economic dynamics with far-reaching implications for global markets. The global financial crisis of 2008, which began in the United States and quickly spread across the globe, had severe consequences and left important lessons. The data from this period provide a valuable opportunity to study the patterns of major economic crises and crisis warning signals. In addition, compared with new media, traditional media are generally regarded as more authoritative and reliable, and can more faithfully reflect the economic conditions and public perceptions of the economic situation at the time. Therefore, we chose data on U.S. economic indicators from 2006 to 2013 as well as news reports from Bloomberg as our research dataset.

The goal of our research is to construct an accurate prediction model that extracts features from newspaper text to predict changes in economic indicators. Through this prediction model, we hope to warn of major economic crises in advance and help governments, enterprises and individuals take early measures that mitigate the impact of such crises and protect the country, society and individuals from serious losses.

Methodology

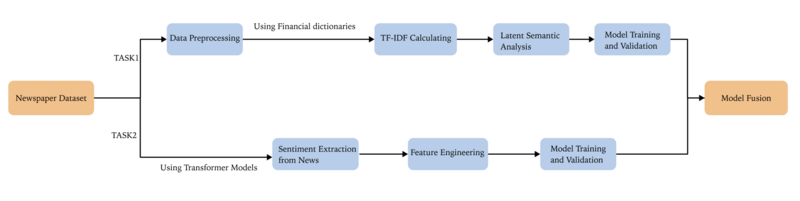

Pipeline

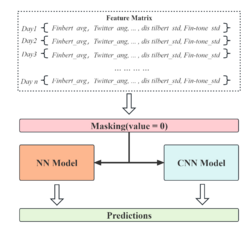

We divided the project into two independent tasks: one is prediction based on TF-IDF, and the other is prediction based on the sentiment scores of articles. After completing the two tasks, we integrated the two models to improve prediction performance. The figure above illustrates the prediction process of our pipeline.

Data Collection

News dataset

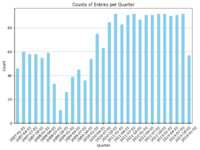

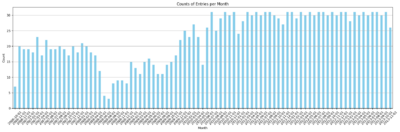

Bloomberg Businessweek is an economic and business-oriented weekly magazine published by Bloomberg, a global multimedia corporation. Renowned for its in-depth coverage, analysis, and commentary on global business, finance, technology, markets, and economics, this publication offers a comprehensive view of various industry trends, corporate strategies, and market dynamics. The dataset was collected from the Bloomberg website through its public APIs by Philippe Remy and Xiao Ding. It contains 450,341 news articles from 2006 to 2013.

Predictive indicators

fredaccount.stlouisfed.org is affiliated with the Federal Reserve Bank of St. Louis and is part of its FRED (Federal Reserve Economic Data) system. FRED provides a wide range of economic data drawn from different sources. Users can find, download, plot and track economic data for various periods and regions on this site. We retrieved key U.S. economic indicators for 2006 to 2013 from it.

Monthly indicators include:

- Employment Level

- Industrial Production Total Index (INDPRO)

- Manufacturing and Trade Industries Sales (CMRMT)

Quarterly indicators include:

- Real Gross Domestic Product

- GDP Growth Rates

Data Preprocessing

Text Data Cleaning

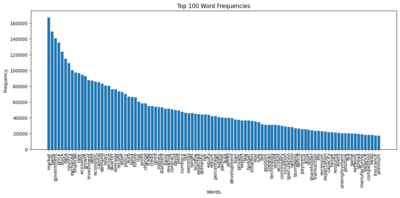

Text cleaning is the first step. It removes numerical digits, special characters, and punctuation marks from the text, which makes the text more suitable for subsequent analyses.

Text Tokenization and Lemmatization

Post-cleaning, the text is subjected to tokenization and lemmatization. Tokenization disassembles the text into individual words or tokens, while lemmatization reduces these tokens to their base or root forms. This standardization minimizes the impact of varying word forms on analytical outcomes.

Stopwords Removal

The process of removing stopwords involves the exclusion of frequently occurring words that contribute minimal semantic value to the analysis. This includes a carefully curated set of stopwords, incorporating additional stopwords provided by citations2. By eliminating these extraneous words, the noise within the text is mitigated, resulting in more accurate analysis.
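The three preprocessing steps above can be sketched in a few lines of Python. This is a minimal illustration, not the project's code: `STOPWORDS` and `LEMMA_LOOKUP` are tiny hypothetical stand-ins for a full stopword list and a real lemmatizer, and tokenization is simplified to whitespace splitting.

```python
from __future__ import annotations

import re

# Hypothetical miniature resources (assumptions for illustration only).
# A real pipeline would load a full stopword list and use a proper lemmatizer.
STOPWORDS = {"the", "a", "an", "of", "in", "and", "to", "by", "is", "are"}
LEMMA_LOOKUP = {"markets": "market", "fell": "fall", "banks": "bank", "shares": "share"}


def clean_text(text: str) -> str:
    """Lowercase the text and drop digits, punctuation and special characters."""
    text = text.lower()
    text = re.sub(r"[^a-z\s]", " ", text)      # keep letters and whitespace only
    return re.sub(r"\s+", " ", text).strip()   # collapse repeated whitespace


def preprocess(text: str) -> list[str]:
    """Clean, tokenize, lemmatize (toy lookup) and remove stopwords."""
    tokens = clean_text(text).split()                        # whitespace tokenization
    lemmas = [LEMMA_LOOKUP.get(tok, tok) for tok in tokens]  # stand-in lemmatization
    return [tok for tok in lemmas if tok not in STOPWORDS]


if __name__ == "__main__":
    print(preprocess("Markets fell 3.5% in 2008, and the banks' shares collapsed!"))
```

Lemmatizing before stopword removal, as done here, follows the order in which the steps are described above.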

Feature Extraction

TF-IDF

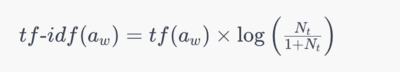

TF-IDF, an acronym for Term Frequency-Inverse Document Frequency, holds a fundamental position in text mining, serving as a cornerstone technique for assessing the significance of terms within a document collection. It operates on two core principles: Term Frequency (TF) and Inverse Document Frequency (IDF). In our analysis, we utilized a financial lexicon provided by MTC for TF-IDF computation. This lexicon facilitated the evaluation of the importance of specific financial terms within our text data.

- Term Frequency (TF) quantifies how often a term appears within an individual document. A term's frequency is higher when it occurs more frequently within a document.

- Inverse Document Frequency (IDF) captures how informative a term is across the entire document corpus. It is based on the inverse of the fraction of documents that contain the term (typically log-scaled), so that common but less consequential terms are down-weighted relative to terms of higher corpus-specific importance.

The computation of TF-IDF involves multiplying TF by IDF, amalgamating a term's local significance within a single document with its global significance across the entire corpus.
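As a rough illustration of the TF × IDF product restricted to a term lexicon, the sketch below computes weights for a toy corpus. The choices here are assumptions rather than the project's exact definitions: TF is taken as the relative frequency of a term in a document, IDF as the unsmoothed logarithm of the inverse document fraction, and the three-word `lexicon` is hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs, lexicon):
    """Return one {term: weight} dict per tokenized document, restricted to `lexicon`."""
    n_docs = len(docs)
    # Document frequency: number of documents that contain each lexicon term.
    df = Counter(term for doc in docs for term in set(doc) if term in lexicon)
    weights = []
    for doc in docs:
        counts = Counter(tok for tok in doc if tok in lexicon)
        total = len(doc) or 1                      # guard against empty documents
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


if __name__ == "__main__":
    docs = [
        ["bank", "default", "bank", "market"],
        ["market", "rally"],
        ["market", "default"],
    ]
    lexicon = {"bank", "default", "market"}        # hypothetical financial lexicon
    for w in tf_idf(docs, lexicon):
        print(w)
```

In this toy corpus "market" appears in every document, so its weight is zero everywhere, while "bank" is both frequent in the first document and rare across the corpus, so it receives the largest weight.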

In our research, TF-IDF is used to evaluate the significance of selected financial terms within individual articles. This identifies pivotal terms ($a$) within specific articles ($w$), prioritizing their contextual relevance over their frequency within the entire corpus. Furthermore, our approach avoids information leakage by using daily occurrence counts of terms, so that the weights for a given day do not depend on later articles. The frequency of the term in each article is denoted $\mathrm{tf}(a)_w$, the number of articles per day $N_t$, and the number of articles in which the term appears per day $n_t < N_t$. The tf-idf function could be defined as:
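The formula itself is not reproduced in the text. A form consistent with the definitions just given, assuming the standard logarithmic inverse document frequency computed over each day's articles (the logarithm and the absence of smoothing are assumptions), would be:

```latex
\mathrm{tfidf}(a)_w = \mathrm{tf}(a)_w \cdot \log\frac{N_t}{n_t}
```

Because $n_t < N_t$, the logarithmic factor is positive, and it grows as the term appears in fewer of the day's articles.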

Latent Semantic Analysis(LSA)

In the context of TF-IDF processing, the varying dimensions of text data due to grouping by quarters/months prompted us to employ Latent Semantic Analysis (LSA) for dimensionality reduction. LSA, employing Singular Value Decomposition (SVD) on the text-term frequency matrix, maps high-dimensional TF-IDF vectors to a unified lower-dimensional space, ensuring consistent data specifications. This approach not only reduces computational costs but also helps eliminate redundant information, enhancing the efficiency and performance of subsequent modeling tasks. Moreover, it captures latent semantic structures within the text data, providing substantial support for a deeper understanding of text relationships.
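The SVD-based projection can be sketched with NumPy as follows. This is an illustrative reduction of a generic (documents × terms) matrix, not the project's implementation; the function name `lsa_reduce` and the random input matrix are assumptions.

```python
import numpy as np


def lsa_reduce(tfidf_matrix: np.ndarray, n_components: int) -> np.ndarray:
    """Project the rows of a (documents x terms) matrix onto the top singular directions."""
    # Thin SVD: X = U @ diag(S) @ Vt, with singular values in descending order.
    u, s, _vt = np.linalg.svd(tfidf_matrix, full_matrices=False)
    # The number of usable components is bounded by the smaller matrix dimension.
    k = min(n_components, len(s))
    return u[:, :k] * s[:k]          # document coordinates in the latent space


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.random((6, 10))          # 6 hypothetical documents, 10 lexicon terms
    print(lsa_reduce(X, 3).shape)        # 3 latent dimensions per document
    print(lsa_reduce(X[:4], 10).shape)   # capped by the number of documents
```

The second call shows why very small text volumes constrain the reduced dimension: with only four documents, at most four components are available regardless of the number requested. For large sparse matrices, scikit-learn's `TruncatedSVD` provides a comparable projection.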

Transformer Models

We used four transformer models to extract sentiment scores from the articles. Transformer-based sentiment analysis applies neural network architectures that process natural language in context, which lets them interpret complex linguistic nuances and classify the sentiment of a text as positive, negative, or neutral with a high degree of accuracy.

- DistilBERT

DistilBERT is characterized by its smaller size and faster processing capabilities compared to its predecessor, BERT. It retains a significant majority of the original model's language understanding power. The model is optimized for performance and efficiency, designed to be cost-effective for training while still delivering robust NLP capabilities. The training foundation for DistilBERT is the same as BERT's, using the same pre-training datasets which typically include large corpora such as BooksCorpus and English Wikipedia.

- Twitter-roBERTa

This model (Twitter-roBERTa) is a variant of roBERTa-base that has been trained on approximately 58 million tweets and fine-tuned for sentiment analysis. It is designed to classify sentiments expressed in tweets into negative, neutral, or positive categories. The model's training used the TweetEval benchmark and is tailored for English language tweets. This model is especially useful for analyzing social media content, where sentiments are commonly expressed succinctly.

- FinBERT

FinBERT is a BERT model pre-trained on a wide range of financial texts to enhance financial NLP research and practice. It is trained on corporate reports, earnings call transcripts, and analyst reports, totaling 4.9 billion tokens. This model is particularly effective for financial sentiment analysis, a complex task given the specialized language and nuanced expressions used in the financial domain.

- FinBERT-Tone

This model (FinBERT-Tone) is a version of FinBERT fine-tuned specifically for analyzing the tone of financial texts. It was fine-tuned on 10,000 manually annotated sentences from analyst reports, labeled as positive, negative, or neutral. The model performs well on financial tone analysis tasks and is a good choice for extracting nuanced sentiment information from financial documents.

After obtaining the sentiment scores for each news text, we aggregated the sentiment scores on a day-by-day basis. The mean and variance of the sentiment scores for the day predicted by the four pre-trained models were calculated and saved separately. Based on the assumption that neutral articles do not significantly affect people's perceptions, we did not include neutral (i.e., news with a sentiment score of 0) in the calculation of sentiment score statistics.
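The daily aggregation can be sketched with the standard library as below. The input format (pairs of date string and score) and the use of population variance are assumptions; the text does not say which variance estimator was used.

```python
from collections import defaultdict
from statistics import mean, pvariance


def daily_sentiment_stats(records):
    """Aggregate (date, score) pairs into {date: (mean, variance)}, skipping neutral scores."""
    by_day = defaultdict(list)
    for day, score in records:
        if score != 0:                      # neutral articles (score 0) are excluded
            by_day[day].append(score)
    # Population variance is an assumption; a single-article day gets variance 0.
    return {day: (mean(scores), pvariance(scores)) for day, scores in by_day.items()}


if __name__ == "__main__":
    records = [
        ("2008-09-15", -0.9),
        ("2008-09-15", -0.5),
        ("2008-09-15", 0.0),    # neutral, ignored
        ("2008-09-16", 0.4),
    ]
    for day, (avg, var) in daily_sentiment_stats(records).items():
        print(day, round(avg, 3), round(var, 3))
```

A day whose articles are all neutral produces no entry in this sketch; in a full pipeline such days would be among the missing dates that later need padding. The same function would be applied once per sentiment model.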

Machine Learning Models for Prediction

Model Introduction

Because the news text is timestamped, our primary concern is handling time series data. We adopted separate prediction models for the TF-IDF features and the sentiment scores. After exploring a range of machine learning models and neural networks, we retained the best-performing models for each feature set based on their results on our dataset.

For TF-IDF, our selection comprises the GradientBoosting Regressor and Random Forest Regressor.

In the realm of sentiment analysis, we've opted for the Random Forest, Neural Networks, and Convolutional Neural Networks (CNN).

- GradientBoosting Regressor

The GradientBoosting Regressor is an ensemble learning method used for regression tasks. It builds a strong predictive model by combining multiple weaker models, usually decision trees, sequentially. It works by fitting each new model to the residuals (the difference between predicted and actual values) of the preceding model, gradually improving predictions. This iterative process focuses on reducing errors, enhancing model performance. GradientBoosting Regressor is adept at handling complex relationships in data and tends to provide more accurate predictions than individual models.

- Random Forest Regressor

The Random Forest Regressor constructs multiple decision trees during training and aggregates their predictions to provide a regression outcome. By combining predictions from diverse trees, it minimizes overfitting and variance while improving accuracy in regression tasks. This technique utilizes randomness in both data sampling and feature selection, making it robust against noise and suitable for handling complex datasets with numerous input variables. Its ability to handle non-linear relationships and maintain predictive performance makes it a popular choice in regression analysis.

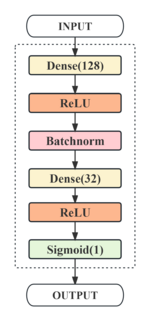

- Neural Networks (NN)

A neural network (NN) is a computing system loosely modeled on the structure and function of the human brain and used to process complex data sets. It consists of multiple layers of nodes, each of which can receive, process and transmit information. Neural networks typically learn through a back-propagation algorithm that continuously adjusts the connection weights between nodes to best represent the characteristics of the input data. This learning ability allows neural networks to excel in tasks such as image recognition, natural language processing, and predictive modeling. The design and functional diversity of neural networks make them a central component of artificial intelligence and machine learning research.

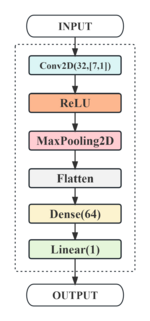

- CNN

Convolutional Neural Networks (CNNs) are a powerful tool in the field of deep learning and were first used for image recognition. In recent years, they have also been applied to time-series data analysis. In time-series analysis, CNNs can effectively capture local features and temporal dependencies in data. By using a convolutional layer, CNNs can process input sequences of different lengths to recognize patterns in data of different time intervals. The core advantage of temporal convolution is its ability to effectively extract time-dependent features while maintaining the order of the time series, which is crucial for prediction tasks.

Training Settings

Data Setting

News features are matched with economic indicators using a sliding time window. Because months and quarters differ in length, each window is fixed at the maximum number of days in a month (31) or quarter (92), and missing dates are filled with zeros using post-padding.
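A minimal sketch of zero post-padding to a fixed window length is shown below. The function name, the (days × features) layout, and the returned boolean mask are assumptions made for illustration; the mask simply records which rows hold real data.

```python
import numpy as np

MAX_DAYS = {"month": 31, "quarter": 92}   # window lengths stated in the text


def pad_window(daily_features: np.ndarray, period: str):
    """Zero post-pad a (days x features) array to the fixed window length.

    Returns the padded array and a boolean mask marking the real (non-padded) days.
    """
    target = MAX_DAYS[period]
    n_days, n_features = daily_features.shape
    if n_days > target:
        raise ValueError(f"{n_days} days exceed the {period} window of {target}")
    padded = np.zeros((target, n_features), dtype=float)
    padded[:n_days] = daily_features          # real data first, zeros afterwards
    mask = np.arange(target) < n_days
    return padded, mask


if __name__ == "__main__":
    february = np.ones((28, 4))               # 28 days, 4 hypothetical features
    padded, mask = pad_window(february, "month")
    print(padded.shape, int(mask.sum()))
```

A mask of this kind is one way a model can be told to ignore padded days rather than treat the zeros as observations.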

Model Construction

- RF: The feature matrix is flattened into a one-dimensional vector as input. max_features is set to 'sqrt', and each decision tree continues to grow as long as the minimum split condition is satisfied.

- NN: A network with two hidden layers and BatchNormalization performs the regression using a linear activation function, with the aim of capturing the complex relationship between news text and economic indicators.

- CNN: A convolutional kernel of size (n,1) is applied to design time-series convolutional layers of different sizes for monthly and quarterly metrics in order to extract features at different time scales.

- MLP: An MLP architecture featuring two hidden layers integrated with BatchNormalization was crafted for regression analysis. It utilizes a linear activation function, tailored to comprehend the intricate correlations between news text and economic indicators.

Model Training and Testing

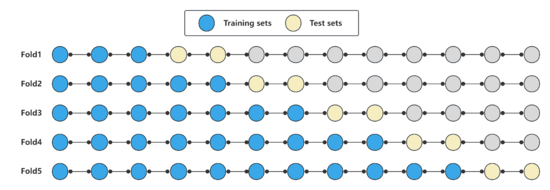

Time Series Cross-Validation: Given the unsuitability of regular cross-validation for time series data, we adopt a time window approach for conducting 5-fold cross-validation. This method evaluates the model's performance at various time points through time-series cross-validation, ensuring the retention of complete historical data to maintain stability in the length of the training sequence.
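One common way to realize such a scheme is scikit-learn's `TimeSeriesSplit`, which produces folds whose training set always precedes the validation set and grows from fold to fold. The sketch below assumes this expanding scheme and uses 24 hypothetical monthly observations; the project's exact fold boundaries are not given in the text.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

X = np.arange(24).reshape(-1, 1)      # 24 hypothetical monthly observations
tscv = TimeSeriesSplit(n_splits=5)

folds = []
for train_idx, val_idx in tscv.split(X):
    # Training indices always precede validation indices, so no future data leaks in.
    folds.append((train_idx, val_idx))
    print(f"train 0..{train_idx[-1]}  ->  validate {val_idx[0]}..{val_idx[-1]}")
```

Unlike shuffled k-fold cross-validation, each validation block here lies strictly after its training data.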

Masking Mechanism: In the sentiment analysis task, a masking mechanism was introduced to handle sparse data due to time windows, while regularization and early stopping were applied to mitigate overfitting.

Rolling test: We utilize the "expanding window" method for our rolling test, progressively enlarging the training and prediction window over time. Starting with a smaller historical dataset, the model undergoes training and predicts future data points. As time progresses, the window size increases incrementally, allowing the model to incorporate more historical data for training and prediction. This adaptive approach enables the model to better capture evolving patterns within the time series data and offers a dynamic assessment of its predictive performance across varying time frames.
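The expanding-window procedure can be sketched as a simple loop: at each step the model is refit on all observations seen so far and predicts the next one. The least-squares model below is only a stand-in so that the example stays self-contained; `min_train` and the synthetic data are assumptions.

```python
import numpy as np


def fit_linear(X, y):
    """Stand-in model: ordinary least squares with an intercept."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict_linear(coef, X):
    return np.column_stack([np.ones(len(X)), X]) @ coef


def expanding_window_test(X, y, min_train=8):
    """Train on observations [0, t) and predict observation t, for t = min_train .. n-1."""
    preds = []
    for t in range(min_train, len(y)):
        coef = fit_linear(X[:t], y[:t])                 # window grows by one each step
        preds.append(predict_linear(coef, X[t:t + 1])[0])
    return np.array(preds), y[min_train:]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(20, 2))
    y = 1.0 + X @ np.array([2.0, -1.0]) + rng.normal(scale=0.1, size=20)
    preds, actual = expanding_window_test(X, y)
    print("rolling MAE:", round(float(np.mean(np.abs(preds - actual))), 3))
```

Every prediction is out-of-sample, so the errors collected over the loop form a larger and more realistic test set than a single train-test split.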

Model Parameter Setting

| Financial indicators | Model name | Basic structure | Optimization (optimizer, loss function) | Training details |

|---|---|---|---|---|

| GDP | RandomForestRegressor | *n_estimators=50 *min_samples_split=2 | R2 | *max_depth=5 *random_state=42 |

| GDP | GradientBoostingRegressor | *learning_rate=0.1 *n_estimators=150 | R2 | *max_depth=5 |

| PAYEMS | RandomForestRegressor | *n_estimators=50 *min_samples_split=2 | R2 | *max_depth=10 *random_state=42 |

| PAYEMS | GradientBoostingRegressor | *learning_rate=0.1 *n_estimators=150 | R2 | *max_depth=3 |

| INDPRO | RandomForestRegressor | *n_estimators=100 *min_samples_split=2 | R2 | *max_depth=10 *random_state=42 |

| INDPRO | GradientBoostingRegressor | *learning_rate=0.1 *n_estimators=150 | R2 | *max_depth=3 |

| CMRMT | RandomForestRegressor | *n_estimators=100 *min_samples_split=5 | R2 | *max_depth=10 *random_state=42 |

| CMRMT | GradientBoostingRegressor | *learning_rate=0.1 *n_estimators=150 | R2 | *max_depth=3 |

| Model | Basic structure | Optimization (optimizer, loss function) | Training details |

|---|---|---|---|

| RF | | HRNetV2p-W18-Small | |

| NN | | Adam(lr = 0.001) loss='mean square error' | |

| CNN | | Adam(lr = 0.001) loss='mean square error' | |

Model Integration

After obtaining the best performing TF-IDF model and sentiment score-based model, respectively, we expect to get better prediction performance through model fusion. We compared three different model fusion strategies.

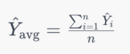

Average Fusion

Simple averaging is a good choice when multiple models have similar performance. Its basic formula is:

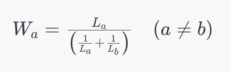

Loss-weighted Fusion

This method is a variant of the weighted fusion method we tried, and the basic idea is that when there is no a priori information available to determine the weights, the model with better performance (i.e., lower loss) should have more influence in the final prediction. The basic steps in loss-weighted fusion are:

1. Calculate the loss: first, calculate the loss of both models on the same validation set.

2. Calculate the weights: next, calculate a weight for each model based on its loss. In this case we set the weight to the inverse of the loss.

3. Normalize the weights: make sure the weights sum to 1 for weighted averaging.
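The two fusion rules described so far can be sketched as follows. The function names and the example numbers are illustrative; the inverse-loss weighting and normalization follow the three steps above.

```python
import numpy as np


def average_fusion(pred_a, pred_b):
    """Simple average of two models' predictions."""
    return (np.asarray(pred_a, dtype=float) + np.asarray(pred_b, dtype=float)) / 2.0


def loss_weighted_fusion(pred_a, pred_b, loss_a, loss_b):
    """Weight each model by the inverse of its validation loss, normalized to sum to 1."""
    inv = np.array([1.0 / loss_a, 1.0 / loss_b])
    w_a, w_b = inv / inv.sum()
    fused = w_a * np.asarray(pred_a, dtype=float) + w_b * np.asarray(pred_b, dtype=float)
    return fused, (w_a, w_b)


if __name__ == "__main__":
    pred_a, pred_b = [10.0, 20.0], [14.0, 24.0]     # hypothetical predictions
    print(average_fusion(pred_a, pred_b))
    fused, weights = loss_weighted_fusion(pred_a, pred_b, loss_a=1.0, loss_b=3.0)
    print(fused, weights)
```

With validation losses of 1 and 3, the first model receives weight 0.75 and the second 0.25, so the fused prediction sits closer to the better model than the plain average does.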

Model Stacking

The basic idea of model stacking is to use a new model to learn how best to combine the predictions of different models. This new model is often referred to as a "secondary learner". This approach explores the complex relationships between the predictions of the underlying models. In this project, we use linear regression as the secondary learner. The predictions of the two models are used as new feature inputs, and the linear regression model is trained to fit the mapping between the two and real economic indicators.
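A linear secondary learner over two base models can be sketched with ordinary least squares, as below. This is an illustration under assumptions: the helper names are hypothetical, the data are synthetic, and an intercept term is included, which the text does not specify.

```python
import numpy as np


def fit_stacker(base_preds, y_true):
    """Fit y ~ b0 + b1 * pred_1 + b2 * pred_2 by ordinary least squares."""
    A = np.column_stack([np.ones(len(y_true)), base_preds])
    coef, *_ = np.linalg.lstsq(A, y_true, rcond=None)
    return coef


def stack_predict(coef, base_preds):
    """Combine base-model predictions with the fitted linear weights."""
    return np.column_stack([np.ones(len(base_preds)), base_preds]) @ coef


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    y = rng.normal(loc=100.0, scale=5.0, size=30)            # hypothetical indicator
    base = np.column_stack([
        y + rng.normal(scale=1.0, size=30),                  # stand-in for model 1
        1.1 * y + rng.normal(scale=3.0, size=30),            # stand-in for model 2 (biased)
    ])
    coef = fit_stacker(base[:20], y[:20])                    # fit on earlier points
    mae = np.mean(np.abs(stack_predict(coef, base[20:]) - y[20:]))
    print("coefficients:", np.round(coef, 3), "held-out MAE:", round(float(mae), 3))
```

Because the secondary learner can rescale and offset each base model, it can correct systematic bias that averaging cannot. To keep the evaluation fair, the secondary learner is best fitted on predictions for data that the base models did not see during training.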

Results and Quality Assessments

Assessment

TF-IDF

To evaluate model fit comprehensively, we considered several metrics. Given the varied scales of our datasets, MSE, MAE, MAPE, and R2 were chosen as evaluation benchmarks to capture different facets of model performance. To keep model selection interpretable and limit overfitting, we used R2 as the scoring metric during the grid search for TF-IDF hyperparameter tuning. During the testing phase, we reported all four metrics to assess the model's performance.
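A grid search scored by R2 can be set up in scikit-learn as sketched below. The synthetic data, the small parameter grid, and the use of `TimeSeriesSplit` for the folds are assumptions for illustration; only the estimator type and the R2 scoring come from the text.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit

rng = np.random.default_rng(42)
X = rng.normal(size=(60, 5))                     # hypothetical reduced text features
y = X[:, 0] * 2.0 + rng.normal(scale=0.1, size=60)

param_grid = {"n_estimators": [50, 150], "max_depth": [3, 5]}   # illustrative grid
search = GridSearchCV(
    GradientBoostingRegressor(learning_rate=0.1, random_state=42),
    param_grid,
    scoring="r2",                    # R2 is the model-selection score, not the training loss
    cv=TimeSeriesSplit(n_splits=5),  # folds respect temporal order
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Here R2 only ranks candidate hyperparameter settings; each regressor is still fitted with its own internal loss.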

Sentiment Analysis

When training the sentiment-based models, we examined three different loss functions. MSE penalizes larger errors more heavily because the errors are squared, which makes the model more sensitive to outliers. Furthermore, if the errors follow a Gaussian distribution, minimizing MSE is equivalent to maximum likelihood estimation. Because of its sensitivity to large errors and its optimization-friendly form, we chose MSE as the loss function for model training.

The following is a description of the assessment indicators:

Mean Squared Error (MSE) is a statistical measure used to evaluate the accuracy of a predictive model. It calculates the average of the squares of the differences between predicted and actual values. The squaring ensures that errors are positive and emphasizes larger errors more than smaller ones. A lower MSE value indicates a model with better predictive accuracy.

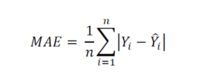

Mean Absolute Error (MAE) is a metric used to measure the average magnitude of errors between predicted and actual values in a dataset. To calculate MAE, you first find the absolute differences between predicted and actual values for each data point, then take the average of these absolute differences. In machine learning and statistical modeling, MAE serves as a common evaluation metric to assess the performance of models. A smaller MAE indicates better accuracy in predictions.

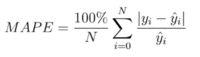

Mean Absolute Percentage Error (MAPE) measures the average percentage difference between predicted and actual values. It has an advantage on small-magnitude indicators such as CAGR because it expresses errors in percentage terms, providing a clearer reflection of relative errors regardless of the data's scale. This makes it useful for comparisons across indicators of different scales.

Coefficient of Determination (R2) is a metric used to assess the goodness of fit of a regression model. It represents the proportion of variance in the dependent variable that is predictable from the independent variables. The score is at most 1; it is 0 for a model that always predicts the mean of the observed values and becomes negative when the model performs worse than that baseline, as can happen on held-out data.
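The four metrics can be computed directly from their standard definitions, as in the sketch below. The function name and the example values are illustrative.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """Compute MSE, MAE, MAPE (in percent) and R2 from their standard definitions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mse = np.mean(err ** 2)
    mae = np.mean(np.abs(err))
    mape = np.mean(np.abs(err / y_true)) * 100.0     # undefined if y_true contains 0
    ss_res = np.sum(err ** 2)                        # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return {"MSE": mse, "MAE": mae, "MAPE": mape, "R2": r2}


if __name__ == "__main__":
    # A constant prediction far from the observed mean gives a negative R2.
    print(regression_metrics([100.0, 110.0, 120.0], [130.0, 130.0, 130.0]))
```

Two sanity checks follow from the definitions: the square root of MSE is never smaller than MAE, and R2 drops below zero whenever the residual sum of squares exceeds the total sum of squares.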

In particular, given that we have multiple economic indicators, we need to evaluate the performance of the models in a comprehensive manner.

For each model, we performed at least five repetitions of the experiment. Reproducibility is ensured by setting a random seed. The average over five experiments was taken as the final performance evaluation of the model. The results of the repeated experiments can be accessed in the Deliverables.

Results

Prediction results based on TF-IDF

Prediction results based on TF-IDF generally showed promising performance in a training-testing split (4:1). However, this outcome might be influenced by overfitting due to the relatively small dataset size. To address this, we implemented an alternative approach utilizing a variable-length rolling window test. This allowed us to expand our test dataset, aiming for more objective prediction results. The following solely presents the model's error evaluation on the rolling test.

| Financial indicators | Model name | MSE | MAE | MAPE(%) | R2 |

|---|---|---|---|---|---|

| GDP | RandomForestRegressor | 7.358735e-07 | 0.000811 | 1.984610 | -3.118173 |

| GDP | GradientBoostingRegressor | 4.299385e-07 | 0.000591 | 1.451799 | -1.406067 |

| PAYEMS | RandomForestRegressor | 2.803849e+06 | 1399.882857 | 1.045994 | 0.361577 |

| PAYEMS | GradientBoostingRegressor | 3.084818e+06 | 1285.997459 | 0.962610 | 0.297601 |

| INDPRO | RandomForestRegressor | 4.740534 | 1.811326 | 1.876032 | 0.250167 |

| INDPRO | GradientBoostingRegressor | 5.368031 | 1.870319 | 1.942909 | 0.150913 |

| CMRMT | RandomForestRegressor | 9.204007e+08 | 26173.997374 | 2.168261 | 0.328040 |

| CMRMT | GradientBoostingRegressor | 9.425498e+08 | 24436.365040 | 2.031371 | 0.311869 |

Prediction results based on sentiment score

In evaluating the INDPRO indicator, we explored various model and parameter configurations with and without masking. In these experiments, CNN-2 (the CNN with masking) achieved the lowest test MSE. Based on this finding, we believe we have found an effective model for forecasting this time series data.

| Model | Hyperparameters | Label | Loss | Test MSE_all |

|---|---|---|---|---|

| CNN-1 | Default | INDPRO | mean_squared_error | 0.0458 |

| CNN-2 | Masking | INDPRO | mean_squared_error | 0.0296 |

| NN-1 | Default | INDPRO | mean_squared_error | 0.1097 |

| NN-2 | Masking | INDPRO | mean_squared_error | 0.097 |

| RF-1 | Default | INDPRO | MSE,RMSE,R2 | MSE: 0.1062, RMSE: 0.3258, R2: -0.0737 |

| RF-2 | Masking | INDPRO | MSE,RMSE,R2 | MSE: 0.1052, RMSE: 0.3244, R2: -0.0642 |

We also experimented on a range of other key indicators. The CNN achieved the lowest validation loss on every indicator, which confirms that it performs well across targets.

| Task | No. | Label | Loss Function | Validation Loss (CNN) | Validation Loss (RF) | Validation Loss (NN) |

|---|---|---|---|---|---|---|

| Multi-Label Prediction | 1 | CMRMT | mean_squared_error | 0.0521 | 0.0963 | 0.0667 |

| Multi-Label Prediction | 2 | CE16OV | mean_squared_error | 0.0518 | 0.0811 | 0.1008 |

| Multi-Label Prediction | 3 | INDPRO | mean_squared_error | 0.0564 | 0.1105 | 0.1155 |

| Multi-Label Prediction | 4 | RGDP | mean_squared_error | 0.0131 | 0.1361 | 0.0984 |

| Multi-Label Prediction | 5 | CAGR2 | mean_squared_error | 0.0269 | 0.0405 | 0.0415 |

Prediction results of Integration

For model fusion, we chose the CMRMT indicator as the prediction target. To compare the error between the predicted and true values more intuitively, we used MAE and MAPE to evaluate the performance of the base and fused models. Since the CNN is trained on normalized indicators, we denormalize its output before calculating the error.

The MAE and MAPE of the different models are shown in the table. Linear stacking fusion has the smallest error, lower than that of both base models and the other fusion models.

| Category | Model | MAE | MAPE |

|---|---|---|---|

| Original Model | sentiment-score-based CNN | 18897.58 | 1.47% |

| Original Model | tfidf-based GBR | 41084.53 | 3.26% |

| Fusion Model | Average Fusion | 25240.92 | 2.00% |

| Fusion Model | Loss-Weighted Fusion | 21768.10 | 1.75% |

| Fusion Model | Linear Stacking Fusion | 9196.86 | 0.70% |

Limitations

Feature engineering

TF-IDF

The TF-IDF method has been exclusively applied to individual financial terms, neglecting potential synergies that could offer vital contextual insights. Furthermore, the uneven distribution of text volumes across certain months and quarters, particularly when text volumes are in single digits, has constrained the matrix dimensions post-dimensionality reduction through LSA. This limitation hinders the extraction of more diverse topic features.

Sentiment analysis

In the realm of Transformer models, their performance could suffer when applied to financial text analysis due to the lack of specific training in this domain. An intriguing observation surfaced: a significant portion of financial text was labeled as neutral, hinting at challenges in extracting nuanced semantic connections and text features within financial contexts.

In addition, our approach relied only on summary statistics of the sentiment scores and on the TF-IDF matrix of the news text, without comprehensively considering its other features.

Overfitting

When dealing with a small volume of text data, we initially achieved strong model fitting with a 4:1 train-test split. However, transitioning to a rolling test revealed a decline in predictive performance, suggesting potential overfitting issues due to the dataset's limited size. Despite applying regularization techniques and early stopping, the challenge persisted. Notably, there's a clear performance gap between the model's training and test sets, further highlighted by time-series cross-validation, indicating the impact of dataset size on model performance.

Future Work

Data Augmentation and Balancing

Expanding the existing dataset is crucial for enhancing the model's generalization capability and accuracy. This involves gathering more diverse data samples, such as news texts and economic indicator data from different regions and periods. In addition, the dataset should be augmented so that each month and quarter has a minimum volume of textual data, which reduces the impact of data imbalance and improves the model's ability to generalize across different time periods.

Feature Expansion and Fusion

Feature engineering entails exploring new sets of features and examining potential correlations among existing ones. Moreover, optimizing feature fusion strategies is an important research direction. Our current approach involves decision fusion among different models, but limited feature dimensions might restrict the model's learning capacity. Hence, the focus lies in effectively integrating features from diverse sources and types to enhance the model's learning ability.

Multimodal Learning

Multimodal learning encompasses the simultaneous processing and analysis of data from various modalities such as text, images, and potentially other sources. This approach offers a broader perspective on complex and diverse datasets and can enhance a model's capability to handle intricate information. Exploring multimodal learning is a promising direction for future work, as it provides a more comprehensive view of the data and may improve the model's adaptability to diverse datasets.

Refined Feature Engineering

Although deep learning models can autonomously learn complex data features, introducing more refined feature engineering techniques such as named entity recognition or topic analysis is still expected to improve training effectiveness and the understanding of financial text.

Manual Annotation and Utilization of Domain Expertise

Manually annotating datasets and drawing on domain-specific knowledge from the finance sector would allow the Transformer models to be fine-tuned for this task. This approach might enhance model performance by better capturing subtle nuances in financial text.

Project Plan and Milestones

Weekly Project Plan

| Week | Tasks | Completion |

|---|---|---|

| Week 4 | | ✓ |

| Week 5 | | ✓ |

| Week 6 | | ✓ |

| Week 7 | | ✓ |

| Weeks 8–9 | | ✓ |

| Week 10 | | ✓ |

| Week 11 | | ✓ |

| Week 12 | | ✓ |

| Week 13 | | ✓ |

| Week 14 | | ✓ |

Milestones

Milestone 1

- Draft a comprehensive project proposal outlining aims and objectives.

- Identify datasets with appropriate time granularity and relevant economic labels.

- Prepare and clean selected datasets for analysis.

Milestone 2

- Master the NLP processing workflow and techniques.

- Construct TF-IDF representation and sentiment indicators in news data.

- Conduct preliminary model adjustments to enhance accuracy based on initial data.

Milestone 3

- Implement pre-trained models for sentiment analysis and integrate them into the project.

- Apply decision fusion techniques to optimize model performance.

Milestone 4

- Prepare the final presentation summarizing and visualizing the project findings and outcomes.

- Create and finalize content for the Wikipedia page, documenting the project.

- Conduct a thorough project review and ensure all documentation is complete and accurate.

Deliverables

Source code and training records: GitHub

Data: Google Drive