Sanudo's Diary

Motivation

Marino Sanudo's diaries are a rich source on the political, cultural and geographical landscape of 15th-16th century Venice. However, one of the main challenges in using such a historical resource is the absence of standardized, georeferenced data that would allow modern scholars to interact with the content in a spatial context.

This project aims to address that gap: it extracts named entities (people, places, events, etc.) from Marino Sanudo’s diaries and georeferences place names on a modern map of Venice and its territories. After all, it is not often that such a vast historical narrative exists and survives to the modern day. By offering an immersive, spatially interactive experience with the diaries, the project helps historical scholars and everyday students alike enhance their understanding of the events described, and lets them compare the past and present geographical realities of Venice through the eyes of Marino Sanudo.

Background

"...the continuity of events and institutions collapses into the quotidian. ...Unreflecting, pedantic, and insatiable, he aimed "to seek out every occurrence, no matter how slight," for he believed that the truth of events could only be grasped through an abundance of facts. He gathered those facts in the chancellery of the Ducal Palace and in the streets of the city, transcribing official legislation and ambassadorial dispatches, reporting popular opinion and Rialto gossip" [1]

| Status | Venetian historian, author and diarist. Aristocrat. |

| Occupation | Historian |

| Life | 22 May 1466 - † 4 April 1536 |

Marino Sanudo (1466-1536) was a Venetian historian, author and diarist. His Diarii are an irreplaceable source for the history of his time. He began the diaries on 1 January 1496 and carried on writing them until 1533. The diaries consist of about 40,000 pages, published as I diarii, 58 vol. (1879–1902; “The Diaries”) [2].

The Diaries of Marin Sanudo represent one of the most comprehensive daily records of events ever compiled by a single individual in early modern Europe. They offer insights into various aspects of Venetian life, from "diplomacy to public spectacles, politics to institutional practices, state councils to public opinion, mainland territories to overseas possessions, law enforcement to warfare, the city's landscape to the lives of its inhabitants, and from religious life to fashion, prices, weather, and entertainment" [3].

| Diary Duration | 1496-1533 | 37 years |

| Quantity | 58 Volumes | around 40000 pages |

| Content Style | deal with any matter | regardless of its ‘importance’ |

Deliverables

The deliverables of our Transforming Sanudo's Index project are the following:

- Named Entity Extraction and Categorization pipeline: an automatic pipeline for extracting named entities of places in Venice from Sanudo's diary.

- Interactive Diary: An online interactive diary showcasing place and person names along with context from the diaries' index.

Front-end Functionality and Key Design Features

Main Functions

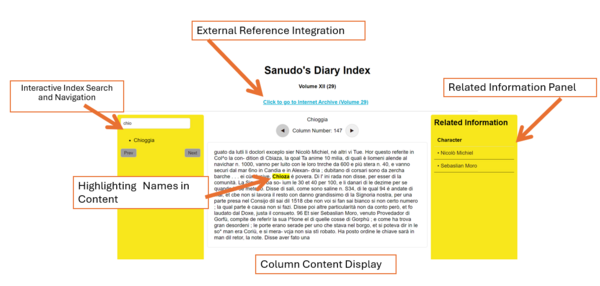

Interactive Index Search and Navigation

- Functionality: A search box allows users to filter and find place names within the index. The results are displayed dynamically in a paginated format, with Prev and Next buttons for easy navigation.

- Purpose: Streamlines the process of exploring the index, making navigation intuitive and efficient.

- Highlight: user-friendly and effective filtering and navigation capabilities.

Column Content Display

- Functionality: Clicking an index entry displays corresponding diary content, including column numbers and text excerpts. For places appearing in multiple columns, users can navigate with ◀ and ▶ buttons.

- Purpose: Connects index entries with diary content, enabling detailed exploration of historical data.

- Highlight: Illustrates the integration of index and diary content, emphasizing the significance of linking primary sources to structured data.

Highlighting Names in Content

- Functionality: Automatically highlights place names and aliases in the displayed text to make them easily identifiable.

- Purpose: Enhances readability and supports analysis by drawing attention to key information.

- Highlight: Provides a visual aid that emphasizes important index names, improving user understanding.

Related Information Panel

- Functionality: Displays names of characters mentioned in the selected diary content. Future enhancements could include information on organizations, related events, and more. The panel updates dynamically with the content.

- Purpose: Offers contextual insights by linking places to associated people and events, deepening historical exploration.

- Highlight: Demonstrates dynamic data linking and the potential for future expansion.

- Note: Person names are detected by matching the pattern "sier + the two following words" (as in "sier Polo Donado"). We tried using AI language models to extract names from the text, but because of run-time efficiency (the pattern returns detection results faster) and the complexity of the communication between frontend and backend, we decided to use the simpler method at the current stage. In the future, more detailed information could be added, for example introductions to the organizations that appear, or related events that took place at the location (for instance, Sanudo describes in his diary a certain plaza that held lucky-draw activities and was very famous at the time).
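The "sier + two words" rule from the note above can be sketched as a regular expression. This is a minimal illustration in Python, not the front-end's actual code; the names `SIER_PATTERN` and `extract_sier_names` are hypothetical, and the sample sentence is the one quoted later on this page for column 462.

```python
import re

# Hypothetical pattern: the word "sier" followed by the next two words.
SIER_PATTERN = re.compile(r"\bsier\s+(\w+)\s+(\w+)", re.IGNORECASE)


def extract_sier_names(text: str) -> list[str]:
    """Return 'Word1 Word2' for every 'sier <word> <word>' occurrence."""
    return [f"{first} {second}" for first, second in SIER_PATTERN.findall(text)]


sample = "et dil seslier di Santa Croze rimase sier Polo Donado."
names = extract_sier_names(sample)
print(names)
```

A rule like this is fast and needs no backend, but it will miss names that are not introduced by "sier" and will cut off names longer than two words.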

External Reference Integration

- Functionality: Provides direct links to relevant volumes on the Internet Archive for further validation and study.

- Purpose: Ensures transparency and offers users access to original scanned sources.

- Highlight: Reinforces authenticity and scholarly rigor through seamless integration of external references.

Data Processing Pipeline

- Functionality: Processes structured data from CSV files containing place names, columns, and diary excerpts, enabling dynamic front-end loading.

Key Design Highlights

1. Search and Pagination: Responsive search and simple pagination controls make exploring a large dataset manageable.

2. Dynamic Data Synchronization: Selecting an index entry updates the diary content and related information panel in real-time, showcasing well-integrated views.

3. Highlighting Algorithm: Matches and emphasizes key terms for readability, ensuring focus on critical information.

4. Accessible UI Design: A clean, structured layout (index on the left, diary content in the center, and related information on the right) ensures a seamless user experience.

5. Integrate Original Diary: Linking to the Internet Archive underscores data integrity and facilitates cross-referencing.

Project Milestones

| Week | Date | Current Progress | Future Goals |
|---|---|---|---|
| Week 3 | 2024/10/3 | | |
| Week 4 | 2024/10/10 | | |
| Week 5 | 2024/10/17 | | |
| Week 6 | 2024/10/24 | | |
| Week 7 | 2024/10/31 | Autumn Break | Autumn Break |
| Week 8 | 2024/11/7 | | |
| Week 9 | 2024/11/14 | | |
| Week 10 | 2024/11/21 | | |
| Week 11 | 2024/11/28 | | |
| Week 12 | 2024/12/5 | | |
| Week 13 | 2024/12/12 | | |
| Week 14 | 2024/12/19 | | |

Methodology

Generating Place Entities

We extract the relevant place entities from the OCR-generated text file of Sanudo's index. This is essential to our eventual goal of visualizing place and person entities (i.e., important places and names documented by Sanudo) from the index on a map of Venice. Thus, we keep only the place entities located in Venice (discarding those outside it) and link them to coordinates for display on a map. Furthermore, each place corresponds to one or more "indices," i.e., columns in which the place name appears in Sanudo's diaries. We extract the person names linked to the place entity at each index (see: Associating Person Names with Place Names). Finally, we integrate the place and person names, along with the appropriate context in Sanudo's Index, into a visual map.

Pre-processing OCR Generated Text File

The text file was sourced from the Internet Archive and required extensive preprocessing to structure it for further analysis. The content was split into columns by identifying specific text patterns. Each page consists of two columns. Typically (though with exceptions due to inconsistencies in the provided OCR scans), pages are delineated in the OCR-processed text by two page numbers and a sequence of 2x3 line breaks, which serve as key markers for separation. Within each page, individual columns are further separated by three consecutive line breaks.

We utilized regular expression matching to identify and separate the text into pages, and subsequently into individual columns. However, due to inconsistencies in the OCR text—such as errors in identifying or numbering columns—a round of manual verification was conducted. This ensured that each extracted column was accurately associated with its corresponding column number and ready for integration into downstream pipelines, including the paragraph extraction pipeline.
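As a rough illustration of the column-splitting step, the sketch below splits the text of one page on runs of three or more line breaks. It assumes the page has already been isolated; the page-level pattern (page numbers plus line breaks) is not reproduced here, and the names `COLUMN_BREAK` and `split_page_into_columns` are hypothetical.

```python
import re

# Assumption: inside a single page, columns are separated by three
# (or more) consecutive line breaks, as described above.
COLUMN_BREAK = re.compile(r"\n{3,}")


def split_page_into_columns(page_text: str) -> list[str]:
    """Split one page of OCR text into its columns, dropping empty pieces."""
    parts = COLUMN_BREAK.split(page_text.strip("\n"))
    return [part.strip() for part in parts if part.strip()]


# Made-up page text, for illustration only.
page = "text of the left column\nsecond line\n\n\ntext of the right column\n"
columns = split_page_into_columns(page)
print(len(columns))
```

Because the OCR output is inconsistent, a split like this only gives a first pass; the manual verification described above is still needed to attach the right column number to each piece.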

The place entities relevant to this project are contained in the INDICE GEOGRAFICO section at the end of each volume. A typical entry in Volume 29, in the OCR text, looks like this:

Adrianopoli (Andemopoli) (Turchia), 207, 212, 299, 303, 304, 305, 306, 322, 323, 342, 358, 391, 549.

Here:

- Adrianopoli is the "primary" place name of the place denoted.

- Andemopoli is an alias.

- Turchia (Turkey) provides additional context.

- The column numbers span two lines, listing the columns where the place is mentioned.

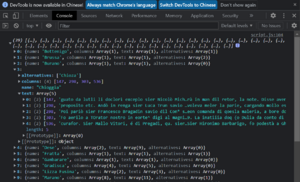

Using regular expression patterns, we extracted this data into a computer-processable list of dictionaries. Each dictionary takes the following format:

{[complete list of names, aliases, and additional information of a place]: [list of column numbers]}

For example, the entry above was converted into:

{[Adrianopoli, Andemopoli, Turchia]: [207, 212, 299, 303, 304, 305, 306, 322, 323, 342, 358, 391, 549]}

The resulting list of dictionaries was then manually cross-verified with the original index to confirm its accuracy and ensure that all content from the index was retained.
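The extraction of one index entry can be sketched as follows. This is a simplified illustration, not the project's actual patterns: it assumes the entry has the shape "Name (alias) (context), n, n, ..." and stores the result under the hypothetical keys `names` and `columns`, since a Python list cannot itself be used as a dictionary key.

```python
import re


def parse_index_entry(entry: str) -> dict:
    """Parse 'Name (alias) (context), 1, 2, 3.' into names and column numbers."""
    # Collapse line breaks so entries spanning two lines are handled.
    entry = " ".join(entry.split())
    # Split at the first comma that is directly followed by a number.
    parts = re.split(r",\s*(?=\d)", entry, maxsplit=1)
    head = parts[0]
    tail = parts[1] if len(parts) > 1 else ""
    primary = head.split("(", 1)[0].strip()
    extras = [extra.strip() for extra in re.findall(r"\(([^)]*)\)", head)]
    columns = [int(number) for number in re.findall(r"\d+", tail)]
    return {"names": [primary, *extras], "columns": columns}


entry = (
    "Adrianopoli (Andemopoli) (Turchia), 207, 212, 299, 303, 304, 305, 306,\n"
    "322, 323, 342, 358, 391, 549."
)
parsed = parse_index_entry(entry)
print(parsed["names"], len(parsed["columns"]))
```

Real entries contain OCR noise (stray characters in names, broken numbers), which is why the extracted list still needs the manual cross-check described above.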

Place Name Verification Pipeline

The pipeline uses three APIs to determine whether a place entity exists in Venice: Nominatim (OpenStreetMap), WikiData, and Geonames (referred to as "Geodata" in the pipeline output and in the rest of this page). For example, to check if the place entity "Adrianopoli" exists in Venice, the pipeline sends the following HTTP GET request to the Nominatim API:

Request URL: https://nominatim.openstreetmap.org/search

Request Parameters:

- q: "Adrianopoli" (the place entity being searched)

- format: "json" (response format)

- addressdetails: 1 (include a breakdown of the address in the response)

Request Headers:

- User-Agent: "Mozilla/5.0 (compatible; MyApp/1.0; +http://example.com)" (spoofed)

Response Example:

The API returns a JSON object with information about matching locations. If "Adrianopoli" is located in Venice, the response includes its coordinates and address details, such as (data for example purposes ONLY):

[ { "lat": "45.43713", "lon": "12.33265", "display_name": "Adrianopoli, Venice, Veneto, Italy", "address": { "city": "Venice", "county": "Venice", "state": "Veneto", "country": "Italy" } } ]

If no matches are found or the location is not in Venice, the response is an empty array ([]).
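The request and the Venice check can be sketched with the Python standard library. This is an illustrative outline under stated assumptions, not the pipeline's code: the function names are hypothetical, the test for "Venice" follows the illustrative response above (real responses may use localized names such as "Venezia"), and `fetch_matches` is shown only for completeness and is not called here.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

NOMINATIM_URL = "https://nominatim.openstreetmap.org/search"


def build_search_url(place: str) -> str:
    """Build the GET URL with the three parameters listed above."""
    params = {"q": place, "format": "json", "addressdetails": 1}
    return f"{NOMINATIM_URL}?{urlencode(params)}"


def fetch_matches(place: str, user_agent: str) -> list:
    """Send the request and decode the JSON array (performs a network call)."""
    request = Request(build_search_url(place), headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        return json.load(response)


def first_venice_match(results: list):
    """Return (lat, lon) of the first result whose address names Venice, else None."""
    for item in results:
        address = item.get("address", {})
        if "Venice" in (address.get("city"), address.get("county")):
            return float(item["lat"]), float(item["lon"])
    return None


# The illustrative response from the text above (not real data).
example = [{"lat": "45.43713", "lon": "12.33265",
            "display_name": "Adrianopoli, Venice, Veneto, Italy",
            "address": {"city": "Venice", "county": "Venice",
                        "state": "Veneto", "country": "Italy"}}]
print(build_search_url("Adrianopoli"))
print(first_venice_match(example))
```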

Below is a real example of the data stored for Marano upon finding a match in Venice. Notice that the pipeline saves the coordinates returned by each API separately, and sets the final latitude and longitude to the coordinates of the last API that returned a match.

{

"id": 21,

"place_name": "Marano",

"place_alternative_name": ["FrìuliX"],

"place_index": ["496", "497", "546", "550", "554", "556", "557", "584"],

"nominatim_coords": ["45.46311235", "12.120517939849389"],

"geodata_coords": null,

"wikidata_coords": null,

"nominatim_match": true,

"geodata_match": false,

"wikidata_match": false,

"latitude": "45.46311235",

"longitude": "12.120517939849389",

"agreement_count": 1

}

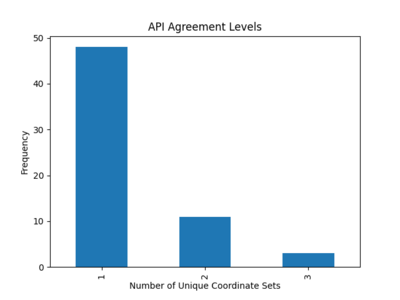

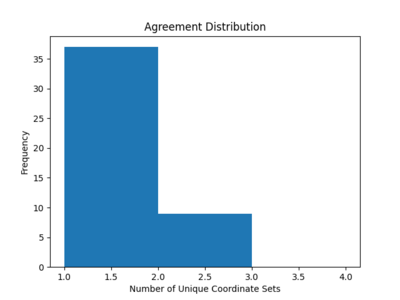

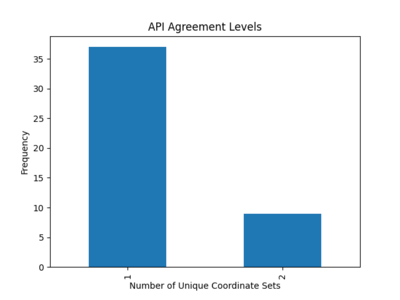

The agreement_count in the code represents the number of unique coordinate sets returned by the three APIs: Nominatim, Geodata, and Wikidata. It is a way to measure whether there is consensus among these APIs about the geographical location of a given place. We manually review the extracted place names to identify ambiguities based upon the agreement count. To resolve these ambiguities in the extracted data and improve the reliability of the results, we make these specific adjustments:

- Exclusion of Ambiguous Names: Several extracted place names had alternative names listed as "veneziano," which caused confusion in API queries. These names were removed from the search queries to improve accuracy.

- Error Handling for API Outputs: The Geodata API occasionally returned locations outside of Venice, such as places in Greece, as part of the results for Venetian entities. To address this issue, special case handling was added to the pipeline to filter out such erroneous results.

See Results for more information on the API accuracy pre- and post-refinement of the pipeline.
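One way to compute an agreement count of this kind is to collect the coordinates each API returned and count the distinct pairs. The sketch below is an assumption about how that could be done, not the pipeline's code; in particular, rounding to three decimals before comparing (so that near-identical coordinates count as the same set) is a choice made here for illustration.

```python
def agreement_count(record: dict, decimals: int = 3) -> int:
    """Count the distinct coordinate pairs among the APIs that returned a match."""
    keys = ("nominatim_coords", "geodata_coords", "wikidata_coords")
    unique = set()
    for key in keys:
        coords = record.get(key)
        if coords is not None:
            # Rounding is an illustrative assumption, not a documented step.
            lat, lon = (round(float(value), decimals) for value in coords)
            unique.add((lat, lon))
    return len(unique)


# Coordinates taken from the Marano record shown above.
marano = {
    "nominatim_coords": ["45.46311235", "12.120517939849389"],
    "geodata_coords": None,
    "wikidata_coords": None,
}
print(agreement_count(marano))
```

Under this definition, a count of 1 means that every API that answered returned the same location, while higher counts flag places worth reviewing by hand.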

The next step is to match each instance of a place appearing in the text by extracting the specific paragraph that contains the place name. If a "paragraph," as determined in the earlier steps, contains the place name, we refine the extraction to ensure it represents the full, actual paragraph. This involves checking whether the "paragraph" might be part of the same larger paragraph as adjacent paragraphs in either the next or previous column.

Paragraph Matching Methods

To identify all paragraphs containing a specific place_alias for each combination of ([place_name + alternative_name] x place_index) corresponding to a given place, we retrieve all paragraphs within each column in the place_index and apply four matching methods to compare the place_alias with each paragraph.

The methods are as follows:

- Exact Match: Compares slices of the text directly with the place_alias. A match is successful if the slice matches the place_alias exactly, ignoring differences in capitalization.

- FuzzyWuzzy Match: Uses a similarity score to compare the place_alias with slices of the text. If the similarity score exceeds a predefined threshold, it counts as a match. This method allows for approximate matches while disregarding case differences.

- Difflib Match: Calculates a similarity ratio between the place_alias and slices of the text. If the ratio meets or exceeds a predefined threshold, it considers the match successful, tolerating small variations between the two.

- FuzzySearch Match: Identifies approximate matches by allowing a specific number of character differences (referred to as "distance") between the place_alias and slices of the text. A match is confirmed if any such approximate matches are found.

Note: Cosine similarity was considered but abandoned due to its tendency to overlook fuzzy matches and its disproportionately high processing time compared to other methods.
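The exact and difflib methods can be sketched with the standard library alone. This is a minimal illustration, assuming that "slices" are all substrings with the same length as the alias; the project's actual slicing strategy is not documented here, and the function names are hypothetical. The fuzzywuzzy and fuzzysearch variants follow the same idea with their own scoring and are omitted.

```python
from difflib import SequenceMatcher


def slices(text: str, width: int):
    """Yield every substring of `text` that is `width` characters long."""
    for start in range(max(len(text) - width, 0) + 1):
        yield text[start:start + width]


def exact_match(alias: str, paragraph: str) -> bool:
    """Case-insensitive exact match of the alias anywhere in the paragraph."""
    return alias.lower() in paragraph.lower()


def difflib_match(alias: str, paragraph: str, threshold: float = 0.75) -> bool:
    """True if any slice reaches the similarity ratio threshold."""
    alias = alias.lower()
    return any(
        SequenceMatcher(None, alias, chunk).ratio() >= threshold
        for chunk in slices(paragraph.lower(), len(alias))
    )


text = "et dil seslier di Santa Croze rimase sier Polo Donado."
print(exact_match("Croce", text), difflib_match("Croce", text))
```

In this example the exact method misses the spelling "Croze", while the difflib method accepts it because four of the five characters line up (ratio 0.8).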

Manual cleaning is required even after the geolocation step and before the data is fed into the paragraph-matching pipeline. For example, consider place names like "di S. Croce," which refers to the Santa Croce (Holy Cross) district/parish within Venice. However, in one instance where it appears in column 462, the actual text reads: "et dil seslier di Santa Croze rimase sier Polo Donado." In such cases, we isolate "Croce" and add it to the alternative names/aliases list of the corresponding place in the post-geolocation results. This ensures that the paragraph-matching step can succeed even when the text uses an alternate or abbreviated form of the place name.

Associating Person Names with Place Names

As a further step, we identify person names associated with each place name. For the current feasibility of the project, we adopt a straightforward criterion: person names that appear in the same paragraph as a given place name are considered "associated" with that place.

For now, we deliberately avoid using the human name index from the document or constructing database entities specifically for person names. Instead, we attach extracted names directly to a field in the database row corresponding to each paragraph.

Database Output Format

The resulting database relation table will document all names associated with a place name and their specific context. The table format is as follows:

Place_name_id, Paragraph, People_names

This format ensures flexibility while maintaining sufficient detail for further analysis.

Frontend development

The frontend was developed locally with the aid of ChatGPT (free plan) and completed in approximately 12 hours by one team member. The process began with an initial webpage design, followed by generating solutions through ChatGPT. The primary focus was on refining the CSV parsing logic and integrating it with the frontend UI. This included addressing issues with parsing place names in JSON-like formats (`['Name1', 'Name2']`), ensuring proper validation and conversion to valid JSON, debugging errors such as undefined variables, and enhancing error handling for edge cases. Once the parsing function reliably produced structured data (`name`, `aliases`, `column`, `text`) compatible with other functions, the prototype of the website was nearly complete.

Results

Place Name Verification Pipeline Refinement

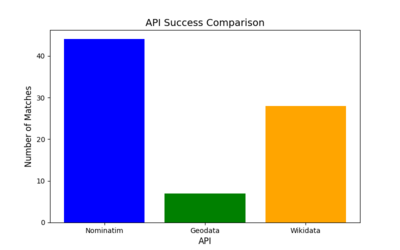

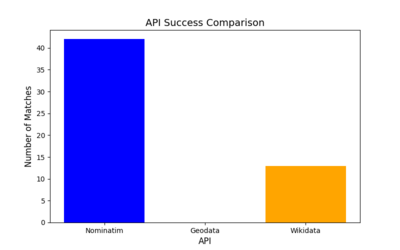

Results Before Refinement (12/05)

- Total Places Processed: 62

- API Success Rates:

  - Nominatim: 69.35%

  - Geodata: 12.90%

  - Wikidata: 45.16%

- Disagreements: 13 places had conflicting results, with 49 locations showing agreement across APIs.

- Potential Conflicts: Two large discrepancies in coordinates for Marghera were identified, with differences exceeding 2.7 km between APIs.

Results After Refinement (12/18)

- Total Places Processed: 46 (decreased due to removing redundant alternative name entities such as "veneziano" from pipeline)

- API Success Rates:

  - Nominatim: Improved to 91.30%.

  - Geodata: Dropped to 0.00%.

  - Wikidata: Decreased to 28.26%.

- Disagreements: Reduced to 9, with 37 locations showing agreement across the APIs that returned a match.

- Potential Conflicts: No significant coordinate conflicts were detected.

Case Study: Brescia

Brescia was part of the Venetian Republic, also known as La Serenissima, from 1426 to 1797, benefitting from its trade network and governance. However, it is not a part of modern-day Venice. Below is the extracted place name information before we refined the pipeline to remove "false positives".

"id": 7,

"place_name": "Brescia",

"place_alternative_name": [

"BrexaJ"

],

"place_index": [

"36",

"51",

"55",

"56",

"60",

"65",

"66",

"67",

"73",

"94",

"101",

"115",

"160",

"171",

"177",

"195",

"200",

"204",

"211",

"223",

"259",

"270",

"298",

"322",

"330",

"334",

"335",

"345",

"382",

"383",

"385",

"446",

"462",

"463",

"468",

"501",

"504",

"518",

"532",

"565",

"600",

"648",

"661"

],

"nominatim_coords": null,

"geodata_coords": null,

"wikidata_coords": [

45.538888888889,

10.220280555556

]

As seen above, modern geolocation tools like Nominatim and Geodata struggle to identify such historical affiliations, as they focus on current administrative boundaries and lack temporal data. Wikidata, by contrast, excels in historical geolocation: in addition to current geolocations, it stores temporal qualifiers and semantic relationships (e.g., "part of" with a specific time frame), which capture historical affiliations and changes in administrative boundaries.

Final Observations

- A threshold of 1000 meters was used to identify potential conflicts between APIs based on large coordinate discrepancies. After refining the pipeline, no conflicts exceeding this threshold were found, suggesting that the new pipeline improved coordinate consistency.

- Nominatim showed the highest success rate and reliability for modern-day Venice entities. We infer that the success rate improved due to the removal of redundant alternative name place entities.

- Geodata's performance was poor, failing to match coordinates effectively.

- Wikidata showed moderate performance but appeared to have issues with accuracy and agreement. However, it excelled at placing entities within a historical context, which is relevant to this project.
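A distance check of the kind behind the 1000-meter threshold can be sketched with the haversine formula. This is an assumption for illustration: the page does not state which distance formula the pipeline uses, the function names are hypothetical, and the coordinates in the example are made up.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlam = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def is_conflict(a: tuple, b: tuple, threshold_m: float = 1000.0) -> bool:
    """Flag two API results as conflicting if they are more than threshold_m apart."""
    return haversine_m(*a, *b) > threshold_m


# Two made-up points 0.01 degrees of latitude apart (roughly 1.1 km).
print(is_conflict((45.0, 12.0), (45.01, 12.0)))
```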

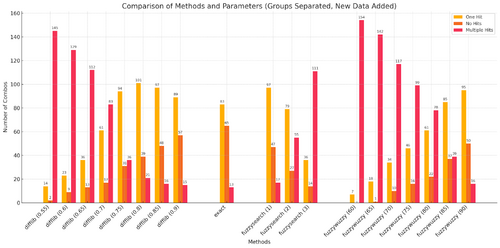

Parameter Tuning for Index-to-Paragraph Matching Methods

We conduct parameter tuning on the fuzzy matching methods (fuzzywuzzy, fuzzysearch, and difflib) to investigate if using a single automated matching method alone can yield decent to good matching results. The tuning focuses on the thresholds used to determine a match:

- For fuzzywuzzy, the threshold is the partial ratio (percentage similarity) between two strings required to count as a match.

- For difflib, the threshold is the similarity ratio calculated by its SequenceMatcher to consider the strings similar enough.

- For fuzzysearch, the threshold is the maximum allowed distance (number of character differences) between strings.

The parameter arrays tested are as follows:

{

"fuzzywuzzy": [60, 65, 70, 75, 80, 85, 90],

"fuzzysearch": [1, 2, 3],

"difflib": [0.55, 0.60, 0.65, 0.70, 0.75, 0.80, 0.85, 0.90]

}

For evaluation, we plot the total number of matches (hits) versus the column number for each method and parameter combination. This includes comparisons with the baseline exact matching scheme to determine how much each method overmatches (returns too many results) or undermatches (returns too few results).

A preliminary (but by no means definitive) look at the geographical index reveals that most place_index + place_name combinations typically have exactly one matching paragraph. Therefore, our goal is to:

1. Maximize the number of combinations with exactly one match.

2. Minimize the number of combinations with no matches or multiple matches.
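The two goals above amount to sorting every (place name, column) combination into one of three buckets. A minimal sketch of that tally, with a hypothetical `tally_hits` helper and made-up hit counts, could look like this:

```python
from collections import Counter


def tally_hits(hit_counts: dict) -> Counter:
    """Bucket (place_name, column) combinations by number of matching paragraphs."""
    tally = Counter({"one": 0, "none": 0, "multiple": 0})
    for hits in hit_counts.values():
        if hits == 0:
            tally["none"] += 1
        elif hits == 1:
            tally["one"] += 1
        else:
            tally["multiple"] += 1
    return tally


# Made-up counts for illustration only; not results from the project.
toy_counts = {("Marghera", 111): 1, ("Marghera", 163): 2, ("Marano", 496): 0}
result = tally_hits(toy_counts)
print(dict(result))
```

Running the same tally for each method and threshold gives the "One Hit", "No Hits" and "Multiple Hits" figures that are compared below.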

Results are as follows:

Top Candidates

- Method: fuzzywuzzy (threshold = 85)
  - One Hit: 85
  - No Hits: 37
  - Multiple Hits: 39
  - Pros: Achieves a relatively high count of "One Hit" results, with moderate levels of "No Hits" and "Multiple Hits."
  - Cons: Has the highest "Multiple Hits" count of the three top candidates.

- Method: difflib (threshold = 0.75)
  - One Hit: 94
  - No Hits: 31
  - Multiple Hits: 36
  - Pros: Provides the best balance, with a very high "One Hit" count and the lowest "No Hits" count.
  - Cons: "Multiple Hits" (36) remains well above difflib (0.8) and only slightly below fuzzywuzzy (85).

- Method: difflib (threshold = 0.8)
  - One Hit: 101
  - No Hits: 39
  - Multiple Hits: 21
  - Pros: Achieves the highest "One Hit" count and significantly reduces "Multiple Hits."
  - Cons: Slightly higher "No Hits" than the other top candidates.

Final Decision

Taking all results into account, difflib (0.75) and difflib (0.8) emerge as the best choices:

- If the priority is to minimize potential false positives (represented by multiple matches), difflib (0.8) is the better choice due to its very low "Multiple Hits" count.

- However, if avoiding false negatives (no matches) is more important, difflib (0.75) strikes a better balance.

Case Study: Matching Performance Analysis for "Marghera"

To evaluate how effectively the non-LLM matching methods capture or fail to capture place_name and column number combinations, we selected "Marghera" (and its alternative name "Margera") as a case study. "Marghera" is a region on the Venetian mainland, historically rural but later industrialized in the 20th century. This case study uses the following index and matching data:

Place Details

- Place Name: "Marghera"

- Alternative Name: "Margera"

- Place Index: [111, 112, 163, 169, 170, 171, 189, 266]

Data Analysis Across Thresholds

The results from applying different difflib thresholds are as follows:

Results at Threshold: Difflib (0.7)

1. True Positives (TP): All valid references to "Marghera" or its variants were detected. Cross-validation confirms that certain text variations caused by corrupted OCR, such as "Margen" (111) and "Muigera" (189), were correctly matched as "Margera."

2. False Positives (FP):

- Column 163: Likely triggered by unrelated words such as "materia."

- Column 171: Triggered by irrelevant words like "mandati," "Mario," and "Marco."

3. Summary: While this configuration performs well in identifying valid matches, it also introduces a higher number of false positives (e.g., columns 163 and 171).

- Metrics:

- TP: 12, FP: 3, FN: 0 (counts are total hits; the same applies to the TP, FP, and FN figures below)

- Precision: 0.8

- Recall: 1.0

- F1-Score: 0.889

Results at Threshold: Difflib (0.75)

1. Improvements over 0.7:

- False positives are reduced, particularly in column 163, with "materia" no longer triggering incorrect matches.

- The remaining false positives are in column 171, caused by unrelated terms such as "mandati," "Mario," and "Marco."

2. Losses from 0.7: One true positive is missed, but the overall accuracy improves as most results are now true positives.

3. Summary: Difflib (0.75) achieves a better balance, reducing false positives significantly while retaining all but one true positive.

- Metrics:

- TP: 11, FP: 2, FN: 1

- Precision: 0.846

- Recall: 0.917

- F1-Score: 0.880

Results at Threshold: Difflib (0.8)

1. True Positives (TP): Successfully detects valid matches in most columns, but there is a slight reduction in the number of true positives.

2. Losses from 0.75: Two true positives are lost, including in column 111 ("Margen"). Two false positives are removed, further improving precision.

3. Summary: Difflib (0.8) produces the most precise results but at the cost of reducing recall, as more valid matches are missed.

- Metrics:

- TP: 9, FP: 0, FN: 3

- Precision: 1.0

- Recall: 0.75

- F1-Score: 0.857
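The metrics reported for the three thresholds follow from the standard definitions of precision, recall and F1. The short sketch below recomputes them from the TP, FP and FN counts given above; the helper name `precision_recall_f1` is hypothetical.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple:
    """Compute precision, recall and F1 from hit counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# (TP, FP, FN) as reported above for each difflib threshold.
reported = {0.7: (12, 3, 0), 0.75: (11, 2, 1), 0.8: (9, 0, 3)}
for threshold, counts in reported.items():
    p, r, f1 = precision_recall_f1(*counts)
    print(threshold, round(p, 3), round(r, 3), round(f1, 3))
```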

Final Observations

No valid instance was missed by every configuration: each instance of "Marghera" or "Margera" was captured by at least one of the schemes, and difflib (0.7) produced no false negatives at all. From this analysis, difflib (0.75) emerges as the optimal configuration for this case study. It strikes the best balance between precision and recall, reducing false positives while retaining nearly all true positives.

Limitations and Future Considerations

Future work on the place name verification pipeline would exclude the Geodata API due to its low utility and continue to use Nominatim for location matching. However, the pipeline should improve historical name mapping, particularly by using Wikidata, whose data model includes temporal information. Future work could include using SPARQL, Wikidata's query language, to make historical queries about a place.

Reflecting on the purpose of the parameter tuning experiments, these results highlight the inherent challenges of relying solely on naive, non-LLM matching methods to achieve the dual objectives of retaining true positive matches and eliminating false positives. While configurations like difflib (0.75) offer a balanced solution for certain scenarios, they still fall short of providing consistently optimal results. The most reliable way to create an accurate geographical-names-and-index-matching list would involve manual curation, cross-validating the outputs from various matching methods and parameter combinations. This approach ensures that nuanced text variations, context, and potential errors are accounted for, albeit at the cost of significant time and effort.

It is also crucial to recognize that these results were obtained from analyzing a single volume. Other volumes of Sanudo’s diary may exhibit textual and contextual variations that could necessitate different combinations of matching methods and parameters to achieve the best results. This variability underscores the need for flexible and adaptive approaches when scaling these techniques to larger datasets.

Another notable limitation in our current approach is the reliance on the provided OCR text as the foundational data source. While this constraint aligns with the scope of the project, it inherently limits accuracy due to the quality of the OCR output. Future iterations of this project could explore alternative tools, such as Tesseract OCR, during the document scanning phase, which might offer improved accuracy in text extraction.

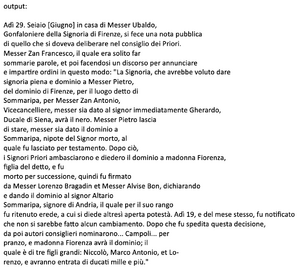

Additionally, early experiments with large language models, such as GPT-4o (as implemented in ChatGPT), to repair and enhance certain OCR-extracted paragraphs have shown intriguing and potentially promising results (see: attached screenshot). These experiments suggest that LLMs could effectively improve text quality by addressing OCR errors and contextual inconsistencies. However, the efficacy of such an approach requires extensive validation and rigorous testing, given that responses from LLMs might vary widely even to the same exact prompt. Furthermore, the integration of LLMs would demand significant computational resources and substantial usage of paid API services, presenting logistical and budgetary challenges.

References

GitHub: https://github.com/cklplanet/FDH-Sanudo-index

- [1] Finlay, Robert. “Politics and History in the Diary of Marino Sanuto.” Renaissance Quarterly, vol. 33, no. 4, 1980, pp. 585–98. JSTOR, https://doi.org/10.2307/2860688.

- [2] "Marino Sanudo." Encyclopaedia Britannica, Encyclopaedia Britannica, Inc. Accessed December 17, 2024. www.britannica.com/biography/Marino-Sanudo-Italian-historian-born-1466.

- [3] Ferguson, Ronnie. “The Tax Return (1515) of Marin Sanudo: Fiscality, Family, and Language in Renaissance Venice.” Italian Studies 79, no. 2 (2024): 137–54. doi:10.1080/00751634.2024.2348379
