WikiBio

Motivation

The motivation for our project was to explore natural-language generation applied to biographies. Structured data is easy to obtain from Wikidata, but not every Wikidata entry has a corresponding Wikipedia page. This project shows how the structured data from Wikidata can be used to generate realistic biographies in the format of Wikipedia pages.

Project plan

| Week | Dates | Goals | Result |
|---|---|---|---|
| 1 | 8.10-15.10 | Exploring data sources | Selected Wikipedia + Wikidata |
| 2 | 15.10-22.10 | Matching textual and structural data | Wikipedia articles matched with Wikidata |
| 3 | 22.10-29.10 | First trained model prototype | GPT-2 was trained on English data |
| 4 | 29.10-5.11 | Acknowledge major modelling problems | GPT-2 was trained on Italian data, issues with Wikipedia page completion and Italian model performance |
| 5 | 5.11-12.11 | Code clean up, midterm preparation | Improved SPARQL request |
| 6 | 12.11-19.11 | Try with XLNet model, more input data, explore evaluation methods | Worse results with XLNet (even with more input data), subjective quality assessment and BLEU/GLEU methods |
| 7 | 19.11-26.11 | Start evaluation surveys and automatic evaluation, improve GPT-2 input data | Survey started, introduced full-article generation with sections |
| 8 | 26.11-3.12 | Productionalization, finish evaluation | Created wikibio.mbien.pl to present our results and started working on API for page uploads |
| 9 | 3.12-10.12 | Productionalization, evaluation analysis | Finished the wikipage, uploaded code and prepared the presentation slides |
| 10 | 10.12-16.12 | Final Presentation | Who knows? |

Data sources

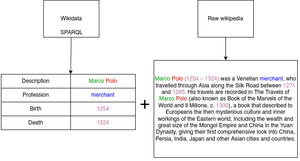

In this project, we used two data sources: Wikidata and Wikipedia.

Wikidata

Wikidata was used to gather structured information about people who lived in the Republic of Venice. To gather this data, a customizable SPARQL query is run against the official Wikidata SPARQL API. Only two strict constraints were set for data filtering:

- Instance of (wdt:P31), human (wd:Q5)

- Citizenship (wdt:P27), Republic of Venice (wd:Q4948)

Optionally, the following data was also gathered; we used each field for generation whenever it existed:

- ID of the item in Wikidata

- itemLabel (wikibase:label) - generally equal to either the person's full name or the Wikidata ID

- itemDescription (schema:description) - short, generally two-word, description of the person

- birth year (wdt:P569)

- death year (wdt:P570)

- profession (wdt:P106)

- last name (wdt:P734)

- first name (wdt:P735)

- link to picture in commons (wdt:P18)
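
As a rough illustration, the two strict constraints and the optional fields listed above could be combined into a single query. This is a minimal sketch, not the project's actual query: the variable names, the English label language, the `build_query` and `run_query` helpers and the User-Agent string are all assumptions. The endpoint is the public Wikidata Query Service.

```python
import json
import urllib.parse
import urllib.request

WIKIDATA_ENDPOINT = "https://query.wikidata.org/sparql"

# Optional properties from the list above; the variable names are illustrative.
OPTIONAL_FIELDS = {
    "wdt:P569": "birth",       # date of birth (the year would be extracted later)
    "wdt:P570": "death",       # date of death
    "wdt:P106": "profession",
    "wdt:P734": "lastName",
    "wdt:P735": "firstName",
    "wdt:P18": "picture",
}


def build_query(limit=None):
    """Build a SPARQL query for humans with Republic of Venice citizenship."""
    optional = "\n".join(
        f"  OPTIONAL {{ ?item {prop} ?{var} . }}"
        for prop, var in OPTIONAL_FIELDS.items()
    )
    variables = " ".join(f"?{var}" for var in OPTIONAL_FIELDS.values())
    query = (
        f"SELECT ?item ?itemLabel ?itemDescription {variables} WHERE {{\n"
        "  ?item wdt:P31 wd:Q5 .\n"      # strict: instance of human
        "  ?item wdt:P27 wd:Q4948 .\n"   # strict: citizen of the Republic of Venice
        f"{optional}\n"
        '  SERVICE wikibase:label { bd:serviceParam wikibase:language "en" . }\n'
        "}"
    )
    if limit is not None:
        query += f"\nLIMIT {int(limit)}"
    return query


def run_query(query):
    """Send the query to the endpoint and return the list of result bindings."""
    url = WIKIDATA_ENDPOINT + "?" + urllib.parse.urlencode(
        {"query": query, "format": "json"}
    )
    # Hypothetical User-Agent; the service expects clients to identify themselves.
    request = urllib.request.Request(url, headers={"User-Agent": "wikibio-example/0.1"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)["results"]["bindings"]
```

Calling `run_query(build_query(limit=10))` would fetch a small sample. Because every non-strict field sits in its own `OPTIONAL` clause, entries lacking some of them are still returned.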

Wikipedia

Wikipedia was used to match the Wikidata entries with the unstructured text of the article about that person. The "Wikipedia" package for Python was used to find the matching pairs and then to extract the matching Wikipedia articles.

Generation methods

The output of the above data sources is prepared jointly in the following manner:

- Each structured entry is transformed to text by putting its custom control token in front of it, and the resulting pieces are concatenated together

- The resulting text is the training sample for the model
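
The serialization step above can be sketched as follows. The control tokens are the ones visible in the example generation input later on this page; the dictionary keys and the `serialize_entry` helper are assumptions made for illustration, and the real preprocessing code may differ.

```python
# (entry key, control token) pairs, in the order used in the example input.
# The keys are hypothetical; the tokens come from the example on this page.
FIELD_TOKENS = [
    ("name", "<|start|>"),
    ("description", "<|description|>"),
    ("professions", "<|professions|>"),
    ("birth", "<|birth|>"),
    ("death", "<|death|>"),
]
SUMMARY_TOKEN = "<|summary|>"


def serialize_entry(entry):
    """Turn one structured entry into a control-token prefixed text prompt."""
    parts = []
    for key, token in FIELD_TOKENS:
        value = entry.get(key)
        if isinstance(value, (list, tuple)):
            # Multi-valued fields (e.g. professions) are joined with commas.
            value = ", ".join(str(v) for v in value)
        if value is None or value == "":
            continue  # optional field missing: skip its token entirely
        parts.append(f"{token} {value}")
    parts.append(SUMMARY_TOKEN)  # marks where the free text starts
    return " ".join(parts)


entry = {
    "name": "Marco Polo",
    "description": "Painter",
    "professions": ["Painter", "Writer"],
    "birth": 1720,
    "death": 1793,
}
prompt = serialize_entry(entry)
```

For this entry, `prompt` equals the input shown in the example generation output section. Missing optional fields are simply left out, so the model sees only the tokens for which data exists.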

We considered two language model architectures: XLNet and GPT-2. The comparison of the models and the reasons for selecting GPT-2 are described below.

XLNet

XLNet is a mid-2019 bi-directional language model, mostly used for tasks like sentiment analysis, classification and word correction. However, it was interesting for us because it can be trained on, and generate, text samples of virtually unlimited length.

Pros:

- variable text length

- modern architecture

Cons:

- bi-directionality is not of much use here and takes more memory

- considerably lower performance on text generation tasks than GPT-2

Finally, our tests have shown that we should not consider XLNet for our biography generation effort, for a number of reasons:

- there is no inherent limit on text sample length, but GPU RAM is limited, which makes a cap of ~1500 tokens necessary anyway

- the model performs poorly with short inputs

- the text is grammatically correct, but its coherence with the semi-structured data is very poor, presumably due to the bi-directional nature of the model

GPT-2

GPT-2 is an early-2019 causal (left-to-right) language model. It is widely used for text generation, and as such it was perceived as our best shot at decent language modeling performance.

Pros:

- modern architecture

- left-to-right: memory-efficient

- widely used for text generation

Cons:

- fixed context window of 1024 tokens

The model coped well with the semi-structured input based on the underlying Wikidata. It allowed us to generate short but consistent biographies.

Example generation output

- Input: <|start|> Marco Polo <|description|> Painter <|professions|> Painter, Writer <|birth|> 1720 <|death|> 1793 <|summary|>

- Output: Marco Polo (1720 – 1793) was a German painter of a distinguished life of high quality. In 1740 he was the first to paint in an Italian Renaissance style. He served as the painter of Giovanni Venez in Venice; his brother was Giovanni Magnan and daughter was a painter of his own time, Marco Polo. During his career he collaborated with the great Venetian painter Giovanni Battista Gugliati in the work for the Porte della Prudina, which was published in 1714. After his death, he would leave his paintings at Venice for the Palace of Santa Martina.

Generation hyperparameters tuning

Productionalization

Platform

To serve the generated biographies, we created a MediaWiki website: https://wikibio.mbien.pl/. The platform ran on a private server with a docker-compose stack. The containers used were:

- mediawiki 1.35 from https://hub.docker.com/_/mediawiki

- mariadb 10.5 from https://hub.docker.com/_/mariadb

The server was also running traefik 1.7 as an ingress gateway and TLS terminator.

Functionalities such as Infobox:Person required significant manual modifications to the vanilla MediaWiki installation. To enable them, we had to perform all the operations described in the following tutorial: https://www.mediawiki.org/wiki/Manual:Importing_Wikipedia_infoboxes_tutorial. Finally, the logos and favicons were replaced with the coat of arms of the Republic of Venice, to give the platform a more pleasant look.

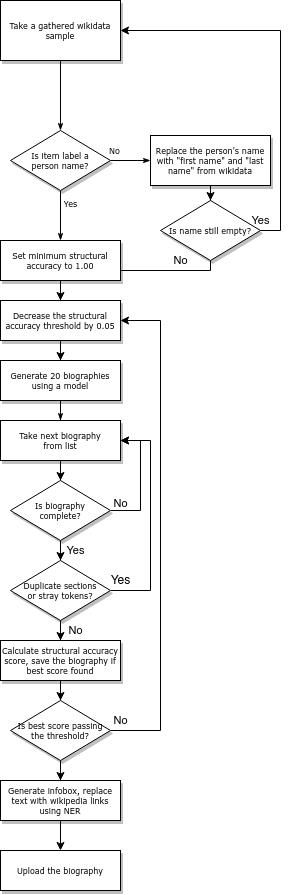

Automatic generation pipeline

The final generation pipeline was a complex, multi-phase operation. While the survey-based evaluation of our model was meant to show the overall performance, here we wanted to keep only the well-conditioned biographies, ready to be served to the users. The overall pipeline is shown in the following diagram, with details explained in the subsections below.

Post-processing wikidata

In general, the itemLabel extracted from Wikidata was meant to contain the person's full name. However, this was not always the case: some labels contained the Wikidata ID of the entry instead. These samples were identified and, whenever possible, their labels were replaced with the first and last name acquired via the optional data fields. If those fields were missing as well, the sample was dropped, as it did not carry enough information to start the generation.
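
A minimal sketch of this clean-up step is shown below. It assumes that a label holding a Wikidata ID looks like `Q` followed by digits, and that each sample is a dictionary with the hypothetical keys `itemLabel`, `firstName` and `lastName`; requiring both names before repairing a label is also an assumption.

```python
import re

# A Wikidata item ID is the letter Q followed by digits, e.g. "Q4948".
QID_PATTERN = re.compile(r"Q\d+")


def repair_label(sample):
    """Return the sample with a usable label, or None if it must be dropped."""
    label = (sample.get("itemLabel") or "").strip()
    if label and not QID_PATTERN.fullmatch(label):
        return sample  # the label already looks like a real name
    first, last = sample.get("firstName"), sample.get("lastName")
    if first and last:
        # Rebuild the label from the optional name fields.
        return {**sample, "itemLabel": f"{first} {last}"}
    return None  # not enough information to start the generation


def clean_samples(samples):
    """Apply repair_label to every sample and discard the dropped ones."""
    repaired = (repair_label(s) for s in samples)
    return [s for s in repaired if s is not None]
```

`clean_samples` leaves correctly labelled samples unchanged, so it is safe to run over the whole dataset.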

Generation with structural accuracy score

After the data was ready, we used our fine-tuned GPT2-medium model to generate 20 biographies. Each of the generated biographies was then checked for validity:

- Whether it was complete: it has fewer than 1024 tokens, or its last token is the pad or end token

- Whether there were no duplicate sections

- Whether there were no generated control tokens in the textual part of the biography
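
The three checks above can be sketched as plain functions. The control-token pattern follows the `<|...|>` form seen in the example input; the function names, the way section titles are passed in, and the case-insensitive comparison of titles are assumptions, since the format of generated sections is not specified here.

```python
import re

MAX_TOKENS = 1024  # GPT-2 context size mentioned above
CONTROL_TOKEN = re.compile(r"<\|[^|<>]+\|>")  # matches e.g. <|summary|>


def is_complete(token_ids, pad_id, end_id, max_tokens=MAX_TOKENS):
    """Complete if shorter than the context, or explicitly terminated."""
    return len(token_ids) < max_tokens or token_ids[-1] in (pad_id, end_id)


def has_duplicate_sections(section_titles):
    """True if the same section title appears more than once."""
    normalized = [title.strip().lower() for title in section_titles]
    return len(normalized) != len(set(normalized))


def has_control_tokens(body_text):
    """True if a control token leaked into the textual part."""
    return CONTROL_TOKEN.search(body_text) is not None


def is_valid(token_ids, section_titles, body_text, pad_id, end_id):
    """Combine the three checks from the list above."""
    return (
        is_complete(token_ids, pad_id, end_id)
        and not has_duplicate_sections(section_titles)
        and not has_control_tokens(body_text)
    )
```

A candidate that fills the whole 1024-token context without ending on a pad or end token is treated as truncated and rejected.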

Then, the structural accuracy scores were calculated for each of the remaining biographies (see the metric details in the Evaluation section). If the best biography reached a structural accuracy above 0.95, it was accepted. Otherwise, the threshold was decreased by 0.05, and the generation procedure was repeated.
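
The accept-or-relax loop described above can be sketched as below. The generator, validity check and scoring function are passed in as parameters because their real implementations are not shown on this page; the function name, the rounding of the threshold and the stop condition once the threshold drops below zero are assumptions.

```python
def generate_accepted(generate_batch, is_valid, score,
                      start_threshold=0.95, step=0.05, batch_size=20):
    """Generate batches until the best valid candidate beats the threshold.

    generate_batch(n) -> list of n candidate biographies
    is_valid(candidate) -> bool
    score(candidate) -> structural accuracy in [0, 1]
    Returns (best_candidate, threshold_used), or (None, None) on failure.
    """
    threshold = start_threshold
    while threshold >= 0:
        # Keep only the candidates that pass the validity checks.
        candidates = [c for c in generate_batch(batch_size) if is_valid(c)]
        if candidates:
            best = max(candidates, key=score)
            if score(best) > threshold:
                return best, threshold
        # Relax the requirement and try again with a fresh batch.
        # Rounding avoids floating-point drift such as 0.8999999999.
        threshold = round(threshold - step, 2)
    return None, None
```

Lowering the threshold gradually trades structural accuracy for the guarantee that the loop ends, so entries that are hard to generate still tend to get a biography, only with a weaker match to their structured data.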

Procedural enrichment of the output

Evaluation

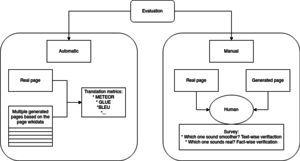

Objective (automatic)

Translation metrics

Structural accuracy

KNN over top TF-IDF Keywords for Profession in Time (Top-TIKPIT) matrix (Top-TIKPIT Neighbours)

Subjective (survey)

Trivia

After setting everything up, we googled randomly for WikiBio, and found that there was a dataset for table-to-text generation (so, a very similar task) created just two years ago, with the same name! https://www.semanticscholar.org/paper/Table-to-text-Generation-by-Structure-aware-Seq2seq-Liu-Wang/3580d8a5e7584e98d547ebfed900749d347f6714 We promise there was no naming inspiration, and it's quite funny to see that coincidence.