Europeana: A New Spatiotemporal Search Engine

Introduction and Motivation


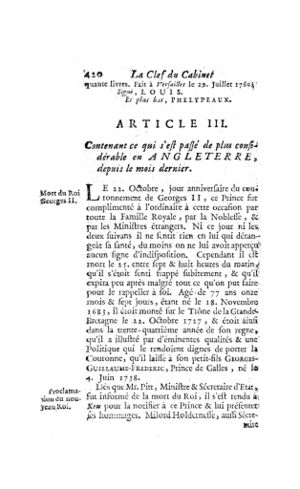

Europeana, a repository of Europe's digital cultural heritage, covers many themes, such as art, photography, and newspapers. Because its collections are so diverse, it is difficult to present every kind of material in a way that suits its content. Searching for a specific topic can take several steps, and the results may still not match what the user was looking for. After studying the structure of Europeana in depth, we decided to build a new search engine that presents resources according to their content. Given the time available and the size of our group, we chose the Newspapers theme and, to narrow the task further, a single title: La clef du cabinet des princes de l'Europe.

La clef du cabinet des princes de l'Europe was the first magazine published in Luxembourg. It appeared monthly from July 1704 to July 1794. Europeana holds 1,317 issues of the magazine, each with between 75 and 85 pages. To reduce the data to a volume we could process on our laptops, we randomly selected 8,000 pages spanning the whole publication period.

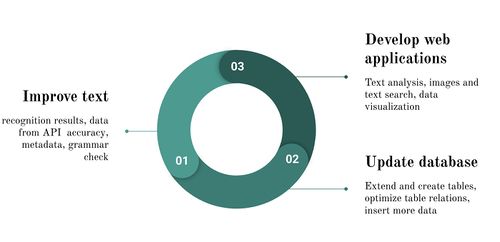

To present this magazine better in our engine, our work consists mainly of OCR, text analysis, database design, and web page design.

OCR (optical character recognition) is the electronic or mechanical conversion of images of typed, handwritten, or printed text into machine-encoded text. Converting physical documents into digital form not only helps preserve historical and cultural heritage but also makes in-depth computational analysis possible. In our work, we used OCR to convert the page images of the magazine into text and stored the text in the database, which makes it much easier to process.

For the text analysis, we applied three methods to the OCR text: named entity recognition, LDA (latent Dirichlet allocation), and n-grams.

To present the magazine, we developed a web page that provides search and analysis functions. The web page is designed to be interactive and to give users an efficient way to reach the content they are looking for.

Deliverables

- The 8000 pages of La clef du cabinet des princes de l'Europe from July 1704 to July 1794 in image format from Europeana's website.

- The OCR results for 8000 pages in text format.

- The dataset of the text and of the text analysis results based on LDA, named entity recognition, and n-grams.

- The webpage to present the contents and analysis results for La clef du cabinet des princes de l'Europe.

- The GitHub repository containing all the code for the project.

Methodologies

This project has three main parts: text processing, database development, and web applications, which were improved together in an iterative process. The toolkit consists of Python for text processing and the web application, MySQL for the database, and Flask as the web framework. The final dataset covers 100 newspaper issues (7,950 pages) in four versions: the page images, the text provided by Europeana, the text produced by our OCR, and the OCR text refined by a grammar checker.

Text processing

Data acquisition

Using the API provided by Europeana's staff, we collected the data with a web crawler. We first retrieve the unique identifier of each issue and then use it to fetch the image URL and the OCR text provided by Europeana. We also record the publication date and page number of every image, which lets us locate and retrieve each page later. The data is stored in the format <Title, Year, Month, Page, Identifier, Image_url, Text>. The crawling results are summarized below.

- 1317 Issues

- Number of pages per issue: roughly 80 pages

- Number of words per page: roughly 200-300 words
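A minimal sketch of the stored page record, assuming the records are written to CSV before being loaded into the database. The class name `PageRecord` and the functions `write_records` and `read_records` are hypothetical, and the HTTP calls to the Europeana API are deliberately left out because the exact endpoints and parameters used by the project are not given here.

```python
import csv
import io
from dataclasses import astuple, dataclass, fields


@dataclass
class PageRecord:
    """One crawled page: <Title, Year, Month, Page, Identifier, Image_url, Text>."""
    title: str
    year: int
    month: int
    page: int
    identifier: str
    image_url: str
    text: str


def write_records(records, stream):
    """Write records as CSV, preceded by a header row of field names."""
    writer = csv.writer(stream)
    writer.writerow([f.name for f in fields(PageRecord)])
    for record in records:
        writer.writerow(astuple(record))


def read_records(stream):
    """Read records back from CSV, converting the numeric columns to int."""
    return [
        PageRecord(
            title=row["title"],
            year=int(row["year"]),
            month=int(row["month"]),
            page=int(row["page"]),
            identifier=row["identifier"],
            image_url=row["image_url"],
            text=row["text"],
        )
        for row in csv.DictReader(stream)
    ]


if __name__ == "__main__":
    # Hypothetical sample values; real identifiers and URLs come from the API.
    sample = PageRecord("La clef du cabinet des princes de l'Europe", 1704, 7, 1,
                        "hypothetical-issue-id", "https://example.org/page1.jpg",
                        "Texte de la page")
    buffer = io.StringIO()
    write_records([sample], buffer)
    print(buffer.getvalue())
```

Keeping year, month, and page as separate integer fields makes it straightforward to use <year, month, page> as a key later on.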

Optical character recognition (OCR)

Ground truth and the model

The reliability of an OCR model depends on both the quantity and the quality of its training data. Quantity requires ground truth to be produced and made freely available to other scholars; quality needs to be properly defined, since philological traditions vary from place to place and from period to period. Successful recognition of this newspaper requires training data that targets 18th-century French and meets standards of both quality and quantity. For this reason, the recognition model used in this project is one trained on OCR17, whose Ground Truth (GT) corpus consists of 30,000 lines taken from 37 French prints of the 17th century, transcribed following strict philological guidelines.

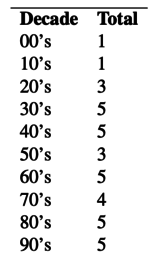

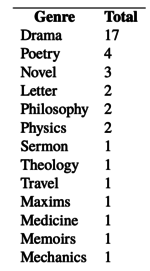

1. Corpus building: The training data was selected according to two main kinds of information: bibliographical (printing date and place, literary genre, author) and computational (size and resolution of the images). Regarding dates, the prints are distributed diachronically across the century, with special attention to books printed between 1620 and 1700. Regarding genre, the result is a two-tier corpus: a primary tier of literary texts (drama, poetry, novels, …) and a secondary tier of scientific works (medicine, mechanics, physics, …).

The corpus is deliberately unbalanced for two main reasons, both related to dramatic texts. First, at the beginning of the 17th century dramatic texts tend to be printed in italics. Second, they traditionally use capital letters to indicate the speaker's name, which is an easy way to increase the number of these rarer glyphs and also helps with highly complex layouts.

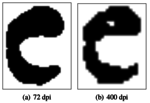

Low image resolution can also cause recognition errors; the model is nevertheless able to handle low-resolution images properly.

2. Transcription rules:

The transcription guideline for this model is to encode as much information as possible, as long as it is available in Unicode. The result is therefore a mix of graphetic and graphemic transcription.

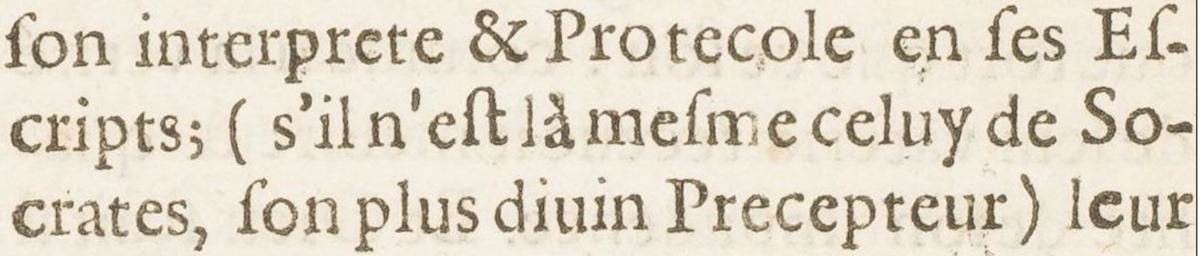

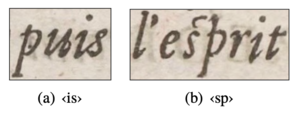

In practice, ‹u›/‹v› (diuin) and ‹i›/‹j› are not dissimilated, accents are not normalised (interprete, not interprète), and historical, diacritical (Eſcripts, not Ecrits) or calligraphic letters (celuy, not celui) are kept. The long s is also kept (meſme, not mesme), but most other allographetic variations are not encoded.

One exception was made to the Unicode rule: aesthetic ligatures that still exist in French (‹œ› vs ‹oe›) are encoded, but ligatures that have disappeared from French are not, even though they exist in Unicode.

3. Model:

The model was trained with the Kraken OCR engine and tested on small out-of-domain samples of 16th-, 18th- and 19th-century prints, in roman or italic typefaces only, to assess how well it generalizes. Besides a model trained with the default setup (network structure, training parameters, …), several modifications were tested to maximize the final scores.

| Model | Test set | 16th c. prints | 18th c. prints | 19th c. prints |
|---|---|---|---|---|
| Basic model | 97.47% | 97.74% | 97.78% | 94.50% |
| Basic model, enlarged network | 97.92% | 98.06% | 97.78% | 94.23% |
| Basic model + artificial data | 96.65% | 97.26% | 97.74% | 95.50% |
| + artificial data, enlarged network | 97.26% | 97.68% | 97.84% | 94.84% |

Training was carried out with Kraken, an optical character recognition package that can be trained fairly easily for a large number of scripts. Some training details are shown below.

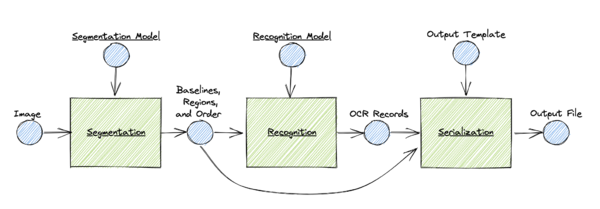

OCR Procedure

The images used for OCR were downloaded through the Europeana API. They have a resolution of 72 dpi and are grayscale with a white background. Such a low resolution noticeably distorts the shapes of letters. However, only a few lines in the images are blurry or affected by glare, so noise is not a major problem for OCR. In addition, because the newspaper's layout is simple and clear, the segmentation results are quite good.

Given these properties, the first step we tried was binarization, which converts grayscale images into black-and-white (BW) images. Comparing the BW images with the originals showed that the characters in the binarized images were fainter and less distinct, so the original grayscale images were used for segmentation instead.
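To illustrate what binarization does, the sketch below applies Otsu's global thresholding to a grayscale image stored as a 2-D list of 0-255 values. This is a swapped-in, deliberately simple method: Kraken ships its own binarizer, and nothing here reproduces it. The function names are hypothetical.

```python
def otsu_threshold(pixels):
    """Return the grey level (0-255) that maximises between-class variance (Otsu's method)."""
    histogram = [0] * 256
    for value in pixels:
        histogram[value] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(histogram))

    best_threshold, best_variance = 0, -1.0
    weight_bg, sum_bg = 0, 0
    for level in range(256):
        weight_bg += histogram[level]      # pixels at or below `level` (ink class)
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg      # pixels above `level` (paper class)
        if weight_fg == 0:
            break
        sum_bg += level * histogram[level]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        variance = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if variance > best_variance:
            best_threshold, best_variance = level, variance
    return best_threshold


def binarize(image):
    """Map a 2-D list of grey levels to 0 (black ink) or 255 (white paper)."""
    flat = [p for row in image for p in row]
    threshold = otsu_threshold(flat)
    return [[0 if p <= threshold else 255 for p in row] for row in image]
```

A single global threshold discards all intermediate grey levels, which helps explain why thin or faint strokes in a low-resolution scan can look weaker after binarization than in the grayscale original.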

The next step is to segment the pages into lines and regions. Since the whole OCR procedure is carried out with the Kraken engine, page segmentation uses Kraken's default trainable baseline segmenter, which can detect both lines of different types and regions.

Finally, recognition takes the grayscale images, their page segmentation, and the model file as input. The recognized text is serialized and written out as a text file.
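Segmentation and recognition can be chained in a single call of the kraken command-line tool. The sketch below only builds and optionally runs that command; the file names and the model file `ocr17.mlmodel` are hypothetical placeholders, the helper names are hypothetical, and the exact flags may differ between Kraken versions, so check `kraken --help` for the installed release.

```python
import shlex
import subprocess


def kraken_command(image, output, model):
    """Build a kraken CLI call: baseline segmentation of the grayscale image,
    then recognition with the given model, writing plain text to `output`."""
    return [
        "kraken",
        "-i", str(image), str(output),  # input image and output text file
        "segment", "-bl",               # trainable baseline segmenter
        "ocr", "-m", str(model),        # recognition model file
    ]


def run_kraken(image, output, model):
    """Run the command; requires kraken to be installed and on PATH."""
    subprocess.run(kraken_command(image, output, model), check=True)


if __name__ == "__main__":
    # Only prints the command line; call run_kraken(...) to actually run it.
    print(shlex.join(kraken_command("page_0001.png", "page_0001.txt", "ocr17.mlmodel")))
```

Building the argument list separately from running it makes it easy to loop over all downloaded pages and to log exactly which command produced each text file.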

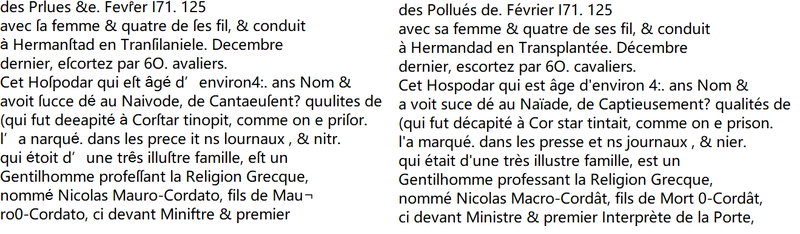

Grammar checker

Because the OCR output preserves old French letterforms (such as the long s), which would hurt the grammar checker's performance, these characters first need to be converted to their modern equivalents. To refine the OCR output, we then used a grammar checker API. After we send a request to the grammar checker's server, it returns a JSON file containing all suggested modifications for the text. The offset and length fields in the JSON locate each word to be modified in the original text, and for every modification we replace the original word with the first suggested value.
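A minimal sketch of the correction step, assuming a LanguageTool-style JSON response in which each match carries `offset`, `length`, and a list of `replacements` with a `value` field, and assuming offsets are counted in the same units as Python string indices. The long-s mapping in `modernize` is a hypothetical, minimal stand-in for the letterform conversion described above, and both function names are hypothetical.

```python
import json

# Hypothetical mapping of historical letterforms to modern ones
# (assumption: only the long s is handled here).
HISTORICAL_TO_MODERN = str.maketrans({"ſ": "s"})


def modernize(text):
    """Replace historical letterforms such as the long s before grammar checking."""
    return text.translate(HISTORICAL_TO_MODERN)


def apply_corrections(text, response_json):
    """Apply the first suggested replacement of every match.

    Matches are applied from the end of the text backwards, so that a
    replacement of a different length does not shift the offsets of
    matches that come earlier in the text.
    """
    matches = json.loads(response_json)["matches"]
    for match in sorted(matches, key=lambda m: m["offset"], reverse=True):
        if not match["replacements"]:
            continue  # the checker flagged the span but suggested nothing
        start = match["offset"]
        end = start + match["length"]
        text = text[:start] + match["replacements"][0]["value"] + text[end:]
    return text
```

Applying the edits back to front is the key design choice: replacing "arrive" with the longer "arrivé" first leaves the offset of an earlier word such as "roy" unchanged.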

Text Analysis

Named entity

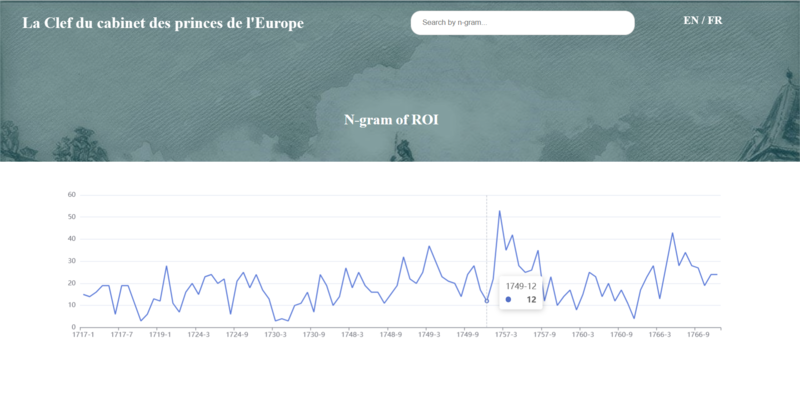

N-gram

In computational linguistics and probability, an n-gram is a contiguous sequence of n items from a given sample of text or speech. Once n is chosen, the structure of a sentence can be modelled through the probabilities of sequences of n words: a window of size n slides over the sentence one word at a time, and each word, together with the n-1 words that follow it, forms one n-gram to be counted. This project uses n-grams to compute word frequencies, which may reflect features of a particular era and how certain words were used during a given time span.
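A sketch of the sliding window and of per-year word frequencies of the kind behind an n-gram search; tokenization by lower-casing and splitting on whitespace is an assumption, and the function names are hypothetical.

```python
from collections import Counter


def ngrams(tokens, n):
    """Slide a window of size n over the tokens and return each n-gram as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_frequencies(text, n=1):
    """Count n-gram frequencies in a text, using lower-cased whitespace tokens."""
    tokens = text.lower().split()
    return Counter(ngrams(tokens, n))


def frequency_by_year(pages, word):
    """Relative frequency of `word` per year; `pages` is an iterable of (year, text)."""
    counts, totals = Counter(), Counter()
    for year, text in pages:
        tokens = text.lower().split()
        counts[year] += tokens.count(word.lower())
        totals[year] += len(tokens)
    return {year: counts[year] / totals[year] for year in sorted(totals) if totals[year]}
```

Normalizing by the number of tokens per year keeps years with many sampled pages from dominating the curve.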

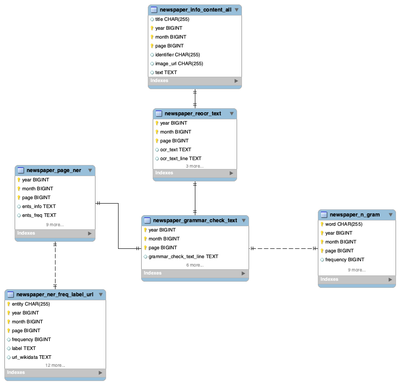

Database development

The project uses MySQL Server 8.0.28. Python interacts with the database through the pymysql and SQLAlchemy packages. The database contains six main tables: newspaper_grammar_check_text, newspaper_info_content_all, newspaper_ner_freq_label_url, newspaper_page_ner, newspaper_reocr_text, and newspaper_n_gram. Details of the database are given in the ER (entity-relationship) diagram.

Table newspaper_info_content_all contains all the original text, image URLs, and date information. Table newspaper_reocr_text contains the text produced by our OCR in two formats: line by line as on the page, and as a single line with the line breaks (\n) removed. Table newspaper_grammar_check_text contains the OCR text after grammar checking, as a single line. Table newspaper_page_ner contains all entity information for a page, such as the entities, their frequencies, and their Wikidata QIDs and URLs, in dictionary format. The primary key of these four tables is <year, month, page>, so each row represents one page. Table newspaper_ner_freq_label_url stores entities in a sparse form: each row represents one entity on one page. The last table, newspaper_n_gram, contains every word in the text with its frequency; each row represents one word on one page.

This design is intended to support the web application, in particular search and visualization.
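To make the key design concrete, here is a sketch of two of the tables written for SQLite, so that it runs without a MySQL server. Column names other than year, month, and page are hypothetical, and the project's actual MySQL definitions may differ. It shows the composite primary key <year, month, page> of a page-level table and the sparse one-row-per-entity layout.

```python
import sqlite3

# SQLite stand-in for two of the MySQL tables; column names are hypothetical.
SCHEMA = """
CREATE TABLE newspaper_info_content_all (
    year INTEGER NOT NULL,
    month INTEGER NOT NULL,
    page INTEGER NOT NULL,
    image_url TEXT,
    text TEXT,
    PRIMARY KEY (year, month, page)           -- one row per page
);
CREATE TABLE newspaper_ner_freq_label_url (
    year INTEGER NOT NULL,
    month INTEGER NOT NULL,
    page INTEGER NOT NULL,
    entity TEXT NOT NULL,
    label TEXT,                               -- e.g. People, Location, Organization
    freq INTEGER,
    url TEXT,
    PRIMARY KEY (year, month, page, entity),  -- one row per entity per page
    FOREIGN KEY (year, month, page)
        REFERENCES newspaper_info_content_all (year, month, page)
);
"""


def create_database(path=":memory:"):
    """Create the two tables and return an open connection."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn
```

Because the page key is composite, a second row for the same year, month, and page is rejected, while the entity table can hold any number of rows per page.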

Webpage applications

Tools

Flask is a Python framework for building web applications, known for being small, lightweight, and simple. MySQL is a database system widely used in web-based software applications. We use Flask to build the web pages and connect them to the local MySQL database, so that data can be retrieved from the local server and presented on the web page.

Feature Design

Our main goal is to design an efficient system that indexes the content of the newspaper and makes it searchable. Beyond retrieval, we also want to offer users useful insights. This may involve techniques such as full-text indexing, metadata indexing, and natural language processing. We therefore designed the following web features.

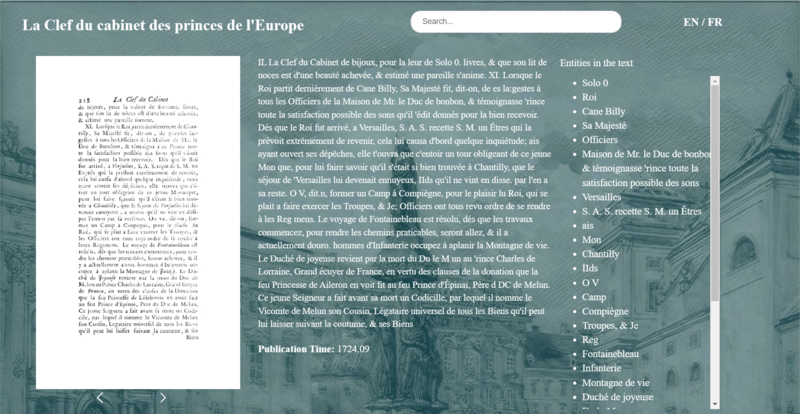

- Search Page
  - Full-text Search: Allow users to search the full text of the newspaper collection.
  - Named Entity Search: Create facets from the different types of named entities and allow users to search by them.
  - N-gram Search: Show how word frequencies change over a long time period, as a source of ideas for further exploration.
- Detailed Page
  - Display the full text.
  - Show the named entities contained in the page, with external links that help users understand them.
  - Navigate to the previous or next page.

Retrieval Method

The web application supports full-text search and facet search.

- Full-text Search
  - Users can search documents by the full text of the newspaper. The query uses fuzzy matching, which returns more results even when the search keyword is not very precise.
- Facet Search
  - For facet search, we created three categories for retrieval: People, Location, and Organization. Facet search also uses fuzzy matching to return as many results as possible. In addition, we count the occurrences of the search keyword in each page and sort the results in descending order, which puts the most relevant pages first.
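The two retrieval modes can be sketched with SQL `LIKE` patterns as the fuzzy match, which is an assumption about how the fuzzy matching is implemented. The table names `pages` and `entities`, the helper `build_demo_db`, and the sample rows are hypothetical; facet ranking here sums a stored per-page entity frequency. SQLite's `LIKE` is case-insensitive only for ASCII letters, while MySQL's behaviour depends on the column collation.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with hypothetical sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE pages (year INTEGER, month INTEGER, page INTEGER, text TEXT);
        CREATE TABLE entities (year INTEGER, month INTEGER, page INTEGER,
                               entity TEXT, label TEXT, freq INTEGER);
        INSERT INTO pages VALUES (1704, 7, 1, 'Le Roi Louis XIV est à Versailles');
        INSERT INTO pages VALUES (1704, 7, 2, 'Nouvelles de Vienne');
        INSERT INTO entities VALUES (1704, 7, 1, 'Louis XIV', 'People', 3);
        INSERT INTO entities VALUES (1704, 7, 1, 'Louis de Bourbon', 'People', 1);
        INSERT INTO entities VALUES (1704, 7, 2, 'Louis XIV', 'People', 1);
        INSERT INTO entities VALUES (1704, 7, 2, 'Vienne', 'Location', 2);
        """
    )
    return conn


def full_text_search(conn, keyword):
    """Fuzzy full-text search: pages whose text contains the keyword anywhere."""
    return conn.execute(
        "SELECT year, month, page FROM pages WHERE text LIKE ? "
        "ORDER BY year, month, page",
        (f"%{keyword}%",),
    ).fetchall()


def facet_search(conn, keyword, category):
    """Fuzzy entity search within one facet, ranked by occurrences per page."""
    return conn.execute(
        "SELECT year, month, page, SUM(freq) AS hits FROM entities "
        "WHERE label = ? AND entity LIKE ? "
        "GROUP BY year, month, page ORDER BY hits DESC",
        (category, f"%{keyword}%"),
    ).fetchall()
```

Passing the pattern as a query parameter rather than formatting it into the SQL string keeps user input from being interpreted as SQL.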

Interface

We wanted the website to have a coherent theme and to be easy to use and navigate, with a clear hierarchy and a logical organization of content. We designed the search engine's interface following these principles, as shown below.

Quality Assessment

Subjective Assessment

Objective Assessment

- Dictionary Method
  - Original text: 0.56
  - Reocr text: 0.57
  - Text refined by grammar checker: 0.61
- Entropy method

| Text | 1-gram entropy | 2-gram entropy |
|---|---|---|
| Old French | 4.24 | 7.88 |
| Original text | 4.27 | 7.99 |
| Reocr text | 4.13 | 7.74 |
| Grammar checker refined text | 4.17 | 7.76 |
| Random text | 5.41 | 10.83 |
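The section does not spell out how the two scores are computed. One plausible reading, offered as an assumption: the dictionary score is the share of tokens found in a reference word list, and the entropy is the Shannon entropy of the empirical n-gram distribution. The sketch uses base-2 logarithms; the logarithm base and token unit behind the reported values are not stated, and the function names are hypothetical.

```python
import math
from collections import Counter


def dictionary_ratio(tokens, dictionary):
    """Share of tokens found in a reference word list (assumed metric)."""
    if not tokens:
        return 0.0
    return sum(1 for t in tokens if t.lower() in dictionary) / len(tokens)


def ngram_entropy(tokens, n):
    """Shannon entropy, in bits, of the empirical n-gram distribution."""
    grams = Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    total = sum(grams.values())
    return -sum((c / total) * math.log2(c / total) for c in grams.values())
```

Under this reading, text that is closer to natural language (fewer spurious character or word combinations) yields lower entropy than random text, and a higher dictionary ratio.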

Limitations

OCR recognition quality needs to be improved

Given the printing and papermaking technology of the time and the age of the documents, the surviving material may be considerably degraded. The pages of the magazine selected for this project, for example, contain a great deal of 'noise', which makes text recognition difficult. Although steps such as segmentation and binarization were applied in our OCR process, the noise can still affect OCR performance to some degree. In addition, because the OCR model we selected was trained on other French prints of the 17th century, it may not fit this magazine well, which can also affect the OCR results. We suggest two possible improvements addressing these issues. For noise in the page images, image-processing methods for noise removal could be added to the OCR preprocessing stage. To improve recognition of this particular magazine, a new training dataset could be created, although producing ground truth manually may be a heavy burden.

Project Plan and Milestones

| Date | Task | Completion |
|---|---|---|
| By Week 3 | | ✓ |
| By Week 5 | | ✓ |
| By Week 6 | | ✓ |
| By Week 7 | | ✓ |
| By Week 8 | | ✓ |
| By Week 9 | | ✓ |
| By Week 10 | | ✓ |
| By Week 11 | | ✓ |
| By Week 12 | | ✓ |
| By Week 13 | | ✓ |
| By Week 14 | | ✓ |

GitHub Repository

https://github.com/XinyiDyee/Europeana-Search-Engine

Reference

G. (2013, August 4). Entropy for N-Grams. Normal-extensions. Retrieved December 21, 2022, from https://kiko.gfjaru.com/2013/08/04/entropy-for-n-grams/