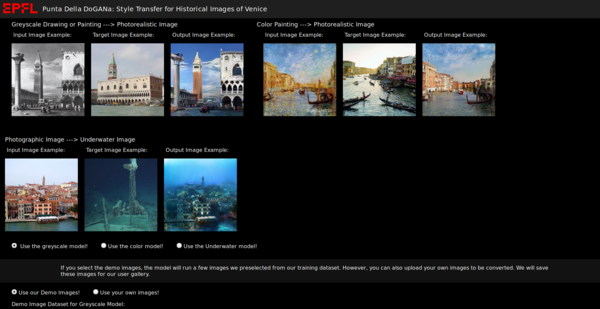

Photorealistic rendering of painting + Venice Underwater

Abstract

The main goal of our project is to automatically transform old paintings and drawings of Venice into photorealistic representations of the past, thus creating photos that were never taken. To achieve this goal, we use style-transfer generative adversarial networks (GANs) that learn what separates a drawing or painting from a photograph, and then apply the trained models to historical artworks.

After reviewing the current literature in the field, we settled on implementing the relatively new Contrastive Unpaired Translation (CUT) GAN with some key modifications. The first was a new auxiliary loss function that enforces geometric continuity between the original and generated buildings. The second was the addition of spectral normalization and self-attention layers, which allowed us to effectively expand the size of the network. The target data is a dataset of real photos of Venice, and the input is a dataset of either monochrome drawings, monochrome paintings, or color paintings. The trained models take a drawing or painting as input and deliver its photorealistic representation as output.

As a subgoal of the project, we trained an additional GAN to create a visual representation of Venice as an underwater city.

Motivation

The primary goal of our project is to be able to see historical Venice through a camera lens. Many records document the visual appearance of Venice through drawings and paintings, but we cannot go back in time to see what the city would have looked like in reality, as in a photo. The GAN models that we develop in this project address this problem: they render photorealistic depictions of historical Venice from paintings and drawings of the city, allowing us to “travel back in time” to see how Venice looked in reality.

But historical Venice is only half the story: Venice is slowly sinking, and we also want to see what it might look like in the future. We therefore added a secondary goal to our project, in which we “travel forward in time”: we trained an additional GAN model that renders photos of present-day Venice as photos of Venice as a sunken city.

We hope that readers enjoy this journey through time in the city of Venice!

Milestones

Milestone 1

- Choose a GAN architecture to use as the base architecture for the project

  - Selected architecture: Contrastive Unpaired Translation[1] (CUT)

- Data collection (web scraping and CINI foundation):

  - Color paintings

  - Monochrome paintings

  - Monochrome drawings

  - Underwater images

  - Modern photos of Venice

Milestone 2

- Clean collected images:

  - Restrict images to those that show more "standard" subjects: outdoor images of Venice

  - Crop out image frames and watermarks

- Test initial results of the CUT model without hyperparameter tuning

Milestone 3

- Perform hyperparameter tuning on the CUT model for each of the final models (color, monochrome, underwater)

- Look into model extensions in order to enhance learning ability

  - Additions: Canny edge detection loss, spectral normalization, self-attention layers

Milestone 4

- Find optimal parameters for each model

- Implement a front end to visualize the model predictions

  - Same front end for color and monochrome models: displays input image and output image

  - Underwater model front end: displays input image and output image with a slider representing the rising sea level

Deliverables

We invite the reader to visit our project's GitHub page[2] to test our pretrained models or to train new ones.

This repository includes all the code for our model, so tech-literate users can reproduce our results by training their own models, or use the pretrained models on their own images. As the source code is well documented, it should also be straightforward to extend our work in further research.

We have also implemented a progressive web app that allows the general public to try our different models on their own photos or on photos from our dataset. Although the application is not currently running live, it can easily be compiled to a production version and deployed at a public-facing URL.

The final training data used for each of the three models produced is located in the following folder: /dhlabdata4/makhmuto/DoGANa_datasets/.

Although the code for generating the models, the application, and the collected data are substantial results, we consider the true deliverables of this project to be the trained models and their output images, as our main goal was to create effective and useful style-conversion networks. In this sense, we succeeded on a highly difficult task despite the limitations discussed below. Our networks effectively 'colorized' the monochrome drawings and paintings, with particular success on Venetian landmarks such as St. Mark's Campanile, the Grand Canal, and the dome of Santa Maria della Salute. Likewise, our color painting model smoothed and corrected painterly excess, making the images significantly more photorealistic in texture, structure, and coloration. The underwater model, despite the difficulty of a target dataset that contains no images of Venice, strongly learned underwater coloration, and for some images it even generated the mottled look of submerged objects covered in corals, patinas, and algae.

Methodology

Data collection

One of the primary challenges of this project is the acquisition and processing of a sufficiently large, high-quality and diverse dataset. Our dataset consists of color paintings of Venice, monochrome paintings and drawings of Venice, photos of Venice, and underwater photos. The two main data sources used are the following:

- CINI dataset (provided by EPFL DH lab)

  - ~800 monochrome drawings and graphics of Venice

  - 331 paintings of Venice

- Web scraping:

  - Sources: Google Images, Flickr

  - Data acquired:

    - ~1300 color paintings of Venice

    - ~1600 photos of Venice

    - ~700 images of underwater shipwrecks or sunken cities

Web scraping

We primarily used the Selenium WebDriver for crawling and urllib to download the source images. We used search terms such as “color painting of Venice” in English, Italian, French, Russian, and Mandarin. Since the majority of paintings and drawings depict the same few landmarks, for instance St Mark’s Square, we over-represented photos of these landmarks so that the model would learn more Venice-specific features of the paintings and photos. We thus used additional search terms, such as “color painting st marks square” or “photo st marks square”.
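The download step can be sketched as below. This is a minimal sketch, not the project's scraper: it assumes the image URLs have already been collected (for example by the Selenium crawler, which is not shown), and the function name `download_images`, the `.jpg` file naming, and the content-hash de-duplication are our own illustrative assumptions. Since the same picture often turns up under several search terms and languages, hashing the downloaded bytes is one simple way to avoid saving it twice.

```python
import hashlib
import os
import urllib.request


def download_images(urls, out_dir, timeout=10):
    """Download each URL into out_dir, skipping failures and byte-identical duplicates.

    Hypothetical helper: returns the list of file paths that were written.
    """
    os.makedirs(out_dir, exist_ok=True)
    seen_hashes = set()
    saved = []
    for url in urls:
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                data = response.read()
        except (OSError, ValueError):
            # URLError and timeouts are OSError subclasses; ValueError covers malformed URLs.
            continue
        digest = hashlib.sha1(data).hexdigest()
        if digest in seen_hashes:
            # Same image already downloaded under another search term.
            continue
        seen_hashes.add(digest)
        # Assumes JPEG content; a real scraper would inspect the Content-Type header.
        path = os.path.join(out_dir, f"{len(saved):05d}_{digest[:8]}.jpg")
        with open(path, "wb") as f:
            f.write(data)
        saved.append(path)
    return saved
```

Broken links are silently skipped, which suits large scraping runs where a fraction of search results always fail to download.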

Data processing

The data cleaning and processing component of the project was one of the most crucial steps, as the content and quality of our images defined what the GAN model was able to learn and reproduce. The main tasks of this part of the project were to filter the collected data by content and to crop out irrelevant parts of the images.

Content parsing

We removed web-scraped images with unconventional portrayals of Venice, as well as images that were not semantically related to the search terms. For instance, none of the images in Fig. 2 is a standard depiction of a Venetian setting: alongside the desired images, the searches also returned paintings from Venice (but not of Venice) and paintings of Venetian details that do not show a larger view of the city (just windows or graffiti, for example). We removed such images, as the goal is to create a model that imitates a photorealistic Venice.

Image processing

Some web-scraped images had appropriate content but contained frames or watermarks that we wanted to remove, as in Fig. 3. To do this, we cropped the images either manually or using the Python Imaging Library (PIL).
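When a frame has a roughly uniform color, the crop box can be found automatically. The sketch below is a hypothetical helper, not the project's code: it works on a grayscale image given as a list of rows, treats rows and columns whose pixels all stay within a tolerance of the top-left pixel value as frame, and returns a `(left, upper, right, lower)` box, which is the tuple format PIL's `Image.crop` expects. Watermarks inside the picture would still need manual cropping.

```python
def content_bbox(gray, tolerance=10):
    """Return (left, upper, right, lower) of the region that differs from the frame color.

    `gray` is a list of rows of 0-255 intensities; the top-left pixel is assumed
    to belong to the frame. Right/lower bounds are exclusive, as in PIL.
    """
    height, width = len(gray), len(gray[0])
    frame_value = gray[0][0]

    def is_frame(values):
        return all(abs(v - frame_value) <= tolerance for v in values)

    # Strip frame rows from the top and bottom.
    upper = 0
    while upper < height and is_frame(gray[upper]):
        upper += 1
    lower = height
    while lower > upper and is_frame(gray[lower - 1]):
        lower -= 1
    if upper == lower:
        # The whole image is one flat color: nothing to crop.
        return (0, 0, width, height)

    # Strip frame columns, looking only at the remaining rows.
    rows = gray[upper:lower]
    left = 0
    while left < width and is_frame([row[left] for row in rows]):
        left += 1
    right = width
    while right > left and is_frame([row[right - 1] for row in rows]):
        right -= 1
    return (left, upper, right, lower)
```

With PIL, the pixel rows could come from `img.convert("L")`, and the resulting box would then be passed to `img.crop(...)` on the original color image.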

We also built an augmented dataset: for each image in the original monochrome input dataset, we created a new, modified version with a random blur, zoom, slight rotation, etc. We then combined these modified images with the original monochrome images to form the augmented dataset, which is therefore twice the size of the original.
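The augmentation step can be sketched as follows. In practice these operations would usually be done with PIL (for example `ImageFilter.GaussianBlur` and `Image.rotate`); to stay dependency-free, this sketch instead works on a grayscale image stored as a list of rows, uses a 3×3 box blur applied at random, and resamples with nearest neighbours. The function names and parameter ranges (zoom up to 1.2×, rotation within ±5 degrees) are our assumptions, not the values used in the project.

```python
import math
import random


def box_blur(img):
    """3x3 mean filter; edge pixels average over the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def zoom(img, factor):
    """Center zoom by `factor` >= 1, resampled back to the original size."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    return [[img[round(cy + (y - cy) / factor)][round(cx + (x - cx) / factor)]
             for x in range(w)] for y in range(h)]


def rotate(img, degrees, fill=0):
    """Rotate about the center by inverse mapping; uncovered pixels get `fill`."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    a = math.radians(degrees)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Source coordinates of output pixel (x, y) under the inverse rotation.
            sx = cos_a * (x - cx) + sin_a * (y - cy) + cx
            sy = -sin_a * (x - cx) + cos_a * (y - cy) + cy
            ix, iy = round(sx), round(sy)
            row.append(img[iy][ix] if 0 <= ix < w and 0 <= iy < h else fill)
        out.append(row)
    return out


def augment(img, rng):
    """One randomly modified copy of `img` (hypothetical parameter ranges)."""
    out = box_blur(img) if rng.random() < 0.5 else [row[:] for row in img]
    out = zoom(out, rng.uniform(1.0, 1.2))
    return rotate(out, rng.uniform(-5.0, 5.0))
```

Passing a seeded `random.Random` instance makes the augmented dataset reproducible.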

Final data

After the data cleaning and processing steps, we had the final dataset consisting of:

- 940 monochrome paintings and graphics of Venice

- 901 color paintings of Venice

- 1141 photos of Venice

- 431 underwater images

- 1880 augmented monochrome images

Model selection

At the start of this project, we had planned to implement our model using the CycleGAN architecture, which translates an image from a source domain X to a target domain Y without requiring paired samples from X and Y. During our initial research, however, we found a model that better suited our needs: the Contrastive Unpaired Translation (CUT) model. CUT was presented at ECCV 2020 in August and was created by the same lab behind CycleGAN. It also performs unpaired image-to-image translation, and its authors report better performance than CycleGAN with a shorter training time. More details about the model architecture can be found in the paper.[3]

Model optimization

We optimized the model in two stages: first we tuned some of the original model hyperparameters, and then we added new features: an edge detection inverse loss, spectral normalization, and self-attention blocks.

Original hyperparameter optimization

We tested the following hyperparameters of the original CUT model:[4]

- Learning rate

- Number of epochs with initial learning rate

- Number of epochs with linearly decaying learning rate to zero

We observed that our model tended to overfit with higher learning rates, or with a high number of epochs with the initial learning rate. We thus made sure to do the following in subsequent experiments:

- Set learning rate to lower values (typically between 0.0001 and 0.00018); original default: 0.0002

- Set the number of epochs with the initial learning rate to be significantly lower than the number of epochs with a linearly decaying learning rate (typically 150:300); original default: 200:200
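The schedule described above (a constant learning rate, then linear decay to zero) can be written as a small function. This is a sketch in the spirit of the CUT training options (in the public CUT code these appear to correspond to `--lr`, `--n_epochs` and `--n_epochs_decay`), but the function name and the exact epoch-counting convention here are our own and may differ by one epoch from the library.

```python
def learning_rate(epoch, base_lr=0.00015, n_epochs=150, n_epochs_decay=300):
    """Learning rate for a 1-indexed epoch.

    Constant for the first `n_epochs` epochs, then decays linearly so that it
    reaches zero at epoch n_epochs + n_epochs_decay.
    """
    if epoch <= n_epochs:
        return base_lr
    progress = (epoch - n_epochs) / n_epochs_decay
    return base_lr * max(0.0, 1.0 - progress)


# Compare the tuned 150:300 split with the original 200:200 default (lr 0.0002).
for e in (1, 150, 300, 450):
    print(e, learning_rate(e), learning_rate(e, 0.0002, 200, 200))
```

With the 150:300 split, two thirds of the 450 training epochs are spent with a shrinking learning rate, compared with half under the 200:200 default.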

Edge detection loss

In the initial model results, we observed that the generator was learning to overwrite particularly challenging parts of the images rather than modifying them coherently. This degraded the results: some structures in the input, such as boats or features of a building, were deleted, as we can see in Fig. 4. To combat this issue, we designed and implemented an edge detection inverse loss component in the model.

We use the Canny edge detection algorithm.[5] This algorithm reduces an image to its edges by applying Gaussian smoothing, intensity-gradient computation, non-maximum suppression, and hysteresis thresholding. We then compute the edge images of the generator input and output and compare them using the structural similarity index (SSIM).[6] After multiplying the similarity score by our edge loss parameter (which controls how much weight the model gives to the edge loss), we subtract the result from the standard generator loss derived from the discriminator. This forces the generator to learn not only to create images that satisfy the discriminator, but also to preserve the edges (and thus the structures and objects) of the original input image.
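The way the loss terms are combined can be sketched as follows. To stay dependency-free, the sketch makes two simplifications of its own: a thresholded Sobel gradient magnitude stands in for the full Canny detector (no smoothing, non-maximum suppression or hysteresis), and SSIM is computed once over the whole edge map rather than averaged over local windows as in the standard formulation. The names `sobel_edges`, `global_ssim`, `generator_loss_with_edges` and `edge_loss_weight` are hypothetical. The sketch only illustrates how the terms combine, not how gradients would flow through them during training.

```python
import math


def sobel_edges(gray, threshold=100.0):
    """Binary edge map (1.0 = edge) from the Sobel gradient magnitude.

    Simplified stand-in for Canny. Border pixels are left at 0.0.
    """
    h, w = len(gray), len(gray[0])
    p = gray
    edges = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (p[y - 1][x + 1] + 2 * p[y][x + 1] + p[y + 1][x + 1]
                  - p[y - 1][x - 1] - 2 * p[y][x - 1] - p[y + 1][x - 1])
            gy = (p[y + 1][x - 1] + 2 * p[y + 1][x] + p[y + 1][x + 1]
                  - p[y - 1][x - 1] - 2 * p[y - 1][x] - p[y - 1][x + 1])
            if math.hypot(gx, gy) > threshold:
                edges[y][x] = 1.0
    return edges


def global_ssim(a, b, data_range=1.0):
    """SSIM computed over the whole image as a single window."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    vx = sum((x - mx) * (x - mx) for x in xs) / n
    vy = sum((y - my) * (y - my) for y in ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx * mx + my * my + c1) * (vx + vy + c2))


def generator_loss_with_edges(adversarial_loss, real_gray, fake_gray, edge_loss_weight=1.0):
    """Standard generator loss minus the weighted similarity of the two edge maps.

    A higher SSIM between input and output edges lowers the loss, so the
    generator is rewarded for keeping the input's structures.
    """
    similarity = global_ssim(sobel_edges(real_gray), sobel_edges(fake_gray))
    return adversarial_loss - edge_loss_weight * similarity
```

Subtracting `edge_loss_weight * similarity` is equivalent, up to a constant, to adding `edge_loss_weight * (1 - similarity)`, which is another common way to write such a term.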

Spectral Normalization

Another modification we made to prevent the model from "over-learning" the target data, that is, generating new structures in place of the input image structures, was adding spectral normalization to the resnet blocks. Spectral normalization has been reported to stabilize GAN training and reduce mode collapse, which makes it relevant here, since our problem is broadly analogous to mode collapse. It divides the weight matrix W of each layer by its spectral norm σ(W) (its largest singular value), so that each layer has a Lipschitz constant of at most one, which in turn bounds the Lipschitz constant of the whole network. The weights are renormalized whenever they are updated, which mitigates exploding gradients and mode collapse.
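The core computation behind spectral normalization is an estimate of σ(W) by power iteration, followed by a division. In PyTorch this is typically applied with `torch.nn.utils.spectral_norm` (or `torch.nn.utils.parametrizations.spectral_norm` in newer versions), which keeps a persistent vector and runs one power-iteration step per forward pass. The dependency-free sketch below shows the underlying computation on a small dense matrix; its function names are illustrative, and convolution weights would first be reshaped to a 2-D matrix (output channels × everything else).

```python
import math


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def spectral_norm_estimate(w, n_iters=20):
    """Estimate the largest singular value of w by power iteration."""
    wt = transpose(w)
    # Fixed start vector for reproducibility; library implementations start from a random one.
    u = normalize([1.0] * len(w))
    for _ in range(n_iters):
        v = normalize(matvec(wt, u))  # right singular vector estimate
        u = normalize(matvec(w, v))   # left singular vector estimate
    # sigma is approximately u^T W v
    return sum(a * b for a, b in zip(u, matvec(w, v)))


def spectrally_normalize(w, n_iters=20):
    """Return W / sigma(W), whose spectral norm is (approximately) one."""
    sigma = spectral_norm_estimate(w, n_iters)
    return [[x / sigma for x in row] for row in w]
```

Because each normalized linear map has spectral norm one, it is 1-Lipschitz; with 1-Lipschitz activations such as ReLU, the whole stack is then bounded by the product of these constants.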

Self-attention layers

Self-attention blocks have become very popular in deep learning in recent years, particularly in natural language processing. They have also been used in image classification and in the well-known BigGAN model. They complement convolution blocks because each output position is computed from attention weights over all pixels of the feature map, rather than only adjacent pixels as in a convolutional filter. This roughly allows the attention block to relate subsections of the image that are not contiguous (for example, the buildings on either side of a canal) and apply consistent transformations to them. We tested self-attention blocks after the downscaling operation in three positions: before the resnet blocks, after them, and on both sides of them. When placed after the resnet blocks, the self-attention blocks were too likely to generate new structures, so we used them only before the resnet blocks, where they mostly appeared to learn structural features of the input images.
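A self-attention block of the kind used in SAGAN and BigGAN can be sketched on a flattened feature map: each of the N spatial positions holds a C-dimensional feature vector, queries and keys are compared between every pair of positions, and the softmax-weighted sum of values is added back to the input through a learned scale gamma (initialized to zero in SAGAN, so the block starts as an identity). The projection matrices here are plain nested lists standing in for learned 1×1 convolutions; the function names and shapes are illustrative assumptions, not the project's code.

```python
import math


def project(features, weights):
    """Apply a 1x1-convolution-like linear map to every position.

    features: N x C_in, weights: C_out x C_in  ->  N x C_out
    """
    return [[sum(w * f for w, f in zip(row, feat)) for row in weights] for feat in features]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features, w_query, w_key, w_value, gamma):
    """SAGAN-style self-attention over all N positions of a flattened feature map.

    w_value must map back to C channels so the residual connection is defined.
    Returns the output features and the N x N attention map.
    """
    q = project(features, w_query)
    k = project(features, w_key)
    v = project(features, w_value)
    out, attention_maps = [], []
    for i, qi in enumerate(q):
        # Attention of position i over every position, not only its neighbours.
        weights = softmax([sum(a * b for a, b in zip(qi, kj)) for kj in k])
        attention_maps.append(weights)
        attended = [sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))]
        # Residual connection scaled by the learned gamma.
        out.append([x + gamma * a for x, a in zip(features[i], attended)])
    return out, attention_maps
```

The N×N attention map is why such blocks are usually placed after downsampling, as in the configurations described above: the cost grows quadratically with the number of positions.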

Performance evaluation

One of the foremost challenges in the project was selecting our performance evaluation criteria: since the goal is to make paintings and drawings photorealistic, we had to rely on our subjective judgement of what we consider photorealistic.

We chose to focus on the following criteria to evaluate the performances of our model:

- Appropriate coloration according to reality: correctly colored buildings, no over-saturation for a single color.

- Consistent structures: buildings are not removed in the rendering, and structures are not modified too much; the model should mainly enhance or reduce detail to make the image more realistic.

- Not overfitting to noise: paintings can contain a great amount of detail in brush strokes, for instance in clouds, and the GAN model tends to interpret some of these details as structures, whereas such noise should usually be ignored.

- Comprehensible rendering of underwater Venice (only underwater model): we aim to show a portrayal of an underwater version of Venice that has a similar structure to the input photo, with appropriate coloration and light reflection to make it look like it is indeed underwater.

Fig. 5 and Fig. 6 show examples of poor model performance, according to these evaluation criteria, that we observed while tuning parameters. In Fig. 5, low Canny filter parameters caused the color red from the input image to be over-represented in the output image. In Fig. 6, the model overfits to noise in the input image, transforming a cloud into a building.

To obtain the final underwater model, we mainly focused on learning rate optimization and the edge detection loss.[7]

Quality Assessment

Model results

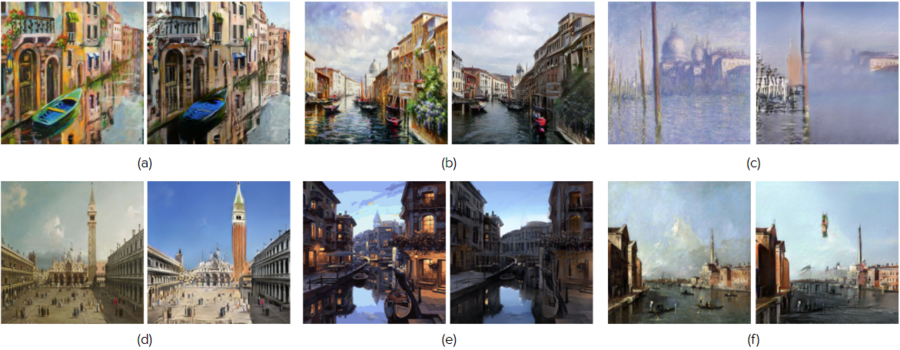

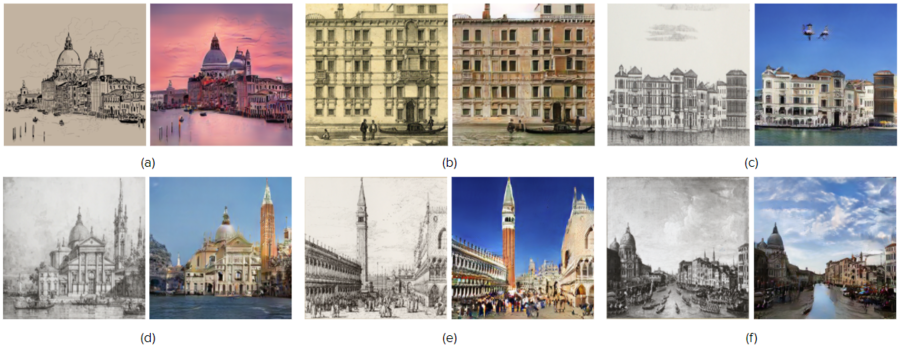

Photorealistic Venice (color and monochrome)

We present the results of the color and monochrome models in Fig. 7 and 8, respectively. The renderings of both models are quite impressive: each model is able to learn colors and structures quite well, although both models appear to have similar caveats in their learning.

The color model effectively attenuates bright colors in Fig. 7a, 7b, and 7e. It also enhances the lackluster sky in Fig. 7d, and even renders St Mark's Campanile wider and significantly brighter, as it is in reality. In addition to correcting some structures to make them more realistic, it properly attenuates some edges, for instance the clouds in Fig. 7b, 7d, and 7e. However, if the clouds are particularly detailed in the color painting, the model attempts to generate structures from them, as we see in Fig. 7f.

The color model generally performs well on more typical color palettes (enhanced compared to reality, as in Fig. 7a and 7b, or muted compared to reality, as in Fig. 7d). However, it does not perform as well on rarer color palettes, as we see in Fig. 7c. This is because the color painting dataset contained few images with similar colors, so the model could not properly learn a style transfer for such palettes.

The monochrome model also performs remarkably well in its choice of colors, as we see in Fig. 8. It has effectively learned the color of the sky, and even of clouds (as in Fig. 8f), and it consistently assigns plausible colors to buildings. In particular, it has learned the colors of specific structures such as St Mark's Campanile, shown in Fig. 8d and 8e, and it is able to identify this landmark from various angles.

We see, however, that the monochrome model suffers from the same problems as the color model: it sometimes perceives non-existent structures in clouds, as in Fig. 8c. Also, more intricate details in the input images are sometimes misinterpreted in the output image; for instance, the boats in the canals are replaced with pavement in Fig. 8f.

Overall, both models are very effective in the first two performance evaluation goals: appropriate coloration and realistic rendering of the painting/drawing subjects. The colors in both photorealistic renderings are consistent with reality, and both models make an effort to generate more realistic structures, by learning patterns and details that are seen in reality and are often portrayed in drawings and paintings.

Both models, however, overfit to noise in the clouds of the input art, generating structures in them. This is a trade-off of using edge detection: the models are better at preserving structures that do exist, but they can also generate structures where none exist.

Underwater Venice

The results of the underwater model are impressively realistic, as can be seen in Fig. 9. Although we tested fewer parameters and collected less data for the underwater model, it was able to learn the general color scheme and structure of underwater settings. We have effectively attained the goal of creating a model that is able to render a comprehensible image of underwater Venice.

However, one detail we had overlooked is that many of our images show the Venice canals: in an underwater rendering of the city, the canal water should ideally disappear (or be replaced with sand, for instance), since the whole scene is already underwater. Achieving this would require more training examples of sunken cities that originally had canals. This would be an interesting extension to consider in further work, in addition to those proposed in Further work.

Limitations

We acknowledge several limitations of our methods, mainly caused by incorrect assumptions and time constraints.

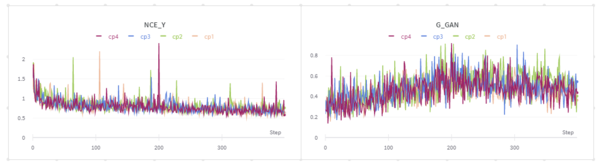

One incorrect assumption we made at the start of the project was that we would be able to distinguish model performance using quantitative measures, such as the generator loss. In the initial tests of the edge detection component, we used sweeps to track the generator and NCE losses of different models, in order to decide how to select the optimal models. However, after initial tests, we observed that the quantitative loss metrics did not vary much between models, whereas their outputs were significantly different. Fig. 10 shows the loss curves of four trained models, and Fig. 11 shows each model's output for the same input image. We therefore continued to evaluate models manually, considering the factors described in the previous section.

Another limitation was that, in addition to the many preexisting hyperparameters of the CUT model, we added multiple extensions, which made the number of parameter combinations too large to test exhaustively in our time frame. We tested as many configurations as we could, occasionally delaying our planning, but with more time we could further optimize model performance by testing more configurations with more training epochs.

A final limitation, which would be easy to address in further work, is the size of the dataset: the color and monochrome models both performed remarkably well in more standard settings, but were less able to correctly interpret some more intricate details of the input drawings and paintings. This could be mitigated with a larger and more varied input dataset, which we unfortunately did not have time to collect, as we prioritized better model performance in more standard settings. Adding more varied input and output images would likely improve the results of our models significantly.

Further work

In further work, one could test more combinations of hyperparameters for our models and train on more, and larger, images. One could also extend the models to more art styles (impressionist paintings, more sketch-like drawings) to improve their performance on more unusual input drawings and paintings.

There are plenty of further modifications that could be made to the model, such as performing spectral regularization at initialization in addition to normalization, adding progressive growing layers to increase image resolution, and experimenting further with self-attention block constraints. Another interesting modification stems from our observation that the model sometimes creates sunset and night-time style images: it may be possible to identify the triggers for such images and add a control to change the style without training a whole new model.

This model could also be used to create photorealistic renderings from paintings of other cities, such as Paris or Rome, although it would have to be retrained on images of these cities. We hope that our project will allow others to see historical portrayals of Venice in a more modern way, to better understand how Venice looked in its earlier days.

Planning

| Week | Tasks |
|---|---|
| 9.11.2020 - 15.11.2020 (week 9) | |
| 16.11.2020 - 22.11.2020 (week 10) | |
| 23.11.2020 - 29.11.2020 (week 11) | |
| 30.11.2020 - 6.12.2020 (week 12) | |
| 7.12.2020 - 13.12.2020 (week 13) | |
| 14.12.2020 - 16.12.2020 (week 14) | |

Links

- GitHub repository for this project

- GitHub: Contrastive Unpaired Translation

- Wikipedia: Canny edge detector (for edge loss implementation)

- Fondazione Giorgio Cini

References

1. Taesung Park, Alexei A. Efros, Richard Zhang, and Jun-Yan Zhu: Contrastive Learning for Unpaired Image-to-Image Translation, in: European Conference on Computer Vision, Berkeley, 2020.

2. GitHub repository for this project

3. Taesung Park, Alexei A. Efros, Richard Zhang, and Jun-Yan Zhu: Contrastive Learning for Unpaired Image-to-Image Translation, in: European Conference on Computer Vision, Berkeley, 2020.

4. GitHub: Contrastive Unpaired Translation

5. Wikipedia: Canny edge detector

6. Wikipedia: Structural similarity

7. Underwater model evaluation