Europeana: mapping postcards

Revision as of 19:33, 19 December 2023

Introduction & Motivation

Introduction

Europeana is a digital platform and cultural heritage initiative funded by the European Union. The "Europeana: Mapping Postcards" project combines historical material with modern data-processing techniques, drawing on Europeana's large collection of digitized postcards. It aims to determine and map where these postcards come from and to bring their stories to life, offering a window into Europe's rich cultural and historical diversity.

Motivation

Despite its value, Europeana's collection has a recurring problem: the geographic information attached to many postcards is ambiguous or incorrect. Such inaccuracies can distort the historical and cultural context of a postcard and lead to misinterpretation.

Our project has two goals. First, we aim to identify and correct the geographic inaccuracies in Europeana's postcard collection using OCR and large language models, including GPT-3.5 and GPT-4, making the dataset more reliable and the postcards' historical and cultural significance easier to understand. Second, we use the corrected and clarified locations to support a more accurate and immersive exploration of Europe's past, turning the postcards into a dynamic digital archive that is both educational and engaging.

Our project marries Europeana's rich historical content with modern data analysis and correction techniques, aiming to create a more precise and engaging window into Europe's cultural heritage. We believe that clarifying these postcards' geographical details will offer a truer and more captivating exploration of European history for today's audience.

Deliverables

- 39,587 postcard records with open copyright status, along with their metadata, retrieved from the Europeana website.

- OCR results of a sample set of 350 images containing text.

- GPT-3.5 prediction results for a sample set of 350 images containing text, based on OCR results.

- A high-quality, manually annotated Ground Truth for a sample set of 309 images.

- GPT-3.5 prediction results for the Ground Truth.

- GPT-4 prediction results for the Ground Truth.

- An interactive webpage displaying the mapping of the postcards.

- A GitHub repository containing all the code for the project.

Methodologies

Data collection

We collected the data with web scrapers built on the APIs provided by Europeana. First, we used the Search API to retrieve all records related to postcards with open copyright status, 39,587 records in total. We then filtered these records with the Record API, keeping only those whose metadata allowed the image to be retrieved directly by the scraper, which left 20,000 records. From this metadata we kept only the attributes relevant to our research, such as the providing country, the providing institution, and any available coordinates. Finally, we randomly sampled from these records and downloaded the corresponding images locally for analysis.
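The collection step can be sketched against the Europeana Search API. This is a minimal sketch under stated assumptions, not the project's scraper: it assumes the public endpoint `https://api.europeana.eu/record/v2/search.json` with `wskey`, `query`, `reusability`, `rows` and `cursor` parameters, and it reads the fields `edmIsShownBy`, `country`, `dataProvider`, `edmPlaceLatitude` and `edmPlaceLongitude` from each result item. It checks for a direct image link in the search results themselves, whereas the project did this filtering with the Record API; the query string and all helper names are hypothetical.

```python
import json
from typing import Iterator, Optional
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint of the Europeana Search API (an API key, "wskey", is required).
SEARCH_ENDPOINT = "https://api.europeana.eu/record/v2/search.json"


def build_search_url(api_key: str, query: str = "postcard", cursor: str = "*", rows: int = 100) -> str:
    """Build the URL for one page of search results, using cursor-based paging."""
    params = {
        "wskey": api_key,
        "query": query,  # hypothetical query string
        "reusability": "open",  # assumption: keep only openly licensed records
        "rows": rows,  # page size
        "cursor": cursor,  # "*" for the first page, then the previous page's nextCursor
    }
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


def iter_search_items(api_key: str, query: str = "postcard") -> Iterator[dict]:
    """Yield every result item, following nextCursor until the last page (needs network access)."""
    cursor: Optional[str] = "*"
    while cursor:
        with urlopen(build_search_url(api_key, query, cursor)) as response:
            page = json.load(response)
        yield from page.get("items", [])
        cursor = page.get("nextCursor")


def _first(value):
    """Most Europeana fields are lists; return the first entry, or None if empty."""
    if isinstance(value, list):
        return value[0] if value else None
    return value


def reduce_item(item: dict) -> Optional[dict]:
    """Keep only the attributes used later; drop records without a direct image link."""
    image_url = _first(item.get("edmIsShownBy"))
    if not image_url:
        return None
    lat = _first(item.get("edmPlaceLatitude"))
    lon = _first(item.get("edmPlaceLongitude"))
    return {
        "id": item.get("id"),
        "image_url": image_url,
        "country": _first(item.get("country")),
        "provider": _first(item.get("dataProvider")),
        "coordinates": (float(lat), float(lon)) if lat and lon else None,
    }
```

In use, `[r for r in map(reduce_item, iter_search_items(key)) if r]` would collect the reduced records, from which a random sample of images can be downloaded.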

Optical character recognition (OCR)

The OCR stage extracts the text printed on postcards from across Europe so that it can then be used to recognize each postcard's geographic location. Because European postcards span many languages and scripts, the project uses a multilingual OCR model to cover as many languages as possible and so improve the completeness and accuracy of recognition.

PaddleOCR

PaddleOCR provides specialized recognition models covering about 80 languages, including Italian and Bulgarian, which makes it particularly well suited to this project.

The postcard images retrieved from Europeana serve as the original input (Fig. 1), and text segmentation (Fig. 2) is performed on these original images.

Based on the OCR results, we remove images that do not contain any textual information from the dataset.

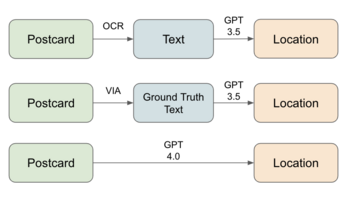
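Assuming the PaddleOCR 2.x Python package, the OCR and filtering steps might look like the sketch below. The `lang="latin"` model choice, the confidence threshold, and the helper names are assumptions for illustration; PaddleOCR 3.x changed its API, so the result format handled here applies to 2.x only.

```python
from typing import Dict, List


def make_engine(lang: str = "latin"):
    """Create a PaddleOCR 2.x engine; imported lazily so the helpers below run without it."""
    from paddleocr import PaddleOCR  # assumption: paddleocr 2.x (the 3.x API differs)
    return PaddleOCR(use_angle_cls=True, lang=lang)


def extract_lines(ocr_result, min_score: float = 0.5) -> List[str]:
    """Flatten PaddleOCR 2.x output (per page: [[box, (text, score)], ...]) into text lines."""
    lines = []
    for page in ocr_result or []:
        if not page:  # PaddleOCR 2.x yields None for a page with no detected text
            continue
        for _box, (text, score) in page:
            # min_score is a hypothetical threshold for discarding unreliable detections
            if score >= min_score and text.strip():
                lines.append(text.strip())
    return lines


def keep_images_with_text(raw_results: Dict[str, list]) -> Dict[str, List[str]]:
    """Map image ID -> recognized lines, dropping images where nothing was recognized."""
    kept = {}
    for image_id, raw in raw_results.items():
        lines = extract_lines(raw)
        if lines:
            kept[image_id] = lines
    return kept
```

With `engine = make_engine()`, calling `keep_images_with_text({pid: engine.ocr(path, cls=True) for pid, path in images.items()})` (where `images` is a hypothetical mapping of IDs to local files) would keep only the postcards with recognized text.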

Prediction using ChatGPT

Applying named-entity recognition (NER) directly to the OCR results performed poorly: the OCR output may contain recognition errors, and the text on a postcard may not name any location at all. We therefore turned to a large language model (LLM), ChatGPT, for this task. Using the OpenAI APIs, we explored two approaches: using GPT-3.5 to predict the location from the OCR results, and using GPT-4 to predict it directly from the images. In both cases we required the model to return a JSON object in a fixed format containing the predicted country and city, which removes the need for a separate NER step. Both methods performed significantly better than our earlier attempts. GPT-4 performed better, since it also had the image itself as additional information, but after several rounds of prompt optimization the GPT-3.5 results were not much worse. Given the difference in cost, GPT-3.5 with an optimized prompt on the OCR results is the more economical option, so we adopted it as our main pipeline.
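The GPT-3.5 step reduces to two pieces: a prompt that asks for a fixed JSON object and a parser that tolerates imperfect replies. The sketch below assumes the `openai` Python package (version 1.0 or later) and the `gpt-3.5-turbo` model name; the prompt text and helper names are hypothetical, not the optimized prompt the project used.

```python
import json
from typing import Dict, List, Optional

# Hypothetical prompt for illustration; the project's optimized prompt is not reproduced here.
SYSTEM_PROMPT = (
    "You will receive text extracted by OCR from a historical European postcard. "
    "The text may contain recognition errors. Infer the place the postcard comes from. "
    'Reply only with JSON of the form {"country": string or null, "city": string or null}.'
)


def build_messages(ocr_text: str) -> List[Dict[str, str]]:
    """Chat messages for one postcard: fixed instructions plus the OCR text."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": ocr_text},
    ]


def parse_prediction(reply: str) -> Dict[str, Optional[str]]:
    """Parse the model reply into {"country", "city"}; fall back to nulls if it is not a JSON object."""
    text = reply.strip()
    if text.startswith("```"):  # tolerate replies wrapped in a Markdown code fence
        text = text.strip("`")
        if text.lower().startswith("json"):
            text = text[4:]
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        data = None
    if not isinstance(data, dict):
        return {"country": None, "city": None}
    # Empty strings are treated the same as null.
    return {key: data.get(key) or None for key in ("country", "city")}


def predict_location(client, ocr_text: str, model: str = "gpt-3.5-turbo") -> Dict[str, Optional[str]]:
    """One prediction with an openai>=1.0 client (e.g. openai.OpenAI()); needs an API key."""
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(ocr_text),
        temperature=0,
    )
    return parse_prediction(response.choices[0].message.content)
```

Falling back to nulls for an unparseable reply keeps one malformed answer from stopping a batch run over hundreds of postcards.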

Construction of Ground Truth

To evaluate our prediction pipeline rigorously, we needed a ground truth for testing. To reduce the number of postcard backs in the sample, we selected only IDs that contain a single image. Because postcard providers are very unevenly represented on Europeana, we included no more than 30 IDs from any one provider in our random sample. Random sampling from 35,000 IDs gave 535 IDs from 24 different providers. The OCR process found recognizable text in 350 of these, and manual screening left 309 IDs that are meaningful postcards. We ran GPT-3.5 on the OCR results of these 309 IDs to obtain a preliminary set of predictions, which we call the noisy test set because it likely still contains errors introduced by the OCR model.
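The sampling rules above (single-image IDs only, at most 30 IDs per provider) can be expressed as a short function. This is an illustrative sketch: the record fields `id`, `provider` and `n_images` are hypothetical, and shuffling once and then applying the per-provider cap is one plausible way to enforce the limit, not necessarily the procedure the project used.

```python
import random
from collections import defaultdict
from typing import Dict, List


def sample_ground_truth_ids(records: List[Dict], n: int, cap: int = 30, seed: int = 0) -> List[str]:
    """Randomly sample up to n single-image record IDs, with at most `cap` per provider."""
    # Only records with exactly one image, to reduce the number of postcard backs.
    candidates = [r for r in records if r["n_images"] == 1]
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    rng.shuffle(candidates)
    taken_per_provider: Dict[str, int] = defaultdict(int)
    sample = []
    for record in candidates:
        if len(sample) == n:
            break
        if taken_per_provider[record["provider"]] >= cap:
            continue  # this provider has already reached its quota
        taken_per_provider[record["provider"]] += 1
        sample.append(record["id"])
    return sample
```

Capping per provider trades a little randomness for diversity: without it, the few providers that dominate Europeana's postcard holdings would make up most of the test set.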

With the help of the VGG Image Annotator (VIA), we manually annotated this sample set of 309 IDs. During annotation, we marked only the text printed on the postcards, following a uniform standard, and did not annotate any handwriting added to the postcards later. We also recorded the origin (country and city) of each postcard, based on both the postcard itself and its metadata. For postcards that mention a place name that cannot be located, we marked the country or city of origin as undefined. For other postcards whose origin could not be determined from the available information, we marked the country or city of origin as null.
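Once annotation is finished, the VIA export has to be turned into ground-truth records. The sketch below assumes VIA 2.x's JSON annotation export (a dictionary of entries with `filename`, `regions` and `file_attributes`) and hypothetical attribute names `text`, `country` and `city`; it maps the `null` label to Python `None` and keeps `undefined` as a distinct label, so the two cases stay separate in evaluation.

```python
from typing import Dict, Optional


def _origin_label(value: Optional[str]) -> Optional[str]:
    """'null' (origin cannot be determined) becomes None; 'undefined' is kept as a label."""
    if value is None:
        return None
    value = value.strip()
    if not value or value.lower() == "null":
        return None
    return value


def parse_via_export(via_json: Dict) -> Dict[str, Dict]:
    """Turn a VIA 2.x JSON annotation export into {filename: {"text", "country", "city"}}.

    Assumed (hypothetical) attribute names: each text region has a region attribute "text",
    and each image has file attributes "country" and "city".
    """
    ground_truth = {}
    for entry in via_json.values():
        texts = [
            region.get("region_attributes", {}).get("text", "").strip()
            for region in entry.get("regions", [])
        ]
        attributes = entry.get("file_attributes", {})
        ground_truth[entry["filename"]] = {
            "text": " ".join(t for t in texts if t),  # printed text only, in region order
            "country": _origin_label(attributes.get("country")),
            "city": _origin_label(attributes.get("city")),
        }
    return ground_truth
```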

After completing the Ground Truth, we ran our GPT-3.5 pipeline on its manually annotated text, so that the predictions are no longer affected by OCR errors. As a comparison, we also ran GPT-4 on the Ground Truth images to better evaluate the effectiveness of our prediction pipeline.
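For the GPT-4 comparison, the image itself is sent to the model instead of OCR text. A minimal sketch, assuming the OpenAI Chat Completions message format with `image_url` content parts; the prompt and helper names are hypothetical, and locally downloaded images are passed as base64 data URLs.

```python
import base64
from typing import Dict, List

# Hypothetical instruction text; not the project's actual prompt.
IMAGE_PROMPT = (
    "This is a historical European postcard. Infer the place it comes from and reply only with "
    'JSON of the form {"country": string or null, "city": string or null}.'
)


def local_image_url(path: str, mime: str = "image/jpeg") -> str:
    """Encode a downloaded postcard image as a base64 data URL."""
    with open(path, "rb") as image_file:
        encoded = base64.b64encode(image_file.read()).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_image_messages(image_url: str) -> List[Dict]:
    """A single user message containing the instructions and the postcard image."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": IMAGE_PROMPT},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]
```

A request would then pass `messages=build_image_messages(local_image_url(path))` to `client.chat.completions.create`, with `model` set to a vision-capable GPT-4 model; the reply carries the same fixed JSON format as in the GPT-3.5 pipeline.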

Enhanced Web Application Features

The application is built with front-end technologies such as React, HTML, and CSS for a responsive interface, and uses Mapbox for interactive mapping, which places each postcard in a detailed geographic context. Postcards from the test image set are aggregated on the map, and the aggregation adapts to user interactions such as zooming so that the map stays readable and easy to navigate: zooming in reveals detailed views, while zooming out gives a broader overview. Clicking a map pin opens a drawer component that lists the postcards associated with that pin, from a single image to a list of postcards sharing the same location, so users can browse them smoothly and explore their historical context.
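One way to feed such a map is to export the predicted locations as GeoJSON, with postcards that share coordinates grouped into a single pin whose drawer lists all of them. The sketch below is hypothetical: the input fields (`id`, `lat`, `lon`, `image_url`) are assumptions, and the zoom-dependent aggregation could then be delegated to Mapbox GL JS by enabling `cluster: true` on the GeoJSON source rather than computed in Python.

```python
import json
from collections import defaultdict
from typing import Dict, List


def postcards_to_geojson(postcards: List[Dict]) -> Dict:
    """Group postcards that share coordinates into one point feature (one map pin each)."""
    groups = defaultdict(list)
    for card in postcards:
        groups[(card["lon"], card["lat"])].append({"id": card["id"], "image_url": card["image_url"]})
    features = []
    for (lon, lat), cards in groups.items():
        features.append({
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [lon, lat]},  # GeoJSON order: lon, lat
            "properties": {
                "count": len(cards),
                # Pre-encoded as a JSON string: Mapbox GL returns nested property values
                # as strings on rendered features, so the drawer would parse this on click.
                "postcards": json.dumps(cards),
            },
        })
    return {"type": "FeatureCollection", "features": features}
```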

By combining these features with a user-centric design, the Europeana Postcards web application stands as a unique platform for exploring Europe's cultural and historical heritage, marrying historical content with modern technology to create an educational and engaging user experience.

Result Assessment

Using the Ground Truth, we evaluated the accuracy of our three pipelines (Fig. 4).

| Method | Location accuracy | Country accuracy |
|---|---|---|
| OCR text + GPT-3.5 | 62.8% | 72.7% |
| Ground Truth text + GPT-3.5 | 71.3% | 89.1% |
| Image + GPT-4 | 76.1% | 89.1% |
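The accuracy figures can be computed with a simple per-postcard comparison. The text does not define exactly when a "location" prediction counts as correct, so this sketch assumes it requires both country and city to match; it also assumes case-insensitive string matching, that a missing prediction is wrong, and that a null prediction matches a null label. The function name and data layout are hypothetical.

```python
from typing import Dict, Optional, Tuple


def _norm(value: Optional[str]) -> Optional[str]:
    """Case- and whitespace-insensitive comparison; None (null) stays None."""
    return value.strip().casefold() if isinstance(value, str) else value


def accuracy(predictions: Dict[str, Dict], ground_truth: Dict[str, Dict]) -> Tuple[float, float]:
    """Return (location accuracy, country accuracy) over all ground-truth IDs.

    Assumptions: a location is correct only if both country and city match, a missing
    prediction counts as wrong, and a null prediction matches a null label.
    """
    if not ground_truth:
        raise ValueError("ground truth is empty")
    location_hits = country_hits = 0
    for postcard_id, truth in ground_truth.items():
        pred = predictions.get(postcard_id)
        if pred is None:
            continue  # no prediction: counted as wrong for both metrics
        country_ok = _norm(pred.get("country")) == _norm(truth["country"])
        city_ok = _norm(pred.get("city")) == _norm(truth["city"])
        country_hits += country_ok
        location_hits += country_ok and city_ok
    n = len(ground_truth)
    return location_hits / n, country_hits / n
```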

Limitations & Future Work

Improved Text Recognition

Better OCR is a priority for the "Europeana: Mapping Postcards" project: it needs to handle diverse scripts and languages more reliably, especially on postcards that use non-standard fonts or mix several languages. More advanced AI models could also help interpret difficult text, such as handwritten notes or faded print, improving the quality and reliability of the data extracted from these historical postcards.

Enhanced Geographic Accuracy

- Developing Specialized AI Algorithms: Creating AI algorithms tailored to interpret and correct geographical data is vital, especially for postcards with ambiguous or historically changed place names.

- Collaboration with Historical Experts: Partnering with historians or geographical experts can enhance the accuracy of location data, crucial for postcards depicting locations that have changed over time.

Integration with Other Historical Resources

- Cross-Referencing with Historical Databases: Linking the postcard collection with other historical databases can enrich the contextual understanding. Integrating historical maps, texts, or photographic archives can provide a multi-faceted view of history.

- Collaborative Projects with Cultural Institutions: Collaborating with museums, archives, and cultural institutions can lead to joint projects, utilizing postcards as gateways to broader historical narratives and exhibitions.

The "Europeana: Mapping Postcards" project, by focusing on these areas, aims to continue growing and evolving, thereby enhancing its role as a rich, interactive portal into Europe's cultural and historical heritage.

Project plan & Milestones

Project plan

| Timeframe | Task | Completion |
|---|---|---|
| Week 4 | | ✓ |
| Week 5 | | ✓ |
| Week 6 | | ✓ |
| Week 7 | | ✓ |
| Week 8 | | ✓ |
| Week 9 | | ✓ |
| Week 10 | | ✓ |
| Week 11 | | ✓ |
| Week 12 | | ✓ |
| Week 13 | | ✓ |
| Week 14 | | ✓ |