Paris: address book of the past

Lea.marxen (talk | contribs) |

Revision as of 00:14, 21 December 2022

Introduction

This project works with around 4.4 million datapoints extracted from address books of Paris (Bottin Data). The address books date from the period 1839-1922 and contain the name, profession and place of residence of Parisian citizens. In a first step, we align this data with geodata of Paris’s street network; in a second step, we conduct a geospatial analysis on the aligned data.

Motivation

In the 19th century, Paris was a place of great transformations. As in many other European cities, industrialization led to radical changes in people’s way of life, completely reordering the workings of both economy and society. At the same time, the city grew rapidly. While only half a million people lived there in 1800, the number of inhabitants increased by a factor of nearly 7 within one century. To control the expansion of the city and to improve people’s living conditions, the city was extensively rebuilt during the Haussmann period (1853-1870), which produced the grand boulevards and general cityscape that we know today.

While all those circumstances have been well documented and studied extensively, they have mainly been described qualitatively, e.g. by looking at the development of certain streets. The Bottin dataset provides information on persons, their professions and their locations during exactly this period of change. It can therefore open new perspectives for research, as it makes it possible to analyze the economic and social transformation on a larger scale. With this data, one can follow the development of a chosen profession over the whole city, or look at the economic transformation of an arrondissement throughout the century.

This project gives an idea of the potential of the Bottin Data to contribute to research on Paris’s development during the 19th century.

Deliverables

- Github

- Google Drive

Organisation

Planning:

| | Part | Street Data | Bottin Data | Analysis |

|---|---|---|---|---|

| Week 4 | Alignment | tba | Preprocessing | |

| Week 5 | tba | Perfect Matching with first Street Data | ||

| Week 6 | tba | |||

| Week 7 | Substituting abbreviations, alignment without spaces | |||

| Week 8 | ||||

| Week 9 | ||||

| Week 10 | Updating Street Data | |||

| Week 11 | Fuzzy Matching, Updating Street Data, Including profession tags | |||

| Week 12 | Analysis | |||

| Week 13 | - | - | ||

| Week 14 |

Alignment

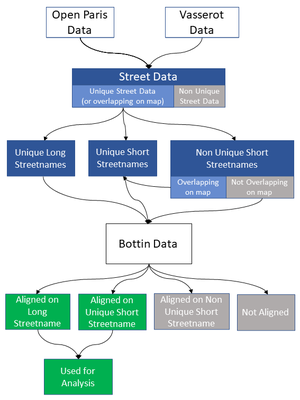

The alignment happened in two steps: First, two street network datasets of the years 1836 and 2022 were combined to create one dataset which incorporates all available geodata. Second, this dataset was used to align the Bottin datapoints with a matching street and thus a georeference.

Aligning Street Datasets

The Street Datasets

Vasserot Data (1836)

Open Street Data (2022)

Aligning Bottin Data with Street Data

Once the combined dataset of past and present streets in Paris has been constructed, it is used to assign a geolocation to each datapoint of the Bottin Data. This alignment process is described in detail below: we first introduce the Bottin dataset, then elaborate on the methods of the alignment process and finally estimate the quality of the alignment.

Bottin Dataset

The dataset referred to as “Bottin Data” consists of slightly over 4.4 million datapoints which were extracted via Optical Character Recognition (OCR) from the “Didot-Bottin” address books of Paris. The address books cover 55 different years between 1839 and 1922, with between 37’177 entries (1839) and 130’958 entries (1922) per year. They can be consulted on the Gallica portal[1]. Each entry consists of a person’s name, their profession or activity, their address and the year of the address book in which it was published.

As the extraction from the scanned books was done automatically by OCR, the data is not free of errors. Di Lenardo et al.[2], who carried out the extraction, estimated the character error rate at 2.6% with a standard deviation of 0.1%, and the error rate per line at 21% with a standard deviation of 2.9%.

Tagged Professions

This project was able to build upon previous work by Ravinithesh Annapureddy[3], who wrote a pipeline to clean and tag the profession of each entry in the data. Because the code was not written for the exact dataset we worked with, the notebook “cleaning_special_characters.ipynb” could not be used: it references the entries by their row number in the dataframe. However, the notebooks “creating_french_dictionary_words_set.ipynb” and “cleaning_and_creating_tags.ipynb” were run successfully, adding a list of tags to each entry in the Bottin Data. Those tags are the result of a series of steps including the removal of stopwords (such as “le” or “d’”), the combination of broken words, spell correction (using frequently appearing words in the profession data and two external dictionaries) and the expansion of abbreviations.

We faced two challenges using the pipeline by Ravinithesh. First, the pipeline was not optimized for large datasets, so it took a long time to run. Second, the created tags were stored in a list, and no work had been done to cluster them into profession sectors or fields, which made them difficult to use during the analysis. We chose to convert the lists (e.g. [‘peints’, ‘papier’]) to strings of comma-separated tags (“peints, papier”). Effectively, this meant that misspelled professions were corrected, which for example led to almost 1000 more entries having the profession entry “vins”. At the same time, selecting the profession “peints” in the analysis does not take an entry like “peints, papier” into account.
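The effect of the comma-separated representation can be illustrated with a minimal sketch. The sample tag lists and the helper name `tags_to_string` are assumptions made for illustration and are not taken from the original pipeline. The sketch assumes that the selection compares the whole string, and shows that such a comparison misses multi-tag entries, whereas a membership test on the original list would find them.

```python
# Hypothetical sample of tag lists as produced by the tagging pipeline.
tag_lists = [["vins"], ["peints", "papier"], ["peints"]]


def tags_to_string(tags):
    """Join a list of tags into one comma-separated string."""
    return ", ".join(tags)


professions = [tags_to_string(tags) for tags in tag_lists]

# Exact comparison on the joined string: "peints, papier" is not selected.
exact_matches = [p for p in professions if p == "peints"]

# Membership test on the original lists: both entries tagged "peints" are found.
tag_matches = [tags for tags in tag_lists if "peints" in tags]
```

Keeping the tag lists next to the joined string would allow either kind of selection, at the cost of a slightly larger table.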

Alignment Process

The alignment was conducted on the street level. For this, the street column (“rue”) of the Bottin dataset was left-joined with three different versions of the street network data, which served as the right-hand side of the join:

(1) The column with the full (long) street name of the street data,

(2) the column with the short street name (meaning without type of the street) of the street data, where the short street names are unique, and

(3) the street data with non-unique short street names.

The reason for joining on both the full and the short street names is that the entries in the Bottin dataset often contain only short versions of the street names and thus cannot be matched on the full street names. Moreover, the division into unique and non-unique short street names was made with regard to the analysis which followed afterwards. As the non-unique data is ambiguous by nature, it is not used in the analysis. However, as these streets still appear in the Bottin data, they have to be matched. Otherwise, they might have been matched to wrong streets during the fuzzy matching step.
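The three right-hand sides of the join can be sketched as follows. This is a dictionary-based stand-in for a dataframe left join, not the project's actual code; the field names `long` and `short`, the function names and the sample streets are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical, already preprocessed street records.
streets = [
    {"long": "rue de rivoli", "short": "rivoli"},
    {"long": "rue saint martin", "short": "saint martin"},
    {"long": "boulevard saint martin", "short": "saint martin"},
]


def build_lookups(streets):
    """Return the three right-hand sides (1), (2) and (3) as dictionaries."""
    short_counts = Counter(street["short"] for street in streets)
    long_names, unique_short, nonunique_short = {}, {}, {}
    for street in streets:
        long_names.setdefault(street["long"], []).append(street)
        # A short name goes to (2) if it occurs once, otherwise to (3).
        if short_counts[street["short"]] == 1:
            target = unique_short
        else:
            target = nonunique_short
        target.setdefault(street["short"], []).append(street)
    return long_names, unique_short, nonunique_short


def left_join(bottin_streets, lookup):
    """Keep every Bottin street; attach the matching street records or None."""
    return [(rue, lookup.get(rue)) for rue in bottin_streets]


long_names, unique_short, nonunique_short = build_lookups(streets)
joined = left_join(["rivoli", "saint martin", "rue inconnue"], unique_short)
```

In this sketch, “saint martin” is deliberately absent from the unique lookup, so it can only be matched in the separate, non-unique table and can be excluded from the analysis later.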

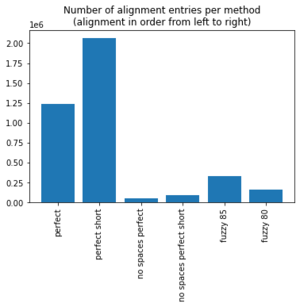

After each alignment, the newly aligned data was appended to the already aligned data, and the next alignment was run only on the entries which had not yet been aligned successfully. Several matching methods were applied consecutively, namely perfect matching, matching without spaces and fuzzy matching. Within each method, the alignment was first executed for the long street names (1), then for the unique short street names (2) and finally for the non-unique short street names (3).

Overall, 87.60% of the Bottin datapoints were aligned. Of the aligned data, 85% was matched with perfect matching, 4% by matching without spaces and 11% with fuzzy matching.
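The staged procedure described above can be sketched as a nested loop over methods and street-name versions. The function name `align_in_stages`, the stage labels and the sample data are hypothetical, and the fuzzy matching stage is left out; this is a sketch of the control flow under those assumptions, not the project's implementation.

```python
def align_in_stages(bottin_streets, methods, lookups):
    """Run each method on each lookup, passing on only unmatched streets."""
    aligned = []
    remaining = list(bottin_streets)
    for method_name, normalize in methods:
        for level_name, lookup in lookups:
            # Normalize the street names on the right-hand side the same way.
            normalized = {normalize(name): name for name in lookup}
            unmatched = []
            for rue in remaining:
                match = normalized.get(normalize(rue))
                if match is None:
                    unmatched.append(rue)
                else:
                    aligned.append((rue, match, method_name, level_name))
            remaining = unmatched
    return aligned, remaining


methods = [
    ("perfect", lambda name: name),
    ("no_spaces", lambda name: name.replace(" ", "")),
    # A fuzzy matching stage would follow here; it is omitted in this sketch.
]
lookups = [
    ("long", {"rue de rivoli"}),
    ("short_unique", {"rivoli"}),
    ("short_nonunique", {"saint martin"}),
]
aligned, remaining = align_in_stages(
    ["rue de rivoli", "rivoli", "ruede rivoli", "saint martin", "xyz"],
    methods,
    lookups,
)
```

Because matched entries leave the pool immediately, a later and more permissive stage can never overwrite a match made by an earlier, stricter one.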

Preprocessing

Before the alignment could be conducted, the Bottin data was run through the same preprocessing function that was used for the street networks: characters with accents were substituted by their counterparts without accents, and the characters - and _ as well as double spaces were replaced by a single space. Additionally, as the dataset contained around 1.5 million abbreviations, we wrote two dictionaries with which we could substitute those abbreviations:

- The first dictionary was built using the Opendata Paris street network, as it lists streets in three versions: the long street name (e.g. “Allée d'Andrézieux”), the abbreviated street name (prefix abbreviated, e.g. “All. d'Andrézieux”) and the short street name (e.g. “ANDREZIEUX”). We computed the difference between long and short street names (“Allée d'”) and the difference between abbreviated and short street names (“All. d'”) and saved the resulting pair in the dictionary. This way, around 500’000 abbreviations could be substituted.

- The second dictionary was built manually, by printing the most common abbreviations left in the data and saving them with their corresponding correct form in the dictionary. With only 152 dictionary entries, the number of abbreviations could be lowered further from around 1 million to fewer than 50’000.
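The preprocessing and the construction of the first dictionary can be sketched as follows. All function names are hypothetical, and two details are assumptions not stated in the text: the short name is compared case-insensitively against the end of the longer name, and an abbreviation is only substituted at the start of a street string.

```python
import unicodedata


def preprocess(text):
    """Remove accents, turn - and _ into spaces, collapse repeated spaces."""
    decomposed = unicodedata.normalize("NFD", text)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    spaced = no_accents.replace("-", " ").replace("_", " ")
    while "  " in spaced:
        spaced = spaced.replace("  ", " ")
    return spaced


def prefix_before_short(name, short):
    """Return the part of `name` in front of the short street name, if any."""
    name, short = preprocess(name), preprocess(short)
    if name.lower().endswith(short.lower()):
        return name[: len(name) - len(short)]
    return None


def build_abbreviation_dict(rows):
    """Map abbreviated prefixes to long prefixes, e.g. "All. d'" -> "Allee d'"."""
    abbreviations = {}
    for long_name, abbreviated_name, short_name in rows:
        long_prefix = prefix_before_short(long_name, short_name)
        short_prefix = prefix_before_short(abbreviated_name, short_name)
        if long_prefix and short_prefix and long_prefix != short_prefix:
            abbreviations[short_prefix] = long_prefix
    return abbreviations


def expand_abbreviation(rue, abbreviations):
    """Assumption: abbreviations are only substituted at the start of the string."""
    for abbreviated, full in abbreviations.items():
        if rue.startswith(abbreviated):
            return full + rue[len(abbreviated):]
    return rue


rows = [("Allée d'Andrézieux", "All. d'Andrézieux", "ANDREZIEUX")]
abbreviations = build_abbreviation_dict(rows)
```

Since both the street data and the Bottin data pass through the same `preprocess` function, the dictionary keys and the strings they are applied to share one normalized form.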

Perfect Matching

The first alignment method was perfect matching. For this, the preprocessed street column (“rue_processed”) of the Bottin dataset was left-joined with the preprocessed street columns of the street dataset (on (1), (2) and (3) in turn, see Alignment Process).

With this method alone, about 75% of the whole dataset could be aligned.

More specifically, of all aligned data, 32.06% was perfectly aligned on the long street names (1), 40.94% on the unique short street names (2) and 12.48% on the non-unique short street names (3). Together, these shares make up 85.48% of the aligned data, which corresponds to about 75% of the whole dataset given the overall alignment rate of 87.60%.

Perfect Matching without Spaces

As the OCR often did not recognize spaces correctly, we also aligned the streets after deleting all whitespace. Additionally, we deleted the characters | . : \ and saved the resulting strings in new columns. On those columns, perfect matching was carried out as above. Although the relative numbers seem small (only 1.44% of all aligned data was aligned on (1), 1.95% on (2) and 0.36% on (3)), this still amounts to around 145’000 datapoints in the Bottin dataset.
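A minimal sketch of this step, assuming a regular expression is used to drop the characters; the names `strip_for_matching`, `street_names` and `lookup` are hypothetical.

```python
import re

# Whitespace plus the characters | . : \ named in the text.
_REMOVED = re.compile(r"[\s|.:\\]")


def strip_for_matching(street):
    """Delete whitespace and the characters | . : \\ from a street string."""
    return _REMOVED.sub("", street)


# The same function is applied to both sides before the exact comparison.
street_names = ["rue de rivoli"]
lookup = {strip_for_matching(name): name for name in street_names}
match = lookup.get(strip_for_matching("ruede. rivoli|"))
```

Removing spaces can make two different street names collide on the same key, so a real implementation would need to decide how to handle such duplicates.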

Fuzzy Matching

Quality Assessment

Analysis

Limitations of the Project

We faced several challenges while working on the project.

OCR Mistakes

- OCR mistakes combined with abbreviations lead to wrong fuzzy matches -> idea: use a customized distance function which penalizes character substitutions/insertions at the end of the string more than at the beginning of the string (EXAMPLE)
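One possible form of such a distance function is a position-weighted edit distance. The sketch below is an assumption about how the idea could be realized and was not part of the project; the names `position_weight`, `weighted_edit_distance` and the parameter `end_penalty` are hypothetical. Each edit costs 1 at the first character and grows linearly to `1 + end_penalty` at the last character.

```python
def position_weight(index, length, end_penalty):
    """Cost of an edit at `index`: 1 at the start, 1 + end_penalty at the end."""
    if length <= 1:
        return 1.0
    return 1.0 + end_penalty * index / (length - 1)


def weighted_edit_distance(source, target, end_penalty=1.0):
    """Levenshtein-style distance whose edit costs grow towards the string end."""
    n, m = len(source), len(target)
    # cost[i][j] is the cheapest way to turn source[:i] into target[:j].
    cost = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = cost[i - 1][0] + position_weight(i - 1, n, end_penalty)
    for j in range(1, m + 1):
        cost[0][j] = cost[0][j - 1] + position_weight(j - 1, m, end_penalty)
    for i in range(1, n + 1):
        w_del = position_weight(i - 1, n, end_penalty)
        for j in range(1, m + 1):
            w_ins = position_weight(j - 1, m, end_penalty)
            same = source[i - 1] == target[j - 1]
            w_sub = 0.0 if same else max(w_del, w_ins)
            cost[i][j] = min(
                cost[i - 1][j] + w_del,      # delete from source
                cost[i][j - 1] + w_ins,      # insert from target
                cost[i - 1][j - 1] + w_sub,  # substitute or keep
            )
    return cost[n][m]


error_at_start = weighted_edit_distance("xivoli", "rivoli")
error_at_end = weighted_edit_distance("rivolx", "rivoli")
```

With `end_penalty=0` the function reduces to the ordinary Levenshtein distance, so the penalty can be tuned against a sample of manually checked matches.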

Missing streets

- Some streets appear in neither of the two datasets of 1836 and 2022, e.g. rue d'Allemagne -> obtain additional street data from the 19th century

- The address given in the Bottin dataset is not necessarily a street, e.g. "cloître"

Ambiguous streetnames

- In the 1836 street network dataset, some datapoints share the same streetname but are not located near each other

- Many Bottin datapoints were aligned on the "short streetname", although this streetname might refer to several different streets (was it unambiguous at the time the address book was published?) -> try to incorporate when each street was built

Matching on street level

- We represented each datapoint by the centroid of its street, which is imprecise, especially for long streets -> additional step to clean and align address numbers

Small part of potential analysis

- Time constraint: this was a semester project, and resources were already needed for the alignment

- Knowledge constraint: more (historical) knowledge about Paris is needed to put the analysis into context

Outlook

Possible Directions of Research

- Improving the alignment: include address numbers, include more street network datasets, align in two steps (first on the short streetname, then on the type of street), use another distance function with a heavier penalty for substitutions at the end of the string, align on the whole datapoint (name, profession, street) to account for businesses existing for more than one year

- Work on professions: cluster them into thematic fields, maybe classify them by social reputation (-> gentrification of a quartier)

- Analysis: gentrification, development of arrondissements, influence of political decisions on the social/economic landscape, ...

- Visualization: interactive map

-> A good alignment is needed to derive facts/knowledge from the data

References

- [1] Gallica portal, Bibliothèque Nationale de France, https://gallica.bnf.fr/accueil/en/content/accueil-en?mode=desktop; example for an address book on https://gallica.bnf.fr/ark:/12148/bpt6k6314697t.r=paris%20paris?rk=21459;2

- [2] di Lenardo, Isabella; Barman, Raphaël; Descombes, Albane; Kaplan, Frédéric (2019), "Repopulating Paris: massive extraction of 4 Million addresses from city directories between 1839 and 1922.", DataverseNL, V2. https://doi.org/10.34894/MNF5VQ

- [3] Annapureddy, Ravinithesh (2022), Enriching-RICH-Data [Source Code]. https://github.com/ravinitheshreddy/Enriching-RICH-Data